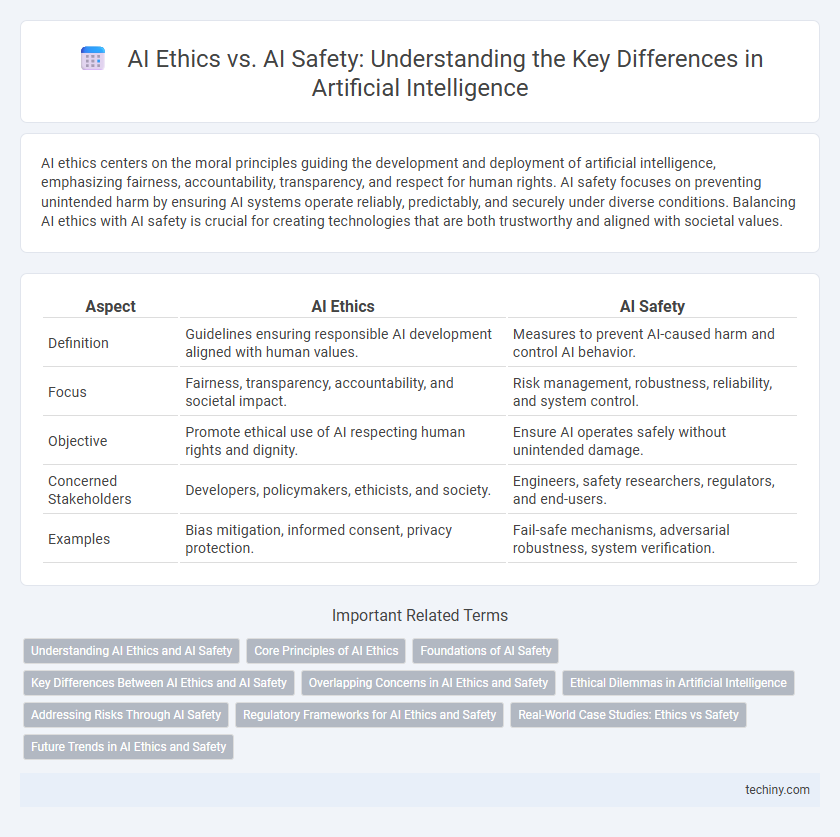

AI ethics centers on the moral principles guiding the development and deployment of artificial intelligence, emphasizing fairness, accountability, transparency, and respect for human rights. AI safety focuses on preventing unintended harm by ensuring AI systems operate reliably, predictably, and securely under diverse conditions. Balancing AI ethics with AI safety is crucial for creating technologies that are both trustworthy and aligned with societal values.

Comparison Table

| Aspect | AI Ethics | AI Safety |
|---|---|---|
| Definition | Guidelines ensuring responsible AI development aligned with human values. | Measures to prevent AI-caused harm and control AI behavior. |
| Focus | Fairness, transparency, accountability, and societal impact. | Risk management, robustness, reliability, and system control. |
| Objective | Promote ethical use of AI respecting human rights and dignity. | Ensure AI operates safely without unintended damage. |
| Concerned Stakeholders | Developers, policymakers, ethicists, and society. | Engineers, safety researchers, regulators, and end-users. |
| Examples | Bias mitigation, informed consent, privacy protection. | Fail-safe mechanisms, adversarial robustness, system verification. |

Understanding AI Ethics and AI Safety

Understanding AI ethics involves examining the moral principles guiding the development and deployment of artificial intelligence to ensure fairness, transparency, and accountability. AI safety focuses on designing systems that operate reliably and predictably to prevent unintended harmful consequences. Both disciplines are essential to create responsible AI technologies that align with human values and societal norms.

Core Principles of AI Ethics

Core principles of AI ethics emphasize fairness, accountability, transparency, and respect for human rights to ensure responsible AI development. These principles guide the creation of AI systems that avoid bias, protect user privacy, and provide explainable decisions. Unlike AI safety, which focuses on preventing unintended technical failures, AI ethics centers on aligning AI behavior with human values and societal norms.
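Avoiding bias usually starts with measuring it. As a minimal sketch, assuming binary predictions and one group attribute per person, the demographic parity difference compares how often each group receives a positive prediction. The function names and the sample data below are hypothetical, and this is only one of several fairness metrics in use.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group label."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for pred, group in zip(predictions, groups):
        counts[group][0] += pred
        counts[group][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups (0.0 means parity)."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan-approval predictions (1 = approved) for applicants in groups "A" and "B".
preds = [1, 1, 0, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(positive_rates(preds, groups))                 # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(preds, groups))  # 0.5
```

A gap of 0.5 would flag the model for review; whether parity is the right fairness target for a given application is itself an ethical judgment, not something the metric decides.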

Foundations of AI Safety

At its foundation, AI safety research prioritizes robust alignment techniques that keep AI systems operating in accordance with human values and intentions, minimizing unintended harmful behavior. Its core principles include transparency, robustness, and verifiability, which address risks from model unpredictability and adversarial manipulation. Ethical frameworks guide the development process, but foundational safety research focuses on technical methods for reliable and secure AI deployment.
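Verifiability against adversarial manipulation can be made concrete for very simple models. The sketch below assumes a hypothetical linear classifier with score w·x + b and an attacker who may change each input feature by at most ε. Under that assumption the score can shift by at most ε·‖w‖₁, so the prediction is provably stable whenever |score| exceeds that amount. Real neural networks need far more involved verification tools; this only illustrates the idea.

```python
def linear_score(weights, bias, x):
    """Score of a linear classifier: w . x + b (score > 0 means class 1)."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def is_certifiably_robust(weights, bias, x, epsilon):
    """True if no perturbation changing each feature by at most epsilon
    (an L-infinity ball) can flip the sign of the score."""
    score = linear_score(weights, bias, x)
    worst_case_shift = epsilon * sum(abs(w) for w in weights)  # epsilon * ||w||_1
    return abs(score) > worst_case_shift


def worst_case_perturbation(weights, bias, x, epsilon):
    """Return the input inside the epsilon-ball that pushes the score
    hardest toward the opposite class (the attacker's best move)."""
    direction = 1.0 if linear_score(weights, bias, x) > 0 else -1.0
    perturbed = []
    for w, xi in zip(weights, x):
        sign_w = (w > 0) - (w < 0)  # -1, 0 or +1
        perturbed.append(xi - direction * epsilon * sign_w)
    return perturbed


# Hypothetical two-feature model and input.
w, b, x = [2.0, -1.0], 0.5, [1.0, 1.0]       # score = 1.5, ||w||_1 = 3.0
print(is_certifiably_robust(w, b, x, 0.25))  # True  (max shift 0.75 < 1.5)
print(is_certifiably_robust(w, b, x, 0.6))   # False (max shift 1.8 > 1.5)
x_adv = worst_case_perturbation(w, b, x, 0.6)
print(linear_score(w, b, x_adv) < 0)         # True: the prediction flips
```

The certificate and the attack agree: when the check fails, the constructed perturbation actually flips the prediction.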

Key Differences Between AI Ethics and AI Safety

AI ethics centers on the moral principles guiding the development and deployment of artificial intelligence, emphasizing fairness, transparency, accountability, and human rights. AI safety focuses on preventing unintended harm from AI systems by ensuring reliability, robustness, and secure failure modes. The key difference is that AI ethics addresses normative questions about what AI should do, while AI safety concentrates on technical measures that control AI behavior and mitigate risks.

Overlapping Concerns in AI Ethics and Safety

AI ethics and AI safety share overlapping concerns centered on preventing harm and ensuring accountability in AI systems. Both fields emphasize transparency, fairness, and robustness to mitigate risks such as bias, misuse, and unintended consequences. These shared priorities drive interdisciplinary approaches to develop guidelines and safeguards that promote responsible AI deployment.

Ethical Dilemmas in Artificial Intelligence

Ethical dilemmas in artificial intelligence arise from conflicts between AI development goals and societal values such as privacy, fairness, and accountability. AI ethics addresses these challenges by establishing principles that guide responsible AI design and deployment to prevent bias, discrimination, and misuse. The distinction is one of emphasis: AI ethics focuses on moral implications, while AI safety emphasizes technical measures to avoid harm.

Addressing Risks Through AI Safety

AI safety focuses on developing robust mechanisms to prevent malfunctions, unintended behaviors, and harmful outcomes in artificial intelligence systems, ensuring reliable alignment with human values. Addressing risks through AI safety involves rigorous testing, fail-safe designs, and transparency protocols to mitigate potential hazards before deployment. This proactive approach complements AI ethics by translating moral principles into enforceable technical standards that safeguard human well-being.
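One common fail-safe design is to let a system abstain and hand a case to a human when its confidence is low, rather than act on an uncertain prediction. The sketch below is hypothetical: the `Decision` type, the 0.9 threshold, and the content-moderation labels are illustrative assumptions, not a prescribed standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    label: Optional[str]  # None when the system declines to decide
    confidence: float
    deferred_to_human: bool


def fail_safe_decide(probabilities, threshold=0.9):
    """Act only when the top class probability clears the threshold;
    otherwise fall back to a safe default: defer to a human reviewer."""
    if not probabilities:
        # No model output at all: fail closed rather than guess.
        return Decision(label=None, confidence=0.0, deferred_to_human=True)
    label, confidence = max(probabilities.items(), key=lambda item: item[1])
    if confidence >= threshold:
        return Decision(label=label, confidence=confidence, deferred_to_human=False)
    return Decision(label=None, confidence=confidence, deferred_to_human=True)


# Hypothetical outputs from a content-moderation classifier.
print(fail_safe_decide({"allow": 0.97, "remove": 0.03}))
# Decision(label='allow', confidence=0.97, deferred_to_human=False)
print(fail_safe_decide({"allow": 0.55, "remove": 0.45}))
# Decision(label=None, confidence=0.55, deferred_to_human=True)
```

The threshold is where safety and ethics meet in practice: raising it sends more cases to human review, which costs time but reduces the chance of an unreviewed harmful decision.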

Regulatory Frameworks for AI Ethics and Safety

Regulatory frameworks for AI ethics and safety establish standards that govern the responsible development and deployment of artificial intelligence technologies, ensuring alignment with human values and risk mitigation. These frameworks emphasize transparency, accountability, fairness, and robustness, addressing ethical concerns such as bias, privacy, and autonomy alongside technical safety hazards like malfunction and adversarial attacks. Governments and international organizations collaborate to create policies and guidelines that promote trustworthy AI systems, balancing innovation with societal protection and long-term sustainability.

Real-World Case Studies: Ethics vs Safety

Real-world case studies in AI highlight critical distinctions between AI ethics and AI safety. In the deployment of facial recognition technology, ethical concerns center on bias, privacy, and consent, while safety concerns center on system reliability and the prevention of misuse. The 2018 fatal crash involving an Uber self-driving test vehicle in Tempe, Arizona, underscores the need for robust safety protocols to prevent fatalities, alongside ethical considerations of transparency and accountability. Balancing ethical principles with safety measures supports responsible AI integration in society, reducing unintended harm and promoting public trust.

Future Trends in AI Ethics and Safety

Future trends in AI ethics and safety emphasize the integration of robust regulatory frameworks and advanced technical safeguards to ensure responsible AI deployment. Ethical AI development increasingly prioritizes transparency, bias mitigation, and human-centered design to address societal impacts and promote trust. Innovations in AI safety focus on developing fail-safe mechanisms, interpretability, and alignment with human values to prevent unintended consequences as AI systems become more autonomous and pervasive.

AI Ethics vs AI Safety Infographic

techiny.com
