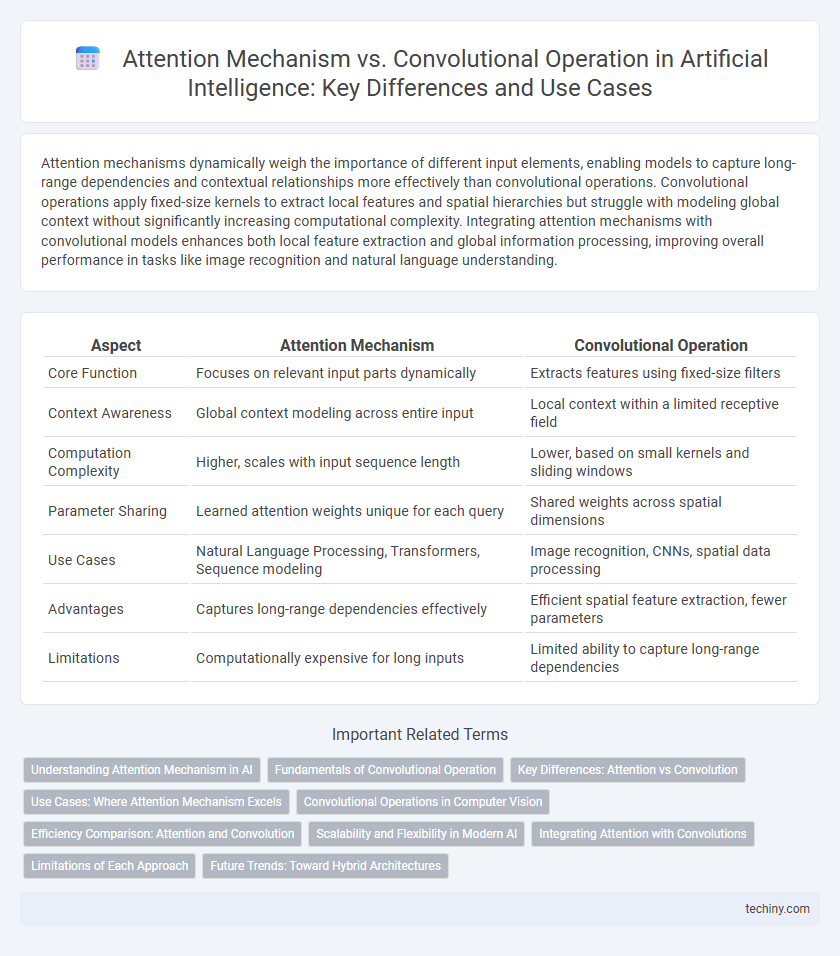

Attention mechanisms dynamically weigh the importance of different input elements, enabling models to capture long-range dependencies and contextual relationships more effectively than convolutional operations. Convolutional operations apply fixed-size kernels to extract local features and spatial hierarchies but struggle with modeling global context without significantly increasing computational complexity. Integrating attention mechanisms with convolutional models enhances both local feature extraction and global information processing, improving overall performance in tasks like image recognition and natural language understanding.

Comparison Table

| Aspect | Attention Mechanism | Convolutional Operation |
|---|---|---|
| Core Function | Dynamically weights the most relevant parts of the input | Extracts features using fixed-size filters |
| Context Awareness | Global context across the entire input | Local context within a limited receptive field |
| Computational Complexity | Higher; grows quadratically with input sequence length | Lower; grows linearly with input size for a fixed kernel |
| Parameter Sharing | Projection weights shared across positions; attention weights computed separately for each query | Kernel weights shared across spatial positions |
| Use Cases | Natural language processing, Transformers, sequence modeling | Image recognition, CNNs, spatial data processing |
| Advantages | Captures long-range dependencies effectively | Efficient spatial feature extraction, fewer parameters |
| Limitations | Computationally expensive for long inputs | Limited ability to capture long-range dependencies |

Understanding the Attention Mechanism in AI

The attention mechanism enables models to dynamically weigh the importance of different input elements, improving context understanding and capturing long-range dependencies more effectively than traditional convolutional operations. Unlike convolutions, which apply fixed local filters across the input, attention computes relevance scores between every pair of positions in the sequence, allowing flexible, global feature extraction. This dynamic weighting improves performance in natural language processing and computer vision tasks by concentrating the model's capacity on the most informative parts of the data.
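
The following is a minimal sketch of scaled dot-product self-attention, the form of attention used in Transformers, written in plain Python so every step is visible. For brevity it assumes the queries, keys, and values are the input vectors themselves; a real model would first multiply the input by learned projection matrices. The function names are illustrative and not taken from any particular library.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors (lists of floats).

    Returns one output vector per query and the attention weight matrix."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Relevance of every key to this query: dot product scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)  # one weight per position in the *whole* sequence
        weights.append(w)
        # Output = attention-weighted average of all value vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, values))
                        for i in range(len(values[0]))])
    return outputs, weights

# Self-attention on a toy 3-token sequence. Simplification: the tokens are used
# directly as queries, keys and values instead of passing through learned projections.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = scaled_dot_product_attention(tokens, tokens, tokens)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row of weights sums to 1 and spans the whole sequence, which is what lets a single attention layer relate any two positions directly.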

Fundamentals of Convolutional Operation

Convolutional operations are fundamental in artificial intelligence for extracting spatial hierarchies from data: small kernel filters slide across the input and respond to local patterns such as edges and textures in images. Localized connectivity and weight sharing keep the number of parameters and the amount of computation low while making feature detection efficient. By stacking layers, Convolutional Neural Networks (CNNs) build up spatial context from these local features, which has made convolution a mainstay of image processing, whereas attention mechanisms focus on global dependencies.
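
The sketch below shows a single 2D convolution in plain Python, assuming stride 1 and no padding. Like most deep learning libraries, it computes cross-correlation (the kernel is not flipped). The vertical-edge kernel is hand-set for illustration; in a CNN the kernel values are learned during training.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (stride 1, no padding) as computed in CNNs.

    Like most deep learning libraries, this is technically cross-correlation:
    the kernel is not flipped."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0] * out_w for _ in range(out_h)]
    for r in range(out_h):
        for c in range(out_w):
            # Local connectivity: each output sees only a kh x kw patch.
            # Weight sharing: the same kernel is reused at every (r, c).
            out[r][c] = sum(kernel[i][j] * image[r + i][c + j]
                            for i in range(kh) for j in range(kw))
    return out

# Toy 4x5 image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Hypothetical hand-set vertical-edge kernel; a CNN would learn these values.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
edges = conv2d(image, kernel)
print(edges)  # [[3, 3, 0], [3, 3, 0]]
```

The response is large where the kernel straddles the dark-to-bright boundary and zero over the uniform bright region: the same nine weights, applied at every position, detect the edge wherever it occurs.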

Key Differences: Attention vs Convolution

The attention mechanism dynamically weighs the importance of different input elements, letting models focus on relevant features regardless of their position. Convolutional operations, by contrast, apply fixed-size, spatially localized filters to capture local patterns. Attention computes relationships between all parts of the input using weights derived from learned projections, so long-range dependencies are reachable within a single layer, while the sliding kernel of a convolutional layer limits its receptive field to neighboring inputs; reaching distant positions requires stacking many layers. This difference allows attention-based models such as Transformers to capture global context that convolutional neural networks, optimized for local feature extraction, reach only through depth.
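
A short calculation makes the receptive-field difference concrete. The sketch assumes stride-1 convolutions without dilation or pooling, for which each layer with kernel size $k$ widens the receptive field by $k - 1$ positions, giving $1 + L(k-1)$ after $L$ layers. Strided, dilated, or pooled architectures grow it faster, so treat these numbers as an illustration rather than a bound on real CNNs.

```python
def conv_receptive_field(num_layers, kernel_size):
    """Receptive field (in input positions) of a stack of stride-1,
    undilated convolutions: each layer widens it by kernel_size - 1."""
    return 1 + num_layers * (kernel_size - 1)

def conv_layers_for_global_context(seq_len, kernel_size):
    """Smallest number of such layers whose receptive field is at least seq_len wide."""
    layers = 0
    while conv_receptive_field(layers, kernel_size) < seq_len:
        layers += 1
    return layers

print(conv_receptive_field(4, 3))              # 9 positions after 4 layers
print(conv_layers_for_global_context(512, 3))  # 256 layers to span 512 positions
# By contrast, one self-attention layer connects every position to all 512 positions.
```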

Use Cases: Where Attention Mechanism Excels

Attention mechanisms excel in natural language processing tasks such as machine translation, text summarization, and sentiment analysis, where models must capture long-range dependencies and contextual relationships within the data. Convolutional operations focus on local spatial features and are effective in image recognition and pattern detection; attention mechanisms instead weigh input elements dynamically, which improves performance on sequential data and on tasks that require understanding global context. This adaptability makes attention central to Transformer architectures, which underpin many state-of-the-art results in language modeling and speech recognition.

Convolutional Operations in Computer Vision

Convolutional operations in computer vision use spatially localized filters to extract hierarchical features from input images, enabling efficient pattern recognition and object detection. Because the same kernel weights are applied at every spatial position, convolution is translation-equivariant: a pattern shifted in the input produces the same response, shifted by the same amount, in the output, and pooling layers then add approximate translation invariance. Convolutional Neural Networks (CNNs) exploit these properties, learning spatial hierarchies from edges up to object parts, and have achieved state-of-the-art performance in image classification, segmentation, and recognition tasks.
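
The translation-equivariance property can be checked directly. This sketch uses a hypothetical hand-set 1D kernel and a signal containing a single feature: shifting the signal shifts the convolution output by the same amount (as long as the feature stays away from the borders), and a global max over the output, standing in for pooling, does not change.

```python
def conv1d(signal, kernel):
    """'Valid' 1D convolution (cross-correlation, as in CNNs), stride 1."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def shift_right(xs, n):
    """Shift a list right by n positions, filling with zeros on the left."""
    return [0] * n + xs[:len(xs) - n]

kernel = [1, -2, 1]                   # hypothetical hand-set kernel
signal = [0, 0, 5, 0, 0, 0, 0, 0]     # one "feature" at position 2
shifted = shift_right(signal, 3)      # the same feature at position 5

out = conv1d(signal, kernel)
out_shifted = conv1d(shifted, kernel)
print(out)          # [5, -10, 5, 0, 0, 0]
print(out_shifted)  # [0, 0, 0, 5, -10, 5]

# Equivariance: the response moves with the feature.
print(out_shifted == shift_right(out, 3))  # True
# Approximate invariance via pooling: a global max ignores the position.
print(max(out) == max(out_shifted))        # True
```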

Efficiency Comparison: Attention and Convolution

Self-attention has computational complexity that grows quadratically with the input length $n$, $O(n^2)$, because every position is compared with every other position, whereas a convolution with a fixed kernel size grows only linearly, $O(n)$. This gap matters most for large inputs. Convolutional layers capture local features with shared weights, enabling faster processing and lower memory usage. Attention mechanisms provide dynamic, global context modeling but rely on optimized variants such as sparse or linear attention to scale efficiently in practice.
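
To put rough numbers on the $O(n^2)$ versus $O(n)$ difference, the sketch below counts approximate multiply-accumulate operations per layer for a sequence of length $n$ with $d$ channels: self-attention pays $4nd^2$ for its query, key, value, and output projections plus $2n^2d$ for computing scores and mixing values, while a convolution with kernel width $k$ pays $knd^2$. The values of $d$ and $k$ are arbitrary illustrative choices, and the counts ignore softmax, normalization, and hardware effects.

```python
def self_attention_macs(n, d):
    """Approximate multiply-accumulates for one self-attention layer:
    Q/K/V/output projections (4 * n * d^2) plus QK^T and weights @ V (2 * n^2 * d)."""
    return 4 * n * d * d + 2 * n * n * d

def conv1d_macs(n, d, k):
    """Approximate multiply-accumulates for a 1D convolution over n positions
    with d input channels, d output channels and kernel width k ('same' padding)."""
    return k * n * d * d

d, k = 512, 3  # illustrative channel count and kernel width
for n in (128, 1024, 8192):
    a, c = self_attention_macs(n, d), conv1d_macs(n, d, k)
    print(f"n={n:5d}  attention={a:.2e}  conv={c:.2e}  ratio={a / c:.1f}")
```

With these settings the printed ratio is 1.5 at $n = 128$, about 2.7 at $n = 1024$, and 12.0 at $n = 8192$: the $n^2$ term makes attention's relative cost grow linearly with sequence length.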

Scalability and Flexibility in Modern AI

Attention-based models such as Transformers have scaled well to large models and datasets, and attention is also more flexible than convolution: because its weights are computed from the input itself, it can relate any positions in inputs of varying length without being bound to a fixed receptive field. This lets attention capture long-range dependencies and contextual relationships beyond the local patterns convolutional layers focus on, although its cost grows quadratically with input length. Convolutional operations remain computationally efficient for grid-like data, but their fixed kernel size limits their adaptability in complex AI tasks that require understanding global context.

Integrating Attention with Convolutions

Integrating attention mechanisms with convolutional operations lets deep learning models weight features dynamically on top of the spatial hierarchies that convolutions build. Attention modules selectively highlight salient regions or channels within convolutional feature maps, which can improve tasks like image recognition and segmentation. Combining the two pairs local pattern extraction with global context understanding, which can raise accuracy and make it easier to inspect what an AI system is focusing on.
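
One widely used way to apply attention to convolutional feature maps is channel attention in the style of Squeeze-and-Excitation (SE) blocks, named here as one example rather than the only approach. The sketch pools each channel to a single number, passes the pooled vector through a tiny two-layer network, and rescales each channel by the resulting sigmoid weight. The weight matrices are hypothetical hand-set values; in practice they are learned, and the hidden size is usually the channel count divided by a reduction ratio.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def channel_attention(feature_maps, w1, w2):
    """SE-style channel attention (a sketch, not a library API).

    feature_maps: list of C channels, each an H x W list of lists (e.g. conv output).
    w1: hidden x C weights; w2: C x hidden weights (hypothetical values here).
    Returns the rescaled feature maps and the per-channel weights in (0, 1)."""
    # Squeeze: global average pooling summarizes each channel with one number.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_maps]
    # Excitation: a tiny two-layer network (ReLU, then sigmoid) scores each channel.
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w1]
    scales = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # Rescale: multiply every value in a channel by that channel's weight.
    rescaled = [[[s * v for v in row] for row in ch] for s, ch in zip(scales, feature_maps)]
    return rescaled, scales

# Two 2x2 channels: one strongly activated, one weakly activated.
maps = [[[4.0, 4.0], [4.0, 4.0]], [[0.1, 0.1], [0.1, 0.1]]]
w1 = [[0.5, 0.5]]     # hypothetical: C=2 -> hidden=1
w2 = [[1.0], [-1.0]]  # hypothetical: hidden=1 -> C=2
out, scales = channel_attention(maps, w1, w2)
print([round(s, 3) for s in scales])
```

With these weights the strongly activated channel is scaled by roughly 0.886 and the weak one by roughly 0.114, so the module emphasizes the first channel and suppresses the second.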

Limitations of Each Approach

Attention mechanisms excel at capturing long-range dependencies in data but often suffer from high computational complexity and memory usage, especially with large input sequences. Convolutional operations efficiently process local patterns and require fewer resources, yet they struggle to model global context due to their limited receptive fields. Balancing these trade-offs is crucial for designing hybrid models that leverage the strengths of both approaches in artificial intelligence applications.
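
The memory side of this trade-off can be estimated with simple arithmetic. The sketch compares the size of the $n \times n$ attention score matrices for one layer with the size of a convolution layer's output activations, assuming 32-bit floats, 8 attention heads, and 512 channels (illustrative values). It ignores other activations, and some optimized attention implementations avoid materializing the full score matrix, so real memory use varies.

```python
MIB = 1024 * 1024  # bytes per mebibyte

def attention_score_bytes(seq_len, num_heads, bytes_per_value=4):
    """Size of the seq_len x seq_len score matrix for every head of one layer."""
    return num_heads * seq_len * seq_len * bytes_per_value

def conv_output_bytes(seq_len, channels, bytes_per_value=4):
    """Size of a 1D conv layer's output: one value per position per channel."""
    return seq_len * channels * bytes_per_value

for n in (1024, 4096, 16384):
    attn_mib = attention_score_bytes(n, num_heads=8) / MIB
    conv_mib = conv_output_bytes(n, channels=512) / MIB
    print(f"n={n:6d}: attention scores {attn_mib:7.0f} MiB, conv output {conv_mib:4.0f} MiB")
```

Each fourfold increase in $n$ multiplies the score-matrix memory by 16 (32, 512, then 8192 MiB) but the convolution output only by 4 (2, 8, then 32 MiB).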

Future Trends: Toward Hybrid Architectures

Future trends in artificial intelligence emphasize hybrid architectures that combine attention mechanisms with convolutional operations to leverage their complementary strengths. Attention mechanisms excel at capturing long-range dependencies and global context, while convolutional operations efficiently model local spatial features. Integrating these approaches enables models to achieve improved performance and scalability in complex tasks such as natural language processing and computer vision.

Attention Mechanism vs Convolutional Operation Infographic

techiny.com
