Backpropagation and genetic algorithms serve distinct roles in training artificial intelligence models. Backpropagation computes the gradient of a neural network's error with respect to its weights, and gradient descent uses that gradient to minimize the error, enabling efficient learning in supervised tasks. Genetic algorithms, inspired by natural selection, explore a broader solution space by evolving populations of candidate solutions, which makes them effective for optimization problems where gradient information is unavailable or unreliable.

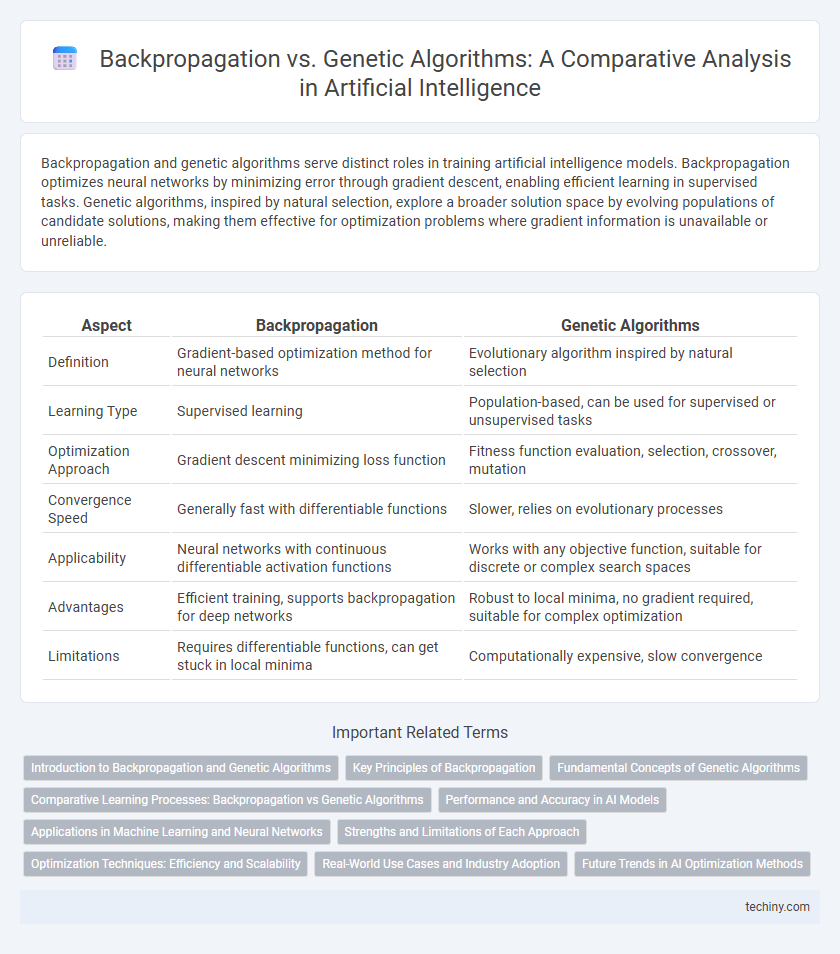

Table of Comparison

| Aspect | Backpropagation | Genetic Algorithms |
|---|---|---|
| Definition | Gradient-based training method for neural networks | Evolutionary algorithm inspired by natural selection |
| Learning Type | Primarily supervised learning | Population-based search; can be used for supervised or unsupervised tasks |
| Optimization Approach | Gradient descent on a loss function, with gradients computed by the chain rule | Fitness evaluation, selection, crossover, and mutation |
| Convergence Speed | Generally fast for differentiable models | Slower; each generation requires many fitness evaluations |
| Applicability | Neural networks built from differentiable operations (e.g., differentiable activation functions) | Any objective that can be evaluated; suitable for discrete or complex search spaces |
| Advantages | Efficient training that scales to deep networks | Less prone to local minima, no gradient required, suitable for complex optimization |
| Limitations | Requires differentiable functions; can get stuck in local minima | Computationally expensive; slow convergence |

Introduction to Backpropagation and Genetic Algorithms

Backpropagation is an algorithm for training artificial neural networks, most often in supervised learning: it applies the chain rule of calculus to compute how the error changes with respect to each weight, and gradient descent then adjusts the weights to reduce that error. Genetic algorithms are evolutionary optimization techniques inspired by natural selection; they apply selection, crossover, and mutation to evolve a population of candidate solutions over generations. The two serve different purposes: backpropagation excels at fine-tuning neural network parameters, while genetic algorithms can optimize complex, multidimensional search spaces without requiring gradient information.
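The chain-rule step and the gradient-descent update can be written compactly. The notation here is an assumption for illustration, not taken from the original: unit $j$ has pre-activation $z_j$ and activation $a_j = \sigma(z_j)$, $w_{ij}$ is the weight from unit $i$ in the previous layer, $k$ ranges over units in the next layer, $L$ is the loss, and $\eta$ is the learning rate. The recursion for $\delta_j$ applies to hidden units; the output layer starts it with $\delta = \partial L / \partial a \cdot \sigma'(z)$.

```latex
\begin{aligned}
z_j &= \sum_i w_{ij}\, a_i + b_j, \qquad a_j = \sigma(z_j) \\
\delta_j &\equiv \frac{\partial L}{\partial z_j} = \sigma'(z_j) \sum_k w_{jk}\, \delta_k \\
\frac{\partial L}{\partial w_{ij}} &= \delta_j\, a_i \\
w_{ij} &\leftarrow w_{ij} - \eta\, \frac{\partial L}{\partial w_{ij}}
\end{aligned}
```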

Key Principles of Backpropagation

Backpropagation is primarily used to train artificial neural networks, most commonly in supervised learning, by minimizing the error between predicted and actual outputs with gradient descent. It applies the chain rule of calculus to compute the gradient of the loss function with respect to each weight, working backward from the output layer so that intermediate results are reused layer by layer. Gradient descent then adjusts the weights iteratively, in contrast to the population-based search and mutation strategies of genetic algorithms.
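A minimal pure-Python sketch of backpropagation for a hypothetical network with one input, a small tanh hidden layer, and one linear output, trained with full-batch gradient descent on mean squared error. The network shape, the data points, and the names `init_params`, `forward`, `loss_and_grads`, and `train` are illustrative assumptions rather than a reference implementation; real projects would normally rely on an automatic-differentiation library.

```python
import math
import random


def init_params(hidden, rng):
    """Hypothetical 1-input, `hidden`-unit, 1-output network."""
    w1 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]  # input -> hidden weights
    b1 = [0.0] * hidden                                   # hidden biases
    w2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]  # hidden -> output weights
    b2 = 0.0                                              # output bias
    return (w1, b1, w2, b2)


def forward(params, x):
    """Forward pass: tanh hidden layer, linear output."""
    w1, b1, w2, b2 = params
    h = [math.tanh(w * x + b) for w, b in zip(w1, b1)]
    y = sum(v * hj for v, hj in zip(w2, h)) + b2
    return h, y


def loss_and_grads(params, data):
    """Mean squared error over `data` and its gradient, computed by backpropagation."""
    w1, b1, w2, b2 = params
    n, hidden = len(data), len(w1)
    gw1, gb1, gw2, gb2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden, 0.0
    loss = 0.0
    for x, target in data:
        h, y = forward(params, x)
        err = y - target
        loss += err * err / n
        dy = 2.0 * err / n                       # dL/dy for this example
        gb2 += dy                                # dy/db2 = 1
        for j in range(hidden):
            gw2[j] += dy * h[j]                  # dy/dw2_j = h_j
            dz = dy * w2[j] * (1.0 - h[j] ** 2)  # chain rule back through tanh
            gw1[j] += dz * x                     # dz_j/dw1_j = x
            gb1[j] += dz                         # dz_j/db1_j = 1
    return loss, (gw1, gb1, gw2, gb2)


def train(params, data, lr=0.05, steps=200):
    """Full-batch gradient descent using the backpropagated gradients."""
    for _ in range(steps):
        _, (gw1, gb1, gw2, gb2) = loss_and_grads(params, data)
        w1, b1, w2, b2 = params
        params = (
            [w - lr * g for w, g in zip(w1, gw1)],
            [b - lr * g for b, g in zip(b1, gb1)],
            [w - lr * g for w, g in zip(w2, gw2)],
            b2 - lr * gb2,
        )
    return params


# Usage: fit three hypothetical (x, target) points.
rng = random.Random(0)
data = [(-1.0, 0.5), (0.0, -0.2), (1.0, 0.8)]
params = init_params(3, rng)
trained = train(params, data)
print(loss_and_grads(params, data)[0], "->", loss_and_grads(trained, data)[0])
```

A quick way to check hand-written backpropagation is to compare each gradient with a central finite-difference estimate, `(L(w + eps) - L(w - eps)) / (2 * eps)`; the two should agree to several decimal places.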

Fundamental Concepts of Genetic Algorithms

Genetic algorithms are optimization techniques inspired by natural selection, utilizing populations of candidate solutions that evolve through selection, crossover, and mutation processes. These algorithms encode solutions as chromosomes, applying fitness functions to evaluate and guide evolutionary progress toward optimal or near-optimal solutions. Unlike backpropagation, which relies on gradient descent to minimize error in neural networks, genetic algorithms operate without gradient information, making them suitable for complex or non-differentiable optimization problems in artificial intelligence.
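The sketch below shows these operators on a toy problem, assuming bit-string chromosomes and the "OneMax" fitness function (count the 1 bits) as a stand-in objective. Tournament selection, single-point crossover, bit-flip mutation, elitism, and all parameter values are illustrative choices, not the only way to build a genetic algorithm.

```python
import random


def fitness(bits):
    """Toy "OneMax" objective (an assumption): count the 1 bits."""
    return sum(bits)


def tournament_select(population, rng, k=3):
    """Selection: the fittest of k randomly chosen individuals wins."""
    return max(rng.sample(population, k), key=fitness)


def crossover(parent_a, parent_b, rng):
    """Single-point crossover: prefix from one parent, suffix from the other."""
    point = rng.randrange(1, len(parent_a))
    return parent_a[:point] + parent_b[point:]


def mutate(bits, rng, rate):
    """Bit-flip mutation: each gene flips with probability `rate`."""
    return [1 - b if rng.random() < rate else b for b in bits]


def evolve(length=20, pop_size=30, generations=60, mutation_rate=0.05, seed=0):
    rng = random.Random(seed)
    # Each chromosome is a list of 0/1 genes.
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    history = [max(map(fitness, population))]
    for _ in range(generations):
        # Elitism: copy the current best individual into the next generation unchanged.
        next_gen = [max(population, key=fitness)]
        while len(next_gen) < pop_size:
            child = crossover(tournament_select(population, rng),
                              tournament_select(population, rng), rng)
            next_gen.append(mutate(child, rng, mutation_rate))
        population = next_gen
        history.append(max(map(fitness, population)))
    return max(population, key=fitness), history


best, history = evolve()
print(fitness(best), history[0], history[-1])
```

Because the best individual is copied unchanged into each new generation, the best fitness recorded in `history` never decreases.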

Comparative Learning Processes: Backpropagation vs Genetic Algorithms

Backpropagation computes the partial derivatives of the error with respect to each neural network weight, and gradient descent uses them to adjust the weights iteratively. Genetic algorithms instead employ evolutionary operators, namely selection, crossover, and mutation, to optimize solutions without requiring gradient information. While backpropagation excels at fine-tuning differentiable models, genetic algorithms are advantageous for global search in complex, non-differentiable problem spaces.
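To make the contrast concrete, the sketch below uses a hypothetical staircase objective whose derivative is zero almost everywhere. Gradient descent (here with a finite-difference derivative, an assumption) sees no slope and never moves, while a gradient-free mutation-and-selection loop only compares objective values. The search loop is a (1 + λ) evolution strategy, a simpler relative of a genetic algorithm that shares its reliance on fitness comparisons rather than gradients.

```python
import math
import random


def staircase(x):
    """Hypothetical objective: piecewise constant, minimum value 0 when |x - 3| < 1.
    Its derivative is zero almost everywhere, so gradients carry no signal."""
    return math.floor(abs(x - 3.0))


def gradient_descent(f, x, lr=0.1, steps=100, h=1e-5):
    """Gradient descent using a central finite-difference estimate of f'(x)."""
    for _ in range(steps):
        grad = (f(x + h) - f(x - h)) / (2.0 * h)
        x -= lr * grad
    return x


def mutation_selection(f, x, sigma=1.0, offspring=10, generations=100, seed=0):
    """(1 + lambda) evolution strategy: compares f values only, never differentiates."""
    rng = random.Random(seed)
    for _ in range(generations):
        candidate = min((x + rng.gauss(0.0, sigma) for _ in range(offspring)), key=f)
        if f(candidate) <= f(x):  # accept ties so the search can drift across plateaus
            x = candidate
    return x


start = 10.5
print(staircase(gradient_descent(staircase, start)))  # prints 7: never left the start
print(staircase(mutation_selection(staircase, start)))
```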

Performance and Accuracy in AI Models

Backpropagation typically delivers higher accuracy when training neural networks because it minimizes error efficiently through gradient descent, making it the preferred method for supervised learning tasks that require precise model tuning. Genetic algorithms excel at exploring complex, multimodal search spaces and optimizing non-differentiable functions, often providing robust solutions when data is sparse or the problem landscape is highly irregular. In terms of performance, backpropagation is faster and more computationally efficient for well-structured problems, whereas genetic algorithms can achieve competitive results in diverse optimization scenarios but at a higher computational cost.

Applications in Machine Learning and Neural Networks

Backpropagation, a gradient-based optimization algorithm, efficiently trains deep neural networks by guiding weight adjustments that minimize error, making it ideal for supervised learning tasks such as image recognition and speech processing. Genetic algorithms, inspired by natural selection, explore a broader search space by evolving populations of solutions and excel in optimization problems where the objective is non-differentiable or gradient information is unavailable, such as neural architecture search and hyperparameter tuning. Combining backpropagation's precision with genetic algorithms' global search capabilities can make machine learning models more robust and adaptable in complex environments.
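As a sketch of the hyperparameter-tuning use case, and of combining the two methods, the example below lets a real-coded genetic algorithm search over the learning rate while each candidate is scored by running gradient descent. The quadratic training task, the log-scale encoding, the operators, and every parameter value are hypothetical simplifications; in practice the inner loop would be a backpropagation-trained network, and each fitness evaluation would be far more expensive.

```python
import random


def train_loss(lr, steps=5, w0=0.0):
    """Hypothetical inner loop: gradient descent on L(w) = (w - 2)^2.
    Returns the loss after `steps` updates (lower is better)."""
    w = w0
    for _ in range(steps):
        w -= lr * 2.0 * (w - 2.0)  # dL/dw = 2 (w - 2)
    return (w - 2.0) ** 2


def tune_learning_rate(pop_size=12, generations=20, seed=0):
    """Outer loop: a real-coded GA whose genes are log10(learning rate)."""
    rng = random.Random(seed)

    def score(gene):
        return train_loss(10.0 ** gene)

    population = [rng.uniform(-3.0, 0.0) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=score)
        parents = population[: pop_size // 2]  # truncation selection
        children = [parents[0]]                # elitism: keep the best gene
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            child = (a + b) / 2.0 + rng.gauss(0.0, 0.3)  # crossover, then mutation
            children.append(child)
        population = children
    return 10.0 ** min(population, key=score)


best_lr = tune_learning_rate()
print(best_lr, train_loss(best_lr))
```

For this toy loss, a learning rate of 0.5 reaches the minimum in a single step and any learning rate of 1 or more fails to reduce the loss, so the search is expected to move toward the middle of that range.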

Strengths and Limitations of Each Approach

Backpropagation excels at efficiently training deep neural networks by minimizing error through gradient descent, making it ideal for supervised learning tasks with large labeled datasets. Genetic algorithms offer robust optimization across complex, multimodal search spaces without requiring gradient information, which suits them to evolving network architectures or solving non-differentiable problems. Backpropagation's main limitations are that gradient descent can become trapped in local minima and that the model must be differentiable, while genetic algorithms tend to be computationally intensive and slower to converge.
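The local-minimum limitation can be seen on a hypothetical one-dimensional double-well function: gradient descent started in the right-hand basin settles in that basin's local minimum, while a population spread across the search space samples both basins and, through selection, keeps the better one. The objective, the starting point, the evenly spaced initial population, and the GA operators are all illustrative assumptions.

```python
import random


def double_well(x):
    """Hypothetical objective with two basins:
    global minimum near x = -1.04, local minimum near x = +0.96."""
    return (x * x - 1.0) ** 2 + 0.3 * x


def double_well_grad(x):
    """Analytic derivative of double_well."""
    return 4.0 * x * (x * x - 1.0) + 0.3


def gradient_descent(x, lr=0.01, steps=500):
    for _ in range(steps):
        x -= lr * double_well_grad(x)
    return x


def population_search(pop_size=21, generations=30, sigma=0.1, seed=0):
    """GA-style search: truncation selection, arithmetic crossover, Gaussian mutation.
    The initial population is spread evenly over [-2, 2], so it samples both basins."""
    rng = random.Random(seed)
    population = [-2.0 + 4.0 * i / (pop_size - 1) for i in range(pop_size)]
    for _ in range(generations):
        population.sort(key=double_well)
        parents = population[: pop_size // 2]  # survivors carry over unchanged
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append((a + b) / 2.0 + rng.gauss(0.0, sigma))
        population = parents + children
    return min(population, key=double_well)


x_gd = gradient_descent(1.5)
x_pop = population_search()
print(round(x_gd, 2), round(x_pop, 2))
```

The population search succeeds here partly because its initial individuals already cover both basins; with a poorly spread population, a genetic algorithm can also converge prematurely to a local optimum.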

Optimization Techniques: Efficiency and Scalability

Backpropagation, combined with gradient descent, optimizes neural network weights efficiently by minimizing error, which makes it highly scalable to large datasets and deep architectures. Genetic algorithms use evolutionary strategies to explore complex solution spaces without requiring differentiable functions; they offer robustness in global optimization, but often at the cost of slower convergence and higher computational expense. Combining these methods can leverage backpropagation's speed and genetic algorithms' exploration capabilities to improve optimization in artificial intelligence applications.

Real-World Use Cases and Industry Adoption

Backpropagation dominates in deep learning applications such as image recognition, natural language processing, and autonomous systems due to its efficiency in training neural networks with large datasets. Genetic algorithms excel in optimization problems where search spaces are complex and poorly understood, making them valuable in robotics, scheduling, and engineering design. Industries like finance and healthcare leverage backpropagation for predictive analytics, while automotive and aerospace sectors adopt genetic algorithms for evolving control systems and structural optimization.

Future Trends in AI Optimization Methods

Backpropagation remains a cornerstone in training deep neural networks due to its efficiency in gradient-based optimization, but genetic algorithms are gaining attention for their robustness in exploring complex, multi-modal search spaces without gradient information. Future trends in AI optimization methods emphasize hybrid approaches that combine the precision of backpropagation with the global search capabilities of genetic algorithms to enhance adaptability and convergence speed. Research is increasingly focused on developing novel algorithms that leverage evolutionary strategies alongside gradient descent to address challenges in scalability and real-time learning.

Backpropagation vs Genetic Algorithms Infographic

techiny.com