Bag-of-Words represents text by counting word occurrences without capturing word order or semantic meaning, resulting in sparse and high-dimensional vectors. Word Embedding transforms words into dense, low-dimensional vectors that preserve semantic relationships, enabling more effective natural language processing tasks. Embeddings such as Word2Vec or GloVe enhance model performance by capturing context and word similarity compared to the simplistic Bag-of-Words approach.

Table of Comparison

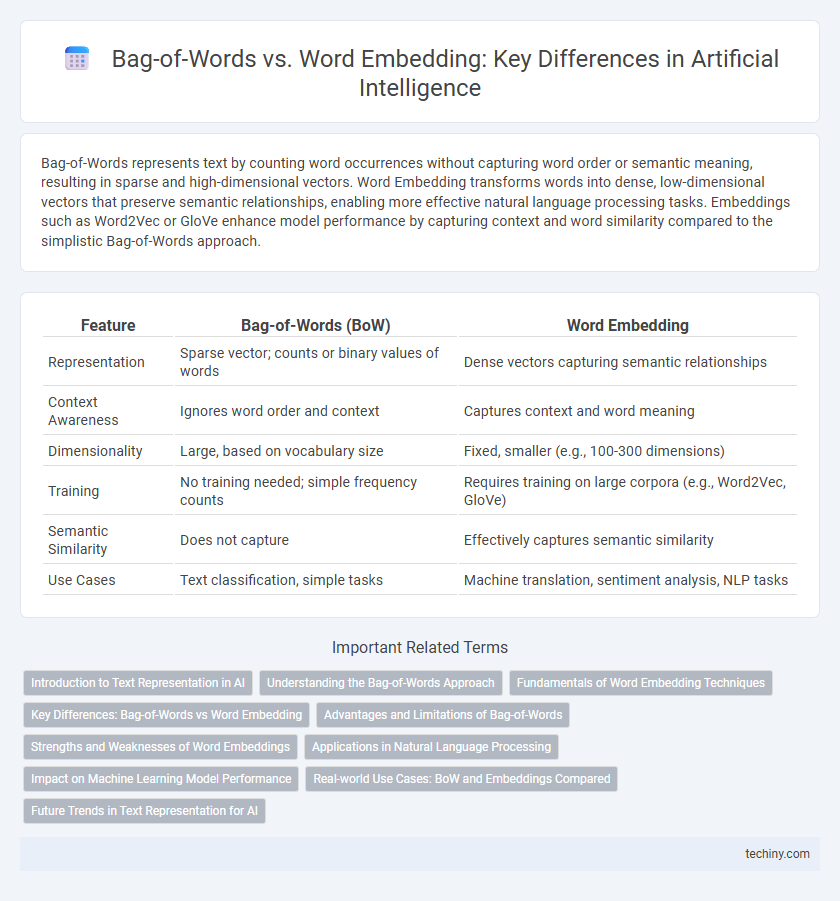

| Feature | Bag-of-Words (BoW) | Word Embedding |

|---|---|---|

| Representation | Sparse vector; counts or binary values of words | Dense vectors capturing semantic relationships |

| Context Awareness | Ignores word order and context | Captures context and word meaning |

| Dimensionality | Large, based on vocabulary size | Fixed, smaller (e.g., 100-300 dimensions) |

| Training | No training needed; simple frequency counts | Requires training on large corpora (e.g., Word2Vec, GloVe) |

| Semantic Similarity | Does not capture | Effectively captures semantic similarity |

| Use Cases | Text classification, simple tasks | Machine translation, sentiment analysis, NLP tasks |

Introduction to Text Representation in AI

Bag-of-Words (BoW) represents text by counting word frequency, capturing term occurrence but ignoring word order and context, which limits semantic understanding. Word Embedding techniques, such as Word2Vec and GloVe, transform words into dense vector representations that encode semantic relationships and contextual information. These embedding models enhance natural language processing tasks by enabling machines to comprehend meaning and similarity between words more effectively than traditional BoW methods.

Understanding the Bag-of-Words Approach

The Bag-of-Words (BoW) approach in Artificial Intelligence transforms text into fixed-length vectors by counting word occurrences, disregarding grammar and word order, making it simple yet effective for many natural language processing tasks. This method creates a vocabulary from a corpus and represents each document as a frequency vector of these vocabulary words, facilitating straightforward text classification and clustering. Despite its simplicity, BoW lacks semantic understanding and context awareness compared to advanced techniques like Word Embedding, which encode word meanings in dense, continuous vectors.

Fundamentals of Word Embedding Techniques

Word Embedding techniques transform text into dense vector representations, capturing semantic relationships between words based on context, unlike the Bag-of-Words model which relies on sparse, frequency-based vectors. Common embedding methods include Word2Vec, which uses continuous bag-of-words and skip-gram models to predict surrounding words, and GloVe, which generates embeddings through matrix factorization of word co-occurrence statistics. These embeddings enable machines to understand word similarity and meaning more effectively in natural language processing tasks.

Key Differences: Bag-of-Words vs Word Embedding

Bag-of-Words represents text as fixed-length vectors based on word frequency, disregarding word order and contextual meaning, resulting in sparse and high-dimensional data. Word Embedding captures semantic relationships by mapping words into dense, continuous vector spaces where similar words have closer representations, enabling better understanding of context and nuances. Key differences include Bag-of-Words' simplicity and interpretability versus Word Embedding's ability to retain semantic information and improve performance in NLP tasks.

Advantages and Limitations of Bag-of-Words

Bag-of-Words (BoW) offers simplicity and ease of implementation, making it effective for text classification tasks with limited computational resources. However, BoW ignores word order and context, leading to loss of semantic meaning and inability to capture polysemy or synonymy. Its high dimensionality and sparsity can result in inefficiency for large vocabularies compared to dense vector representations like word embeddings.

Strengths and Weaknesses of Word Embeddings

Word embeddings provide dense vector representations that capture semantic relationships between words, enabling improved understanding of context and meaning compared to the sparse, high-dimensional Bag-of-Words model. They excel in tasks requiring semantic similarity, analogies, and handling synonyms but require large corpora and extensive training to generate accurate representations. However, embeddings can struggle with out-of-vocabulary words and context-dependent meanings, limiting their adaptability in dynamic language scenarios.

Applications in Natural Language Processing

Bag-of-Words (BoW) represents text by word frequency but lacks semantic context, making it effective for simple document classification tasks. Word Embedding techniques like Word2Vec and GloVe capture semantic relationships between words, enhancing performance in complex NLP applications such as sentiment analysis, machine translation, and named entity recognition. Embeddings enable models to understand word similarity and context, significantly improving language understanding and generation accuracy.

Impact on Machine Learning Model Performance

Bag-of-Words (BoW) captures word frequency but often ignores context and semantic relationships, which limits its effectiveness in complex natural language processing tasks. Word Embedding techniques like Word2Vec and GloVe represent words in continuous vector spaces, preserving semantic similarities and improving the ability of machine learning models to understand language nuances. Models utilizing word embeddings typically exhibit higher accuracy and better generalization compared to those relying on Bag-of-Words features.

Real-world Use Cases: BoW and Embeddings Compared

Bag-of-Words (BoW) remains effective in document classification tasks involving smaller datasets or where interpretability of word frequency is crucial, such as spam detection and sentiment analysis. Word Embeddings, including models like Word2Vec and GloVe, excel in capturing semantic relationships, enabling advanced use cases like machine translation, semantic search, and chatbot response generation. In practice, embeddings outperform BoW when handling large-scale, context-rich text data requiring nuanced understanding of word meaning and context.

Future Trends in Text Representation for AI

Future trends in text representation for AI emphasize the shift from traditional Bag-of-Words models, which rely on sparse and high-dimensional vectors, towards advanced word embedding techniques like Word2Vec, GloVe, and contextual embeddings such as BERT and GPT. These embedding models enable richer semantic understanding by capturing context, word relationships, and polysemy, enhancing performance in natural language processing tasks. Emerging innovations focus on dynamic, domain-specific embeddings and multimodal representations to improve AI's interpretability and adaptability across diverse applications.

Bag-of-Words vs Word Embedding Infographic

techiny.com

techiny.com