Bagging reduces variance by training multiple independent models on random subsets of the data and aggregating their predictions, making it effective for high-variance algorithms like decision trees. Boosting sequentially trains models, each correcting errors of the previous ones, which reduces both bias and variance but can risk overfitting if not properly regularized. Choosing between bagging and boosting depends on the problem complexity, data size, and the need to balance bias-variance trade-offs for optimal AI model performance.

Table of Comparison

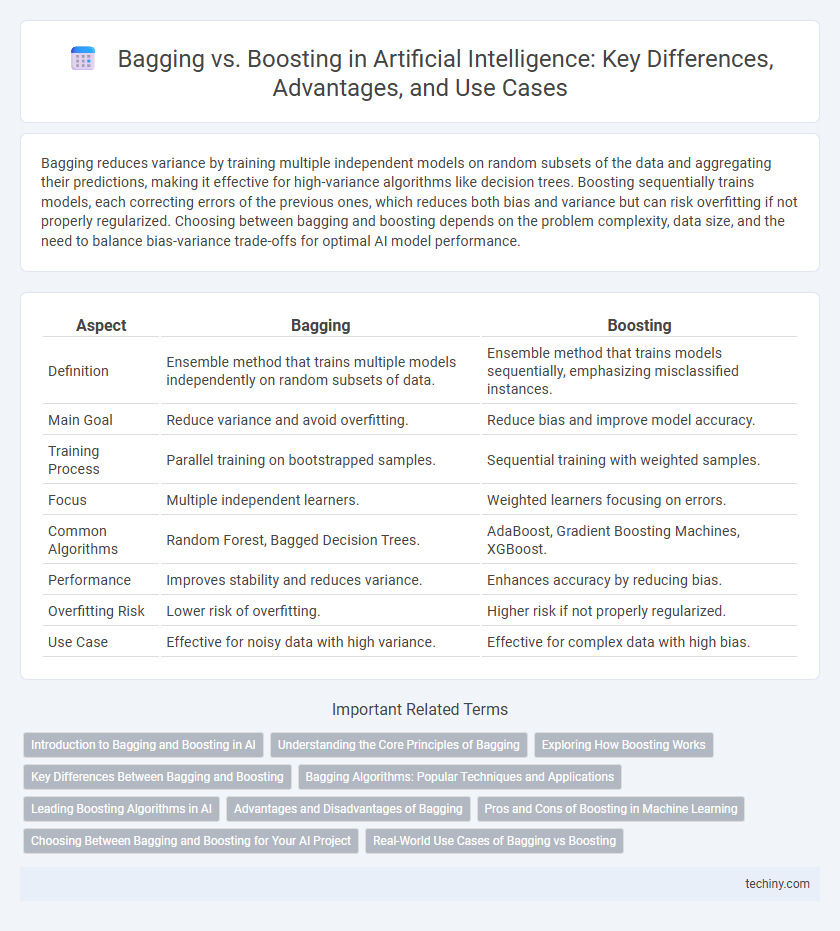

| Aspect | Bagging | Boosting |

|---|---|---|

| Definition | Ensemble method that trains multiple models independently on random subsets of data. | Ensemble method that trains models sequentially, emphasizing misclassified instances. |

| Main Goal | Reduce variance and avoid overfitting. | Reduce bias and improve model accuracy. |

| Training Process | Parallel training on bootstrapped samples. | Sequential training with weighted samples. |

| Focus | Multiple independent learners. | Weighted learners focusing on errors. |

| Common Algorithms | Random Forest, Bagged Decision Trees. | AdaBoost, Gradient Boosting Machines, XGBoost. |

| Performance | Improves stability and reduces variance. | Enhances accuracy by reducing bias. |

| Overfitting Risk | Lower risk of overfitting. | Higher risk if not properly regularized. |

| Use Case | Effective for noisy data with high variance. | Effective for complex data with high bias. |

Introduction to Bagging and Boosting in AI

Bagging and Boosting are ensemble learning techniques designed to improve the accuracy and stability of machine learning models. Bagging, or Bootstrap Aggregating, generates multiple independent models by training on varied subsets of data and combines their predictions through averaging or voting to reduce variance. Boosting sequentially trains models, emphasizing previously misclassified instances to minimize bias and create a strong, aggregated predictive model from weak learners.

Understanding the Core Principles of Bagging

Bagging, or Bootstrap Aggregating, enhances model stability and accuracy by training multiple independent base models on different random subsets of the original dataset and aggregating their predictions through majority voting or averaging. This ensemble technique primarily reduces variance and helps prevent overfitting in complex models like decision trees. By relying on parallel training and diverse data samples, Bagging improves generalization without drastically increasing bias.

Exploring How Boosting Works

Boosting improves model accuracy by sequentially training weak learners, where each new model focuses on correcting errors made by previous models. The weighted combination of these learners enhances prediction performance by minimizing bias and variance. Key algorithms like AdaBoost and Gradient Boosting rely on this iterative re-weighting mechanism to build a strong composite classifier.

Key Differences Between Bagging and Boosting

Bagging reduces variance by training multiple independent models on random subsets of data using techniques like bootstrap sampling, leading to improved model stability and accuracy. Boosting sequentially trains weak learners where each model focuses on correcting errors made by previous ones, thereby reducing bias and improving overall prediction performance. While bagging emphasizes parallelization and variance reduction, boosting targets bias reduction through adaptive weight adjustments and model dependencies.

Bagging Algorithms: Popular Techniques and Applications

Bagging algorithms, such as Random Forest and Bootstrap Aggregating, enhance model stability and accuracy by training multiple base learners on random subsets of the training data and aggregating their predictions. These techniques effectively reduce variance and prevent overfitting, making them ideal for handling noisy datasets and complex classification tasks. Common applications of bagging include fraud detection, medical diagnosis, and customer segmentation, where robust and reliable predictive performance is crucial.

Leading Boosting Algorithms in AI

Leading boosting algorithms in AI include AdaBoost, Gradient Boosting Machines (GBM), and XGBoost, each enhancing model accuracy by combining multiple weak learners into a strong ensemble. XGBoost is celebrated for its scalability, speed, and regularization capabilities, making it a top choice in machine learning competitions and real-world applications. Gradient Boosting Machines optimize predictive performance through sequential tree building, minimizing errors with gradient descent techniques.

Advantages and Disadvantages of Bagging

Bagging enhances model stability and accuracy by reducing variance through training multiple base learners on different subsets of the dataset, making it effective for high-variance algorithms like decision trees. It is less prone to overfitting compared to boosting but may struggle with biased base models since it does not reduce bias significantly. The main disadvantage includes increased computational cost due to training multiple models and less improvement in predictive performance when the base learners are weak or already stable.

Pros and Cons of Boosting in Machine Learning

Boosting enhances model accuracy by sequentially combining weak learners to reduce bias and variance, making it highly effective for complex datasets. Its main drawbacks include susceptibility to overfitting, especially with noisy data, and increased computational cost compared to bagging techniques. While boosting often achieves superior performance, it requires careful tuning of hyperparameters such as learning rate and number of estimators to prevent model degradation.

Choosing Between Bagging and Boosting for Your AI Project

Choosing between bagging and boosting for your AI project depends on the nature of your data and the model's robustness. Bagging, such as with Random Forests, excels at reducing variance and preventing overfitting in noisy datasets by training multiple independent models on bootstrapped samples. Boosting algorithms like AdaBoost or Gradient Boosting focus on minimizing bias by sequentially adjusting weights to correct previous errors, making them ideal for improving predictive accuracy on complex patterns.

Real-World Use Cases of Bagging vs Boosting

Bagging is widely applied in random forest algorithms for fraud detection and risk assessment due to its ability to reduce variance and prevent overfitting in large datasets. Boosting methods like AdaBoost and Gradient Boosting are favored in customer churn prediction and credit scoring because they improve model accuracy by sequentially correcting errors. Real-world applications demonstrate bagging's strength in stable, high-variance environments, while boosting excels where fine-grained predictive performance is crucial.

Bagging vs Boosting Infographic

techiny.com

techiny.com