Batch Gradient Descent processes the entire dataset to compute gradients, ensuring stable convergence but requiring significant computational resources, especially with large datasets. Stochastic Gradient Descent updates model parameters using individual data points, leading to faster iterations and the ability to escape local minima, though it introduces more noise and variability in updates. Choosing between these methods depends on dataset size, computational capacity, and the need for convergence stability versus speed.

Table of Comparison

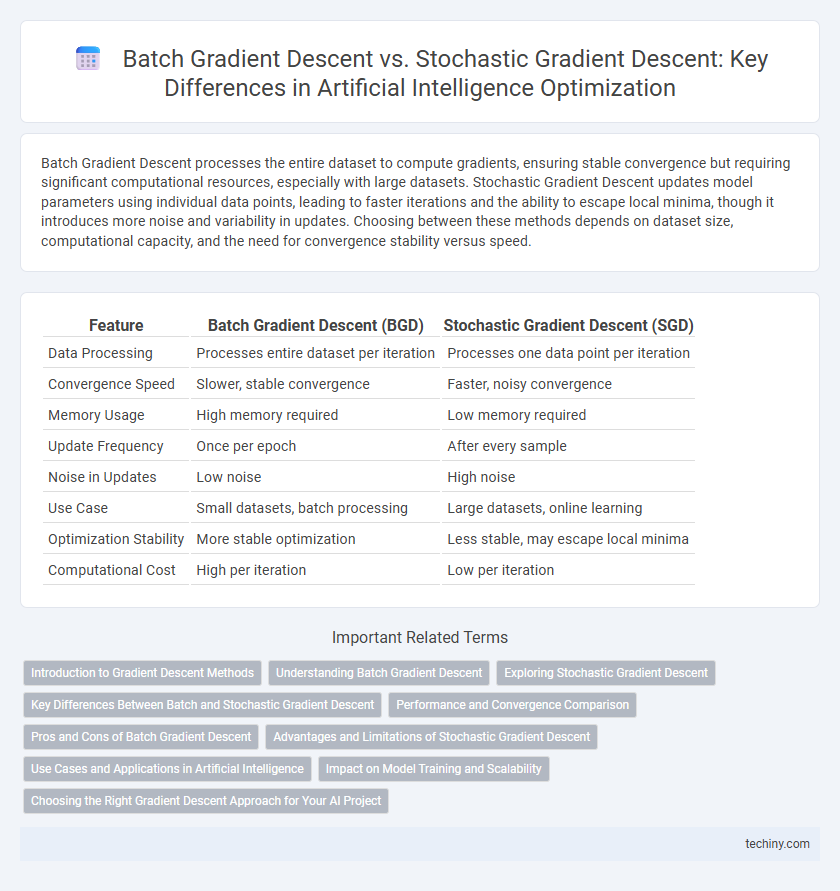

| Feature | Batch Gradient Descent (BGD) | Stochastic Gradient Descent (SGD) |

|---|---|---|

| Data Processing | Processes entire dataset per iteration | Processes one data point per iteration |

| Convergence Speed | Slower, stable convergence | Faster, noisy convergence |

| Memory Usage | High memory required | Low memory required |

| Update Frequency | Once per epoch | After every sample |

| Noise in Updates | Low noise | High noise |

| Use Case | Small datasets, batch processing | Large datasets, online learning |

| Optimization Stability | More stable optimization | Less stable, may escape local minima |

| Computational Cost | High per iteration | Low per iteration |

Introduction to Gradient Descent Methods

Batch Gradient Descent calculates the gradient using the entire dataset, ensuring a stable and accurate convergence but often requiring significant computational resources. Stochastic Gradient Descent updates model parameters using one training example at a time, enabling faster iterations and the ability to escape local minima but at the cost of higher variance in updates. Both methods aim to minimize the cost function in machine learning models by iteratively adjusting parameters toward the global minimum.

Understanding Batch Gradient Descent

Batch Gradient Descent calculates the gradient using the entire training dataset, ensuring a stable and accurate update of model parameters in each iteration. This method is computationally intensive but provides smooth convergence towards the global minimum of the loss function. It is particularly effective for datasets that fit comfortably in memory and where precise gradient estimation is prioritized over faster, noisier updates.

Exploring Stochastic Gradient Descent

Stochastic Gradient Descent (SGD) updates model parameters using a single training example at a time, enabling faster iterations and more frequent updates compared to Batch Gradient Descent, which processes the entire dataset per update. This makes SGD particularly effective for large-scale machine learning tasks where computational resources or time are limited. The inherent noise in SGD's parameter updates can help escape local minima, improving convergence to better solutions in complex optimization landscapes.

Key Differences Between Batch and Stochastic Gradient Descent

Batch Gradient Descent calculates the gradient using the entire dataset, resulting in stable but potentially slow convergence, especially with large datasets. Stochastic Gradient Descent computes the gradient for each training example individually, enabling faster iterations and more frequent updates but with higher variance in the convergence path. Batch Gradient Descent is preferred for precise convergence while Stochastic Gradient Descent excels in scalability and efficiency for massive or streaming data.

Performance and Convergence Comparison

Batch Gradient Descent processes the entire dataset to compute gradients, leading to slower but more stable convergence suitable for convex problems. Stochastic Gradient Descent updates parameters using individual data points, offering faster iterations and better performance in large-scale or non-convex tasks despite noisy convergence paths. The trade-off between convergence speed and stability makes SGD preferable for deep learning, while Batch Gradient Descent excels in smaller, well-defined scenarios.

Pros and Cons of Batch Gradient Descent

Batch Gradient Descent processes the entire dataset to compute the gradient, ensuring stable and accurate convergence, particularly for convex functions. It requires substantial memory and computational resources, making it less scalable for very large datasets or real-time applications. The method's slow updates can hinder performance in dynamic environments, but it benefits from reduced variance in gradient estimates, promoting consistent descent paths.

Advantages and Limitations of Stochastic Gradient Descent

Stochastic Gradient Descent (SGD) offers faster convergence and better scalability on large datasets by updating model parameters using individual training examples, enabling efficient online learning and reduced memory usage. However, its inherent randomness causes noisy updates that may lead to fluctuations around the global minimum, requiring careful tuning of the learning rate and potential use of techniques like learning rate decay or momentum. Despite this, SGD excels in handling complex, high-dimensional data and non-convex optimization problems common in deep learning.

Use Cases and Applications in Artificial Intelligence

Batch Gradient Descent is ideal for training models on large, static datasets where memory efficiency and convergence stability are critical, commonly used in deep learning for image recognition and natural language processing tasks. Stochastic Gradient Descent excels in scenarios requiring online learning or dealing with streaming data, such as real-time recommendation systems and adaptive robotics. Both methods optimize neural network weights but differ in computational speed and noise tolerance, influencing their application in artificial intelligence workflows.

Impact on Model Training and Scalability

Batch Gradient Descent processes the entire dataset in each iteration, ensuring stable and accurate gradient estimates but often resulting in slower training times and higher memory consumption, which can limit scalability for large datasets. Stochastic Gradient Descent updates model parameters using individual data points, enabling faster convergence and improved scalability for massive datasets while introducing higher variance in updates that may lead to less stable training paths. The choice between these methods directly impacts training efficiency, model convergence speed, and the ability to scale AI models across diverse data volumes and computational resources.

Choosing the Right Gradient Descent Approach for Your AI Project

Batch Gradient Descent processes the entire dataset to compute gradients, ensuring stable convergence but often requiring significant computational resources, making it ideal for smaller datasets or when precise accuracy is critical. Stochastic Gradient Descent updates model parameters using individual data points, enabling faster iterations and better scalability for large-scale AI projects but may introduce more noise and less stable convergence. Selecting the appropriate gradient descent method depends on dataset size, computational capacity, and the required balance between speed and model accuracy in your AI application.

Batch Gradient Descent vs Stochastic Gradient Descent Infographic

techiny.com

techiny.com