Cross-validation offers a robust method for evaluating Artificial Intelligence models by partitioning data into multiple subsets, ensuring that each sample is tested against training data, which minimizes overfitting risks and provides a more reliable estimation of model performance. Holdout validation, while simpler and faster, divides data into a single training set and a test set, making it more prone to variance in performance metrics depending on how the data is split. Selecting cross-validation over holdout validation enhances model generalization and accuracy assessment in AI projects.

Table of Comparison

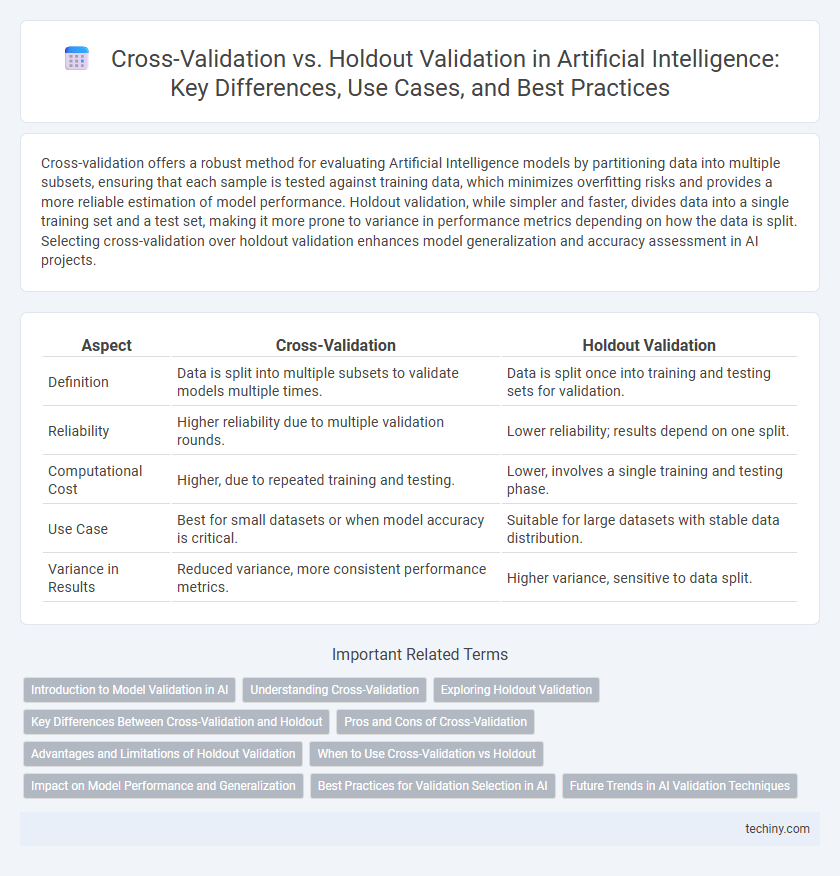

| Aspect | Cross-Validation | Holdout Validation |

|---|---|---|

| Definition | Data is split into multiple subsets to validate models multiple times. | Data is split once into training and testing sets for validation. |

| Reliability | Higher reliability due to multiple validation rounds. | Lower reliability; results depend on one split. |

| Computational Cost | Higher, due to repeated training and testing. | Lower, involves a single training and testing phase. |

| Use Case | Best for small datasets or when model accuracy is critical. | Suitable for large datasets with stable data distribution. |

| Variance in Results | Reduced variance, more consistent performance metrics. | Higher variance, sensitive to data split. |

Introduction to Model Validation in AI

Model validation in artificial intelligence ensures the reliability and generalizability of predictive models by assessing their performance on unseen data. Cross-validation techniques, such as k-fold cross-validation, provide a robust evaluation by repeatedly splitting the dataset into multiple training and testing subsets, reducing variance in the performance estimate. Holdout validation divides the dataset into a single training and testing set, offering a simpler but potentially less stable measure of model accuracy.

Understanding Cross-Validation

Cross-validation is a robust statistical method used in artificial intelligence to assess model performance by partitioning the dataset into multiple subsets, ensuring each subset serves as both training and validation data across iterations. This technique reduces bias and variance in model evaluation compared to holdout validation, which relies on a single split of data into training and test sets. Cross-validation, especially k-fold cross-validation, provides a more reliable estimate of model generalization by averaging results over k different training-validation splits.

Exploring Holdout Validation

Holdout validation separates data into distinct training and testing sets, preserving the independence of evaluation for unbiased model performance assessment. This method is computationally efficient, making it suitable for large datasets or when rapid model iteration is required. Holdout validation's simplicity facilitates straightforward implementation but may introduce variability in performance estimates depending on the specific data split chosen.

Key Differences Between Cross-Validation and Holdout

Cross-validation divides the dataset into multiple folds to iteratively train and validate the model, ensuring more reliable performance estimates by reducing variance and bias. Holdout validation splits the data once into training and testing sets, which is faster but can lead to overfitting or underestimating model performance due to the single partition. Cross-validation is preferred for small datasets or when precision in model evaluation is crucial, while holdout validation suits large datasets or quick initial assessments.

Pros and Cons of Cross-Validation

Cross-validation offers robust model evaluation by utilizing multiple training and testing splits, reducing overfitting and providing more reliable performance metrics compared to holdout validation. It is computationally intensive and may require significantly more processing time, especially with large datasets or complex models. Though cross-validation enhances generalization estimates, it can introduce variance if data is not properly shuffled or stratified.

Advantages and Limitations of Holdout Validation

Holdout validation offers the advantage of simplicity and speed, making it suitable for large datasets where computational efficiency is crucial. However, it has limitations such as higher variance and potential bias in performance estimates due to relying on a single train-test split. This method may lead to less reliable model evaluation compared to cross-validation, especially with smaller datasets.

When to Use Cross-Validation vs Holdout

Cross-validation is ideal for small to medium datasets where maximizing training data and obtaining a reliable estimate of model performance is crucial. Holdout validation suits large datasets, offering faster evaluation by splitting data into separate training and testing sets without repeated training cycles. Selecting cross-validation improves model robustness and generalization on limited data, while holdout validation supports rapid prototyping with abundant data.

Impact on Model Performance and Generalization

Cross-validation provides a more reliable estimate of model performance by using multiple train-test splits, reducing variance and overfitting risks in artificial intelligence models. Holdout validation, while simpler and faster, risks biased evaluation due to reliance on a single split, potentially leading to poor generalization on unseen data. Effective generalization in AI models is improved through comprehensive cross-validation, which ensures robustness across diverse data subsets.

Best Practices for Validation Selection in AI

Cross-validation offers robust model evaluation by partitioning data into multiple training and testing sets, reducing variance and improving generalization in AI projects. Holdout validation, while simpler and faster, risks biased performance estimates due to limited training data subsets and is less reliable for small datasets. Best practices recommend employing k-fold cross-validation for comprehensive model assessment and reserving holdout validation for final performance confirmation on unseen data to balance accuracy and computational efficiency.

Future Trends in AI Validation Techniques

Emerging AI validation techniques increasingly integrate cross-validation with scalable holdout methods to enhance model robustness and generalizability in large datasets. Future trends emphasize automated validation pipelines using meta-learning to optimize hyperparameters dynamically across diverse AI architectures. Leveraging synthetic data and real-time feedback loops will further refine validation processes, ensuring adaptive and reliable AI system performance.

Cross-Validation vs Holdout Validation Infographic

techiny.com

techiny.com