Data augmentation enhances machine learning models by creating varied versions of existing datasets through techniques like rotation, scaling, and noise addition, improving model robustness and generalization. Data synthesis involves generating entirely new, artificial data using algorithms such as GANs or simulations, which expands datasets when real data is scarce or sensitive. Both methods address data limitations but differ in approach: augmentation modifies existing data, while synthesis produces novel, synthetic examples.

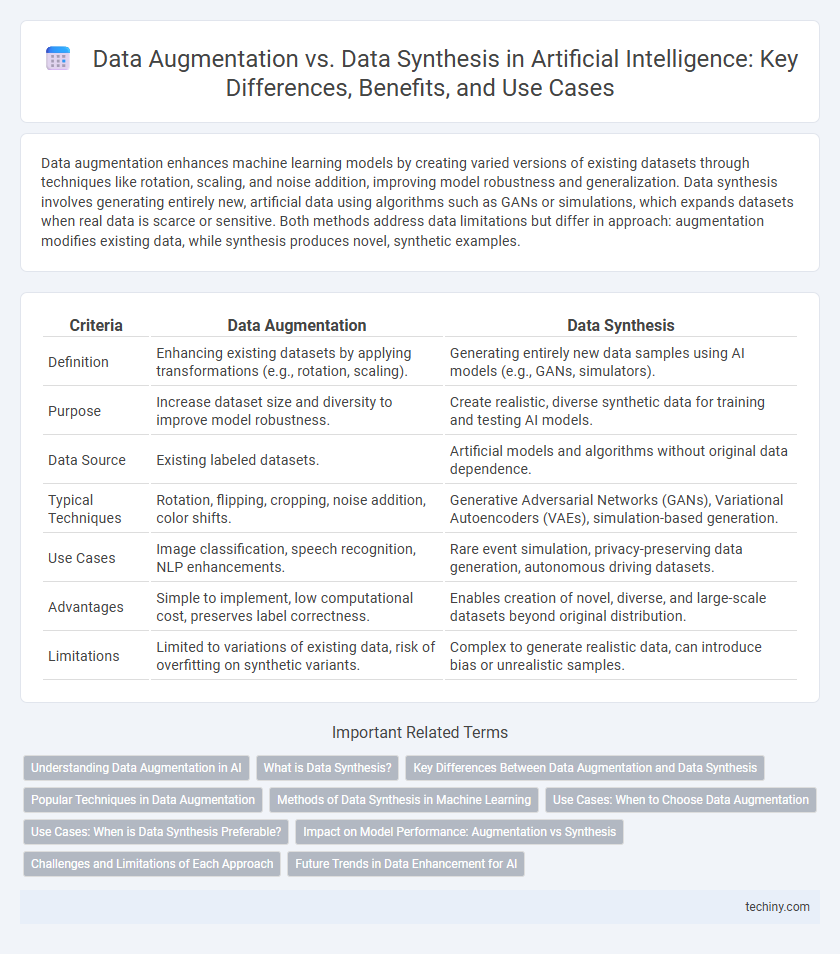

Table of Comparison

| Criteria | Data Augmentation | Data Synthesis |

|---|---|---|

| Definition | Enhancing existing datasets by applying transformations (e.g., rotation, scaling). | Generating entirely new data samples using AI models (e.g., GANs, simulators). |

| Purpose | Increase dataset size and diversity to improve model robustness. | Create realistic, diverse synthetic data for training and testing AI models. |

| Data Source | Existing labeled datasets. | Artificial models and algorithms without original data dependence. |

| Typical Techniques | Rotation, flipping, cropping, noise addition, color shifts. | Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), simulation-based generation. |

| Use Cases | Image classification, speech recognition, NLP enhancements. | Rare event simulation, privacy-preserving data generation, autonomous driving datasets. |

| Advantages | Simple to implement, low computational cost, preserves label correctness. | Enables creation of novel, diverse, and large-scale datasets beyond original distribution. |

| Limitations | Limited to variations of existing data, risk of overfitting on synthetic variants. | Complex to generate realistic data, can introduce bias or unrealistic samples. |

Understanding Data Augmentation in AI

Data augmentation in AI involves generating modified versions of existing data to enhance model training without altering the original labels, improving generalization and robustness. Techniques such as image rotation, cropping, and noise injection increase dataset diversity and help prevent overfitting. Unlike data synthesis, which creates entirely new data often using generative models, data augmentation modifies real samples to simulate variations encountered in real-world scenarios.

What is Data Synthesis?

Data synthesis refers to the process of generating artificial data that mimics real-world datasets using techniques such as generative adversarial networks (GANs) or simulation models. This method creates entirely new, diverse data points to enhance training datasets, improving machine learning model robustness and generalization. Unlike data augmentation, which alters existing data, data synthesis produces novel samples that can address data scarcity and privacy concerns in artificial intelligence development.

Key Differences Between Data Augmentation and Data Synthesis

Data augmentation involves applying transformations such as rotation, scaling, and flipping to existing datasets to increase diversity without altering the original data distribution. Data synthesis, in contrast, generates entirely new data instances through techniques like generative adversarial networks (GANs) or variational autoencoders (VAEs), creating novel samples that can expand the dataset beyond its initial scope. Key differences include augmentation's reliance on modifying real data versus synthesis's creation of artificial data, impacting model generalization and training robustness in distinct ways.

Popular Techniques in Data Augmentation

Popular techniques in data augmentation for artificial intelligence include image transformations such as rotation, scaling, flipping, and color jittering to diversify training datasets without collecting new data. Synthetic data generation methods like GANs (Generative Adversarial Networks) and SMOTE (Synthetic Minority Over-sampling Technique) create entirely new data points, enhancing model robustness by addressing class imbalances. Data augmentation increases model generalization by modifying existing data samples, while data synthesis generates novel examples to simulate real-world variations.

Methods of Data Synthesis in Machine Learning

Data synthesis in machine learning involves generating artificial data using techniques such as generative adversarial networks (GANs), variational autoencoders (VAEs), and rule-based simulations to enhance training datasets. GANs create realistic synthetic samples by learning data distributions through adversarial training, while VAEs enable the generation of diverse data points by encoding and decoding latent representations. Rule-based simulations produce structured synthetic data by applying domain-specific rules and constraints, facilitating model robustness in scenarios with limited real-world data.

Use Cases: When to Choose Data Augmentation

Data augmentation is ideal for improving model robustness in tasks with limited labeled datasets, such as image classification and speech recognition, by applying transformations like rotations, flips, or noise injection to existing data. It is particularly effective when the original data distribution closely represents the target domain, enabling models to generalize better without altering underlying semantics. Choose data augmentation when preserving authentic data characteristics is crucial and manual data collection is costly or time-consuming.

Use Cases: When is Data Synthesis Preferable?

Data synthesis is preferable in scenarios requiring large-scale, diverse datasets without risking privacy breaches, such as in healthcare or finance where access to real data is restricted. It enables the generation of realistic yet artificial data to train machine learning models while preserving confidentiality and complying with regulations like GDPR. Data augmentation is limited to variations of existing data, making synthesis essential for creating entirely new, high-quality datasets essential for robust AI model performance.

Impact on Model Performance: Augmentation vs Synthesis

Data augmentation enhances model performance by generating varied examples from existing data, improving robustness and generalization without introducing entirely new distributions. Data synthesis creates artificial data through models like GANs or simulators, potentially expanding the dataset diversity but risking distributional discrepancies that may affect model accuracy. Empirical studies show augmentation typically yields more consistent improvements, while synthesis effectiveness depends on the quality and realism of generated samples.

Challenges and Limitations of Each Approach

Data augmentation often faces challenges related to preserving label integrity and introducing realistic variability without overfitting, while data synthesis struggles with generating high-fidelity, diverse examples that accurately reflect complex data distributions. Limitations of data augmentation include potential redundancy and limited innovation in sample diversity, whereas synthetic data may suffer from artifacts and biases introduced by generative models. Both approaches require careful validation to ensure the augmented or synthetic datasets improve model generalizability without compromising accuracy.

Future Trends in Data Enhancement for AI

Future trends in data enhancement for AI emphasize advanced data augmentation techniques leveraging generative adversarial networks (GANs) to create more diverse and realistic training samples, improving model robustness. Data synthesis is evolving with synthetic data platforms that enable scalable, privacy-preserving datasets tailored for specific AI applications, accelerating development cycles. Integration of AI-driven augmentation and synthesis methods will optimize data pipelines, enhance model generalization, and address data scarcity challenges across industries.

Data Augmentation vs Data Synthesis Infographic

techiny.com

techiny.com