Decision trees provide a straightforward, interpretable model for classification and regression tasks but are prone to overfitting with complex data. Random forests improve accuracy by aggregating multiple decision trees, reducing variance through bagging and feature randomness. This ensemble approach enhances robustness and generalization compared to individual decision trees.

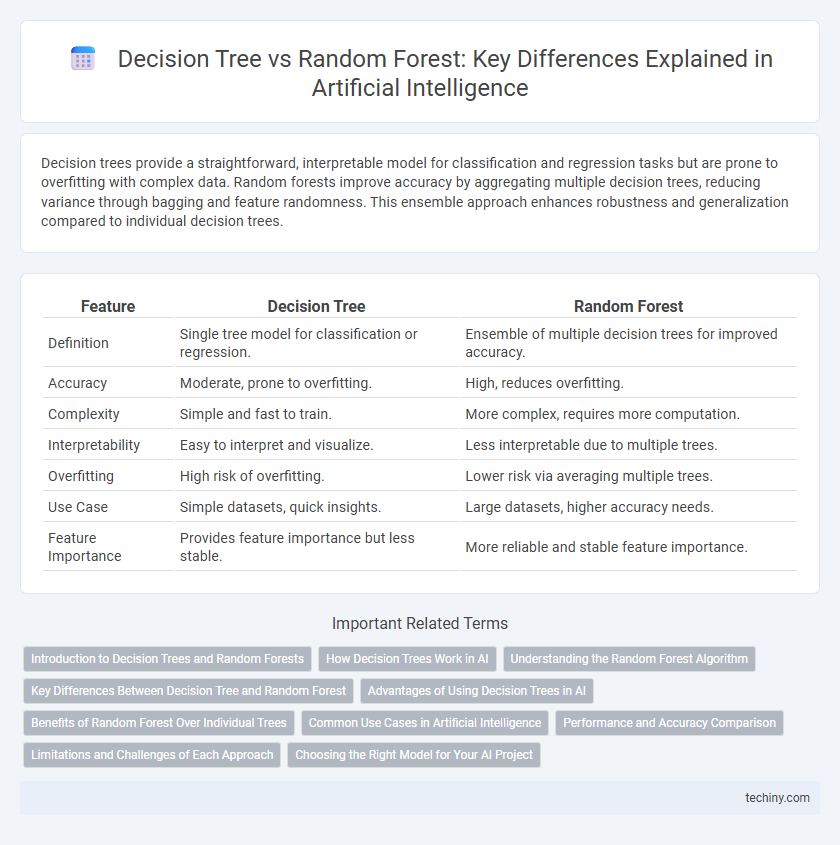

Table of Comparison

| Feature | Decision Tree | Random Forest |

|---|---|---|

| Definition | Single tree model for classification or regression. | Ensemble of multiple decision trees for improved accuracy. |

| Accuracy | Moderate, prone to overfitting. | High, reduces overfitting. |

| Complexity | Simple and fast to train. | More complex, requires more computation. |

| Interpretability | Easy to interpret and visualize. | Less interpretable due to multiple trees. |

| Overfitting | High risk of overfitting. | Lower risk via averaging multiple trees. |

| Use Case | Simple datasets, quick insights. | Large datasets, higher accuracy needs. |

| Feature Importance | Provides feature importance but less stable. | More reliable and stable feature importance. |

Introduction to Decision Trees and Random Forests

Decision trees are supervised learning algorithms used for classification and regression tasks, splitting data recursively based on feature values to create a tree structure of decisions. Random forests enhance decision trees by constructing an ensemble of trees through bootstrap sampling and random feature selection, improving model accuracy and reducing overfitting. Both methods are fundamental in machine learning, with decision trees offering interpretability and random forests providing robust predictive performance.

How Decision Trees Work in AI

Decision Trees in Artificial Intelligence function by recursively splitting datasets based on feature values, creating a tree-like model of decisions and their possible outcomes. Each node represents a test on an attribute, branches correspond to feature values, and leaf nodes represent class labels or regression outcomes. This hierarchical structure enables clear interpretability and straightforward decision-making in classification and regression tasks.

Understanding the Random Forest Algorithm

Random Forest is an ensemble learning algorithm that builds multiple decision trees during training and merges their outcomes to improve prediction accuracy and control overfitting. Each tree in the forest is trained on a random subset of the data with random feature selection, enhancing model robustness and reducing variance. This method leverages bagging and feature randomness, making Random Forest particularly effective for classification and regression tasks in complex datasets.

Key Differences Between Decision Tree and Random Forest

Decision Trees use a single model to make predictions by splitting data based on feature values, often leading to overfitting and lower accuracy on complex datasets. Random Forests aggregate the output of multiple decision trees to enhance generalization, improve accuracy, and reduce overfitting through ensemble learning techniques like bagging and feature randomness. Key differences include model complexity, robustness to noise, and predictive performance, where Random Forests outperform Decision Trees in most real-world applications.

Advantages of Using Decision Trees in AI

Decision Trees offer clear interpretability and visualization, making them ideal for understanding decision-making paths in AI applications. Their ability to handle both numerical and categorical data efficiently supports versatile problem-solving without extensive data preprocessing. Decision Trees also provide fast computation and require less memory, enabling quick deployment in real-time AI systems.

Benefits of Random Forest Over Individual Trees

Random Forest enhances predictive accuracy and robustness by aggregating multiple decision trees, reducing overfitting common in individual trees. It handles large datasets and high-dimensional features more efficiently, providing better generalization across diverse data types. This ensemble learning approach improves model stability and reduces variance, making it ideal for complex classification and regression tasks.

Common Use Cases in Artificial Intelligence

Decision trees excel in applications requiring interpretable models such as customer churn prediction, credit scoring, and clinical decision support due to their straightforward visual representation of decision paths. Random forests outperform in complex tasks like image recognition, fraud detection, and natural language processing by aggregating multiple decision trees to improve accuracy and reduce overfitting. Both algorithms are foundational in supervised learning but are chosen based on the trade-off between interpretability and predictive performance in AI projects.

Performance and Accuracy Comparison

Decision Tree models excel in interpretability and fast computation but often suffer from overfitting, leading to lower accuracy on complex datasets. Random Forest, an ensemble of multiple decision trees, significantly enhances performance by reducing variance and improving predictive accuracy through bootstrap aggregation and feature randomness. Empirical studies demonstrate that Random Forest consistently outperforms single Decision Trees, achieving higher accuracy and robustness in classification and regression tasks across diverse datasets.

Limitations and Challenges of Each Approach

Decision Trees often suffer from high variance and overfitting, making them less reliable on complex datasets with noisy information. Random Forests mitigate these issues by averaging multiple trees, but they face challenges in interpretability and require significant computational resources, especially with large numbers of trees. Both approaches may struggle with handling highly imbalanced data and can be sensitive to irrelevant features, impacting overall predictive performance.

Choosing the Right Model for Your AI Project

Choosing the right model for your AI project depends on the complexity and size of your dataset; decision trees offer simplicity and interpretability, making them ideal for smaller, less complex data. Random forests enhance accuracy and robustness by aggregating multiple decision trees, reducing overfitting and improving generalization in large-scale, high-dimensional datasets. Consider computational resources, model interpretability, and prediction accuracy to determine whether a single decision tree or a random forest better suits your project requirements.

Decision Tree vs Random Forest Infographic

techiny.com

techiny.com