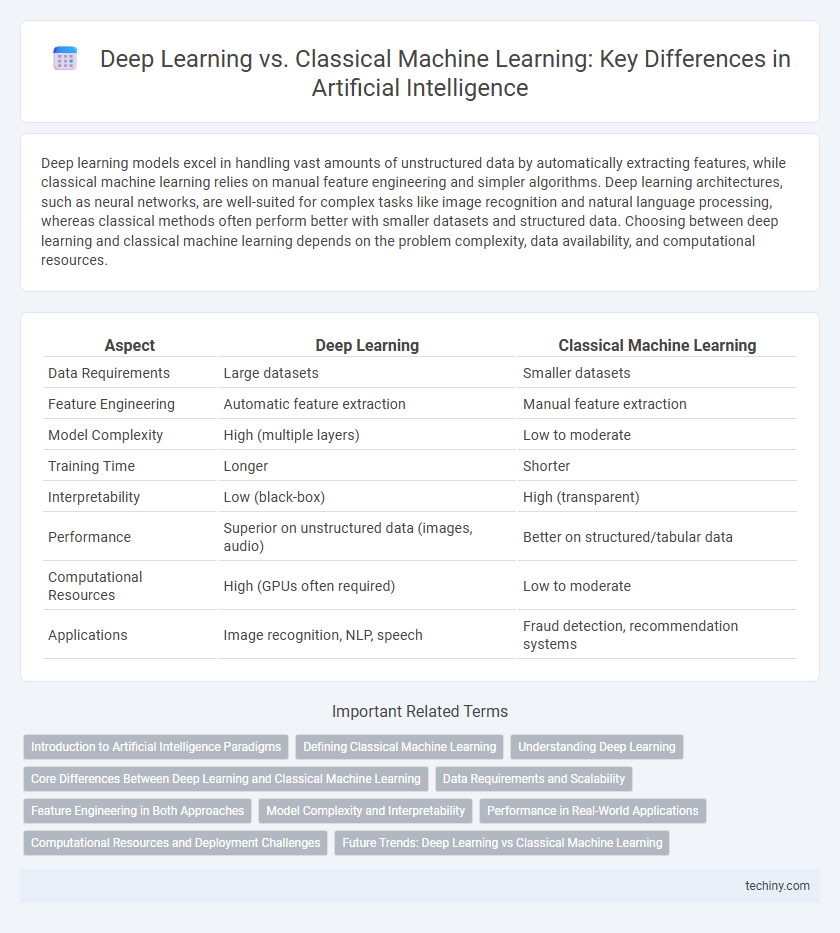

Deep learning models excel at handling large volumes of unstructured data because they learn features automatically, while classical machine learning relies on manual feature engineering and simpler algorithms. Deep neural networks are well suited to complex tasks such as image recognition and natural language processing, whereas classical methods often perform better on smaller, structured datasets. The choice between the two depends on problem complexity, data availability, and computational resources.

Table of Comparison

| Aspect | Deep Learning | Classical Machine Learning |
|---|---|---|
| Data Requirements | Large datasets | Smaller datasets |
| Feature Engineering | Automatic feature extraction | Manual feature extraction |
| Model Complexity | High (multiple layers) | Low to moderate |
| Training Time | Longer | Shorter |
| Interpretability | Low (black-box) | High (transparent) |
| Performance | Superior on unstructured data (images, audio) | Better on structured/tabular data |
| Computational Resources | High (GPUs often required) | Low to moderate |
| Applications | Image recognition, NLP, speech | Fraud detection, recommendation systems |

Introduction to Artificial Intelligence Paradigms

Deep learning and classical machine learning represent two fundamental paradigms within artificial intelligence, differing primarily in their approach to feature extraction and data representation. Classical machine learning relies on handcrafted features and shallow models, making it effective for structured data with limited complexity. Deep learning utilizes multi-layer neural networks to automatically learn hierarchical features from large-scale, unstructured datasets, enabling breakthroughs in image recognition, natural language processing, and speech synthesis.

Defining Classical Machine Learning

Classical machine learning covers algorithms such as decision trees, support vector machines, and linear regression, which learn patterns from predefined features using statistical methods. It relies heavily on feature engineering and domain expertise to extract relevant information before a model is trained. Compared with deep learning, classical machine learning typically requires less computational power and yields models that are easier to interpret, particularly on structured data.
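As a rough illustration of how small a classical model can be, the sketch below fits a one-variable linear regression by ordinary least squares using only the Python standard library. The function name `fit_simple_linear_regression` and the floor-area and price figures are hypothetical and chosen purely for illustration; they do not come from any real dataset.

```python
from statistics import mean


def fit_simple_linear_regression(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    # Sums of cross-deviations and squared deviations; the 1/n factor cancels.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical toy data: the prices lie exactly on the line 2 * area + 10.
floor_area = [50.0, 70.0, 90.0, 110.0]
price = [2 * a + 10 for a in floor_area]

slope, intercept = fit_simple_linear_regression(floor_area, price)
print(f"price = {slope:.2f} * area + {intercept:.2f}")
```

The whole fitted model is two numbers, a slope and an intercept, which is part of why models of this kind are cheap to train and easy to inspect.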

Understanding Deep Learning

Deep learning utilizes multi-layered neural networks to automatically extract features from large datasets, enabling higher accuracy in complex tasks like image recognition and natural language processing. Unlike classical machine learning, which relies heavily on manual feature engineering and simpler algorithms, deep learning models improve performance with increased data and computational power. Understanding deep learning involves grasping concepts such as backpropagation, convolutional layers, and the role of activation functions in enabling nonlinear transformations.
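To make the role of activation functions concrete, here is a minimal sketch of a two-unit hidden layer computing XOR, a function that no purely linear model can represent. The weights are picked by hand for clarity; in practice they would be learned by backpropagation. The names `tiny_network`, `relu`, and `identity` are illustrative and not taken from any library.

```python
def relu(z):
    """Rectified linear unit: passes positive values, clips negatives to zero."""
    return max(0.0, z)


def identity(z):
    """No activation: the layer stays purely linear."""
    return z


def tiny_network(x1, x2, activation):
    # Hidden layer: two units with hand-picked weights and biases.
    h1 = activation(x1 + x2)
    h2 = activation(x1 + x2 - 1.0)
    # Output layer: a linear combination of the hidden units.
    return h1 - 2.0 * h2


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
with_relu = [tiny_network(a, b, relu) for a, b in inputs]
without_activation = [tiny_network(a, b, identity) for a, b in inputs]

print(with_relu)           # [0.0, 1.0, 1.0, 0.0], which is XOR
print(without_activation)  # [2.0, 1.0, 1.0, 0.0], which is just 2 - x1 - x2
```

With the activation removed, the two layers collapse into a single linear function of the inputs, so stacking more layers would add no expressive power.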

Core Differences Between Deep Learning and Classical Machine Learning

Deep learning employs multi-layered neural networks that learn hierarchical feature representations directly from large amounts of unstructured data, which enables strong performance in tasks like image and speech recognition. Classical machine learning relies heavily on manual feature engineering and works efficiently with structured datasets, but it often struggles as data volume and pattern complexity grow. The fundamental difference is that deep learning learns end to end, from raw input to prediction, while classical machine learning depends on features designed separately for each problem.

Data Requirements and Scalability

Deep learning typically requires large amounts of labeled data and high computational power, but in return its performance tends to keep improving as more data becomes available, which makes it a good fit for complex tasks like image and speech recognition. Classical machine learning performs well with smaller datasets and simpler features but often struggles to scale as data complexity increases. Architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) scale well, which enables superior performance in large-scale, data-intensive applications.

Feature Engineering in Both Approaches

Deep learning automates feature engineering by using multi-layer neural networks to extract hierarchical representations from raw data, significantly reducing the need for manual intervention. Classical machine learning relies heavily on handcrafted features designed through domain expertise, which can limit model adaptability and performance on complex datasets. The shift towards deep learning emphasizes end-to-end learning and feature discovery, which can improve accuracy and scalability in tasks like image recognition and natural language processing.
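The sketch below shows what handcrafted features might look like in the classical workflow: a raw one-dimensional signal is reduced to a few summary statistics chosen by a person, and only those statistics are passed to the model. The feature choices (mean, standard deviation, zero crossings) and the function name `handcrafted_features` are assumptions made for illustration, not a recommended feature set.

```python
from statistics import mean, pstdev


def handcrafted_features(signal):
    """Summarise a raw 1-D signal with three manually chosen features."""
    # Count sign changes between consecutive samples.
    zero_crossings = sum(
        1 for a, b in zip(signal, signal[1:]) if (a < 0) != (b < 0)
    )
    return {
        "mean": mean(signal),
        "std": pstdev(signal),  # population standard deviation
        "zero_crossings": zero_crossings,
    }


# Hypothetical raw input: a signal that alternates between +1 and -1.
raw = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0]
features = handcrafted_features(raw)
print(features)
```

A deep model would instead receive `raw` directly and learn its own intermediate representations, which removes this manual step at the cost of needing more data and compute.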

Model Complexity and Interpretability

Deep learning models are typically more complex, stacking multiple layers of nonlinear transformations that let them capture intricate patterns in large datasets, often at the cost of interpretability. Classical machine learning algorithms, such as decision trees and linear regression, have simpler structures that are easier to interpret but may struggle with high-dimensional or unstructured data. Balancing model complexity against interpretability is crucial when choosing between deep learning and classical approaches for a specific application.
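As an illustration of why simple classical models are considered transparent, the following sketch fits a one-level decision tree (a decision stump) and prints the rule it learned. The transaction amounts and fraud labels are hypothetical toy values, and `fit_stump` is an illustrative helper rather than a library function.

```python
def fit_stump(values, labels):
    """Find the threshold on one feature that minimises misclassifications.

    The stump predicts 1 when value > threshold and 0 otherwise.
    """
    candidates = sorted(set(values))
    best_threshold, best_errors = None, len(values) + 1
    # Try the midpoint between each pair of neighbouring feature values.
    for lo, hi in zip(candidates, candidates[1:]):
        threshold = (lo + hi) / 2
        errors = sum((v > threshold) != bool(y) for v, y in zip(values, labels))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold, best_errors


# Hypothetical toy data: transaction amounts with fraud labels.
amounts = [12, 30, 45, 800, 950, 1200]
is_fraud = [0, 0, 0, 1, 1, 1]

threshold, errors = fit_stump(amounts, is_fraud)
rule = f"flag as fraud if amount > {threshold}"
print(rule, f"({errors} training errors)")
```

The entire model is a single human-readable rule, whereas the behaviour of a deep network is spread across many weights that have no direct individual meaning.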

Performance in Real-World Applications

Deep learning generally outperforms classical machine learning in complex real-world applications built on unstructured data, such as image recognition, natural language processing, and autonomous driving, because it can automatically extract hierarchical features from large datasets. Classical machine learning methods like decision trees and support vector machines often require manual feature engineering, which limits their scalability and accuracy on high-dimensional or unstructured data, although they often remain the better choice for structured, tabular data. The advantage of deep neural networks is most evident in benchmarks involving very large datasets, where end-to-end learning tends to deliver more robust and precise results.

Computational Resources and Deployment Challenges

Deep learning models require significantly more computational resources because of their complex architectures and large datasets, and efficient training often calls for GPUs or TPUs. Classical machine learning algorithms typically run effectively on standard CPUs with less memory. Deploying deep learning solutions brings challenges such as higher latency, limited interpretability, and increased infrastructure costs, while classical machine learning models benefit from simpler integration and faster inference on edge devices. These resource demands and deployment complexities often make deep learning less suitable than classical approaches for real-time or resource-constrained environments.
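A back-of-the-envelope way to see the resource gap is to count parameters. The sketch below compares a single linear layer with a small fully connected network, assuming 784 inputs, 10 outputs, two hidden layers of 512 units, and 32-bit floats. All of these sizes are illustrative assumptions, and the estimate covers parameter storage only, not activations, gradients, or optimizer state.

```python
def dense_parameter_count(layer_sizes):
    """Weights plus biases for a fully connected network."""
    return sum(
        n_in * n_out + n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


BYTES_PER_FLOAT32 = 4

# Illustrative sizes: 784 inputs and 10 outputs in both cases.
linear_model = dense_parameter_count([784, 10])
deep_model = dense_parameter_count([784, 512, 512, 10])

for name, count in [("linear", linear_model), ("deep", deep_model)]:
    megabytes = count * BYTES_PER_FLOAT32 / 1e6
    print(f"{name}: {count} parameters, about {megabytes:.2f} MB")
```

Even this modest network has roughly 85 times as many parameters as the linear model, and production-scale architectures are far larger still.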

Future Trends: Deep Learning vs Classical Machine Learning

Current trends suggest that deep learning will continue to lead in areas of artificial intelligence built on unstructured data, driven by ongoing advances in neural network architectures. Classical machine learning, with its reliance on feature engineering and simpler algorithms, is likely to remain the preferred choice in scenarios that require interpretability and low computational cost. Hybrid models that combine the representational power of deep learning with the efficiency of classical methods are emerging as a promising direction for scalable, transparent AI solutions.

Deep Learning vs Classical Machine Learning Infographic

techiny.com