Diffusion models generate high-quality images by iteratively refining noise through a gradual denoising process, offering improved stability and diversity compared to GANs. GANs rely on adversarial training between generator and discriminator networks, often facing challenges like mode collapse and training instability. The diffusion approach excels in producing more realistic and varied outputs, making it a compelling choice for image synthesis tasks in artificial intelligence.

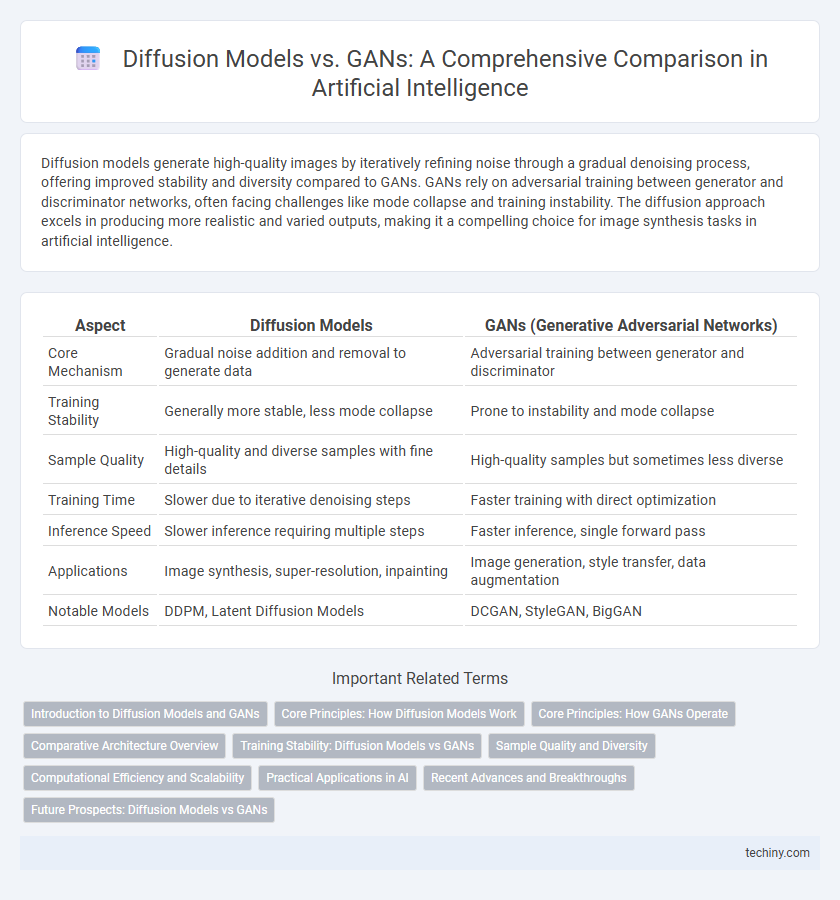

Table of Comparison

| Aspect | Diffusion Models | GANs (Generative Adversarial Networks) |

|---|---|---|

| Core Mechanism | Gradual noise addition and removal to generate data | Adversarial training between generator and discriminator |

| Training Stability | Generally more stable, less mode collapse | Prone to instability and mode collapse |

| Sample Quality | High-quality and diverse samples with fine details | High-quality samples but sometimes less diverse |

| Training Time | Slower due to iterative denoising steps | Faster training with direct optimization |

| Inference Speed | Slower inference requiring multiple steps | Faster inference, single forward pass |

| Applications | Image synthesis, super-resolution, inpainting | Image generation, style transfer, data augmentation |

| Notable Models | DDPM, Latent Diffusion Models | DCGAN, StyleGAN, BigGAN |

Introduction to Diffusion Models and GANs

Diffusion Models generate data by iteratively refining random noise into coherent outputs, leveraging a forward and reverse process to model complex distributions. Generative Adversarial Networks (GANs) consist of a generator and a discriminator engaged in a minimax game, producing realistic data through adversarial training. Both approaches excel in high-quality image synthesis but differ fundamentally in their training dynamics and noise handling mechanisms.

Core Principles: How Diffusion Models Work

Diffusion models generate images by iteratively denoising data starting from pure noise, using a Markov chain to reverse a gradual noising process applied during training. Each step predicts the noise component and refines the data distribution, enabling high-fidelity sample synthesis and stable convergence. Unlike GANs, which rely on adversarial training between a generator and discriminator, diffusion models optimize a variational lower bound of data likelihood, resulting in improved training stability and diversity in output.

Core Principles: How GANs Operate

Generative Adversarial Networks (GANs) function through a dual-model architecture consisting of a generator and a discriminator, which are trained simultaneously in a competitive process. The generator creates synthetic data samples aiming to mimic real data distributions, while the discriminator evaluates and distinguishes between real and generated samples, providing feedback that refines the generator's output. This adversarial training paradigm enables GANs to capture complex data distributions, fostering realistic image synthesis and high-fidelity generative tasks.

Comparative Architecture Overview

Diffusion models employ a sequential denoising process that gradually transforms random noise into data, leveraging a Markov chain to generate high-fidelity samples with improved stability compared to GANs. GANs consist of two adversarial networks, a generator and a discriminator, that compete to produce realistic outputs but often face mode collapse and training instability. Diffusion models feature more complex iterative sampling with slower inference, whereas GANs enable faster generation but require careful balancing of competing networks during training.

Training Stability: Diffusion Models vs GANs

Diffusion models exhibit greater training stability compared to GANs by avoiding adversarial training pitfalls such as mode collapse and oscillations. The iterative denoising process in diffusion models enables more consistent convergence, while GANs often require careful balance between generator and discriminator to prevent instability. This inherent stability in diffusion models leads to more reliable generation quality across diverse datasets.

Sample Quality and Diversity

Diffusion Models generate samples with higher diversity and more detailed textures compared to GANs, which often excel in producing sharper images but can suffer from mode collapse, limiting sample variety. Research shows that Diffusion Models achieve better log-likelihood scores and outperform GANs on metrics like FID and IS, highlighting their robustness in capturing complex data distributions. Advances in model architectures and training strategies for Diffusion Models continue to enhance sample fidelity without compromising diversity, making them a strong alternative to GANs for generative tasks.

Computational Efficiency and Scalability

Diffusion models exhibit higher computational efficiency in training due to their iterative denoising process, which can be parallelized effectively across GPUs, whereas GANs often require complex adversarial training that is less stable and more resource-intensive. Scalability in diffusion models is enhanced by their ability to generate high-quality samples with increased resolution through controlled diffusion steps, while GANs struggle with mode collapse and training instability as model size grows. Recent advancements in guided diffusion techniques further optimize resource usage, making diffusion models more suitable for large-scale, high-fidelity image synthesis compared to traditional GAN architectures.

Practical Applications in AI

Diffusion models excel in generating high-quality images by progressively refining noise, making them ideal for applications like medical imaging and detailed artistic creation. GANs (Generative Adversarial Networks) are widely used in real-time video generation, style transfer, and data augmentation due to their faster convergence and ability to produce sharp images. Both models enhance AI capabilities, with diffusion models preferred for precision tasks and GANs favored for speed-sensitive applications.

Recent Advances and Breakthroughs

Diffusion models have recently surpassed GANs in image synthesis quality due to their improved training stability and ability to generate diverse, high-fidelity samples. Breakthroughs like improved noise scheduling and scalable architectures have enhanced diffusion models' performance in tasks such as text-to-image generation and super-resolution. Meanwhile, advancements in GANs focus on network architecture optimization and loss function refinement but often struggle to match the robustness and versatility demonstrated by diffusion-based approaches.

Future Prospects: Diffusion Models vs GANs

Diffusion models demonstrate strong potential for future advancements in generating high-fidelity images with greater stability compared to GANs, which often suffer from mode collapse and training instability. Recent research indicates that diffusion models' probabilistic framework enables better diversity and control over outputs, making them ideal for applications in creative industries and medical imaging. GANs remain valuable for real-time image synthesis due to faster generation speeds, but diffusion models are poised to dominate with ongoing improvements in efficiency and scalability.

Diffusion Models vs GANs Infographic

techiny.com

techiny.com