Early stopping prevents overfitting by halting training once model performance on a validation set begins to degrade, effectively controlling complexity without altering the model architecture. Regularization techniques, such as L1 or L2 penalties, modify the loss function to constrain model parameters and reduce overfitting by encouraging simpler models. Combining early stopping with regularization often yields more robust AI models, enhancing generalization on unseen data.

Table of Comparison

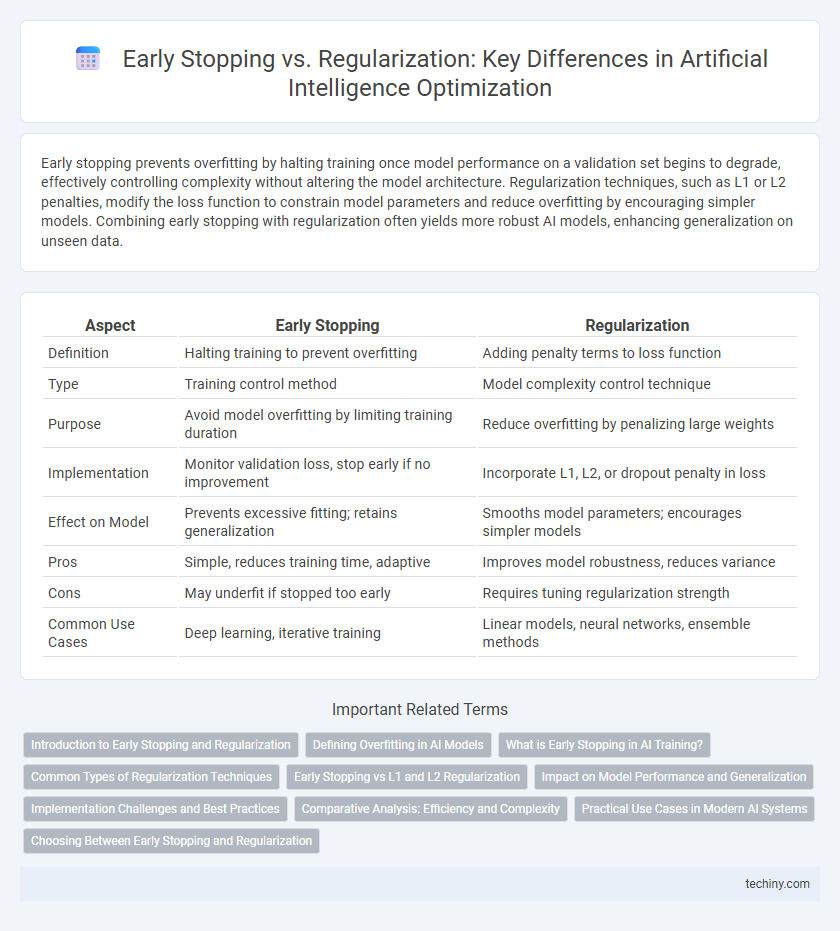

| Aspect | Early Stopping | Regularization |

|---|---|---|

| Definition | Halting training to prevent overfitting | Adding penalty terms to loss function |

| Type | Training control method | Model complexity control technique |

| Purpose | Avoid model overfitting by limiting training duration | Reduce overfitting by penalizing large weights |

| Implementation | Monitor validation loss, stop early if no improvement | Incorporate L1, L2, or dropout penalty in loss |

| Effect on Model | Prevents excessive fitting; retains generalization | Smooths model parameters; encourages simpler models |

| Pros | Simple, reduces training time, adaptive | Improves model robustness, reduces variance |

| Cons | May underfit if stopped too early | Requires tuning regularization strength |

| Common Use Cases | Deep learning, iterative training | Linear models, neural networks, ensemble methods |

Introduction to Early Stopping and Regularization

Early stopping and regularization are essential techniques in artificial intelligence to prevent overfitting during model training. Early stopping monitors model performance on a validation set and halts training once performance degrades, preserving generalization capabilities. Regularization introduces penalties such as L1 or L2 norms to the loss function, constraining model complexity and promoting simpler, more robust solutions.

Defining Overfitting in AI Models

Overfitting in AI models occurs when a model learns not just the underlying patterns but also the noise in the training data, leading to poor generalization on new inputs. Early stopping mitigates overfitting by halting training once performance on a validation set begins to degrade, preventing the model from capturing noise. Regularization techniques, such as L1 and L2 penalties, reduce overfitting by constraining model complexity through added terms in the loss function, encouraging simpler models that generalize better.

What is Early Stopping in AI Training?

Early stopping is a training technique in artificial intelligence that halts the learning process once the model's performance on a validation dataset begins to degrade, preventing overfitting. It monitors the error or loss on validation data after each epoch and stops training when no improvement is observed for a predefined number of iterations. This method effectively balances model complexity and generalization without adding explicit regularization terms to the loss function.

Common Types of Regularization Techniques

Common types of regularization techniques in artificial intelligence include L1 (Lasso) and L2 (Ridge) regularization, which add penalty terms to the loss function to prevent overfitting by constraining model complexity. Dropout is another widely used method that randomly disables neurons during training to improve model generalization. Early stopping differs by halting training when validation performance deteriorates, but regularization inherently modifies the model to enhance stability and reduce variance.

Early Stopping vs L1 and L2 Regularization

Early stopping is a training technique that halts model optimization once performance on a validation set deteriorates, effectively preventing overfitting by limiting training duration. L1 regularization adds a penalty proportional to the absolute value of coefficients, promoting sparsity and feature selection, while L2 regularization penalizes the square of coefficients, encouraging smaller but non-zero weights for improved generalization. Compared to L1 and L2, early stopping directly monitors validation error, making it a dynamic form of regularization that complements or substitutes traditional norm-based penalties in controlling model complexity.

Impact on Model Performance and Generalization

Early stopping prevents overfitting by halting training once validation error starts to increase, effectively controlling model complexity and improving generalization. Regularization techniques like L1 and L2 add penalty terms to the loss function, reducing model variance and enhancing robustness on unseen data. Both methods improve model performance by balancing bias and variance, but early stopping offers a dynamic, data-driven approach while regularization provides explicit complexity control.

Implementation Challenges and Best Practices

Early stopping requires careful monitoring of validation loss to prevent premature termination or prolonged training, complicating implementation in dynamic datasets where validation metrics fluctuate. Regularization methods like L2 or dropout demand fine-tuning hyperparameters to balance bias-variance trade-offs, posing challenges in selecting optimal values without over-penalizing the model. Best practices include combining early stopping with adaptive learning rates and conducting extensive hyperparameter sweeps to ensure robust convergence and generalization.

Comparative Analysis: Efficiency and Complexity

Early stopping reduces overfitting by halting training once validation performance begins to deteriorate, offering computational efficiency without extra hyperparameter tuning. Regularization techniques, such as L1 and L2 penalties, add complexity by modifying the loss function and require careful tuning to balance bias and variance. In terms of efficiency, early stopping is generally faster, while regularization provides more control and robustness, especially in models prone to high variance.

Practical Use Cases in Modern AI Systems

Early stopping effectively prevents overfitting in deep learning models by halting training once validation performance plateaus, making it ideal for resource-constrained environments like mobile AI applications. Regularization techniques such as L1, L2, and dropout enhance model generalization by adding penalties or randomly omitting neurons during training, widely used in complex architectures like convolutional neural networks for image recognition. Combining early stopping with regularization optimizes performance in AI systems deployed for natural language processing, autonomous driving, and recommendation engines.

Choosing Between Early Stopping and Regularization

Choosing between early stopping and regularization depends on the model complexity and training data size; early stopping is effective for preventing overfitting by halting training once validation loss plateaus, while regularization techniques such as L1, L2, or dropout impose penalties that constrain model weights during training. Early stopping requires careful monitoring of validation performance, making it suitable for iterative training processes, whereas regularization integrates directly into the loss function, allowing continuous control over model complexity. Combining both methods often yields better generalization by balancing bias and variance in deep learning models.

Early stopping vs Regularization Infographic

techiny.com

techiny.com