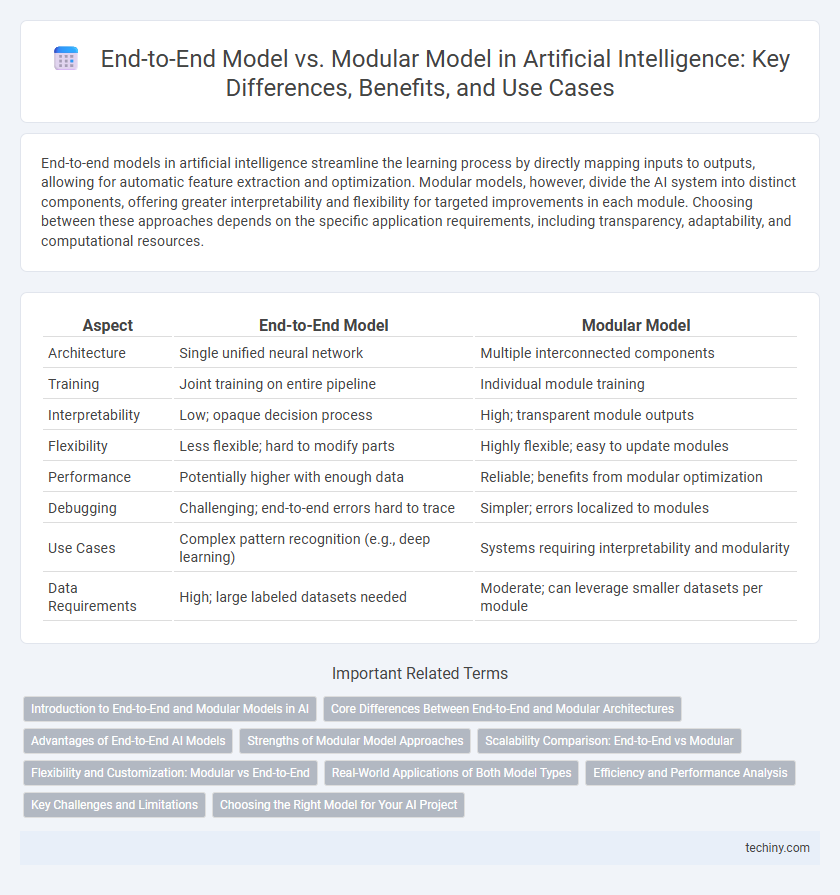

End-to-end models in artificial intelligence streamline the learning process by directly mapping inputs to outputs, allowing for automatic feature extraction and optimization. Modular models, however, divide the AI system into distinct components, offering greater interpretability and flexibility for targeted improvements in each module. Choosing between these approaches depends on the specific application requirements, including transparency, adaptability, and computational resources.

Table of Comparison

| Aspect | End-to-End Model | Modular Model |
|---|---|---|
| Architecture | Single unified neural network | Multiple interconnected components |
| Training | Joint training on entire pipeline | Individual module training |
| Interpretability | Low; opaque decision process | High; transparent module outputs |
| Flexibility | Less flexible; hard to modify parts | Highly flexible; easy to update modules |
| Performance | Potentially higher with enough data | Reliable; each module can be tuned separately |
| Debugging | Challenging; errors are hard to trace to a cause | Simpler; errors localized to modules |
| Use Cases | Complex pattern recognition (e.g., speech or image recognition) | Systems requiring interpretability and modularity |
| Data Requirements | High; large labeled datasets needed | Moderate; can leverage smaller datasets per module |

Introduction to End-to-End and Modular Models in AI

End-to-end models in artificial intelligence process raw data through a single, unified architecture to produce outputs without hand-designed intermediate stages, which simplifies the pipeline in tasks like image recognition and natural language processing. Modular models divide the AI system into distinct components or modules, each specialized for a specific subtask, allowing easier debugging, interpretability, and customization. Choosing between end-to-end and modular approaches depends on factors such as data availability, task complexity, and system transparency requirements.

Core Differences Between End-to-End and Modular Architectures

End-to-end models process raw input data through a single unified architecture, optimizing directly for the desired output and enabling seamless feature learning and representation. Modular models divide tasks into distinct components or modules, each responsible for specific sub-functions, allowing targeted optimization and interpretability. The core difference lies in end-to-end models' holistic learning approach versus modular models' compartmentalized processing and explicit intermediate outputs.
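The contrast can be sketched in a few lines of Python. This is a toy illustration, not a real model: the task (deciding whether a short text is a question) and the names `tokenize`, `extract_features`, `classify`, `modular_predict`, and `end_to_end_predict` are all hypothetical. The modular version returns every intermediate output, while the end-to-end stand-in exposes only input and output, as a single trained network would.

```python
from typing import Dict, List, Tuple

# Hypothetical toy task: decide whether a short text is a question.

def tokenize(text: str) -> List[str]:
    """Module 1: split raw text into lowercase tokens."""
    return text.lower().replace("?", " ?").split()

def extract_features(tokens: List[str]) -> Dict[str, bool]:
    """Module 2: turn tokens into explicit, human-readable features."""
    question_words = {"who", "what", "when", "where", "why", "how"}
    return {
        "starts_with_question_word": bool(tokens) and tokens[0] in question_words,
        "has_question_mark": "?" in tokens,
    }

def classify(features: Dict[str, bool]) -> str:
    """Module 3: map features to a label."""
    return "question" if any(features.values()) else "statement"

def modular_predict(text: str) -> Tuple[str, Dict[str, object]]:
    """Run the modules in order and keep every intermediate output."""
    trace: Dict[str, object] = {}
    tokens = tokenize(text)
    trace["tokens"] = tokens
    features = extract_features(tokens)
    trace["features"] = features
    return classify(features), trace

def end_to_end_predict(text: str) -> str:
    """Stand-in for one learned mapping: callers see only input and output.

    Assumption: a real end-to-end system would be a single trained model;
    the internals here are reused only to keep the sketch runnable.
    """
    return classify(extract_features(tokenize(text)))

label, trace = modular_predict("What time is it?")
print(label)   # question
print(trace)   # tokens and features are available for inspection
print(end_to_end_predict("It is late."))  # statement
```

The `trace` dictionary is what the article calls explicit intermediate outputs: it is the hook that makes per-module inspection possible, and it has no counterpart in the end-to-end call.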

Advantages of End-to-End AI Models

End-to-end AI models streamline the learning process by integrating all components into a single, unified architecture, reducing error propagation across stages. Because all parameters are tuned jointly against the final objective, these models can reach higher accuracy than modular models that rely on separately trained parts, provided enough training data is available. Their ability to learn features from large datasets as a whole makes them well suited to complex AI tasks such as natural language processing and autonomous driving.
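A rough way to see why error propagation matters is to multiply per-stage accuracies. The sketch below assumes a simplified model in which every stage must be correct for the final output to be correct and stage errors are independent; real pipelines rarely satisfy this exactly, and the 0.95 figures are made-up illustration values, not measurements.

```python
import math
from typing import Sequence

def pipeline_accuracy(stage_accuracies: Sequence[float]) -> float:
    """Accuracy of a chain of stages under the independence assumption.

    Assumes the final output is correct only if every stage is correct
    and that stage errors are independent of each other.
    """
    return math.prod(stage_accuracies)

# Hypothetical three-stage pipeline, each stage 95% accurate on its own.
stages = [0.95, 0.95, 0.95]
print(round(pipeline_accuracy(stages), 3))  # 0.857
```

Under these assumptions, three individually strong stages yield a noticeably weaker chain, which is the compounding effect that joint training aims to avoid.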

Strengths of Modular Model Approaches

Modular model approaches in artificial intelligence excel by enabling specialized optimization of individual components, which enhances interpretability and maintainability. Each module can be independently updated or replaced, facilitating scalability and flexibility in complex systems. This decomposition allows for targeted error analysis, and because each module can be trained on its own dataset, it can make training more efficient and the overall system more robust.
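Targeted error analysis can be as simple as scoring each module against its own test cases. The following is a minimal sketch with a hypothetical two-stage pipeline (`normalize` and `lookup_intent`) and made-up test data; it shows how a per-module report points to the stage that needs work.

```python
from typing import Callable, Dict, List, Tuple

def stage_accuracy(stage: Callable[[str], str],
                   examples: List[Tuple[str, str]]) -> float:
    """Fraction of (input, expected) pairs that a single stage gets right."""
    correct = sum(1 for x, expected in examples if stage(x) == expected)
    return correct / len(examples)

# Hypothetical stage 1: clean up raw text.
def normalize(text: str) -> str:
    return text.strip().lower()

# Hypothetical stage 2: map cleaned text to an intent label.
def lookup_intent(text: str) -> str:
    table = {"hi": "greeting", "bye": "farewell"}
    return table.get(text, "unknown")

# Made-up test cases, one set per module.
normalize_cases = [("  Hi ", "hi"), ("BYE", "bye")]
lookup_cases = [("hi", "greeting"), ("bye", "farewell"), ("hello", "greeting")]

report: Dict[str, float] = {
    "normalize": stage_accuracy(normalize, normalize_cases),
    "lookup_intent": stage_accuracy(lookup_intent, lookup_cases),
}
weakest = min(report, key=report.get)
print(report)
print(weakest)  # lookup_intent
```

Here the report localizes the problem to `lookup_intent` (it does not know "hello"), so only that module needs to be fixed or retrained.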

Scalability Comparison: End-to-End vs Modular

End-to-end models scale mainly with data and compute: because they learn representations directly from data, they need little manual feature engineering, which suits applications with abundant labeled data and computational resources. Modular models scale through component-level upgrades and easier error isolation; a single module can be improved without retraining the entire system, which makes them preferable for complex systems that need adaptability and interpretability. Which approach scales better depends on the trade-off between the simplicity of one integrated model and the flexibility of separate modules in a given deployment environment.

Flexibility and Customization: Modular vs End-to-End

Modular AI models offer superior flexibility and customization by allowing individual components to be independently developed, updated, and optimized, enabling tailored solutions for specific tasks. End-to-end models provide streamlined training processes but offer little granular control over individual processing stages, since those stages are not separated inside the network. This distinction makes modular architectures preferable for applications requiring adaptive workflows and component interoperability.
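Independent updates depend on modules agreeing on an interface. The sketch below uses a hypothetical `Tokenizer` interface and a small `Pipeline` class to show one component being swapped without touching the rest; all names are illustrative assumptions and do not come from any particular framework.

```python
from typing import List, Protocol

class Tokenizer(Protocol):
    """Hypothetical interface: anything that turns text into tokens."""
    def __call__(self, text: str) -> List[str]: ...

def whitespace_tokenizer(text: str) -> List[str]:
    """Split on whitespace."""
    return text.split()

def char_tokenizer(text: str) -> List[str]:
    """Split into single characters, ignoring spaces."""
    return list(text.replace(" ", ""))

class Pipeline:
    """Downstream code depends only on the Tokenizer interface."""
    def __init__(self, tokenizer: Tokenizer) -> None:
        self.tokenizer = tokenizer

    def count_tokens(self, text: str) -> int:
        return len(self.tokenizer(text))

pipeline = Pipeline(whitespace_tokenizer)
print(pipeline.count_tokens("end to end"))  # 3

# Swap one module; the rest of the pipeline is unchanged.
pipeline.tokenizer = char_tokenizer
print(pipeline.count_tokens("end to end"))  # 8
```

An end-to-end model has no equivalent seam: changing how the input is handled generally means retraining the whole network.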

Real-World Applications of Both Model Types

End-to-end models are widely used in real-world applications like autonomous driving and voice recognition, where directly mapping inputs to outputs removes hand-built intermediate stages and simplifies the pipeline. Modular models offer advantages in healthcare diagnostics and financial fraud detection by allowing individual component optimization, easier debugging, and better interpretability. End-to-end systems fit environments with abundant training data where overall accuracy matters most, while modular systems suit scenarios demanding transparency and component-specific improvements.

Efficiency and Performance Analysis

End-to-end models integrate all processing stages into a single system, which can reduce latency and simplify the training pipeline, whereas modular models compartmentalize tasks, which can enhance interpretability and targeted optimization. End-to-end approaches typically excel in complex tasks such as natural language understanding and image recognition because features are learned jointly for the final objective, while modular systems may perform better in scenarios requiring adaptability and precise control over individual components. Choosing between these architectures depends on the demands of the specific application and means balancing computational efficiency, scalability, and fine-grained performance tuning.

Key Challenges and Limitations

End-to-end AI models face key challenges in interpretability and require large amounts of labeled data to generalize effectively. Modular models offer improved transparency and easier debugging but add integration complexity: interfaces between modules must be designed and maintained, errors made by one module are passed on to the next, and separately optimized modules may not be jointly optimal for the final task. Neither approach fully resolves the tension among model complexity, data efficiency, and system maintainability.

Choosing the Right Model for Your AI Project

Selecting the appropriate AI model hinges on project complexity and data availability; end-to-end models excel in processing large datasets with streamlined workflows, while modular models offer flexibility and interpretability by breaking tasks into manageable components. Industries requiring transparency and easier debugging often prefer modular approaches, whereas applications demanding rapid pattern recognition and learning leverage end-to-end architectures. Weighing scalability, maintainability, and domain-specific requirements before committing to an architecture helps avoid costly redesigns later in the project.
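The selection criteria above can be written down as a rule-of-thumb helper. This is only a sketch of the article's reasoning: the `ProjectProfile` fields and the order of the checks are assumptions made for illustration, and a real decision would weigh many more factors.

```python
from dataclasses import dataclass

@dataclass
class ProjectProfile:
    """Hypothetical summary of a project's constraints."""
    large_labeled_dataset: bool      # abundant labeled training data available
    needs_interpretability: bool     # transparency or auditability is required
    needs_independent_updates: bool  # parts must be changed without full retraining

def suggest_architecture(profile: ProjectProfile) -> str:
    """Return 'modular' or 'end-to-end' using the article's rules of thumb."""
    # Transparency and component-level updates favor a modular design.
    if profile.needs_interpretability or profile.needs_independent_updates:
        return "modular"
    # With plenty of labeled data and no such constraints, joint training pays off.
    if profile.large_labeled_dataset:
        return "end-to-end"
    # With limited data, smaller per-module datasets are easier to obtain.
    return "modular"

print(suggest_architecture(ProjectProfile(True, False, False)))  # end-to-end
print(suggest_architecture(ProjectProfile(True, True, False)))   # modular
```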

techiny.com