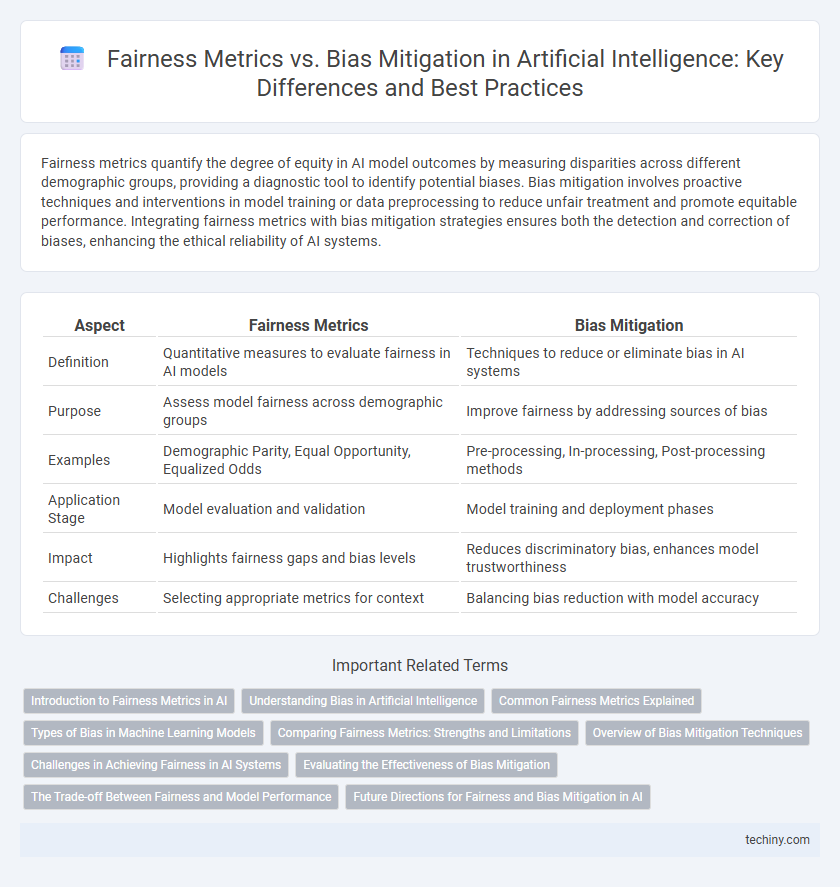

Fairness metrics quantify how equitable an AI model's outcomes are by measuring disparities across demographic groups, which makes them a diagnostic tool for identifying potential bias. Bias mitigation covers the techniques applied during data preprocessing, model training, or post-processing of predictions to reduce unfair treatment and promote equitable performance. Used together, fairness metrics detect bias and mitigation strategies correct it, which makes AI systems more ethically reliable.

Table of Comparison

| Aspect | Fairness Metrics | Bias Mitigation |
|---|---|---|
| Definition | Quantitative measures to evaluate fairness in AI models | Techniques to reduce or eliminate bias in AI systems |
| Purpose | Assess model fairness across demographic groups | Improve fairness by addressing sources of bias |
| Examples | Demographic Parity, Equal Opportunity, Equalized Odds | Pre-processing, In-processing, Post-processing methods |
| Application Stage | Model evaluation and validation | Model training and deployment phases |
| Impact | Highlights fairness gaps and bias levels | Reduces discriminatory bias, enhances model trustworthiness |
| Challenges | Selecting appropriate metrics for context | Balancing bias reduction with model accuracy |

Introduction to Fairness Metrics in AI

Fairness metrics in AI quantify how algorithms treat different demographic groups; common measures include demographic parity, equalized odds, and predictive parity. These metrics guide bias mitigation by highlighting disparities in model predictions or error rates across protected attributes such as race, gender, or age. Evaluating them lets developers identify, monitor, and reduce discriminatory effects, which helps align AI systems with ethical standards and legal requirements.

Understanding Bias in Artificial Intelligence

Understanding bias in artificial intelligence starts with fairness metrics such as demographic parity, equal opportunity, and predictive parity, which reveal disparities in model outcomes across groups. Bias mitigation techniques such as reweighing, adversarial debiasing, and data augmentation then reduce unfair treatment and make decisions more equitable. Continuous monitoring and validation against these metrics supports ethical deployment and compliance with regulatory standards.

Common Fairness Metrics Explained

Common fairness metrics in artificial intelligence include statistical parity (another name for demographic parity), equal opportunity, and disparate impact, each quantifying a different aspect of bias in model predictions. Statistical parity compares selection rates, the share of favorable predictions, across groups, while equal opportunity compares true positive rates. Disparate impact is the ratio of favorable-outcome rates between groups; a ratio below 0.8 (the four-fifths rule) is a common threshold for flagging potential bias and prompting mitigation.
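As a rough illustration, these three metrics can be computed directly from a model's predictions. The sketch below assumes binary labels and predictions (1 = favorable outcome) and a single protected attribute with two groups. The function names, group labels, and toy data are hypothetical and not taken from any particular fairness library, and the code assumes each group contains at least one truly positive instance and that the privileged group's selection rate is not zero.

```python
def selection_rate(y_pred):
    """Share of instances that received the favorable prediction (1)."""
    return sum(y_pred) / len(y_pred)


def true_positive_rate(y_true, y_pred):
    """Share of truly positive instances that were predicted positive."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(preds_for_positives) / len(preds_for_positives)


def fairness_report(y_true, y_pred, groups, privileged, unprivileged):
    """Compare the unprivileged group against the privileged group."""

    def subset(values, group):
        # Keep only the entries that belong to the requested group.
        return [v for v, g in zip(values, groups) if g == group]

    sr_priv = selection_rate(subset(y_pred, privileged))
    sr_unpriv = selection_rate(subset(y_pred, unprivileged))
    tpr_priv = true_positive_rate(
        subset(y_true, privileged), subset(y_pred, privileged)
    )
    tpr_unpriv = true_positive_rate(
        subset(y_true, unprivileged), subset(y_pred, unprivileged)
    )
    return {
        # Difference in selection rates; 0 means statistical parity.
        "statistical_parity_difference": sr_unpriv - sr_priv,
        # Ratio of selection rates; 1 means no disparate impact.
        "disparate_impact_ratio": sr_unpriv / sr_priv,
        # Difference in true positive rates; 0 means equal opportunity.
        "equal_opportunity_difference": tpr_unpriv - tpr_priv,
    }


# Hypothetical toy data: five instances in group "a", five in group "b".
groups = ["a"] * 5 + ["b"] * 5
y_true = [1, 1, 1, 0, 0, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]

for name, value in fairness_report(y_true, y_pred, groups, "a", "b").items():
    print(f"{name}: {value:.3f}")
```

On this toy data the selection rates are 0.6 for group "a" and 0.2 for group "b", so the disparate impact ratio is about 0.33, well below the 0.8 cutoff often used as a rule of thumb.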

Types of Bias in Machine Learning Models

Machine learning models can exhibit several types of bias, including sampling bias, measurement bias, and algorithmic bias, and each affects fairness metrics differently. Metrics such as demographic parity, equalized odds, and predictive parity quantify disparities in predictions and error rates across protected groups and so guide mitigation. Effective mitigation depends on identifying which type of bias is influencing predictions, so that a matching remedy can be applied: re-sampling, adversarial debiasing, or fairness-aware learning algorithms.

Comparing Fairness Metrics: Strengths and Limitations

Fairness metrics such as demographic parity, equalized odds, and predictive parity each capture a different aspect of algorithmic fairness, so each is good at exposing a specific kind of bias. Demographic parity requires equal positive prediction rates across groups but ignores differences in base rates. Equalized odds compares true positive and false positive rates across groups, but it is hard to satisfy together with other metrics. No single metric fully captures fairness, so the choice must be based on the application context and its ethical considerations.

Overview of Bias Mitigation Techniques

Bias mitigation techniques in artificial intelligence encompass pre-processing, in-processing, and post-processing methods designed to reduce unfairness in model outcomes. Pre-processing approaches adjust training data to balance representation, in-processing techniques modify algorithms to incorporate fairness constraints during learning, and post-processing methods alter model predictions to correct biased outputs. Assessing fairness metrics such as demographic parity, equalized odds, and predictive parity guides the selection and evaluation of these bias mitigation strategies.
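To make the pre-processing idea concrete, here is a minimal sketch of reweighing. It assigns each training instance the weight P(group) × P(label) / P(group, label), so that group membership and label become statistically independent in the weighted data. The function names and toy data are hypothetical, and the weights would still need to be passed to a learner that accepts per-sample weights.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Return one weight per instance: P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of favorable labels (1) within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total


# Hypothetical toy data: group "a" has a 75% favorable-label rate,
# group "b" only 25%.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for g in ("a", "b"):
    rate = weighted_positive_rate(groups, labels, weights, g)
    print(f"group {g}: weighted favorable rate = {rate:.2f}")
```

After reweighing, both groups have the same weighted favorable-label rate (0.50 on this toy data), which is the overall rate in the training set. Over-represented combinations are down-weighted and under-represented ones are up-weighted, without changing any labels.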

Challenges in Achieving Fairness in AI Systems

Fairness metrics such as demographic parity, equalized odds, and predictive parity provide quantifiable measures of bias in AI systems, yet they often conflict: when base rates differ between groups, an imperfect classifier generally cannot satisfy equalized odds and predictive parity at the same time. Bias mitigation techniques like reweighing, adversarial debiasing, and fair representation learning face their own challenges, including preserving model accuracy and addressing intersectional and context-dependent biases. The dynamic nature of societal values and the complexity of real-world data further complicate the achievement of truly fair AI systems.
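The conflict between metrics can be seen with simple arithmetic. The sketch below assumes two hypothetical groups with different base rates (50% and 20%) and a classifier that satisfies equalized odds, meaning it has the same true positive rate and false positive rate in both groups. It then applies Bayes' rule to compute each group's positive predictive value, the quantity that predictive parity compares. All numbers and names are illustrative.

```python
def positive_predictive_value(base_rate, tpr, fpr):
    """Share of positive predictions that are correct, via Bayes' rule."""
    true_positives = tpr * base_rate          # P(pred = 1, y = 1)
    false_positives = fpr * (1 - base_rate)   # P(pred = 1, y = 0)
    return true_positives / (true_positives + false_positives)


# Equalized odds holds: identical error rates for both groups (assumed values).
TPR, FPR = 0.8, 0.2

# Hypothetical base rates: share of truly positive instances in each group.
base_rates = {"group_a": 0.5, "group_b": 0.2}

for group, base_rate in base_rates.items():
    ppv = positive_predictive_value(base_rate, TPR, FPR)
    print(f"{group}: base rate = {base_rate:.2f}, PPV = {ppv:.2f}")
```

With identical error rates, a positive prediction is correct about 80% of the time for the first group but only about 50% of the time for the second, so predictive parity fails even though equalized odds holds.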

Evaluating the Effectiveness of Bias Mitigation

Evaluating bias mitigation relies on fairness metrics such as demographic parity, equalized odds, and the disparate impact ratio, measured before and after mitigation to quantify the reduction in algorithmic bias. These metrics let practitioners check whether a technique yields more equitable outcomes across demographic groups without an unacceptable loss in overall model performance. Continuous monitoring with the same indicators helps confirm that mitigation produces lasting improvements in fairness and ethical compliance.
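One simple way to run such an evaluation is to compute the same metrics on predictions made before and after mitigation and compare them side by side. The sketch below does this for accuracy and the selection-rate gap, which is the demographic parity difference. The function names and both sets of predictions are hypothetical toy values, not results from a real model.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def selection_rate_gap(y_pred, groups):
    """Largest difference in favorable-prediction rate between groups."""
    rates = []
    for group in sorted(set(groups)):
        preds = [p for p, g in zip(y_pred, groups) if g == group]
        rates.append(sum(preds) / len(preds))
    return max(rates) - min(rates)


def compare_mitigation(y_true, pred_before, pred_after, groups):
    """Report accuracy and the selection-rate gap before and after mitigation."""
    return {
        "accuracy_before": accuracy(y_true, pred_before),
        "accuracy_after": accuracy(y_true, pred_after),
        "gap_before": selection_rate_gap(pred_before, groups),
        "gap_after": selection_rate_gap(pred_after, groups),
    }


# Hypothetical predictions from a baseline model and a mitigated model.
groups = ["a"] * 4 + ["b"] * 4
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
pred_before = [1, 1, 1, 0, 1, 0, 0, 0]
pred_after = [1, 1, 0, 0, 1, 0, 1, 0]

for name, value in compare_mitigation(y_true, pred_before, pred_after, groups).items():
    print(f"{name}: {value:.2f}")
```

In this toy case the selection-rate gap drops from 0.50 to 0.00 while accuracy stays at 0.75. Real mitigation rarely looks this clean, which is why both numbers are reported together.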

The Trade-off Between Fairness and Model Performance

Fairness metrics such as demographic parity, equal opportunity, and disparate impact quantify bias in AI models, but improving them often comes at a cost: enforcing fairness can reduce accuracy or predictive power. Bias mitigation techniques, whether pre-processing, in-processing, or post-processing, aim to balance this trade-off by reducing disparities between groups while keeping performance at an acceptable level. Navigating the fairness-performance trade-off remains a central challenge in deploying AI systems that are both ethical and effective across diverse populations.
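The trade-off can be illustrated with a small threshold sweep. The sketch below uses group-specific decision thresholds, a simple form of post-processing, on hypothetical model scores. Group "a" keeps a fixed threshold while the threshold for group "b" is lowered step by step, and accuracy and the selection-rate gap are recorded at each step. The data, group names, and function names are assumptions made for illustration.

```python
def predict(scores, groups, thresholds):
    """Apply a per-group decision threshold to each score."""
    return [int(s >= thresholds[g]) for s, g in zip(scores, groups)]


def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def selection_rate_gap(y_pred, groups):
    """Largest difference in favorable-prediction rate between groups."""
    rates = []
    for group in sorted(set(groups)):
        preds = [p for p, g in zip(y_pred, groups) if g == group]
        rates.append(sum(preds) / len(preds))
    return max(rates) - min(rates)


def sweep(y_true, scores, groups, fixed_thresholds, swept_group, candidates):
    """Vary one group's threshold; return (threshold, accuracy, gap) tuples."""
    results = []
    for threshold in candidates:
        thresholds = dict(fixed_thresholds)
        thresholds[swept_group] = threshold
        y_pred = predict(scores, groups, thresholds)
        results.append(
            (threshold, accuracy(y_true, y_pred), selection_rate_gap(y_pred, groups))
        )
    return results


# Hypothetical scores; group "b" has a lower base rate than group "a".
groups = ["a"] * 4 + ["b"] * 4
scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.45, 0.4, 0.2]
y_true = [1, 1, 0, 0, 1, 0, 0, 0]

for thr, acc, gap in sweep(y_true, scores, groups, {"a": 0.5}, "b", [0.5, 0.42, 0.35]):
    print(f"threshold_b={thr:.2f}  accuracy={acc:.3f}  gap={gap:.2f}")
```

On this toy data, lowering group "b"'s threshold closes the selection-rate gap from 0.50 to 0.00, but accuracy falls from 0.875 to 0.625 because the two groups have different base rates.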

Future Directions for Fairness and Bias Mitigation in AI

Emerging fairness metrics aim to capture more nuanced biases in AI systems and enable more precise mitigation. Future work emphasizes combining adaptive fairness assessment with real-time bias correction to improve algorithmic transparency and accountability. Interdisciplinary approaches that draw on ethical frameworks and domain-specific knowledge will support the development of robust, fair AI models across diverse applications.

Fairness Metrics vs Bias Mitigation Infographic

techiny.com