Feedforward Neural Networks process data in one direction, from input to output, which makes them well suited to tasks on static data such as image recognition. Recurrent Neural Networks incorporate feedback loops that let them retain information across a sequence, which is essential for time-dependent tasks such as language modeling and speech recognition. Understanding these structural differences helps in choosing the right architecture for a given problem domain.

Table of Comparison

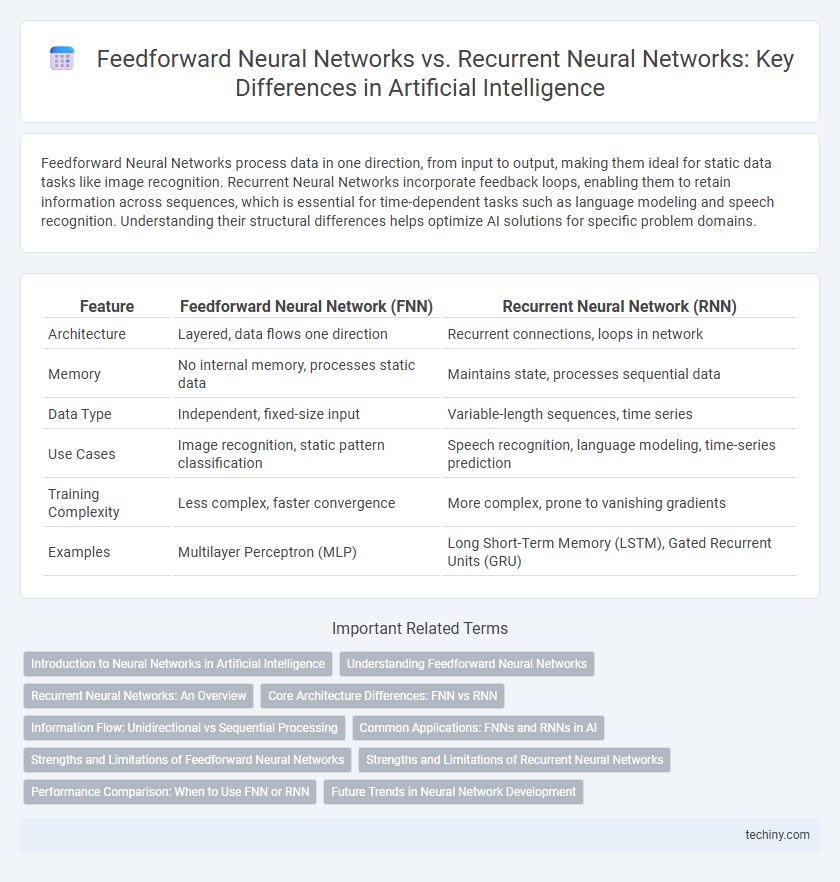

| Feature | Feedforward Neural Network (FNN) | Recurrent Neural Network (RNN) |
|---|---|---|
| Architecture | Layered, data flows in one direction | Recurrent connections, loops in network |
| Memory | No internal memory, processes static data | Maintains state, processes sequential data |
| Data Type | Independent, fixed-size input | Variable-length sequences, time series |
| Use Cases | Image recognition, static pattern classification | Speech recognition, language modeling, time-series prediction |
| Training Complexity | Less complex, faster convergence | More complex, prone to vanishing gradients |
| Examples | Multilayer Perceptron (MLP) | Long Short-Term Memory (LSTM), Gated Recurrent Units (GRU) |

Introduction to Neural Networks in Artificial Intelligence

Feedforward Neural Networks (FNNs) process data in a single direction from input to output, making them suitable for static pattern recognition and function approximation in Artificial Intelligence. Recurrent Neural Networks (RNNs) incorporate feedback loops, enabling them to maintain temporal context and excel in sequence prediction tasks such as language modeling and time-series analysis. Understanding the structural differences between FNNs and RNNs is fundamental for selecting the appropriate neural architecture in AI applications involving static or sequential data.

Understanding Feedforward Neural Networks

Feedforward Neural Networks (FNNs) consist of layers through which information moves strictly in one direction, from input to output, with no loops, which makes them well suited to processing static data. They excel at pattern recognition tasks such as image classification thanks to their straightforward architecture and efficient training with standard backpropagation. Unlike Recurrent Neural Networks (RNNs), FNNs have no temporal memory: this makes them less suitable for sequential data but highly effective when the mapping from input to output does not depend on time or order.
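
To make the one-directional flow concrete, here is a minimal pure-Python sketch of an FNN forward pass. The 2-3-1 layer sizes, the weights, and the helper names (`dense`, `mlp_forward`) are hypothetical and chosen only for illustration; a real network would learn its weights with backpropagation and would usually rely on a numerical library.

```python
def dense(x, weights, bias, activation):
    """One fully connected layer: activation(W x + b), computed row by row."""
    return [activation(sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i)
            for row, b_i in zip(weights, bias)]

def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def mlp_forward(x, layers):
    """Pass x through each (weights, bias, activation) layer in order.

    Nothing is stored between calls, so the output depends only on x.
    """
    expected = len(layers[0][0][0])  # number of inputs the first layer accepts
    if len(x) != expected:
        raise ValueError(f"expected {expected} inputs, got {len(x)}")
    for weights, bias, activation in layers:
        x = dense(x, weights, bias, activation)
    return x

# Hypothetical 2-3-1 network with hand-picked (not learned) weights.
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, 0.0], relu),
    ([[1.0, 1.0, 1.0]], [0.0], identity),
]

print(mlp_forward([1.0, 2.0], layers))  # [4.5]
```

Calling `mlp_forward` twice with the same input returns the same output, because no state is carried between calls, and an input of the wrong length is rejected, reflecting the fixed-size input the network expects.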

Recurrent Neural Networks: An Overview

Recurrent Neural Networks (RNNs) are neural architectures designed to process sequential data by maintaining an internal memory (hidden) state, which lets them model time-dependent patterns in tasks such as speech recognition, language modeling, and time-series prediction. Unlike Feedforward Neural Networks, RNNs contain feedback loops that carry information from one sequence step to the next, so they can handle variable-length inputs and capture context over time. Variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) use gating mechanisms to mitigate the vanishing-gradient problem, improving the learning of long-range dependencies in complex sequential datasets.
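
The way information persists across steps can be sketched with a single-unit, Elman-style RNN. This is a simplification under stated assumptions: the hidden state is a scalar, `tanh` is the activation, and the parameters `w_x`, `w_h`, and `b` are hypothetical, hand-picked values rather than learned ones. LSTM and GRU cells add gates on top of this basic recurrence.

```python
import math

def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Run a single-unit Elman-style RNN and return the hidden state at every step.

    h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)
    """
    h = h0
    states = []
    for x_t in sequence:  # the same parameters work for any sequence length
        h = math.tanh(w_x * x_t + w_h * h + b)
        states.append(h)
    return states

# Hypothetical, hand-picked parameters (not learned).
params = {"w_x": 1.0, "w_h": 0.5, "b": 0.0}

short_run = rnn_forward([1.0, 0.0], **params)
long_run = rnn_forward([1.0, 0.0, 0.0, 0.0, 0.0], **params)

print(len(short_run), len(long_run))  # 2 5
print(short_run[1])  # nonzero: step 2 still carries information from step 1
```

The same three parameters handle the 2-step and 5-step sequences alike, and the second hidden state is nonzero even though its input is 0.0, because it still reflects the input seen at the first step.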

Core Architecture Differences: FNN vs RNN

In Feedforward Neural Networks (FNNs), information flows through the layers in only one direction, from input to output; there are no cycles and no memory of previous inputs. Recurrent Neural Networks (RNNs) contain loops that feed a hidden state from one time step into the next, so information persists across a sequence. This core architectural distinction lets RNNs model temporal dependencies, whereas FNNs process each input independently.
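
The distinction can be written compactly. In the notation assumed here (not taken from the original text), x is the input, h the hidden activation, W, W_x and W_h weight matrices, b a bias vector, and σ an elementwise activation such as tanh:

```latex
\begin{aligned}
\text{FNN layer:}\quad & \mathbf{h} = \sigma\left(W \mathbf{x} + \mathbf{b}\right) \\
\text{RNN step:}\quad & \mathbf{h}_t = \sigma\left(W_x \mathbf{x}_t + W_h \mathbf{h}_{t-1} + \mathbf{b}\right)
\end{aligned}
```

The recurrent term W_h h_{t-1} is what carries earlier information forward; with W_h = 0 the RNN step reduces to an FNN layer applied to each x_t independently.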

Information Flow: Unidirectional vs Sequential Processing

Feedforward Neural Networks pass information in a single direction from the input layer to the output layer, without cycles. This makes propagation straightforward but provides no temporal context. Recurrent Neural Networks process data sequentially, maintaining internal states and feedback loops that capture dependencies across time steps. As a result, feedforward architectures suit static pattern recognition, while recurrent models excel at sequential data such as time series, speech, and natural language.
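
One way to see the difference in information flow is to feed two sequences that contain the same values in a different order. In this hypothetical sketch, `feedforward_step` maps each input on its own, while `recurrent_steps` threads a hidden state through the sequence; both use hand-picked weights, not trained ones.

```python
import math

def feedforward_step(x, w=1.0, b=0.0):
    """Feedforward mapping: the output depends only on the current input."""
    return math.tanh(w * x + b)

def recurrent_steps(sequence, w_x=1.0, w_h=0.5, b=0.0):
    """Recurrent mapping: each output also depends on the previous hidden state."""
    h, outputs = 0.0, []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        outputs.append(h)
    return outputs

first = [1.0, 0.0, 0.0]
second = [0.0, 0.0, 1.0]  # same values, different order

ff_first = [feedforward_step(x) for x in first]
ff_second = [feedforward_step(x) for x in second]
rnn_first = recurrent_steps(first)
rnn_second = recurrent_steps(second)

print(sorted(ff_first) == sorted(ff_second))    # True: order only permutes the outputs
print(sorted(rnn_first) == sorted(rnn_second))  # False: order changes the values themselves
```

The feedforward outputs are the same set of numbers in both cases, merely reordered, while the recurrent outputs differ because each one depends on what came earlier in the sequence.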

Common Applications: FNNs and RNNs in AI

Feedforward Neural Networks (FNNs) excel at image recognition and classification because their straightforward architecture processes fixed-size inputs efficiently. Recurrent Neural Networks (RNNs) specialize in sequential data, powering applications such as language modeling, speech recognition, and time-series forecasting by tracking temporal dependencies. Both are foundational building blocks of AI systems: FNNs for static pattern recognition and RNNs for dynamic sequence prediction.

Strengths and Limitations of Feedforward Neural Networks

Feedforward Neural Networks excel at tasks with fixed-size inputs and outputs. Because their architecture has no cycles, training is straightforward and usually converges faster than for recurrent models, since gradients do not need to be propagated back through time. They perform well in image recognition and static data classification but cannot effectively model temporal dependencies or sequential data. For time-series or context-rich data, recurrent neural networks typically perform better because they maintain an internal state through feedback loops.

Strengths and Limitations of Recurrent Neural Networks

Recurrent Neural Networks (RNNs) excel at processing sequential data because their internal memory states capture temporal dependencies, making them well suited to language modeling and time-series prediction. However, RNNs suffer from vanishing and exploding gradients, which make long-term dependencies hard to learn, and they often require more computation than Feedforward Neural Networks, partly because each time step depends on the previous one and so the steps of a sequence cannot be computed in parallel. To address these limitations, architectures such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs) were developed to mitigate gradient issues and improve performance on complex sequence modeling tasks.
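
The vanishing and exploding gradient problem follows from the chain rule: backpropagating through T time steps multiplies T per-step derivatives together. The sketch below assumes a hypothetical scalar RNN with zero input, h_t = tanh(w_h * h_{t-1}), whose per-step derivative is w_h * (1 - h_t^2), plus a linear variant whose per-step derivative is just w_h. The function name `gradient_through_time` is illustrative only.

```python
import math

def gradient_through_time(w_h, steps, activation="tanh", h0=0.1):
    """Return d h_T / d h_0 for a scalar RNN with zero input.

    tanh:   h_t = tanh(w_h * h_{t-1}); per-step factor w_h * (1 - h_t**2)
    linear: h_t = w_h * h_{t-1};       per-step factor w_h
    """
    h, grad = h0, 1.0
    for _ in range(steps):
        if activation == "tanh":
            h = math.tanh(w_h * h)
            grad *= w_h * (1.0 - h * h)  # chain rule through tanh
        elif activation == "linear":
            h = w_h * h
            grad *= w_h
        else:
            raise ValueError(f"unknown activation: {activation}")
    return grad

for steps in (5, 20, 50):
    vanishing = gradient_through_time(0.5, steps)
    exploding = gradient_through_time(1.5, steps, activation="linear")
    print(f"T={steps:2d}  tanh, w_h=0.5: {vanishing:.2e}   linear, w_h=1.5: {exploding:.2e}")
```

With |w_h| < 1 the gradient shrinks roughly geometrically, so early time steps barely influence learning; with |w_h| > 1 and no squashing, it grows without bound. LSTM and GRU gates are intended to help keep this signal from vanishing over long spans.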

Performance Comparison: When to Use FNN or RNN

Feedforward Neural Networks (FNNs) excel at tasks with fixed-size inputs and outputs; they train faster and have simpler architectures, which makes them a good fit for image recognition and basic classification. Recurrent Neural Networks (RNNs) generally outperform FNNs on sequential data because they capture the temporal dependencies that matter in speech recognition, language modeling, and time-series prediction. The choice comes down to data structure: use an FNN for static inputs and an RNN when the context or order within a sequence affects accuracy.

Future Trends in Neural Network Development

Feedforward Neural Networks continue to evolve through advances in deep learning architectures that improve pattern recognition and computational efficiency for tasks with fixed input-output mappings. Recurrent Neural Networks have been extended with attention mechanisms, while transformer models, which replace recurrence with self-attention, now handle many sequence tasks and long-term dependencies that were once the domain of RNNs. Looking ahead, trends point toward hybrid models that combine feedforward and recurrent elements, along with unsupervised learning and neuromorphic computing, aimed at more adaptable and energy-efficient AI systems.

Feedforward Neural Network vs Recurrent Neural Network Infographic

techiny.com
