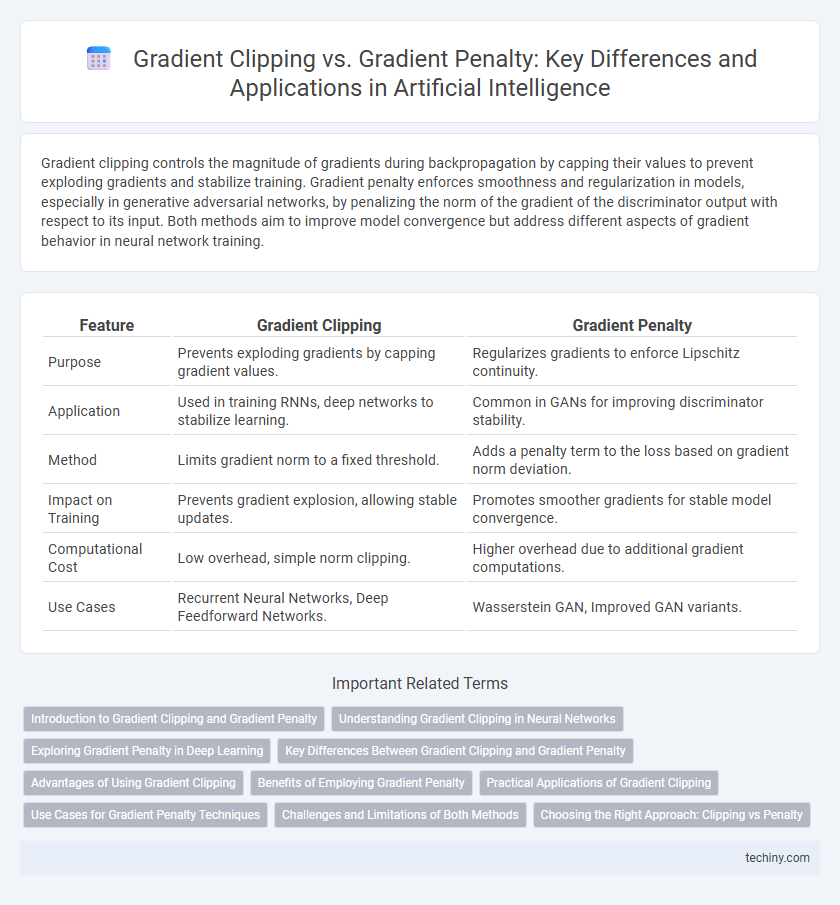

Gradient clipping controls the magnitude of gradients during backpropagation by capping their values to prevent exploding gradients and stabilize training. Gradient penalty enforces smoothness and regularization in models, especially in generative adversarial networks, by penalizing the norm of the gradient of the discriminator output with respect to its input. Both methods aim to improve model convergence but address different aspects of gradient behavior in neural network training.

Table of Comparison

| Feature | Gradient Clipping | Gradient Penalty |

|---|---|---|

| Purpose | Prevents exploding gradients by capping gradient values. | Regularizes gradients to enforce Lipschitz continuity. |

| Application | Used in training RNNs, deep networks to stabilize learning. | Common in GANs for improving discriminator stability. |

| Method | Limits gradient norm to a fixed threshold. | Adds a penalty term to the loss based on gradient norm deviation. |

| Impact on Training | Prevents gradient explosion, allowing stable updates. | Promotes smoother gradients for stable model convergence. |

| Computational Cost | Low overhead, simple norm clipping. | Higher overhead due to additional gradient computations. |

| Use Cases | Recurrent Neural Networks, Deep Feedforward Networks. | Wasserstein GAN, Improved GAN variants. |

Introduction to Gradient Clipping and Gradient Penalty

Gradient clipping is a technique used to prevent exploding gradients by capping the gradients during backpropagation, ensuring stable and efficient training of deep neural networks. Gradient penalty imposes a constraint on the gradient norm, often applied to enforce Lipschitz continuity in models like Wasserstein GANs, improving training stability and convergence. Both methods address gradient-related issues but differ in implementation and purpose within neural network optimization.

Understanding Gradient Clipping in Neural Networks

Gradient clipping is a technique used in neural networks to prevent exploding gradients by capping the gradient values during backpropagation, ensuring stable and efficient training. Unlike gradient penalty, which adds regularization terms to enforce smoothness in the gradient, gradient clipping directly modifies the gradients to keep them within a specified threshold. This approach improves convergence and prevents training instability in deep learning models, especially in recurrent neural networks and large-scale architectures.

Exploring Gradient Penalty in Deep Learning

Gradient penalty in deep learning is a regularization technique that improves model stability by constraining the norm of gradients during training, preventing exploding gradients commonly encountered in adversarial networks. Unlike gradient clipping, which simply caps gradient values, gradient penalty enforces smoothness by adding a penalty term based on the gradient norm to the loss function, promoting Lipschitz continuity. This approach enhances convergence and generalization in generative adversarial networks (GANs) and other complex neural architectures.

Key Differences Between Gradient Clipping and Gradient Penalty

Gradient clipping limits the maximum value of gradients during backpropagation to prevent exploding gradients, while gradient penalty adds a regularization term to the loss function to enforce smoothness or Lipschitz continuity in model training. Gradient clipping directly modifies gradient values to stabilize training, whereas gradient penalty influences the learning process by penalizing large gradient norms in specific regions of the input space. The key difference lies in gradient clipping being a direct gradient manipulation technique, while gradient penalty serves as a constraint embedded into the optimization objective.

Advantages of Using Gradient Clipping

Gradient clipping provides a robust method to stabilize training by preventing exploding gradients, especially in deep neural networks and recurrent models. This technique ensures gradients remain within a specified threshold, enhancing convergence speed and making optimization more reliable. It is computationally efficient compared to gradient penalty methods, which require additional regularization terms and more complex implementation.

Benefits of Employing Gradient Penalty

Gradient penalty in artificial intelligence enhances model training stability by preventing excessive gradient values that can destabilize optimization processes, especially in generative adversarial networks (GANs). It promotes smoother gradient flows, reducing the risk of mode collapse and improving generalization by enforcing Lipschitz continuity constraints. This technique leads to more robust convergence and higher-quality model outputs compared to traditional gradient clipping methods.

Practical Applications of Gradient Clipping

Gradient clipping is widely used in training deep neural networks to prevent exploding gradients by capping the magnitude of gradient vectors, ensuring stable and efficient convergence. It is particularly beneficial in recurrent neural networks (RNNs) and transformer models, where long sequences can cause gradient values to grow exponentially. Practical applications include natural language processing tasks such as language modeling and machine translation, where gradient clipping helps maintain training stability without sacrificing performance.

Use Cases for Gradient Penalty Techniques

Gradient penalty techniques are primarily used in training generative adversarial networks (GANs) to stabilize the discriminator by enforcing Lipschitz continuity, preventing gradient explosions and mode collapse. These methods improve model convergence in tasks such as image synthesis, natural language generation, and reinforcement learning by regularizing gradients during backpropagation. Compared to gradient clipping, gradient penalties offer a smoother constraint on gradient norms, enhancing training stability in high-dimensional, complex data scenarios.

Challenges and Limitations of Both Methods

Gradient Clipping often struggles with selecting appropriate threshold values, leading to either ineffective gradient control or training instability, while it can hinder convergence by truncating useful gradient information. Gradient Penalty imposes computational overhead and complexity, especially in high-dimensional spaces, and may introduce bias by enforcing overly restrictive constraints on the model's gradient norms. Both methods face challenges in balancing gradient regulation with model expressiveness, often requiring careful tuning to avoid degrading overall training performance.

Choosing the Right Approach: Clipping vs Penalty

Choosing between gradient clipping and gradient penalty depends on the specific challenges faced during neural network training. Gradient clipping effectively prevents exploding gradients by capping gradient values, making it ideal for recurrent neural networks and deep architectures sensitive to large updates. Conversely, gradient penalty enforces smoothness and regularization in loss functions, especially in generative adversarial networks (GANs), to stabilize training and improve convergence quality.

Gradient Clipping vs Gradient Penalty Infographic

techiny.com

techiny.com