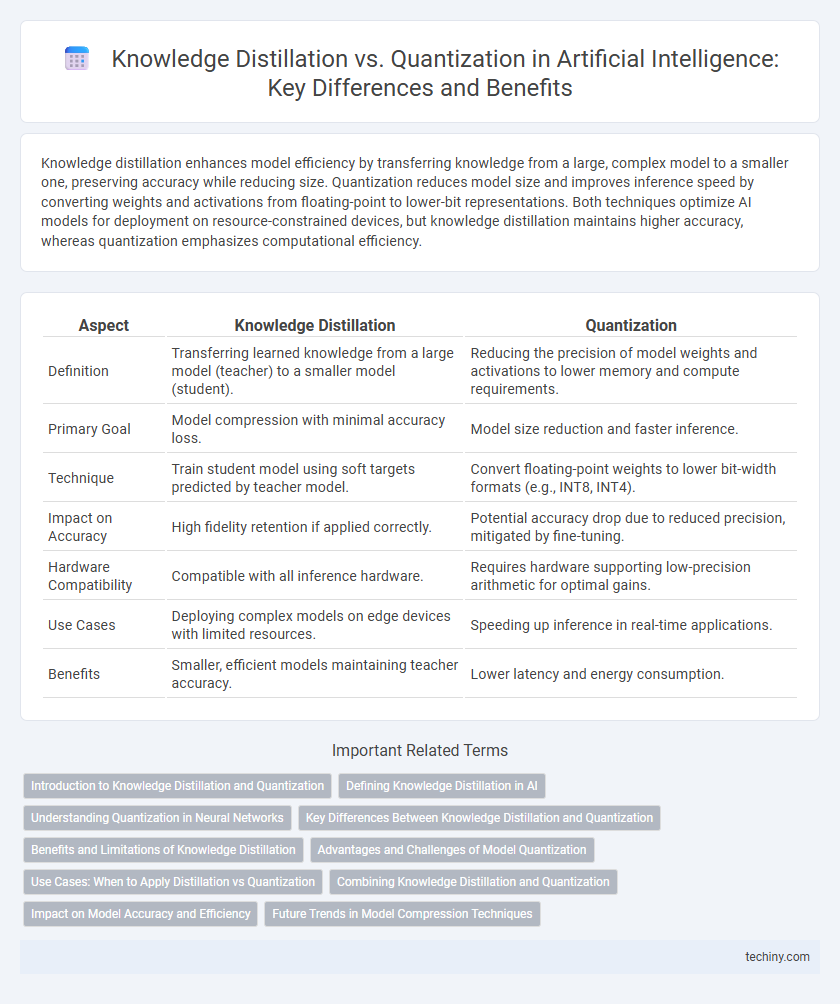

Knowledge distillation enhances model efficiency by transferring knowledge from a large, complex model to a smaller one, preserving accuracy while reducing size. Quantization reduces model size and improves inference speed by converting weights and activations from floating-point to lower-bit representations. Both techniques optimize AI models for deployment on resource-constrained devices, but knowledge distillation maintains higher accuracy, whereas quantization emphasizes computational efficiency.

Table of Comparison

| Aspect | Knowledge Distillation | Quantization |

|---|---|---|

| Definition | Transferring learned knowledge from a large model (teacher) to a smaller model (student). | Reducing the precision of model weights and activations to lower memory and compute requirements. |

| Primary Goal | Model compression with minimal accuracy loss. | Model size reduction and faster inference. |

| Technique | Train student model using soft targets predicted by teacher model. | Convert floating-point weights to lower bit-width formats (e.g., INT8, INT4). |

| Impact on Accuracy | High fidelity retention if applied correctly. | Potential accuracy drop due to reduced precision, mitigated by fine-tuning. |

| Hardware Compatibility | Compatible with all inference hardware. | Requires hardware supporting low-precision arithmetic for optimal gains. |

| Use Cases | Deploying complex models on edge devices with limited resources. | Speeding up inference in real-time applications. |

| Benefits | Smaller, efficient models maintaining teacher accuracy. | Lower latency and energy consumption. |

Introduction to Knowledge Distillation and Quantization

Knowledge distillation is a technique in artificial intelligence that transfers knowledge from a large, complex model (teacher) to a smaller, more efficient model (student) to improve performance while reducing computational cost. Quantization compresses neural networks by reducing the precision of weights and activations, typically from 32-bit floating point to lower bit-width representations like 8-bit integers, enabling faster inference and reduced memory usage. Both methods optimize AI models for deployment on resource-constrained devices, balancing accuracy and efficiency through different approaches.

Defining Knowledge Distillation in AI

Knowledge distillation in AI is a technique where a smaller, simpler model (student) learns to replicate the behavior of a larger, complex model (teacher) by mimicking its outputs. This process transfers knowledge by using softened probabilities or intermediate representations, enabling efficient model deployment without significant accuracy loss. Knowledge distillation enhances model compression and accelerates inference while maintaining performance, distinguishing it from quantization, which focuses on reducing numeric precision.

Understanding Quantization in Neural Networks

Quantization in neural networks reduces model size and accelerates inference by converting floating-point weights and activations into lower-bit representations, such as int8 or int4. This process preserves model accuracy while enabling deployment on resource-constrained devices like mobile phones and embedded systems. Effective quantization techniques include uniform quantization, dynamic quantization, and quantization-aware training, which optimize performance for specific hardware architectures.

Key Differences Between Knowledge Distillation and Quantization

Knowledge distillation transfers knowledge from a large teacher model to a smaller student model by training the student to mimic the teacher's outputs, improving model efficiency without significant accuracy loss. Quantization reduces the precision of model parameters and activations, converting floating-point weights to lower-bit representations like INT8 or INT4, which decreases model size and accelerates inference. While knowledge distillation emphasizes preserving predictive performance through soft label supervision, quantization focuses on computational optimization by minimizing numerical precision.

Benefits and Limitations of Knowledge Distillation

Knowledge distillation enhances model efficiency by transferring knowledge from a large, complex teacher model to a smaller student model, improving accuracy and reducing computational costs without significant loss of performance. It benefits applications requiring real-time inference on resource-constrained devices but may struggle with preserving nuanced decision boundaries in highly complex tasks. Limitations include dependency on the quality of the teacher model and the potential for reduced interpretability compared to the original large model.

Advantages and Challenges of Model Quantization

Model quantization reduces the computational load and memory footprint by converting high-precision weights and activations into lower-bit representations, enabling efficient deployment on edge devices with limited resources. Challenges include a potential drop in model accuracy due to quantization errors and the complexity of calibrating quantization parameters for different layers and hardware architectures. Despite these challenges, quantization accelerates inference speed and reduces power consumption, making it essential for real-time AI applications.

Use Cases: When to Apply Distillation vs Quantization

Knowledge distillation is ideal for deploying compact neural networks in resource-constrained environments like mobile devices or IoT, where preserving model accuracy is crucial while reducing size. Quantization excels in scenarios prioritizing inference speed and memory efficiency, such as edge computing or real-time applications, by converting model weights to lower precision formats like INT8 or INT4. Applying distillation suits tasks requiring retaining performance with fewer parameters, whereas quantization is preferable when low-latency and hardware compatibility are primary concerns.

Combining Knowledge Distillation and Quantization

Combining Knowledge Distillation and Quantization enables the creation of compact, efficient AI models by transferring knowledge from a large teacher model to a smaller student model while reducing bit precision for lower computational cost. This hybrid approach maintains model accuracy despite aggressive compression, making it ideal for deployment on edge devices with limited resources. Leveraging the synergy between these techniques achieves optimal balance between model size, speed, and performance in real-world AI applications.

Impact on Model Accuracy and Efficiency

Knowledge distillation transfers knowledge from a large teacher model to a smaller student model, often preserving accuracy while improving efficiency by reducing model size and inference time. Quantization compresses models by reducing precision of weights and activations, enhancing deployment speed and lowering memory usage but potentially causing accuracy degradation, especially in complex tasks. Balancing accuracy and efficiency depends on the specific use case, with distillation offering finer control over performance retention and quantization providing hardware-friendly acceleration.

Future Trends in Model Compression Techniques

Future trends in model compression techniques emphasize hybrid approaches combining knowledge distillation and quantization to achieve efficient yet accurate AI models. Advancements in adaptive quantization schemes tailored by distilled teacher models enhance performance on edge devices with limited resources. Research is increasingly directed towards automating compression pipelines using reinforcement learning to optimize both size and inference speed dynamically.

Knowledge distillation vs quantization Infographic

techiny.com

techiny.com