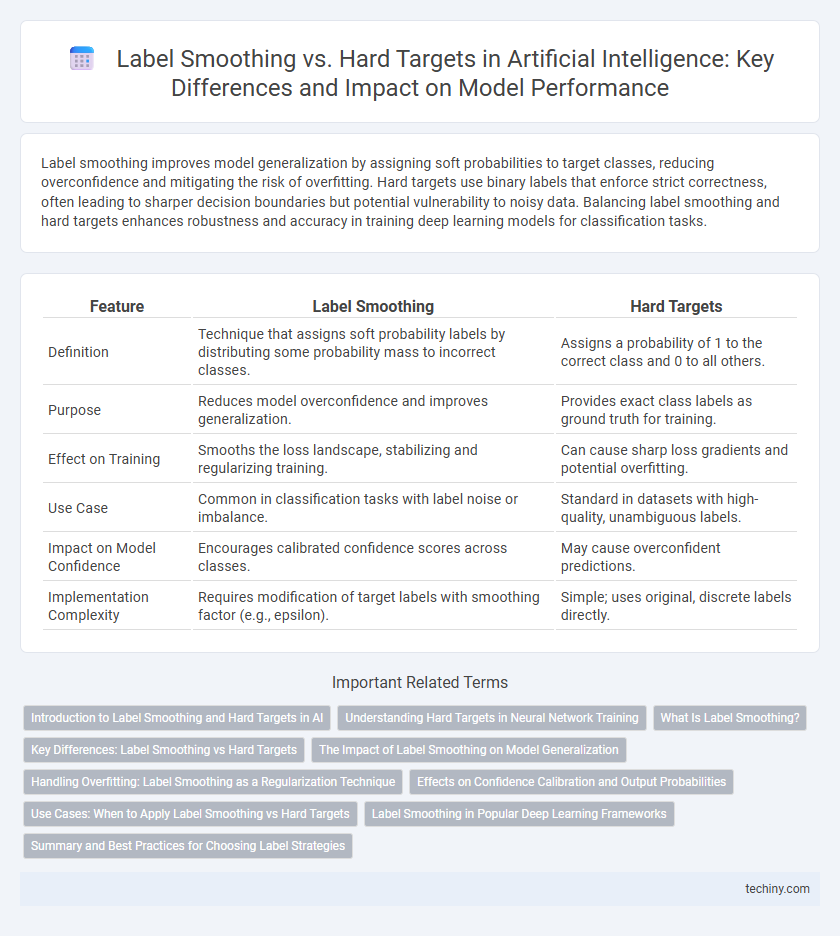

Label smoothing improves model generalization by assigning soft probabilities to target classes, reducing overconfidence and mitigating the risk of overfitting. Hard targets use binary labels that enforce strict correctness, often leading to sharper decision boundaries but potential vulnerability to noisy data. Balancing label smoothing and hard targets enhances robustness and accuracy in training deep learning models for classification tasks.

Table of Comparison

| Feature | Label Smoothing | Hard Targets |

|---|---|---|

| Definition | Technique that assigns soft probability labels by distributing some probability mass to incorrect classes. | Assigns a probability of 1 to the correct class and 0 to all others. |

| Purpose | Reduces model overconfidence and improves generalization. | Provides exact class labels as ground truth for training. |

| Effect on Training | Smooths the loss landscape, stabilizing and regularizing training. | Can cause sharp loss gradients and potential overfitting. |

| Use Case | Common in classification tasks with label noise or imbalance. | Standard in datasets with high-quality, unambiguous labels. |

| Impact on Model Confidence | Encourages calibrated confidence scores across classes. | May cause overconfident predictions. |

| Implementation Complexity | Requires modification of target labels with smoothing factor (e.g., epsilon). | Simple; uses original, discrete labels directly. |

Introduction to Label Smoothing and Hard Targets in AI

Label smoothing is a regularization technique in artificial intelligence that replaces hard target labels with a weighted distribution of labels to prevent the model from becoming overconfident. Hard targets represent the traditional approach, using one-hot encoded vectors where the correct class is assigned a probability of one and all others zero. Incorporating label smoothing enhances model generalization by mitigating the risk of overfitting to precisely labeled data.

Understanding Hard Targets in Neural Network Training

Hard targets in neural network training refer to the use of one-hot encoded labels where each example is assigned a definitive class, providing clear and unambiguous supervision. Unlike label smoothing which introduces uncertainty by distributing probabilities across classes, hard targets enforce a strict decision boundary that can speed up convergence and sharpen class distinctions. While this approach may lead to overfitting and reduced generalization, it remains essential in scenarios requiring precise classification and interpretability.

What Is Label Smoothing?

Label smoothing is a regularization technique in artificial intelligence that softens the target labels by assigning a small probability to all classes instead of a hard 0 or 1 label. This method reduces overconfidence in neural networks, improves model calibration, and enhances generalization by encouraging the model to be less certain about its predictions. Compared to hard targets, label smoothing mitigates the risk of overfitting and helps prevent the network from assigning full probability mass to a single class during training.

Key Differences: Label Smoothing vs Hard Targets

Label smoothing modifies the target labels by assigning a small probability to incorrect classes, reducing model overconfidence and improving generalization, whereas hard targets use one-hot encoded labels with absolute certainty for the correct class. This technique helps mitigate overfitting by encouraging the model to be less confident in its predictions, unlike hard targets that may lead to overconfident and less calibrated outputs. Label smoothing is particularly effective in tasks like image classification and language modeling where ambiguity and noise in the data are common.

The Impact of Label Smoothing on Model Generalization

Label smoothing enhances model generalization by preventing overconfidence in predictions, distributing label probabilities slightly away from absolute certainties. This technique reduces the likelihood of overfitting by encouraging the model to be more adaptable and less sensitive to noise in training data. Studies demonstrate that label smoothing often leads to improved performance on unseen datasets compared to using hard targets, which enforce strict one-hot labels.

Handling Overfitting: Label Smoothing as a Regularization Technique

Label smoothing reduces overfitting by preventing models from becoming overly confident in their predictions, distributing some probability mass to incorrect classes. This regularization technique enhances generalization and improves robustness by softening the target labels, which mitigates sharp decision boundaries. Unlike hard targets that enforce strict one-hot labels, label smoothing encourages the model to learn more nuanced representations and avoid memorizing noise in training data.

Effects on Confidence Calibration and Output Probabilities

Label smoothing improves confidence calibration by preventing overconfident predictions, leading to more calibrated output probabilities compared to hard targets which often produce overly confident and less reliable probabilistic estimates. It introduces a small uniform distribution over labels, softening the target distribution and reducing model overfitting on training data noise. Consequently, models trained with label smoothing exhibit better uncertainty estimation and robustness in downstream tasks requiring reliable probability assessments.

Use Cases: When to Apply Label Smoothing vs Hard Targets

Label smoothing is effective in classification tasks where model calibration and generalization are critical, such as natural language processing and image recognition, as it reduces overconfidence in predictions. Hard targets are preferred in scenarios requiring precise and unambiguous labels, like object detection or medical image segmentation, ensuring clear decision boundaries. Applying label smoothing in noisy or ambiguous datasets helps mitigate overfitting, whereas hard targets excel in clean datasets with well-defined class labels.

Label Smoothing in Popular Deep Learning Frameworks

Label smoothing is widely implemented in popular deep learning frameworks such as TensorFlow, PyTorch, and Keras, offering an effective regularization technique to improve model generalization by preventing the network from becoming overconfident in its predictions. This technique modifies the target distribution by assigning a small probability to all classes rather than a hard 1-hot vector, which reduces overfitting and enhances model calibration. Frameworks provide built-in functions or parameters to easily apply label smoothing during loss computation, optimizing training stability and performance in tasks like image classification and natural language processing.

Summary and Best Practices for Choosing Label Strategies

Label smoothing improves model generalization by assigning soft probabilities to target labels, reducing overconfidence and enhancing robustness to noisy data. Hard targets provide clear, definitive class labels, often yielding better performance in well-defined, noise-free datasets with distinct classes. Choose label smoothing when handling ambiguous or noisy labels to prevent overfitting, while hard targets remain optimal for precise classification tasks with high-quality annotated data.

Label smoothing vs hard targets Infographic

techiny.com

techiny.com