Markov Decision Processes (MDPs) model decision-making tasks where an agent has full knowledge of the environment's current state, enabling straightforward policy optimization. Partially Observable Markov Decision Processes (POMDPs) extend this framework to situations with incomplete or uncertain information about the state, incorporating observation probabilities and belief states to guide decisions. POMDPs offer a more realistic approach for complex real-world applications where sensing limitations prevent full observability, although they require significantly more computational resources than MDPs.

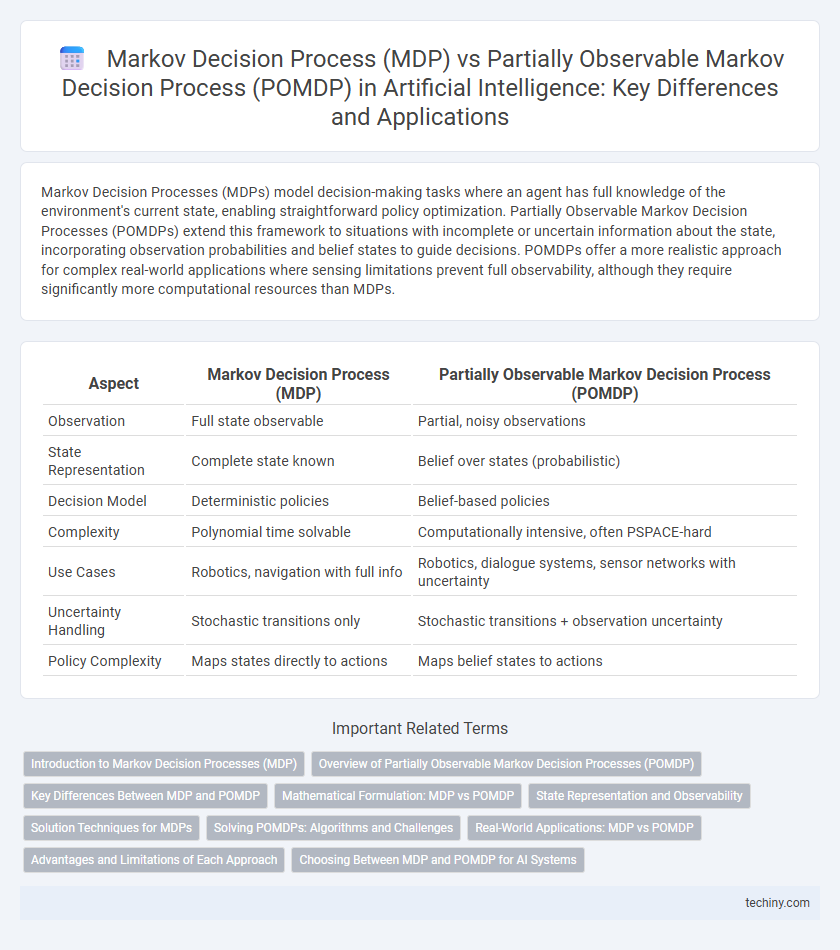

Table of Comparison

| Aspect | Markov Decision Process (MDP) | Partially Observable Markov Decision Process (POMDP) |

|---|---|---|

| Observation | Full state observable | Partial, noisy observations |

| State Representation | Complete state known | Belief over states (probabilistic) |

| Decision Model | Deterministic policies | Belief-based policies |

| Complexity | Polynomial time solvable | Computationally intensive, often PSPACE-hard |

| Use Cases | Robotics, navigation with full info | Robotics, dialogue systems, sensor networks with uncertainty |

| Uncertainty Handling | Stochastic transitions only | Stochastic transitions + observation uncertainty |

| Policy Complexity | Maps states directly to actions | Maps belief states to actions |

Introduction to Markov Decision Processes (MDP)

Markov Decision Processes (MDPs) provide a mathematical framework for modeling decision-making in environments where outcomes are partly random and partly under the control of a decision-maker. An MDP is defined by a set of states, actions, transition probabilities, and reward functions, facilitating optimal policy derivation to maximize cumulative rewards over time. This framework assumes full observability of the system's current state, distinguishing it from Partially Observable Markov Decision Processes (POMDPs) that handle uncertainty in state information.

Overview of Partially Observable Markov Decision Processes (POMDP)

Partially Observable Markov Decision Processes (POMDPs) extend Markov Decision Processes (MDPs) by incorporating uncertainty in state observation, where the agent cannot directly observe the true state but receives probabilistic signals. POMDPs model decision-making problems under uncertainty by maintaining a belief state, a probability distribution over possible states, enabling more robust policies in real-world scenarios with incomplete or noisy data. These frameworks are crucial in AI fields such as robotics, autonomous systems, and reinforcement learning, where partial observability significantly impacts strategic planning and performance.

Key Differences Between MDP and POMDP

Markov Decision Process (MDP) assumes full observability of the environment's state, enabling decision-making based on known current states, whereas Partially Observable Markov Decision Process (POMDP) accounts for uncertainty by using belief states to represent possible current states due to incomplete information. MDP models execute policies directly from observable states, while POMDP methods rely on probabilistic state estimation and belief updates informed by observation models. Computational complexity differentiates the two, with POMDPs requiring more intensive algorithms to handle state uncertainty compared to the more straightforward value iteration or policy iteration used in MDPs.

Mathematical Formulation: MDP vs POMDP

Markov Decision Processes (MDPs) are defined by a tuple (S, A, P, R, g) representing states, actions, transition probabilities, rewards, and discount factors, relying on fully observable states to model decision-making. Partially Observable Markov Decision Processes (POMDPs) extend MDPs by incorporating a set of observations (O) and an observation probability function (O), forming the tuple (S, A, P, R, O, O, g), to address uncertainty in state observations. The core difference lies in POMDPs modeling belief states as probability distributions over S, mathematically represented via Bayesian updates, making the optimization problem significantly more complex than in MDPs.

State Representation and Observability

Markov Decision Process (MDP) features a fully observable state space, allowing agents to make decisions based on complete knowledge of the environment's current state. In contrast, Partially Observable MDP (POMDP) deals with incomplete or noisy observations, requiring agents to maintain a belief state, a probability distribution over all possible states. This distinction in observability fundamentally affects the complexity of state representation and decision-making strategies in artificial intelligence applications.

Solution Techniques for MDPs

Markov Decision Processes (MDPs) employ dynamic programming methods such as value iteration and policy iteration to derive optimal policies by exploiting the full observability of the system states. These algorithms iteratively compute value functions over states using the Bellman equation, ensuring convergence to optimal solutions under the Markov property. Contrarily, Partially Observable MDPs (POMDPs) require more complex approaches like belief state updates and point-based value iteration due to uncertainty in state information, but MDP solution techniques remain fundamental for scenarios with complete state observability.

Solving POMDPs: Algorithms and Challenges

Solving Partially Observable Markov Decision Processes (POMDPs) involves complex algorithms like point-based value iteration and policy search methods that address uncertainty in state information. Unlike Markov Decision Processes (MDPs), which assume full observability, POMDP algorithms must estimate belief states to optimize decision-making under incomplete data. Key challenges include computational complexity, scalability, and maintaining tractable solutions for real-world applications.

Real-World Applications: MDP vs POMDP

Markov Decision Processes (MDPs) assume full observability of the system state, making them ideal for applications like robotics navigation and automated control systems where the environment is fully known. Partially Observable Markov Decision Processes (POMDPs) handle uncertainty in observations, enabling better decision-making in complex domains such as autonomous driving, medical diagnosis, and financial forecasting where sensor noise and incomplete information are prevalent. Real-world applications demonstrate that POMDPs provide more robust and adaptive solutions compared to MDPs in environments with hidden states or ambiguous data.

Advantages and Limitations of Each Approach

Markov Decision Processes (MDPs) offer a robust framework for decision-making in fully observable environments, enabling efficient policy optimization through well-defined state transitions and rewards; however, their limitation lies in the assumption of complete state observability, which is often unrealistic in complex real-world scenarios. Partially Observable Markov Decision Processes (POMDPs) extend MDPs by incorporating uncertainty in state observations, making them highly advantageous for applications requiring decision-making under incomplete information, though they face computational challenges due to increased complexity in belief state management and policy calculation. The trade-off between MDPs' computational efficiency and POMDPs' capability to handle uncertainty defines their suitability across various AI domains such as robotics, autonomous systems, and natural language processing.

Choosing Between MDP and POMDP for AI Systems

Choosing between Markov Decision Process (MDP) and Partially Observable MDP (POMDP) depends on the level of environmental uncertainty and observation availability in AI systems. MDPs are suitable for fully observable environments where state information is complete, enabling efficient decision-making using transition probabilities and rewards. POMDPs handle incomplete or noisy observations, incorporating belief states to optimize policies under uncertainty, making them ideal for real-world AI applications with partial observability.

Markov Decision Process (MDP) vs Partially Observable MDP (POMDP) Infographic

techiny.com

techiny.com