One-hot encoding transforms categorical variables into binary vectors, enabling algorithms to interpret data without assuming any ordinal relationship between categories. Label encoding assigns each category a unique integer value, which may introduce unintended ordinal relationships and affect model performance when categories are nominal. Choosing between one-hot encoding and label encoding depends on the nature of the data and the specific machine learning algorithm being used.

Table of Comparison

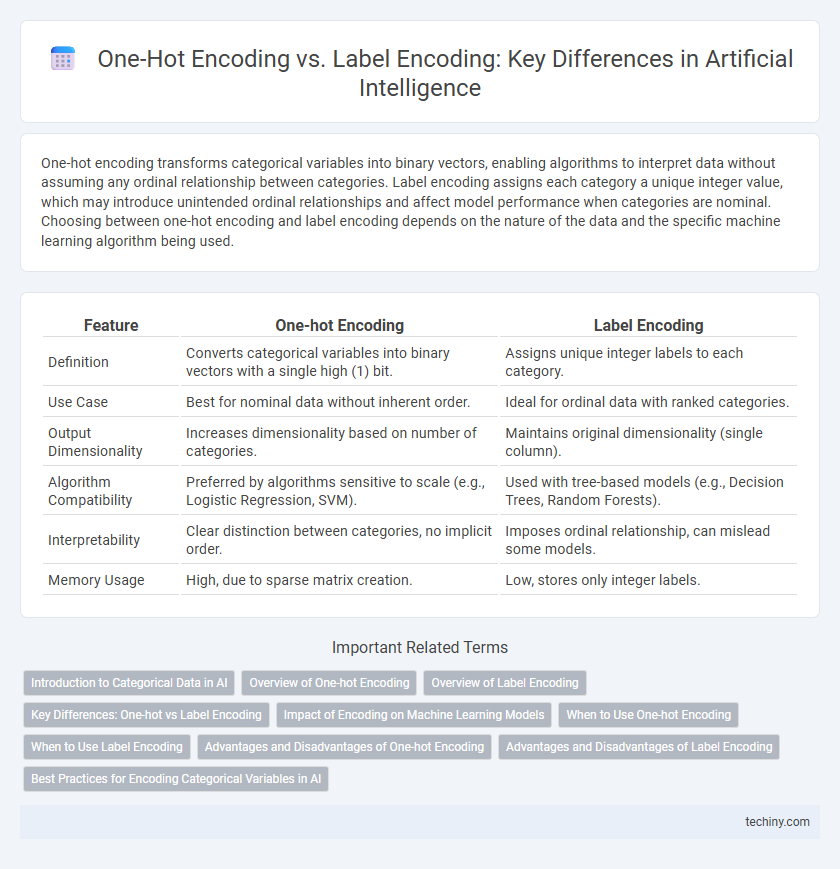

| Feature | One-hot Encoding | Label Encoding |
|---|---|---|
| Definition | Converts each categorical value into a binary vector with a single high (1) bit. | Assigns a unique integer label to each category. |
| Use Case | Best for nominal data with no inherent order. | Suited to ordinal data with ranked categories, provided the integers follow the rank. |
| Output Dimensionality | Adds one column per category. | Keeps a single column. |
| Algorithm Compatibility | Preferred for models that treat inputs as numeric magnitudes (e.g., Logistic Regression, SVM). | Commonly used with tree-based models (e.g., Decision Trees, Random Forests), which split on thresholds. |
| Interpretability | Clear distinction between categories, no implicit order. | Imposes an ordinal relationship, which can mislead some models. |
| Memory Usage | Higher: one column per category, mostly zeros (sparse storage reduces the cost). | Low: stores one integer per row. |

Introduction to Categorical Data in AI

Categorical data in artificial intelligence refers to variables that represent discrete groups or labels, requiring specialized encoding methods to be processed by machine learning algorithms. One-hot encoding transforms each category into a binary vector, preserving the nominal nature of data without implying ordinal relationships, making it ideal for non-ordinal features. Label encoding assigns a unique integer to each category, which is computationally efficient but may introduce unintended ordinal interpretations in nominal data.

Overview of One-hot Encoding

One-hot encoding transforms categorical variables into binary vectors, enabling machine learning algorithms to interpret nominal data without implying ordinal relationships. Each category is represented by a unique vector in which one element is 1 and the rest are 0, so no category is numerically "larger" than another. This encoding is especially useful for neural networks and linear models such as logistic regression, where integer codes would otherwise be treated as magnitudes, and giving each category its own input also aids interpretability.
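A minimal from-scratch sketch in Python makes the mechanics concrete. The function names `fit_categories` and `one_hot` are hypothetical and chosen for illustration; in practice, libraries such as scikit-learn's `OneHotEncoder` or pandas' `get_dummies` perform this step. Ordering the categories by first appearance is an arbitrary assumption of this sketch.

```python
from typing import Dict, List, Sequence


def fit_categories(values: Sequence[str]) -> List[str]:
    """Return the distinct categories in first-seen order; each one gets its own column."""
    return list(dict.fromkeys(values))


def one_hot(values: Sequence[str], categories: List[str]) -> List[List[int]]:
    """Encode each value as a binary vector with a single 1 at its category's position."""
    index: Dict[str, int] = {c: i for i, c in enumerate(categories)}
    rows: List[List[int]] = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1  # raises KeyError for a category not seen when fitting
        rows.append(row)
    return rows


colors = ["red", "green", "blue", "green"]
cats = fit_categories(colors)
for value, vec in zip(colors, one_hot(colors, cats)):
    print(value, vec)
```

Each row contains exactly one 1, and the column it lands in identifies the category.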

Overview of Label Encoding

Label Encoding assigns a unique integer value to each category, enabling machine learning algorithms to process non-numeric inputs efficiently. When the integers are assigned in the categories' natural order, the encoding preserves that ordinal relationship; for nominal categories, however, the integers imply an order that does not exist. Label Encoding is widely used to encode target class labels in classification tasks, and for ordinal features where category order is significant and computational efficiency is required.
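The sketch below assigns codes in sorted order, the same convention scikit-learn's `LabelEncoder` uses; the function names `label_encode` and `label_decode` are hypothetical. It also shows that alphabetical assignment does not follow the natural order small < medium < large.

```python
from typing import Dict, List, Sequence, Tuple


def label_encode(values: Sequence[str]) -> Tuple[List[int], List[str]]:
    """Map each distinct category to an integer, assigned in sorted (alphabetical) order."""
    classes = sorted(set(values))
    code: Dict[str, int] = {c: i for i, c in enumerate(classes)}
    return [code[v] for v in values], classes


def label_decode(codes: Sequence[int], classes: List[str]) -> List[str]:
    """Invert the encoding: integer code i maps back to classes[i]."""
    return [classes[i] for i in codes]


sizes = ["medium", "small", "large", "small"]
codes, classes = label_encode(sizes)
print(classes)  # ['large', 'medium', 'small'] (alphabetical, not small < medium < large)
print(codes)    # [1, 2, 0, 2]
```

The encoded column stays a single integer per row, and the stored `classes` list is all that is needed to decode it.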

Key Differences: One-hot vs Label Encoding

One-hot encoding converts categorical variables into binary vectors, creating a separate column for each category, which prevents algorithms from misinterpreting categorical data as ordinal. Label encoding assigns each category a unique integer value, which is efficient but may introduce unintended ordinal relationships in machine learning models. The choice between one-hot and label encoding depends on the algorithm and the nature of the categorical variables, with one-hot preferred for nominal categories and label encoding suitable for ordinal categories.

Impact of Encoding on Machine Learning Models

One-hot encoding transforms categorical variables into binary vectors, preventing models from assuming ordinal relationships and improving accuracy in algorithms like logistic regression or neural networks. Label encoding assigns integer values to categories, which can mislead models into interpreting numerical order where none exists, potentially reducing model performance. Choosing the appropriate encoding method directly impacts the predictive power and interpretability of machine learning models.
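One way to see the difference is to compare the distances each encoding places between categories, since linear and distance-based models effectively work with these numeric gaps. This sketch assumes a hypothetical `color` feature with three values and label codes assigned alphabetically.

```python
import math
from itertools import combinations
from typing import Dict, List

categories = ["blue", "green", "red"]  # hypothetical nominal feature

# Label encoding: integer codes in sorted order -> blue=0, green=1, red=2
label: Dict[str, int] = {c: i for i, c in enumerate(categories)}

# One-hot encoding: one binary column per category
onehot: Dict[str, List[int]] = {
    c: [1 if c == other else 0 for other in categories] for c in categories
}


def label_distance(a: str, b: str) -> int:
    """Absolute gap between two label codes."""
    return abs(label[a] - label[b])


def onehot_distance(a: str, b: str) -> float:
    """Euclidean distance between two one-hot vectors."""
    return math.dist(onehot[a], onehot[b])


for a, b in combinations(categories, 2):
    print(f"{a}-{b}: label={label_distance(a, b)}, one-hot={onehot_distance(a, b):.3f}")
```

Under label encoding, blue and red end up twice as far apart as blue and green, an artifact of the arbitrary codes; under one-hot encoding every pair of distinct categories is the same distance apart (√2).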

When to Use One-hot Encoding

One-hot encoding is ideal for categorical variables with no inherent ordinal relationship, such as color or gender, as it prevents misleading the model with artificial hierarchies. It works best when the number of unique categories is relatively small to avoid high dimensionality and sparse matrices. This encoding method enhances performance for models sensitive to numerical input distinctions, including logistic regression and neural networks.
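Because one-hot encoding adds one column per distinct value, counting distinct values per feature shows how wide the encoded data will become. The sketch uses a hypothetical dataset and a hypothetical cutoff `MAX_ONE_HOT_COLUMNS = 20`; the threshold is an illustrative assumption, not a standard value.

```python
from typing import Dict, List, Sequence

MAX_ONE_HOT_COLUMNS = 20  # hypothetical cutoff chosen for illustration, not a standard value


def one_hot_width(columns: Dict[str, Sequence[str]]) -> Dict[str, int]:
    """Number of binary columns one-hot encoding would create per categorical column."""
    return {name: len(set(values)) for name, values in columns.items()}


def high_cardinality(columns: Dict[str, Sequence[str]], limit: int = MAX_ONE_HOT_COLUMNS) -> List[str]:
    """Columns whose one-hot expansion would exceed the (hypothetical) limit."""
    return [name for name, width in one_hot_width(columns).items() if width > limit]


n_rows = 1000
data = {
    "color": [("red", "green", "blue")[i % 3] for i in range(n_rows)],  # low cardinality
    "customer_id": [f"C{i:04d}" for i in range(n_rows)],               # one value per row
}
print(one_hot_width(data))     # {'color': 3, 'customer_id': 1000}
print(high_cardinality(data))  # ['customer_id']
```

The `color` feature adds only three columns, while an identifier-like feature would add one column per row.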

When to Use Label Encoding

Label Encoding is ideal for ordinal categorical variables whose categories have a meaningful order, such as education levels or ratings. When the integer mapping is defined to follow that order (rather than, for example, alphabetical order), it preserves the rank and lets algorithms exploit the inherent hierarchy. It suits tree-based models and algorithms that make use of numerical order, and it avoids the dimensionality expansion of one-hot encoding.
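A sketch of order-preserving label encoding, assuming a hypothetical four-level education feature: the mapping comes from an explicitly supplied order rather than from sorting the category names. The names `EDUCATION_ORDER` and `ordinal_encode` are illustrative; scikit-learn's `OrdinalEncoder` supports the same idea through its `categories` parameter.

```python
from typing import Dict, List, Sequence

# Explicit order, lowest to highest (hypothetical education levels)
EDUCATION_ORDER = ["high school", "bachelor's", "master's", "doctorate"]


def ordinal_encode(values: Sequence[str], order: List[str]) -> List[int]:
    """Map each category to its position in `order`, so integer comparisons follow the ranking."""
    rank: Dict[str, int] = {level: i for i, level in enumerate(order)}
    return [rank[v] for v in values]


levels = ["master's", "high school", "doctorate", "bachelor's"]
print(ordinal_encode(levels, EDUCATION_ORDER))  # [2, 0, 3, 1]
```

Sorting the names alphabetically would instead give bachelor's = 0, doctorate = 1, high school = 2, master's = 3, which breaks the ranking.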

Advantages and Disadvantages of One-hot Encoding

One-hot encoding offers the advantage of representing categorical variables without implying any ordinal relationship, making it ideal for nominal data and ensuring algorithms interpret categories correctly. This encoding method can lead to high-dimensional sparse matrices, which may increase computational complexity and memory usage, especially with features containing many categories. One-hot encoding's binary nature facilitates compatibility with many machine learning models, but it can suffer from the curse of dimensionality, potentially degrading performance in large-scale datasets.
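The memory cost depends heavily on how the one-hot matrix is stored. The sketch compares a dense list-of-lists layout with a coordinate-style sparse layout that records only the position of each 1, for a hypothetical integer-coded column of 2,000 rows and 200 categories. Sparse formats such as those in `scipy.sparse` follow the same principle, and scikit-learn's `OneHotEncoder` returns a SciPy sparse matrix by default.

```python
from typing import List, Sequence, Tuple


def one_hot_dense(codes: Sequence[int], n_categories: int) -> List[List[int]]:
    """Dense one-hot: every row stores n_categories values, all but one of them zero."""
    return [[1 if j == c else 0 for j in range(n_categories)] for c in codes]


def one_hot_sparse(codes: Sequence[int]) -> List[Tuple[int, int]]:
    """Sparse (coordinate-style) one-hot: store only the (row, column) position of each 1."""
    return [(row, c) for row, c in enumerate(codes)]


n_rows, n_categories = 2000, 200
codes = [i % n_categories for i in range(n_rows)]  # hypothetical integer-coded column

dense = one_hot_dense(codes, n_categories)
sparse = one_hot_sparse(codes)
print(sum(len(r) for r in dense))  # 400000 stored values
print(len(sparse))                 # 2000 stored positions
```

The dense layout stores rows × categories values, whereas the sparse layout stores one entry per row regardless of the number of categories; the dimensionality seen by the model is the same in both cases.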

Advantages and Disadvantages of Label Encoding

Label Encoding converts categorical data into numerical form by assigning a unique integer to each category, giving a simple, memory-efficient representation suitable for ordinal data. Its advantage is that, when the integers follow the categories' rank, algorithms can interpret ranked relationships directly. However, applied to nominal data it introduces an unintended ordinal relationship, which can lead to inaccurate model interpretations and biased predictions.

Best Practices for Encoding Categorical Variables in AI

One-hot encoding transforms categorical variables into binary vectors and is ideal for nominal data without intrinsic order, because it prevents AI models from inferring a spurious ranking. Label encoding assigns unique integers to categories and is suitable for ordinal variables where order matters, but it may mislead algorithms when applied to non-ordinal data. Best practice is to choose the encoding method based on the categorical variable's nature and to keep the encoding consistent across training and test datasets, learning the category mapping from the training data and reusing it, to optimize AI model performance and interpretability.
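The consistency point can be sketched as an encoder that learns its categories from the training data only and reuses that fixed column order when transforming test data. `OneHotEncoderSketch` is a hypothetical class, not a library API; mapping unseen test categories to an all-zero row is one possible policy, similar to scikit-learn's `OneHotEncoder(handle_unknown="ignore")`.

```python
from typing import Dict, List, Optional, Sequence


class OneHotEncoderSketch:
    """Hypothetical minimal encoder: learn categories on training data, reuse them later."""

    def __init__(self) -> None:
        self.categories: Optional[List[str]] = None

    def fit(self, values: Sequence[str]) -> "OneHotEncoderSketch":
        # Column order is fixed once, from the training data only
        self.categories = sorted(set(values))
        return self

    def transform(self, values: Sequence[str]) -> List[List[int]]:
        if self.categories is None:
            raise RuntimeError("call fit() before transform()")
        index: Dict[str, int] = {c: i for i, c in enumerate(self.categories)}
        rows: List[List[int]] = []
        for v in values:
            row = [0] * len(self.categories)
            if v in index:  # unseen categories become an all-zero row
                row[index[v]] = 1
            rows.append(row)
        return rows


train = ["red", "green", "blue"]
test = ["green", "purple"]  # "purple" never appeared in training

enc = OneHotEncoderSketch().fit(train)
print(enc.categories)       # ['blue', 'green', 'red']
print(enc.transform(test))  # [[0, 1, 0], [0, 0, 0]]
```

Because the test data is transformed with the mapping learned from training, both sets have the same columns in the same order, even when the test data contains a new category.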

One-hot Encoding vs Label Encoding Infographic

techiny.com