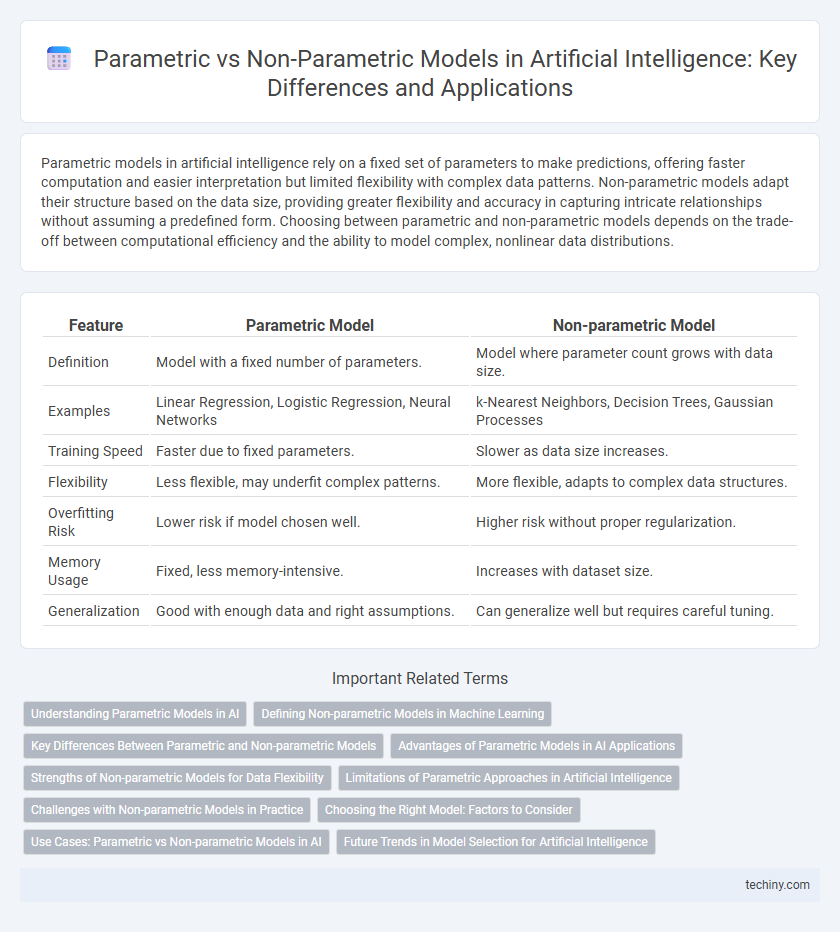

Parametric models in artificial intelligence rely on a fixed set of parameters to make predictions, offering faster computation and easier interpretation but limited flexibility with complex data patterns. Non-parametric models let their complexity grow with the size of the data, giving them the flexibility to capture intricate relationships without assuming a predefined form. Choosing between parametric and non-parametric models depends on the trade-off between computational efficiency and the ability to model complex, nonlinear data distributions.

Table of Comparison

| Feature | Parametric Model | Non-parametric Model |
|---|---|---|
| Definition | Model with a fixed number of parameters. | Model whose effective number of parameters grows with data size. |
| Examples | Linear Regression, Logistic Regression, Neural Networks | k-Nearest Neighbors, Decision Trees, Gaussian Processes |
| Speed | Usually fast; prediction cost does not depend on training-set size. | Slows as data grows; e.g., k-NN compares each query with the stored training points. |
| Flexibility | Less flexible, may underfit complex patterns. | More flexible, adapts to complex data structures. |
| Overfitting Risk | Lower, if the model is chosen well. | Higher without proper regularization. |
| Memory Usage | Fixed, less memory-intensive. | Increases with dataset size. |
| Generalization | Good with enough data and the right assumptions. | Can generalize well but requires careful tuning. |

Understanding Parametric Models in AI

Parametric models in artificial intelligence rely on a fixed number of parameters to represent data patterns, enabling efficient learning and inference. These models, such as linear regression and neural networks, assume a specific functional form and capture relationships through parameter estimation, which simplifies computation and keeps memory requirements constant regardless of dataset size. Their effectiveness depends on specifying the model structure correctly; when the true relationship departs from the assumed form, their rigidity can lead to underfitting.
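As a minimal sketch of what a "fixed number of parameters" means, the Python below fits simple linear regression by ordinary least squares. The function names `fit_linear` and `predict_linear` are illustrative, not from any library; the point is that the fitted model is always just a slope and an intercept, no matter how many points go in.

```python
from typing import List, Tuple

# Hypothetical helper names; a sketch, not a library API.

def fit_linear(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = w*x + b; returns (w, b).

    However many points are passed in, the fitted model is two numbers.
    """
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("xs must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    w = sxy / sxx
    b = mean_y - w * mean_x
    return w, b


def predict_linear(params: Tuple[float, float], x: float) -> float:
    # Prediction uses only the two stored parameters, never the training data.
    w, b = params
    return w * x + b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # exactly y = 2x + 1
params = fit_linear(xs, ys)
print(params)  # (2.0, 1.0)
```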

Defining Non-parametric Models in Machine Learning

Non-parametric models in machine learning do not assume a fixed form or a predetermined number of parameters, allowing them to adapt flexibly to the complexity of the data. These models, such as k-nearest neighbors, decision trees, and Gaussian processes, grow in complexity with the size of the dataset, enabling better representation of intricate patterns. Non-parametric approaches excel when the underlying data distribution is unknown or highly variable and, given enough data, often outperform parametric models at capturing nonlinear relationships.
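For contrast, here is a minimal k-nearest-neighbors regressor sketch. The class name `KNNRegressor` and its methods are hypothetical; the code only shows that "fitting" amounts to storing the training set, so the model's state grows with the data and every prediction consults all of it.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical class name; a sketch of k-NN regression, not a library API.
Point = Tuple[Sequence[float], float]  # (features, target)


class KNNRegressor:
    """k-nearest-neighbors regression: training just stores the data."""

    def __init__(self, k: int = 3) -> None:
        if k < 1:
            raise ValueError("k must be >= 1")
        self.k = k
        self.data: List[Point] = []

    def fit(self, xs: List[Sequence[float]], ys: List[float]) -> "KNNRegressor":
        # The model's state *is* the training set, so it grows with n.
        self.data = list(zip(xs, ys))
        return self

    def predict(self, x: Sequence[float]) -> float:
        if not self.data:
            raise ValueError("call fit() first")
        # Sort every stored point by Euclidean distance to the query.
        by_dist = sorted(self.data, key=lambda p: math.dist(p[0], x))
        nearest = by_dist[: self.k]
        # Average the targets of the k closest points.
        return sum(y for _, y in nearest) / len(nearest)


model = KNNRegressor(k=3).fit(
    [[0.0], [1.0], [2.0], [10.0], [11.0], [12.0]],
    [0.0, 0.0, 0.0, 5.0, 5.0, 5.0],
)
print(len(model.data), model.predict([1.5]), model.predict([10.5]))  # 6 0.0 5.0
```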

Key Differences Between Parametric and Non-parametric Models

Parametric models rely on a fixed number of parameters and assume a specific functional form, enabling fast prediction but potentially limiting flexibility in capturing complex data patterns. Non-parametric models do not assume a predetermined form and adapt their complexity to the dataset size, offering greater flexibility at the cost of increased computational resources. The key differences lie in how model complexity scales with data, in interpretability, and in behavior on high-dimensional data: parametric models excel in simplicity and speed, while non-parametric models thrive in adaptability to diverse data distributions but need more data as the number of dimensions grows.
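One way to see the complexity-scaling difference is density estimation, a setting the text does not discuss but that illustrates it cleanly: a parametric approach fits a single Gaussian (two numbers), while a Gaussian kernel density estimate, a standard non-parametric alternative, keeps every sample. The sketch below assumes a toy bimodal dataset and an arbitrary bandwidth of 0.5; the helper names are hypothetical.

```python
import math
import statistics
from typing import Callable, List, Tuple

# Hypothetical helper names; toy data and bandwidth chosen for illustration.
Density = Callable[[float], float]


def fit_gaussian(data: List[float]) -> Tuple[Density, int]:
    """Parametric: assume a normal distribution and keep only (mean, stdev)."""
    mu = statistics.fmean(data)
    sigma = statistics.pstdev(data)
    if sigma == 0:
        raise ValueError("data must not be constant")

    def pdf(x: float) -> float:
        z = (x - mu) / sigma
        return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))

    return pdf, 2  # stored state: two numbers, whatever len(data) is


def fit_kde(data: List[float], bandwidth: float = 0.5) -> Tuple[Density, int]:
    """Non-parametric: Gaussian kernel density estimate over every sample."""
    if bandwidth <= 0 or not data:
        raise ValueError("need data and a positive bandwidth")
    points = list(data)
    norm = len(points) * bandwidth * math.sqrt(2 * math.pi)

    def pdf(x: float) -> float:
        return sum(math.exp(-0.5 * ((x - p) / bandwidth) ** 2) for p in points) / norm

    return pdf, len(points)  # stored state grows with the data


# Bimodal sample: two tight clusters around -3 and +3.
data = [c + 0.1 * i for c in (-3.0, 3.0) for i in range(-5, 6)]
gauss_pdf, gauss_size = fit_gaussian(data)
kde_pdf, kde_size = fit_kde(data)
print(gauss_size, kde_size)
print(gauss_pdf(0.0), gauss_pdf(3.0), kde_pdf(0.0), kde_pdf(3.0))
```

On this data the single Gaussian puts its peak at 0, between the clusters where there are no samples, while the kernel estimate peaks at the clusters; the price is that the kernel estimate's state and per-query cost grow with the sample count.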

Advantages of Parametric Models in AI Applications

Parametric models in artificial intelligence offer clear advantages such as reduced computational complexity and faster training times due to their fixed number of parameters. They are easier to interpret and simpler to deploy in real-time applications, making them well suited to scenarios with limited data or resource constraints. Their compact parameter representation also reduces the risk of overfitting, which helps them generalize to unseen data.
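To make the interpretability and deployment points concrete, here is a minimal sketch of logistic regression, one of the parametric models in the comparison table, trained by batch gradient descent on a toy one-feature dataset. The names `sigmoid` and `fit_logistic`, the learning rate of 0.1, and the 2000 epochs are illustrative assumptions, not tuned values.

```python
import math
from typing import List, Tuple

# Hypothetical helper names; learning rate and epochs are illustrative.


def sigmoid(z: float) -> float:
    # Numerically stable logistic function (avoids overflow in math.exp).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(xs: List[float], ys: List[int],
                 lr: float = 0.1, epochs: int = 2000) -> Tuple[float, float]:
    """Fit p(y=1 | x) = sigmoid(w*x + b) by batch gradient descent on mean log-loss."""
    w = b = 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # gradient of log-loss w.r.t. the logit
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


xs = [-4.0, -3.0, -2.0, -1.0, 1.0, 2.0, 3.0, 4.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = fit_logistic(xs, ys)

# Prediction is one multiply-add plus a sigmoid, independent of training-set size.
p_new = sigmoid(w * 2.5 + b)
# exp(w) is the factor by which the odds of y=1 change per unit increase in x.
odds_ratio = math.exp(w)
print(w, b, p_new, odds_ratio)
```

Because this toy data is perfectly separable, the likelihood keeps improving as `w` grows, so the fixed epoch count effectively acts as early stopping.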

Strengths of Non-parametric Models for Data Flexibility

Non-parametric models excel at handling complex, nonlinear data because they do not assume a fixed form for the underlying function, which makes them adaptable across diverse applications. They accommodate varying data structures and distributions and perform robustly even with limited prior knowledge about the data. This flexibility makes them particularly effective in dynamic environments where data patterns evolve over time, since instance-based methods such as k-nearest neighbors can incorporate new observations simply by storing them.
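The sketch below uses Nadaraya-Watson kernel regression, a non-parametric smoother chosen here for illustration (it is not named in the text), to fit a sine curve without being told its form. The class name, the Gaussian kernel, and the bandwidth of 0.3 are assumptions; note that adding an observation is just an append, with no retraining step.

```python
import math
from typing import List

# Hypothetical class name; Gaussian kernel and bandwidth are illustrative choices.


class KernelRegressor:
    """Nadaraya-Watson regression with a Gaussian kernel.

    No functional form is assumed: each prediction is a distance-weighted
    average of all stored targets.
    """

    def __init__(self, bandwidth: float = 0.3) -> None:
        if bandwidth <= 0:
            raise ValueError("bandwidth must be positive")
        self.bandwidth = bandwidth
        self.xs: List[float] = []
        self.ys: List[float] = []

    def add(self, x: float, y: float) -> None:
        # Incorporating new data needs no retraining, only storage.
        self.xs.append(x)
        self.ys.append(y)

    def predict(self, x: float) -> float:
        if not self.xs:
            raise ValueError("no data stored yet")
        weights = [math.exp(-0.5 * ((x - xi) / self.bandwidth) ** 2) for xi in self.xs]
        total = sum(weights)
        if total == 0.0:
            raise ValueError("query too far from all stored points for this bandwidth")
        return sum(w * yi for w, yi in zip(weights, self.ys)) / total


model = KernelRegressor(bandwidth=0.3)
for i in range(63):  # x from 0.0 to 6.2, roughly one period of sin
    x = i * 0.1
    model.add(x, math.sin(x))

print(model.predict(math.pi / 2), model.predict(math.pi))
```

Smoothing flattens sharp peaks slightly (the estimate at the crest lands a little below 1), a bias that shrinks with a smaller bandwidth at the cost of noisier fits.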

Limitations of Parametric Approaches in Artificial Intelligence

Parametric models in artificial intelligence rely on a fixed number of parameters, which limits their flexibility to capture complex data patterns and can cause underfitting in non-linear problems. These models assume a predefined functional form, which can restrict their ability to adapt to the diverse and evolving datasets common in AI tasks. This rigidity makes it hard for them to cope with real-world variability, which is where non-parametric models can be a better fit.

Challenges with Non-parametric Models in Practice

Non-parametric models often face scalability challenges because many of them, such as k-nearest neighbors and Gaussian processes, retain the training data, so computational cost and prediction time grow with dataset size. Memory becomes difficult to manage as the stored dataset grows, causing inefficiencies in real-time applications. Furthermore, non-parametric approaches suffer from the curse of dimensionality: in high-dimensional spaces, distances between points become less informative and overfitting becomes more likely, complicating generalization and performance optimization.
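The curse-of-dimensionality point can be checked numerically. The sketch below is a toy experiment with uniformly random points; the function name `distance_contrast` and its parameters are illustrative. It measures the relative contrast (max - min) / min between a query's farthest and nearest neighbor. As the dimension grows the contrast shrinks, so "nearest" carries less information, while each query still costs a full pass over the stored points.

```python
import math
import random

# Hypothetical function name; a toy experiment with uniform random data.


def distance_contrast(dim: int, n_points: int = 200, seed: int = 0) -> float:
    """(max - min) / min distance from a random query to n random points in [0, 1]^dim."""
    rng = random.Random(seed)
    points = [[rng.random() for _ in range(dim)] for _ in range(n_points)]
    query = [rng.random() for _ in range(dim)]
    # One full pass over the stored data per query: O(n_points * dim) work.
    dists = [math.dist(query, p) for p in points]
    nearest, farthest = min(dists), max(dists)
    return (farthest - nearest) / nearest


for d in (2, 10, 100, 1000):
    print(d, round(distance_contrast(d), 3))
```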

Choosing the Right Model: Factors to Consider

Choosing between parametric and non-parametric models depends on dataset size, complexity, and prior knowledge about the data distribution. Parametric models excel with smaller datasets and when their structural assumptions are justified, offering faster computation and simpler interpretation. Non-parametric models adapt better to complex, nonlinear data without assuming a fixed form, providing greater flexibility at the cost of increased computational resources, a higher risk of overfitting, and a need for more data as dimensionality grows.

Use Cases: Parametric vs Non-parametric Models in AI

Parametric models in AI, such as linear regression and neural networks, excel when a chosen functional form can capture the patterns in the data, enabling efficient prediction once trained. Non-parametric models like k-nearest neighbors and Gaussian processes adapt to data complexity without assuming a fixed form, making them a good fit for tasks requiring high flexibility, such as anomaly detection, provided the dataset is small enough to keep their prediction cost manageable. Choosing between parametric and non-parametric approaches depends on the trade-off between interpretability, computational resources, and the nature of the data distribution.
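As an example of the anomaly-detection use case, one simple non-parametric score is the distance from each point to its k-th nearest neighbor: points far from everything else score high. The sketch below is a brute-force version, quadratic in the number of points, with a toy dataset and a hypothetical function name; larger datasets would usually call for a spatial index or approximate search.

```python
import math
from typing import List, Sequence

# Hypothetical function name; brute-force k-th-nearest-neighbor anomaly score.


def knn_anomaly_scores(points: List[Sequence[float]], k: int = 3) -> List[float]:
    """Score each point by the distance to its k-th nearest *other* point."""
    if not 1 <= k < len(points):
        raise ValueError("need 1 <= k < number of points")
    scores = []
    for i, p in enumerate(points):
        # Distances to every other stored point, sorted ascending.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(dists[k - 1])
    return scores


points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1), (0.05, 0.05), (5.0, 5.0)]
scores = knn_anomaly_scores(points, k=2)
most_anomalous = max(range(len(points)), key=scores.__getitem__)
print(most_anomalous, points[most_anomalous])
```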

Future Trends in Model Selection for Artificial Intelligence

Future trends in model selection for Artificial Intelligence emphasize adaptive hybrid approaches that combine parametric models' efficiency with non-parametric models' flexibility. Advances in automated machine learning (AutoML) and neural architecture search enable dynamic model choice tailored to specific dataset characteristics, enhancing accuracy and computational resource management. The integration of meta-learning frameworks further accelerates this trend by optimizing model selection processes based on prior experience and real-time feedback.

Parametric Model vs Non-parametric Model Infographic

techiny.com
