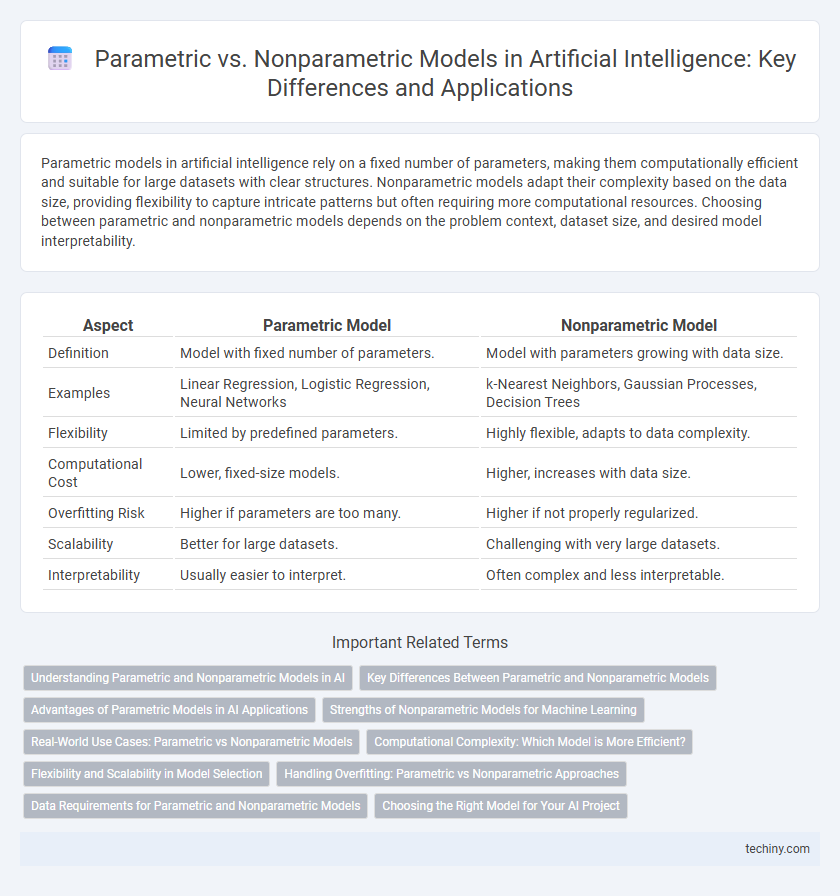

Parametric models in artificial intelligence rely on a fixed number of parameters, making them computationally efficient and suitable for large datasets with clear structures. Nonparametric models adapt their complexity based on the data size, providing flexibility to capture intricate patterns but often requiring more computational resources. Choosing between parametric and nonparametric models depends on the problem context, dataset size, and desired model interpretability.

Table of Comparison

| Aspect | Parametric Model | Nonparametric Model |
|---|---|---|
| Definition | Model with a fixed number of parameters. | Model whose number of parameters grows with the data size. |
| Examples | Linear Regression, Logistic Regression, Neural Networks | k-Nearest Neighbors, Gaussian Processes, Decision Trees |
| Flexibility | Limited by the assumed functional form. | Highly flexible; adapts to data complexity. |
| Computational Cost | Lower; model size is fixed. | Higher; grows with data size. |
| Overfitting Risk | Higher when there are too many parameters for the data. | Higher when not properly regularized. |
| Scalability | Better for large datasets. | Challenging with very large datasets. |
| Interpretability | Usually easier to interpret. | Often complex and less interpretable. |

Understanding Parametric and Nonparametric Models in AI

Parametric models in AI have a fixed number of parameters, set by the model's form before training, which enables efficient computation and interpretability but can limit flexibility in capturing complex patterns. Nonparametric models let their effective number of parameters grow with the data, offering greater adaptability for irregular datasets but often requiring more computational resources. Understanding the trade-offs between parametric models such as linear regression and nonparametric models such as k-nearest neighbors is crucial for selecting algorithms suited to a specific AI application and its data.
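
To make the fixed-versus-growing distinction concrete, here is a minimal pure-Python sketch contrasting linear regression and k-nearest neighbors. It assumes one-dimensional inputs and a noise-free toy target y = 2x + 1; the function names (`fit_linear`, `fit_knn` and so on) are hypothetical helpers, not a library API. The linear model keeps two numbers however much data it sees, while k-NN keeps every training point.

```python
from statistics import mean


def fit_linear(xs, ys):
    """Least-squares fit of y = a*x + b: always exactly two parameters."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    a = sxy / sxx
    return {"a": a, "b": y_bar - a * x_bar}


def predict_linear(model, x):
    return model["a"] * x + model["b"]


def fit_knn(xs, ys, k=3):
    """k-NN "training" just stores every example, so the model grows with n."""
    return {"k": k, "points": list(zip(xs, ys))}


def predict_knn(model, x):
    # Average the targets of the k stored points closest to x.
    nearest = sorted(model["points"], key=lambda p: abs(p[0] - x))[: model["k"]]
    return mean(y for _, y in nearest)


for n in (10, 100, 1000):
    xs = [i / n for i in range(n)]
    ys = [2 * x + 1 for x in xs]
    lin, knn = fit_linear(xs, ys), fit_knn(xs, ys)
    print(f"n={n}: linear stores {len(lin)} values, k-NN stores {len(knn['points'])} points")
```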

Key Differences Between Parametric and Nonparametric Models

Parametric models in artificial intelligence rely on a fixed number of parameters to define their structure, enabling faster training and simpler interpretation but assuming a predefined functional form. Nonparametric models, by contrast, adapt their complexity to the size and nature of the data, offering greater flexibility without assuming a fixed model structure. The two also respond differently to data volume: parametric models can perform well on smaller datasets, while nonparametric models capture increasingly complex patterns as data volume grows.
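
The idea of a "predefined functional form" can be illustrated with density estimation: a Gaussian fit (parametric, two numbers) versus a kernel density estimate (nonparametric, one kernel per observation). This is a sketch under stated assumptions: the bimodal sample and the bandwidth of 0.5 are hypothetical choices, and the helper names are illustrative.

```python
import math
from statistics import mean, pvariance


def fit_gaussian(data):
    """Parametric: assumes a single bell-shaped curve, summarised by mean and variance."""
    return mean(data), pvariance(data)


def gaussian_pdf(x, mu, var):
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def kde_pdf(x, data, bandwidth=0.5):
    """Nonparametric: average of one Gaussian bump centred on each stored observation."""
    return mean(gaussian_pdf(x, xi, bandwidth ** 2) for xi in data)


# Hypothetical bimodal sample: two clusters around -2 and +2.
data = [-2.2, -2.0, -1.9, -2.1, 1.9, 2.0, 2.1, 2.2]
mu, var = fit_gaussian(data)
for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  gaussian={gaussian_pdf(x, mu, var):.3f}  kde={kde_pdf(x, data):.3f}")
```

Because the Gaussian assumes one peak, it puts its highest density at 0, where there is no data; the kernel density estimate follows the two clusters. The price is that `kde_pdf` must visit every stored observation on each call.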

Advantages of Parametric Models in AI Applications

Parametric models in artificial intelligence offer significant advantages, including faster training and lower computational requirements, thanks to their fixed number of parameters. When their assumed form suits the problem, they work well with limited data because their strong inductive biases improve generalization and reduce overfitting. Their simplicity also makes them easier to interpret and deploy in real-time AI applications than their nonparametric counterparts.
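
As a sketch of why parametric models are cheap to deploy, the snippet below trains logistic regression with plain batch gradient descent. The toy data, learning rate, and epoch count are assumptions chosen for illustration. Once trained, the whole model is the pair `(w, b)`, and a prediction costs one multiply-add and a sigmoid regardless of how many examples were used in training.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs, ys, lr=0.5, epochs=2000):
    """Batch gradient descent on the mean log-loss for p(y=1|x) = sigmoid(w*x + b)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # derivative of the log-loss w.r.t. the logit
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def predict_proba(params, x):
    w, b = params
    return sigmoid(w * x + b)  # constant cost per prediction, independent of training-set size


# Hypothetical 1-D data with overlapping classes (so the weights stay finite).
xs = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
ys = [0, 0, 0, 1, 0, 1, 1, 1]
params = train_logistic(xs, ys)
print(params, predict_proba(params, 1.0), predict_proba(params, 4.0))
```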

Strengths of Nonparametric Models for Machine Learning

Nonparametric models excel in machine learning by adapting flexibly to complex patterns without assuming a fixed functional form, which lets them handle diverse data effectively, although distance-based methods such as k-nearest neighbors degrade as the number of input dimensions grows. Because they require fewer assumptions, they are more robust to model misspecification, which can improve prediction accuracy on unseen data. These models, including decision trees, kernel methods, and nearest neighbors, are particularly strong when the data distribution is unknown or changes over time.
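
The flexibility claim can be checked on a toy problem. In this minimal sketch (assumptions: a noise-free y = sin(x) target on a regular grid, k = 5, and hypothetical helper names), a straight line cannot bend to follow the curve, while k-nearest neighbors tracks it closely because it imposes no shape on the relationship.

```python
import math
from statistics import mean


def fit_line(xs, ys):
    """Least-squares line, returned as a prediction function."""
    x_bar, y_bar = mean(xs), mean(ys)
    a = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum((x - x_bar) ** 2 for x in xs)
    return lambda x: a * x + (y_bar - a * x_bar)


def fit_knn(xs, ys, k=5):
    """k-NN regression: predict the mean target of the k closest stored points."""
    pts = list(zip(xs, ys))

    def predict(x):
        nearest = sorted(pts, key=lambda p: abs(p[0] - x))[:k]
        return mean(y for _, y in nearest)

    return predict


def mse(model, xs, ys):
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))


# Training data follows y = sin(x), a shape a straight line cannot represent.
train_x = [i * 0.05 for i in range(126)]       # 0 .. 6.25
train_y = [math.sin(x) for x in train_x]
test_x = [0.025 + i * 0.1 for i in range(62)]  # points between the training grid
test_y = [math.sin(x) for x in test_x]

print("linear MSE:", mse(fit_line(train_x, train_y), test_x, test_y))
print("k-NN MSE:  ", mse(fit_knn(train_x, train_y), test_x, test_y))
```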

Real-World Use Cases: Parametric vs Nonparametric Models

Parametric models, such as linear regression and neural networks, excel in real-world applications with a fixed set of input features, and simpler ones such as linear regression suit settings where interpretability is crucial, like credit scoring and medical diagnosis. Nonparametric models, including k-nearest neighbors and Gaussian processes, capture complex patterns without a predefined functional form, which makes them useful for anomaly detection and personalized recommendation systems. Choosing between parametric and nonparametric models depends on data size, computational resources, and the complexity of relationships within the dataset.
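
For the anomaly-detection use case, one common nonparametric approach scores each point by its distance to its k-th nearest neighbor. The sketch below is an illustration, not a production detector: the points, `k = 2`, and the threshold of 1.0 are hypothetical values, and a real system would scale its features and tune the threshold on held-out data. It uses `math.dist`, which requires Python 3.8 or later.

```python
import math


def knn_anomaly_scores(points, k=2):
    """Score each point by the distance to its k-th nearest other point.

    Larger scores mean the point sits far from the rest of the data.
    """
    scores = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(dists[k - 1])
    return scores


# Hypothetical 2-D feature vectors: a tight cluster plus one distant point.
points = [(1.0, 1.0), (1.1, 0.9), (0.9, 1.1), (1.0, 1.2), (1.2, 1.0), (5.0, 5.0)]
scores = knn_anomaly_scores(points)
threshold = 1.0  # hypothetical cut-off
print([p for p, s in zip(points, scores) if s > threshold])
```

No model of "normal" behaviour is fitted here; the stored data itself is the model, which is what makes the approach nonparametric.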

Computational Complexity: Which Model is More Efficient?

Parametric models have a fixed number of parameters, so their model size and per-prediction cost do not depend on dataset size, and their training and inference times are generally lower and more predictable. Nonparametric models, such as k-nearest neighbors or Gaussian processes, scale their complexity with the amount of data: a brute-force k-nearest-neighbor query compares the input against every stored training point, and exact Gaussian process training factorizes an n × n kernel matrix at O(n³) cost. Efficiency therefore depends on the application's size and requirements, with parametric models favored for large-scale problems and nonparametric models offering flexibility at increased computational expense.
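
The cost of an exact Gaussian process comes from its n × n kernel matrix. The pure-Python sketch below assumes a zero prior mean, an RBF kernel with length scale 1.0, and a small noise term of 1e-4 (all illustrative choices) and computes the posterior mean through a Cholesky factorization. Factorizing the matrix takes O(n³) operations and storing it takes O(n²) memory, whereas a fitted linear model stays the same size however many points it was trained on.

```python
import math


def rbf(a, b, length_scale=1.0):
    return math.exp(-((a - b) ** 2) / (2 * length_scale ** 2))


def cholesky(m):
    """Lower-triangular L with L @ L^T == m for a symmetric positive-definite m: O(n^3)."""
    n = len(m)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(m[i][i] - s)
            else:
                L[i][j] = (m[i][j] - s) / L[j][j]
    return L


def cho_solve(L, b):
    """Solve (L L^T) x = b by forward then back substitution: O(n^2)."""
    n = len(L)
    y = [0.0] * n
    for i in range(n):
        y[i] = (b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_fit(xs, ys, noise=1e-4):
    # Building K needs O(n^2) memory; factorizing it is the O(n^3) step.
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    alpha = cho_solve(cholesky(K), ys)
    return xs, alpha


def gp_predict_mean(model, x):
    xs, alpha = model
    return sum(a * rbf(x, xi) for a, xi in zip(alpha, xs))  # O(n) per prediction


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [math.sin(x) for x in xs]
model = gp_fit(xs, ys)
print(gp_predict_mean(model, 1.5), math.sin(1.5))
```

In practice a numerical library would replace the hand-written factorization; the explicit loops here only make the cubic cost visible.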

Flexibility and Scalability in Model Selection

Parametric models assume a fixed number of parameters, offering faster training and lower computational cost but limited flexibility to capture complex data patterns. Nonparametric models adapt their complexity to the dataset size, providing greater flexibility and better performance on large, intricate datasets at the expense of higher computational resources. As a result, nonparametric models face scalability challenges because their complexity grows with the data, whereas parametric models keep a constant model size regardless of data volume.

Handling Overfitting: Parametric vs Nonparametric Approaches

Parametric models handle overfitting by limiting the number of parameters, which constrains model complexity and reduces the risk of capturing noise in the training data. Nonparametric models adapt their complexity based on the dataset size, allowing flexibility but increasing sensitivity to overfitting when sample sizes are small. Regularization techniques and cross-validation are critical in both approaches to balance model fit and generalization in artificial intelligence applications.
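
Cross-validation applies to nonparametric models too. The minimal sketch below uses 5-fold cross-validation to choose the number of neighbors k, which acts as k-NN's regularization knob: k = 1 reproduces the noise, while larger k averages it away. Assumptions: 1-D inputs, interleaved folds, and a hypothetical deterministic ±0.5 alternating perturbation standing in for noise so the output is reproducible; the helper names are illustrative.

```python
from statistics import mean


def knn_predict(train, x, k):
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return mean(y for _, y in nearest)


def cv_error(data, k, folds=5):
    """Mean squared error of k-NN regression under interleaved k-fold cross-validation."""
    errors = []
    for f in range(folds):
        valid = data[f::folds]  # every folds-th point is held out
        train = [p for i, p in enumerate(data) if i % folds != f]
        errors.extend((knn_predict(train, x, k) - y) ** 2 for x, y in valid)
    return mean(errors)


# Linear trend plus an alternating +/-0.5 perturbation (a stand-in for noise).
data = [(i / 10, i / 10 + (0.5 if i % 2 else -0.5)) for i in range(50)]
scores = {k: cv_error(data, k) for k in (1, 3, 5, 9, 15)}
best_k = min(scores, key=scores.get)
print(scores, "best k:", best_k)
```

The same loop works for parametric models: swap `knn_predict` for a regularized fit (for example, ridge regression) and search over its penalty strength instead of k.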

Data Requirements for Parametric and Nonparametric Models

Parametric models in artificial intelligence use a fixed number of parameters and assume a predefined functional form, which lets them work efficiently with smaller datasets. Nonparametric models do not assume a specific form and adapt their complexity to the data, so they typically need larger datasets to achieve accurate results and avoid overfitting. Parametric models therefore generally have lower data requirements but less flexibility, whereas nonparametric models need more data to perform reliably and capture complex patterns.
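
To illustrate the data-requirement trade-off, this sketch fits both kinds of model to an idealised, noise-free linear relationship (y = 2x + 1, an assumption chosen so the parametric model's form is exactly right) at two training-set sizes. With five points the two-parameter line already recovers the relationship, whereas 3-nearest neighbors errs near the edges of the range and needs many more points to close the gap. The helper names are hypothetical.

```python
from statistics import mean


def fit_line(xs, ys):
    x_bar, y_bar = mean(xs), mean(ys)
    a = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum((x - x_bar) ** 2 for x in xs)
    return lambda x: a * x + (y_bar - a * x_bar)


def fit_knn(xs, ys, k=3):
    pts = list(zip(xs, ys))
    # Predict the mean target of the k stored points nearest to x.
    return lambda x: mean(y for _, y in sorted(pts, key=lambda p: abs(p[0] - x))[:k])


def mse(model, xs, ys):
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))


def sample(n):
    """n evenly spaced, noise-free points from y = 2x + 1 on [0, 1] (idealised assumption)."""
    xs = [i / (n - 1) for i in range(n)]
    return xs, [2 * x + 1 for x in xs]


test_x, test_y = sample(21)
for n in (5, 201):
    xs, ys = sample(n)
    print(f"n={n}: linear MSE={mse(fit_line(xs, ys), test_x, test_y):.4f}, "
          f"k-NN MSE={mse(fit_knn(xs, ys), test_x, test_y):.4f}")
```

The advantage holds only because the assumed form matches the data; on a curved target (as in the sine example earlier in this article's discussion of flexibility) the ranking reverses once enough points are available.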

Choosing the Right Model for Your AI Project

Selecting the right AI model depends on the nature and size of your dataset. Parametric models, such as linear regression and neural networks, assume a fixed number of parameters, perform well when their assumed form suits the data, and scale efficiently to large datasets. Nonparametric models, like k-nearest neighbors and Gaussian processes, adapt flexibly to data complexity without a predefined functional form, making them a good fit for irregular relationships when enough data is available and the dataset is small enough for their computational cost to stay manageable. Understanding these differences is crucial for optimizing model accuracy, computational efficiency, and scalability in AI projects.

Parametric Model vs Nonparametric Model Infographic

techiny.com