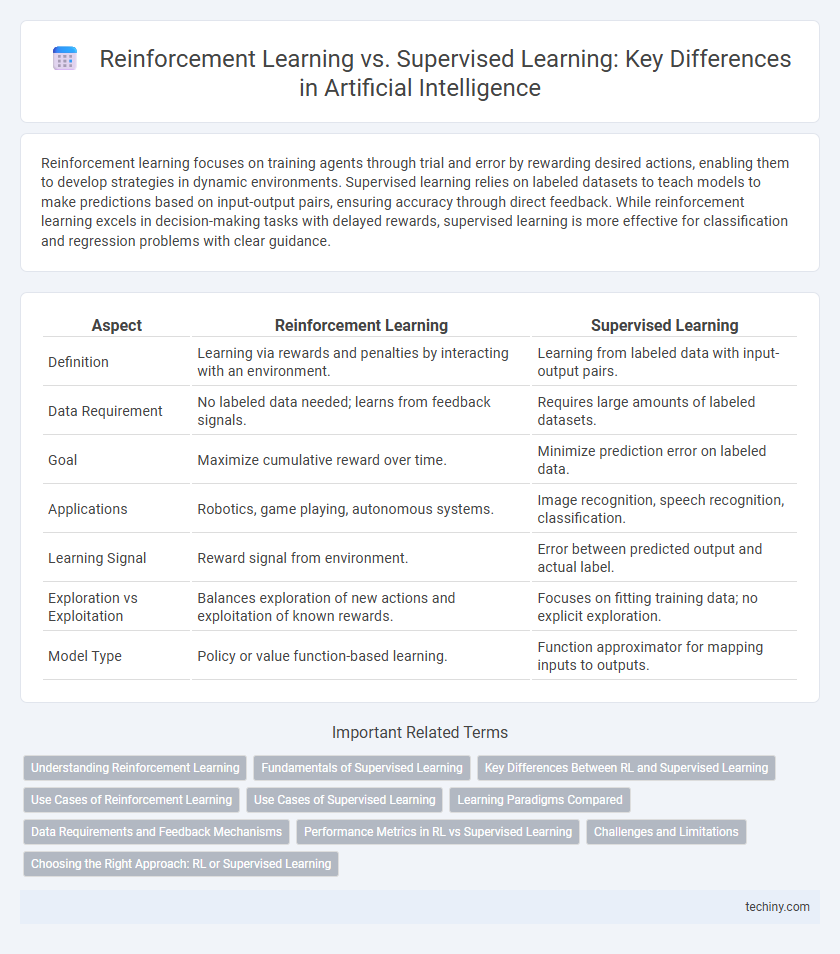

Reinforcement learning trains agents through trial and error by rewarding desired actions, enabling them to develop strategies in dynamic environments. Supervised learning uses labeled datasets to teach models to make predictions from input-output pairs, correcting each prediction directly against its known label. While reinforcement learning excels in decision-making tasks with delayed rewards, supervised learning is more effective for classification and regression problems where labeled examples provide clear guidance.

Table of Comparison

| Aspect | Reinforcement Learning | Supervised Learning |
|---|---|---|
| Definition | Learning via rewards and penalties by interacting with an environment. | Learning from labeled data with input-output pairs. |
| Data Requirement | No labeled data needed; learns from feedback signals. | Requires large amounts of labeled datasets. |
| Goal | Maximize cumulative reward over time. | Minimize prediction error on labeled data. |
| Applications | Robotics, game playing, autonomous systems. | Image recognition, speech recognition, classification. |
| Learning Signal | Reward signal from environment. | Error between predicted output and actual label. |
| Exploration vs Exploitation | Balances exploration of new actions and exploitation of known rewards. | Focuses on fitting training data; no explicit exploration. |
| Model Type | Policy or value function-based learning. | Function approximator for mapping inputs to outputs. |

Understanding Reinforcement Learning

Reinforcement Learning (RL) is a machine learning paradigm where an agent learns optimal actions through trial and error by interacting with an environment, receiving rewards or penalties based on its decisions. Unlike Supervised Learning, which relies on labeled datasets, RL focuses on maximizing cumulative rewards over time without explicit input-output pairs. Key algorithms in RL include Q-Learning, Deep Q-Networks (DQN), and Policy Gradient methods, which enable applications in robotics, gaming, and autonomous systems.
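
To make the trial-and-error loop concrete, here is a minimal sketch of tabular Q-Learning. The environment is a hypothetical five-cell corridor invented for this illustration (it does not come from any library): the agent starts in the leftmost cell, can step left or right, and receives a reward of 1 only when it reaches the rightmost cell. The function name `train_q_learning` and the hyperparameter values are likewise assumptions chosen for readability.

```python
import random


def train_q_learning(n_states=5, episodes=500, alpha=0.1, gamma=0.9,
                     epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor.

    States are 0..n_states-1. Action 0 moves left, action 1 moves right.
    Reaching the rightmost state gives reward 1 and ends the episode.
    """
    rng = random.Random(seed)
    # One row per state, one column per action (left, right).
    q = [[0.0, 0.0] for _ in range(n_states)]

    for _ in range(episodes):
        state = 0
        while state != n_states - 1:
            # Explore with probability epsilon, or when both actions are tied;
            # otherwise exploit the action with the higher estimated value.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1

            # Environment dynamics: the left wall keeps the agent in state 0.
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == n_states - 1 else 0.0

            # Q-learning update: move the estimate toward the reward plus
            # the discounted value of the best action in the next state.
            best_next = max(q[next_state])
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
    return q


q_table = train_q_learning()
# Greedy action for each non-terminal state (1 means "move right").
greedy_policy = [0 if left > right else 1 for left, right in q_table[:-1]]
```

No labeled "correct action" is ever supplied here; the table of action values is shaped only by the reward received at the end of each episode, which is the contrast with supervised learning drawn above.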

Fundamentals of Supervised Learning

Supervised learning involves training a model on labeled data where input-output pairs guide the learning process to minimize prediction errors. Fundamental techniques include regression for continuous outputs and classification for categorical outputs, relying heavily on annotated datasets. This approach requires a comprehensive dataset to enable the model to generalize from examples and accurately predict unseen data.
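
As a small illustration of learning from input-output pairs, the sketch below fits a one-variable linear regression by gradient descent on mean squared error. The function name `fit_linear_regression`, the toy dataset, and the learning rate are assumptions made for this example, not part of any particular library.

```python
def fit_linear_regression(xs, ys, lr=0.05, epochs=2000):
    """Fit y ~ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error on every labeled example.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy labeled dataset generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_linear_regression(xs, ys)
```

Every training step compares predictions against known labels, so the error signal is available immediately for each example. On this toy data the fitted `w` and `b` should end up close to 2 and 1.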

Key Differences Between RL and Supervised Learning

Reinforcement Learning (RL) differs from Supervised Learning by using trial-and-error interactions with the environment to learn optimal policies, whereas Supervised Learning relies on labeled datasets to map inputs to outputs. RL agents receive feedback through rewards or penalties, enabling them to make sequential decisions, while Supervised Learning models minimize prediction errors from fixed training examples. Key distinctions also include RL's focus on long-term cumulative reward optimization and learning from delayed feedback, contrasting with Supervised Learning's immediate error correction based on ground truth labels.
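
The idea of long-term cumulative reward with delayed feedback is commonly formalized as a discounted return, where each future reward is weighted by a discount factor. The sketch below computes it for one episode; the helper name `discounted_returns`, the discount factor of 0.9, and the example reward sequence are illustrative assumptions.

```python
def discounted_returns(rewards, gamma=0.9):
    """Return G_t = r_t + gamma * r_{t+1} + gamma^2 * r_{t+2} + ... for each t."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step reuses the return of the step after it.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# A hypothetical episode in which the only reward arrives at the final step.
episode_rewards = [0.0, 0.0, 0.0, 1.0]
returns = discounted_returns(episode_rewards)
```

Although the first three steps earn no immediate reward, each still receives a nonzero return (roughly 0.729, 0.81, and 0.9), which is how credit for a delayed reward flows back to earlier decisions.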

Use Cases of Reinforcement Learning

Reinforcement Learning excels in dynamic environments requiring sequential decision-making, such as robotics for autonomous navigation, game playing like AlphaGo, and personalized recommendations adapting to user behavior. It is particularly effective for scenarios where explicit labeled data is scarce but feedback signals from the environment guide learning. Industries leveraging Reinforcement Learning include finance for stock trading strategies, healthcare for treatment optimization, and manufacturing for process automation.

Use Cases of Supervised Learning

Supervised learning excels in applications like image recognition, medical diagnosis, and natural language processing by utilizing labeled datasets to train models for accurate predictions. It is widely used in fraud detection systems, where historical transaction data helps classify fraudulent activities. Additionally, supervised learning powers recommendation engines by analyzing user preferences and behavior patterns.

Learning Paradigms Compared

Reinforcement Learning (RL) centers on training agents through trial and error by maximizing cumulative rewards from interactions with an environment, whereas Supervised Learning relies on labeled datasets to guide the model in predicting outcomes. RL optimizes decision-making policies under uncertainty and delayed feedback, contrasting with Supervised Learning's direct mapping between inputs and known outputs. The key difference lies in RL's exploration-exploitation trade-off versus Supervised Learning's focus on minimizing prediction errors using ground truth labels.
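
One simple way to see the exploration-exploitation trade-off is an epsilon-greedy strategy on a multi-armed bandit. The sketch below is a toy setup under stated assumptions: the arm means, the Gaussian noise level, and the function name `run_epsilon_greedy` are all invented for illustration.

```python
import random


def run_epsilon_greedy(true_means, steps=5000, epsilon=0.1, noise=0.5, seed=0):
    """Epsilon-greedy action selection on a hypothetical Gaussian bandit."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    counts = [0] * n_arms        # how often each arm was pulled
    estimates = [0.0] * n_arms   # running average reward per arm

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random arm regardless of current estimates.
            arm = rng.randrange(n_arms)
        else:
            # Exploit: pull the arm with the highest estimated reward.
            arm = max(range(n_arms), key=lambda a: estimates[a])

        reward = rng.gauss(true_means[arm], noise)
        counts[arm] += 1
        # Incremental update of the sample mean for the chosen arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return counts, estimates


counts, estimates = run_epsilon_greedy([0.1, 0.5, 0.9])
```

With `epsilon=0.0` the agent could lock onto whichever arm looked good first; a small amount of random exploration lets it discover that the third arm pays best. A supervised learner has no analogous choice to make, because its training examples are fixed in advance.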

Data Requirements and Feedback Mechanisms

Reinforcement learning needs no labeled data but depends on reward signals from the environment to guide agents through trial-and-error interaction. Supervised learning relies heavily on large, annotated datasets for training, using explicit input-output pairs to minimize prediction errors. Feedback in reinforcement learning is often sparse and delayed, so an agent may only learn many steps later whether an action was useful, whereas supervised learning receives immediate and direct error correction for each prediction.

Performance Metrics in RL vs Supervised Learning

Performance metrics in Reinforcement Learning (RL) primarily revolve around cumulative reward and policy efficiency, measuring how well an agent maximizes long-term gains through trial and error. In Supervised Learning, metrics like accuracy, precision, recall, and F1-score evaluate model predictions against labeled datasets, emphasizing immediate correctness. RL metrics emphasize adaptability and decision quality over time, whereas supervised learning focuses on static prediction accuracy.
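
The supervised metrics named above can be computed directly from predicted and true labels. The following is a minimal sketch for binary labels; the function name `classification_metrics` and the example label lists are assumptions for illustration.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical ground-truth labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

In this example there are three true positives, one false positive, one false negative, and three true negatives, so all four metrics come out to 0.75. An RL evaluation would instead average the total reward collected over many episodes, since there is no per-step label to compare against.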

Challenges and Limitations

Reinforcement learning faces challenges such as extensive exploration requirements, delayed reward feedback, and training instability, which make it difficult to scale to complex environments. Supervised learning depends on large labeled datasets, which can be costly and time-consuming to obtain, limiting its applicability where data labeling is impractical. Both methods can struggle to generalize to unseen data, but reinforcement learning's trial-and-error approach often requires more computational resources and longer training times than supervised learning.

Choosing the Right Approach: RL or Supervised Learning

Choosing between Reinforcement Learning (RL) and Supervised Learning depends on the problem structure and data availability. RL excels in environments requiring sequential decision-making with delayed rewards, such as robotics and game playing, where explicit labeling is impractical. Supervised Learning is ideal for tasks with abundant labeled data and clear input-output mappings, like image classification and fraud detection, where direct error signals typically make training faster and more stable than trial-and-error learning.

Reinforcement Learning vs Supervised Learning Infographic

techiny.com
