Retrieval-based models excel at providing accurate and relevant responses by selecting answers from a fixed dataset, ensuring consistency and reliability in information retrieval. Generative models create novel content by predicting sequences, enabling more flexible and creative interactions but sometimes at the cost of factual accuracy. Choosing between these approaches depends on the need for precise information versus creative synthesis in AI applications.

Table of Comparison

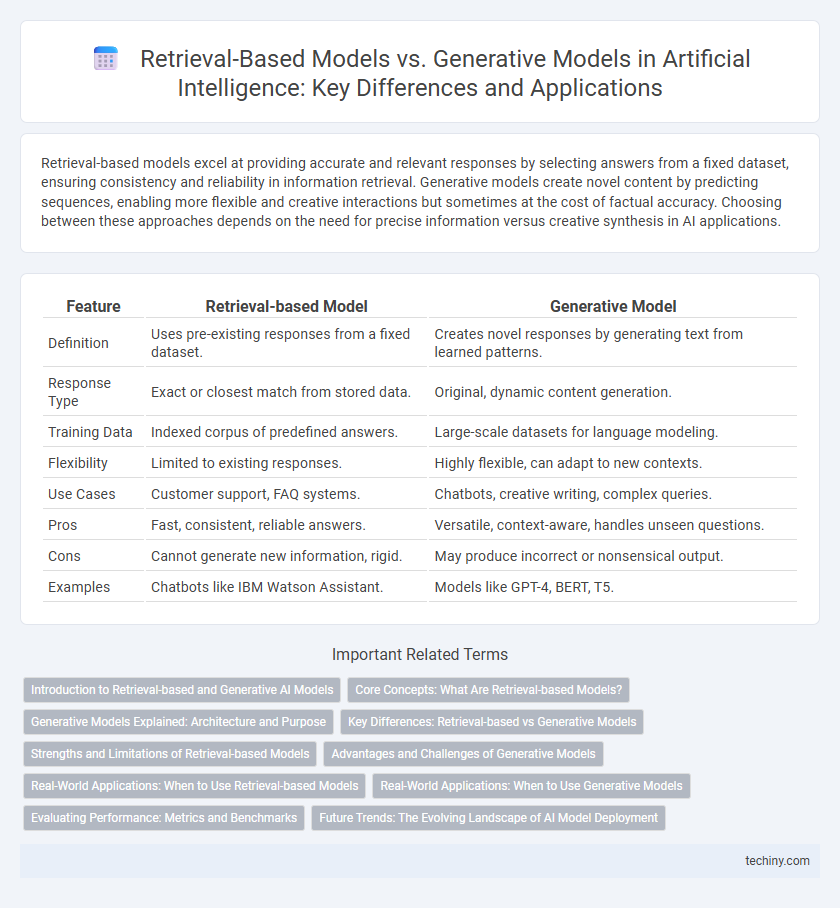

| Feature | Retrieval-based Model | Generative Model |
|---|---|---|
| Definition | Uses pre-existing responses from a fixed dataset. | Creates novel responses by generating text from learned patterns. |
| Response Type | Exact or closest match from stored data. | Original, dynamic content generation. |
| Training Data | Indexed corpus of predefined answers. | Large-scale datasets for language modeling. |
| Flexibility | Limited to existing responses. | Highly flexible, can adapt to new contexts. |
| Use Cases | Customer support, FAQ systems. | Chatbots, creative writing, complex queries. |
| Pros | Fast, consistent, reliable answers. | Versatile, context-aware, handles unseen questions. |
| Cons | Cannot generate new information, rigid. | May produce incorrect or nonsensical output. |
| Examples | Chatbots like IBM Watson Assistant. | Models like GPT-4 and T5. |

Introduction to Retrieval-based and Generative AI Models

Retrieval-based AI models answer queries by selecting relevant information from a predefined dataset, so their accuracy and consistency depend on the content they index. Generative AI models, such as GPT and DALL-E, create new, original content by predicting patterns learned from vast amounts of training data, enabling the generation of text, images, and more. Both approaches are fundamental in artificial intelligence, with retrieval-based models excelling in factual accuracy and generative models offering creative and adaptive outputs.

Core Concepts: What Are Retrieval-based Models?

Retrieval-based models in artificial intelligence select the most relevant response from a predefined dataset or knowledge base based on the input query. They represent the query and each stored document as vectors, using sparse weightings such as TF-IDF or dense vector embeddings, and rank the documents with a similarity measure such as cosine similarity. Their core advantage lies in returning precise, contextually appropriate answers without creating new content, making them ideal for applications requiring factual consistency.
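To make the matching step concrete, here is a minimal sketch of TF-IDF retrieval with cosine similarity, using only the Python standard library. The `FAQ` entries, the function names, and the smoothed IDF formula are assumptions chosen for illustration; a production system would typically rely on a dedicated search or vector library.

```python
import math
from collections import Counter

def tokenize(text):
    # Lowercase, split on whitespace, and strip basic punctuation.
    words = (w.strip(".,?!").lower() for w in text.split())
    return [w for w in words if w]

def vectorize(tokens, idf):
    # Sparse TF-IDF vector; terms that are not in the index are ignored.
    counts = Counter(tokens)
    return {term: count * idf[term] for term, count in counts.items() if term in idf}

def build_index(documents):
    # Smoothed inverse document frequency (an illustrative choice of formula).
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(documents)
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1.0 for t, df in doc_freq.items()}
    vectors = [vectorize(tokens, idf) for tokens in tokenized]
    return idf, vectors

def cosine(a, b):
    # Cosine similarity between two sparse vectors stored as dicts.
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query, documents, idf, vectors):
    # Return the stored document most similar to the query, plus its score.
    query_vec = vectorize(tokenize(query), idf)
    scores = [cosine(query_vec, vec) for vec in vectors]
    best = max(range(len(documents)), key=scores.__getitem__)
    return documents[best], scores[best]

# Hypothetical knowledge base of predefined answers.
FAQ = [
    "You can reset your password from the account settings page.",
    "Refunds are processed within five business days.",
    "Our support team is available on weekdays from nine to five.",
]
IDF, VECTORS = build_index(FAQ)
```

Calling `retrieve("How do I reset my password?", FAQ, IDF, VECTORS)` returns the first FAQ entry along with its similarity score. A query that shares no terms with the index scores 0.0, which is one simple signal that the question falls outside the knowledge base.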

Generative Models Explained: Architecture and Purpose

Generative models in artificial intelligence utilize architectures such as transformers and variational autoencoders to create new data samples by learning underlying patterns from training datasets. Their purpose is to generate coherent and contextually relevant content, enabling applications like text generation, image synthesis, and speech production. Unlike retrieval-based models that select existing data, generative models produce novel outputs by understanding and replicating data distributions.
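The idea of learning a distribution and then sampling from it can be illustrated with a deliberately tiny stand-in. The sketch below is a bigram model, not a transformer or a variational autoencoder, and the `CORPUS` sentences and function names are assumptions made up for this example. It shows the same principle at small scale: estimate which token tends to follow which, then generate by sampling one token at a time.

```python
import random
from collections import defaultdict

def train_bigram(corpus):
    # Record every observed successor of each token. Keeping duplicates
    # means random.choice samples successors in proportion to frequency.
    successors = defaultdict(list)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            successors[prev].append(nxt)
    return successors

def generate(successors, max_tokens=20, seed=None):
    # Sample one token at a time, conditioned only on the previous token.
    rng = random.Random(seed)
    token, output = "<s>", []
    for _ in range(max_tokens):
        token = rng.choice(successors[token])
        if token == "</s>":
            break
        output.append(token)
    return " ".join(output)

# Hypothetical training sentences.
CORPUS = [
    "the model retrieves an answer",
    "the user asks a question",
    "a model generates the answer",
]
MODEL = train_bigram(CORPUS)
```

Because generation recombines observed transitions, `generate(MODEL)` can produce sentences that never appear in `CORPUS`, such as "a model retrieves an answer". The same property lets it produce fluent but unsupported output, which is the trade-off against retrieval.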

Key Differences: Retrieval-based vs Generative Models

Retrieval-based models operate by selecting the most relevant response from a predefined set of answers using similarity metrics, ensuring consistency and relevance in outputs. Generative models produce novel responses by predicting word sequences based on learned patterns from large datasets, allowing for more creative and contextually flexible interactions. Key differences include response originality, computational complexity, and adaptability to unseen queries.

Strengths and Limitations of Retrieval-based Models

Retrieval-based models excel at providing accurate and contextually relevant responses by selecting from a predefined dataset, ensuring consistency and reducing the likelihood of generating incorrect information. These models are computationally efficient and require less training data compared to generative models but are limited by their dependency on the quality and scope of the indexed data. The primary constraint lies in their inability to generate novel content or handle queries beyond their existing knowledge base, restricting flexibility in dynamic or open-ended tasks.

Advantages and Challenges of Generative Models

Generative models excel in producing creative, contextually relevant content by learning underlying data distributions, making them ideal for tasks such as text generation, image synthesis, and conversational AI. These models face challenges including high computational costs, the risk of generating inaccurate or biased information, and difficulty in controlling output specificity. Advances in techniques like reinforcement learning from human feedback (RLHF) and large-scale training datasets help improve the reliability and relevance of generative model outputs in real-world applications.

Real-World Applications: When to Use Retrieval-based Models

Retrieval-based models excel in real-world applications requiring precise and relevant responses from a fixed dataset, such as customer support chatbots and information retrieval systems. These models leverage pre-existing knowledge bases to efficiently match user queries with accurate answers, ensuring consistency and reliability. Industries like healthcare and finance benefit from retrieval-based models for compliance and fact-based decision-making tasks.

Real-World Applications: When to Use Generative Models

Generative models excel in real-world applications requiring creativity, such as content creation, chatbots, and personalized recommendations, where generating novel outputs is essential. Their ability to produce human-like text and imagery makes them ideal for customer support automation and virtual assistants. In contrast, retrieval-based models suit tasks demanding precise, factual responses by selecting existing information from a database.

Evaluating Performance: Metrics and Benchmarks

Retrieval-based and generative models are evaluated with distinct metrics tailored to what each does. Retrieval models are often assessed through precision, recall, and mean reciprocal rank (MRR), while generative models are commonly assessed with perplexity, BLEU, and ROUGE scores. Benchmarks such as TREC and MS MARCO provide standardized datasets for retrieval tasks, enabling consistent performance comparison. Generative models are measured on task suites like those used to evaluate GPT-3 and on the Story Cloze Test, which probes story understanding and coherence. Selecting appropriate metrics and benchmarks is crucial for accurately gauging model effectiveness and for guiding improvements in natural language understanding and generation.
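Three of these metrics are simple enough to compute by hand. The sketch below implements MRR, precision and recall at a cutoff k, and perplexity from per-token probabilities. The function names and input shapes are assumptions chosen for illustration; BLEU and ROUGE are omitted because they are usually taken from an established evaluation library.

```python
import math

def reciprocal_rank(ranked_ids, relevant_ids):
    # 1 / rank of the first relevant result, or 0.0 if none is retrieved.
    for rank, doc_id in enumerate(ranked_ids, start=1):
        if doc_id in relevant_ids:
            return 1.0 / rank
    return 0.0

def mean_reciprocal_rank(runs):
    # runs: list of (ranked_ids, relevant_ids) pairs, one per query.
    return sum(reciprocal_rank(ranked, rel) for ranked, rel in runs) / len(runs)

def precision_recall_at_k(ranked_ids, relevant_ids, k):
    # Precision: share of the top k that is relevant.
    # Recall: share of all relevant items that appear in the top k.
    hits = sum(1 for doc_id in ranked_ids[:k] if doc_id in relevant_ids)
    precision = hits / k
    recall = hits / len(relevant_ids) if relevant_ids else 0.0
    return precision, recall

def perplexity(token_probs):
    # Exponential of the average negative log-probability the model
    # assigned to each reference token. Lower is better.
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)
```

For instance, if the first relevant document appears at rank 2 for one query, rank 1 for a second, and never for a third, the reciprocal ranks are 0.5, 1.0, and 0.0, so the MRR is 0.5. A model that assigns probability 0.25 to every reference token has a perplexity of 4, the same as guessing uniformly among four options.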

Future Trends: The Evolving Landscape of AI Model Deployment

Future trends in AI model deployment indicate a gradual shift towards hybrid architectures that integrate retrieval-based models' precise information access with generative models' creative content generation. Emphasis on scalability and real-time adaptability drives innovation in combining large-scale pre-trained generative models with external knowledge retrieval systems, an approach often called retrieval-augmented generation (RAG), for improved accuracy and context relevance. Advances in decentralized AI and continuous learning further support hybrid models that can be updated as new data and user interactions arrive.
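A hybrid pipeline of this kind can be sketched in a few lines: retrieve the most relevant passages, place them in a prompt, and hand the prompt to a generative model. Everything below is a hypothetical illustration. The word-overlap scoring stands in for a real retriever, the prompt wording is arbitrary, and `generator` is any callable that maps a prompt string to text; no particular model API is assumed.

```python
def overlap_score(query, passage):
    # Jaccard overlap between word sets; a stand-in for a real retriever.
    q_words = set(query.lower().split())
    p_words = set(passage.lower().split())
    union = q_words | p_words
    return len(q_words & p_words) / len(union) if union else 0.0

def retrieve_top_k(query, passages, k=2):
    # Rank stored passages by overlap with the query and keep the best k.
    ranked = sorted(passages, key=lambda p: overlap_score(query, p), reverse=True)
    return ranked[:k]

def build_prompt(query, context):
    # Ground the generator by placing the retrieved passages in the prompt.
    lines = ["Answer using only the context below.", "Context:"]
    lines += [f"- {passage}" for passage in context]
    lines += [f"Question: {query}", "Answer:"]
    return "\n".join(lines)

def answer(query, passages, generator, k=2):
    # generator is a hypothetical callable: prompt string in, text out.
    context = retrieve_top_k(query, passages, k)
    return generator(build_prompt(query, context))

# Hypothetical knowledge base.
PASSAGES = [
    "refunds are processed within five business days",
    "passwords can be reset from the settings page",
    "support is available on weekdays",
]
```

Passing `generator=lambda prompt: prompt` is a convenient way to inspect exactly what the generative model would receive. The design choice worth noting is that the knowledge lives in `PASSAGES`, so it can be updated without retraining the generator.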

Retrieval-based Model vs Generative Model Infographic

techiny.com
