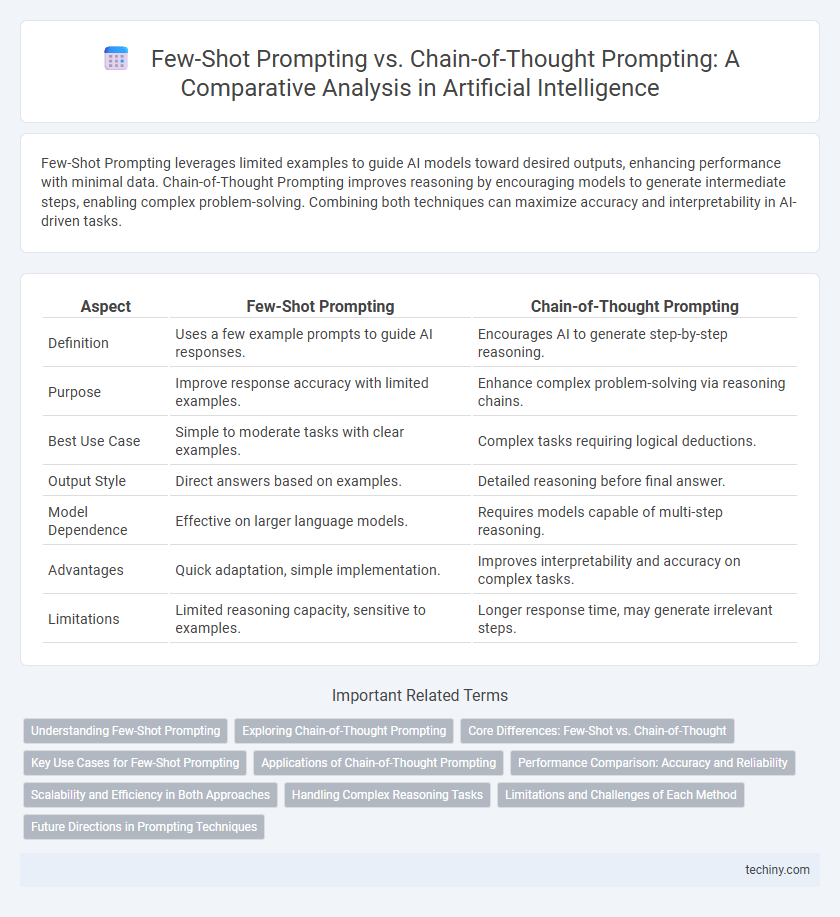

Few-Shot Prompting places a small number of worked examples in the prompt to steer an AI model toward the desired output, so the model can adapt to a task without retraining. Chain-of-Thought Prompting improves reasoning by encouraging the model to generate intermediate steps before its final answer, which helps on complex problems. The two techniques are compatible, and combining them can improve both accuracy and interpretability in AI-driven tasks.

Table of Comparison

| Aspect | Few-Shot Prompting | Chain-of-Thought Prompting |
|---|---|---|
| Definition | Uses a few example prompts to guide AI responses. | Encourages AI to generate step-by-step reasoning. |
| Purpose | Improve response accuracy with limited examples. | Enhance complex problem-solving via reasoning chains. |
| Best Use Case | Simple to moderate tasks with clear examples. | Complex tasks requiring logical deductions. |
| Output Style | Direct answers based on examples. | Detailed reasoning before final answer. |
| Model Dependence | Effective on larger language models. | Requires models capable of multi-step reasoning. |
| Advantages | Quick adaptation, simple implementation. | Improves interpretability and accuracy on complex tasks. |
| Limitations | Limited reasoning capacity, sensitive to examples. | Longer response time, may generate irrelevant steps. |

Understanding Few-Shot Prompting

Few-shot prompting leverages a limited number of examples within the input to guide large language models in generating accurate and contextually relevant responses, significantly reducing the need for extensive fine-tuning. This technique enhances model adaptability by providing explicit demonstrations of the desired task, enabling effective performance even with sparse data. Understanding few-shot prompting is crucial for optimizing AI-driven natural language processing applications, as it balances efficiency with precision in diverse use cases.
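A minimal sketch of how such a prompt might be assembled is shown here. The `Input:`/`Output:` layout, the function name `build_few_shot_prompt`, and the sentiment examples are all assumptions made for illustration; no particular model or API is implied.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble a few-shot prompt: instruction, labeled examples, then the new input."""
    parts = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Input: {text}")
        parts.append(f"Output: {label}")
        parts.append("")  # blank line separates demonstrations
    # The final block leaves "Output:" open for the model to complete.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


# Hypothetical sentiment examples, invented for illustration only.
EXAMPLES = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
    ("It arrived on time.", "neutral"),
]

prompt = build_few_shot_prompt(
    "Classify the sentiment of each input as positive, negative, or neutral.",
    EXAMPLES,
    "Setup was painless and quick.",
)
print(prompt)
```

The resulting string would be sent to a language model as-is; the demonstrations carry the task definition, so no weights are updated.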

Exploring Chain-of-Thought Prompting

Chain-of-Thought Prompting enhances large language model performance by eliciting step-by-step reasoning, improving accuracy in complex tasks like mathematical problem-solving and logical deduction. Because the model writes out intermediate reasoning steps before committing to an answer, it can better handle multi-hop questions and multi-step instructions. The technique is often applied in few-shot form, with each example including its worked reasoning, and it tends to outperform answer-only few-shot prompts in scenarios requiring detailed analytical thinking and long inference chains.
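As a rough illustration, a chain-of-thought prompt can be built by attaching a written-out reasoning trace to each example. The `Exemplar` type, the `Q:`/`A:` layout, the "The answer is" closing phrase, and the arithmetic problem are assumptions chosen for this sketch.

```python
from typing import List, NamedTuple


class Exemplar(NamedTuple):
    question: str
    reasoning: str  # intermediate steps written out in plain language
    answer: str


def build_cot_prompt(exemplars: List[Exemplar], question: str) -> str:
    """Format exemplars with their reasoning, then pose the new question."""
    blocks = []
    for ex in exemplars:
        # Each demonstration shows the steps first and the answer last.
        blocks.append(
            f"Q: {ex.question}\n"
            f"A: {ex.reasoning} The answer is {ex.answer}."
        )
    # The trailing "A:" is left open so the model produces its own steps.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Hypothetical arithmetic exemplar, invented for illustration only.
EXEMPLARS = [
    Exemplar(
        question="A shop has 23 apples. It uses 20 and buys 6 more. "
                 "How many apples does it have?",
        reasoning="The shop starts with 23 apples. After using 20, "
                  "23 - 20 = 3 remain. Buying 6 more gives 3 + 6 = 9.",
        answer="9",
    ),
]

cot_prompt = build_cot_prompt(
    EXEMPLARS,
    "A shelf holds 12 books. 5 are removed and 3 are added. "
    "How many books are on the shelf?",
)
print(cot_prompt)
```

Ending every exemplar with the same closing phrase is a design choice that makes the final answer easier to locate in the model's response.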

Core Differences: Few-Shot vs. Chain-of-Thought

Few-Shot Prompting provides the model with a limited number of examples to guide responses, enabling quick adaptation to new tasks with minimal data. Chain-of-Thought Prompting, however, encourages the model to generate intermediate reasoning steps, improving performance on complex problem-solving by making the thought process explicit. The core difference is that Few-Shot Prompting shapes the input by setting context through examples, while Chain-of-Thought Prompting shapes the output by asking for a step-by-step logical decomposition before the answer. The two are not mutually exclusive: a few-shot prompt whose examples include worked reasoning is also a chain-of-thought prompt.

Key Use Cases for Few-Shot Prompting

Few-shot prompting excels in scenarios requiring rapid adaptation to new tasks with minimal examples, such as language translation, sentiment analysis, and customized chatbots. It enables AI models to generalize from limited data, making it ideal for personalized content generation and domain-specific information retrieval. This approach reduces the need for extensive retraining, accelerating deployment in dynamic environments.

Applications of Chain-of-Thought Prompting

Chain-of-Thought prompting enhances complex problem-solving in AI by breaking down multi-step reasoning tasks into sequential, interpretable steps, improving performance in mathematical reasoning, logical deduction, and commonsense tasks. This technique is particularly effective in domains requiring structured thought processes, such as natural language understanding, code generation, and question answering systems. Its application enables large language models to handle intricate queries with greater accuracy and explainability, advancing AI capabilities in educational tools, diagnostic systems, and decision-support applications.

Performance Comparison: Accuracy and Reliability

Few-shot prompting generalizes well from a handful of examples and is usually sufficient for tasks where the answer follows directly from the input, such as classification or format conversion. Chain-of-thought prompting strengthens reasoning, which tends to yield higher accuracy and reliability in complex problem-solving scenarios. Comparative studies indicate chain-of-thought prompting often surpasses answer-only few-shot prompting on tasks requiring multi-step inference, while the gap is smaller on tasks that need little reasoning.
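One way to compare the two approaches on your own data is a small exact-match harness like the sketch below. Everything here is hypothetical: `stub_model` is a stand-in that always returns the same string so the code runs without a real model, and the two-question dataset is invented, so the printed numbers say nothing about actual model behavior.

```python
from typing import Callable, List, Tuple


def accuracy(
    model: Callable[[str], str],
    make_prompt: Callable[[str], str],
    dataset: List[Tuple[str, str]],
    postprocess: Callable[[str], str] = str.strip,
) -> float:
    """Fraction of questions whose post-processed output matches the reference."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    correct = 0
    for question, reference in dataset:
        prediction = postprocess(model(make_prompt(question)))
        if prediction.lower() == reference.strip().lower():
            correct += 1
    return correct / len(dataset)


def direct_prompt(question: str) -> str:
    return f"Q: {question}\nA:"


def stepwise_prompt(question: str) -> str:
    return f"Q: {question}\nA: Work through the steps, then state the final answer."


def stub_model(prompt: str) -> str:
    # Placeholder for a real model call; it ignores the prompt entirely.
    return "4"


# Hypothetical evaluation set, invented for illustration only.
DATASET = [("What is 2 + 2?", "4"), ("What is 3 + 3?", "6")]

for name, make_prompt in (("direct", direct_prompt), ("stepwise", stepwise_prompt)):
    print(name, accuracy(stub_model, make_prompt, DATASET))
```

With a real model, a chain-of-thought response contains reasoning text, so `postprocess` would need to pull out the final answer before the comparison.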

Scalability and Efficiency in Both Approaches

Few-Shot Prompting scales well because it needs only a few examples to adapt across diverse tasks, enabling rapid deployment with limited computational overhead. Chain-of-Thought Prompting trades some of that efficiency for better reasoning: its structured steps improve interpretability and accuracy, but the longer prompts and responses often mean longer inference times and higher memory usage. The right balance depends on the application, where Few-Shot excels in speed and flexibility, while Chain-of-Thought supports complex problem-solving with increased resource investment.
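The cost difference can be estimated before calling any model. The sketch below uses whitespace-separated word count as a crude stand-in for token count, which is an assumption; real tokenizers split text differently, so treat the figures as relative, not absolute. The exemplar text is invented for illustration.

```python
def approx_tokens(text: str) -> int:
    """Crude proxy for token count: whitespace-separated words."""
    return len(text.split())


QUESTION = (
    "Q: A shelf holds 12 books. 5 are removed and 3 are added. "
    "How many books are on the shelf?"
)

# Same question, once with only the answer and once with worked reasoning.
DIRECT_EXEMPLAR = QUESTION + "\nA: 10"
COT_EXEMPLAR = QUESTION + (
    "\nA: The shelf starts with 12 books. Removing 5 leaves 12 - 5 = 7. "
    "Adding 3 gives 7 + 3 = 10. The answer is 10."
)


def prompt_cost(exemplar: str, n_exemplars: int) -> int:
    """Approximate prompt size when the exemplar is repeated n times."""
    return approx_tokens(exemplar) * n_exemplars


for n in (1, 4, 8):
    direct = prompt_cost(DIRECT_EXEMPLAR, n)
    cot = prompt_cost(COT_EXEMPLAR, n)
    print(f"{n} exemplars: direct ~{direct} words, chain-of-thought ~{cot} words")
```

The same gap applies to the response side, since a chain-of-thought answer includes the reasoning text as well as the result.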

Handling Complex Reasoning Tasks

Few-shot prompting leverages a limited number of examples to guide AI models in understanding context and performing tasks, but it may struggle with multi-step or complex reasoning scenarios. Chain-of-thought prompting enhances performance by explicitly guiding models through intermediate reasoning steps, enabling more accurate and interpretable solutions for complex problems. This approach significantly improves handling of tasks requiring logical deduction, multi-hop reasoning, and stepwise problem solving in artificial intelligence applications.
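Because a chain-of-thought response mixes reasoning with the result, downstream code usually has to separate the two. The sketch below assumes the response ends with a phrase of the form "The answer is N", a convention that must be established by the prompt itself; the pattern and function name are illustrative, and it only handles plain numeric answers.

```python
import re
from typing import Optional

# Matches integers and simple decimals after the closing phrase.
ANSWER_PATTERN = re.compile(r"the answer is\s*(-?\d+(?:\.\d+)?)", re.IGNORECASE)


def extract_final_answer(response: str) -> Optional[str]:
    """Return the last number following 'the answer is', or None if absent."""
    matches = ANSWER_PATTERN.findall(response)
    # Take the last match in case the reasoning restates the phrase earlier.
    return matches[-1] if matches else None


# Hypothetical model response, written by hand for illustration.
response = (
    "The shelf starts with 12 books. Removing 5 leaves 12 - 5 = 7. "
    "Adding 3 gives 7 + 3 = 10. The answer is 10."
)
print(extract_final_answer(response))
```

Returning `None` when no answer is found lets the caller distinguish a malformed response from a wrong one.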

Limitations and Challenges of Each Method

Few-shot prompting often generalizes poorly when a task falls outside the narrow set of in-context examples, and its results are sensitive to which examples are chosen and how they are ordered, resulting in decreased accuracy and reliability. Chain-of-thought prompting, while effective for complex reasoning, incurs higher computational cost and longer response times because the model must generate multi-step intermediate reasoning, and some of those steps may be irrelevant or incorrect. Both methods face challenges in scalability and robustness, requiring careful prompt engineering to mitigate issues like bias amplification and response inconsistency.
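Response inconsistency can be probed by reordering the same examples and checking whether the answer changes. The following is a sketch under stated assumptions: `recency_stub` is a hypothetical stand-in that simply copies the label of the last demonstration, used only so the code runs; a real model would replace it, and the examples are invented.

```python
from collections import Counter
from itertools import permutations
from typing import Callable, List, Sequence, Tuple


def order_variants(examples: Sequence[Tuple[str, str]], query: str) -> List[str]:
    """Build one prompt per ordering of the same examples."""
    prompts = []
    for ordering in permutations(examples):
        lines = []
        for text, label in ordering:
            lines.append(f"Input: {text}")
            lines.append(f"Output: {label}")
        lines.append(f"Input: {query}")
        lines.append("Output:")
        prompts.append("\n".join(lines))
    return prompts


def agreement_rate(model: Callable[[str], str], prompts: List[str]) -> float:
    """Share of prompts that yield the most common answer (1.0 = fully stable)."""
    answers = [model(p).strip() for p in prompts]
    top_count = Counter(answers).most_common(1)[0][1]
    return top_count / len(answers)


def recency_stub(prompt: str) -> str:
    # Hypothetical stand-in for a model: repeats the last demonstrated label.
    labels = [
        line[len("Output: "):]
        for line in prompt.splitlines()
        if line.startswith("Output: ")
    ]
    return labels[-1]


# Hypothetical examples, invented for illustration only.
EXAMPLES = [
    ("Great value.", "positive"),
    ("Stopped working.", "negative"),
    ("Love the design.", "positive"),
]

variants = order_variants(EXAMPLES, "Does the job.")
print(len(variants), agreement_rate(recency_stub, variants))
```

The number of orderings grows factorially with the number of examples, so sampling a subset of permutations is more practical for larger prompts.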

Future Directions in Prompting Techniques

Future directions in prompting techniques emphasize combining Few-Shot Prompting and Chain-of-Thought Prompting to enhance model reasoning and generalization across diverse tasks. Research is focusing on optimizing prompt design through automated methods and adaptive learning to improve efficiency and reduce reliance on extensive labeled data. Integration of multimodal inputs and dynamic context adjustment promises to advance prompt effectiveness in complex AI applications.
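One simple form of automated prompt design is choosing which examples to include based on the incoming query. The sketch below ranks a pool of candidates by Jaccard word overlap with the query; this scoring function is an assumption picked for its simplicity, and embedding-based similarity is a common alternative. The pool and function names are hypothetical.

```python
from typing import List, Tuple


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two strings, from 0.0 to 1.0."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    if not set_a and not set_b:
        return 0.0
    return len(set_a & set_b) / len(set_a | set_b)


def select_examples(
    pool: List[Tuple[str, str]], query: str, k: int
) -> List[Tuple[str, str]]:
    """Pick the k pool entries whose input text is most similar to the query."""
    # sorted() is stable, so ties keep their original pool order.
    ranked = sorted(pool, key=lambda ex: jaccard(ex[0], query), reverse=True)
    return ranked[:k]


# Hypothetical example pool, invented for illustration only.
POOL = [
    ("translate the invoice into french", "translation"),
    ("summarize the quarterly report", "summarization"),
    ("translate the menu into spanish", "translation"),
    ("classify this review as positive or negative", "classification"),
]

selected = select_examples(POOL, "translate the contract into german", k=2)
for text, label in selected:
    print(label, "|", text)
```

The selected examples would then be formatted into a few-shot or chain-of-thought prompt, so each query receives demonstrations close to its own task.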

Few-Shot Prompting vs Chain-of-Thought Prompting Infographic

techiny.com
