Supervised pretraining leverages labeled data to teach models specific patterns and relationships, enhancing accuracy in tasks where annotated examples are abundant. Unsupervised pretraining, on the other hand, enables models to learn from vast amounts of unlabeled data by identifying underlying structures and representations, which can improve generalization and reduce reliance on costly annotations. Balancing these approaches depends on the availability of labeled datasets and the desired adaptability of the AI system.

Table of Comparison

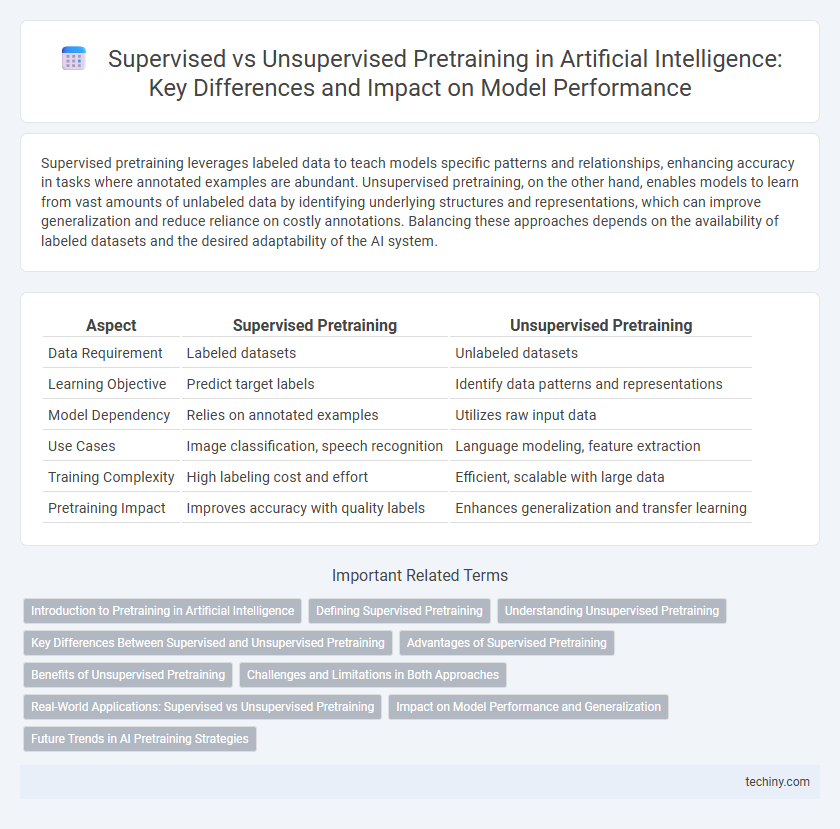

| Aspect | Supervised Pretraining | Unsupervised Pretraining |

|---|---|---|

| Data Requirement | Labeled datasets | Unlabeled datasets |

| Learning Objective | Predict target labels | Identify data patterns and representations |

| Model Dependency | Relies on annotated examples | Utilizes raw input data |

| Use Cases | Image classification, speech recognition | Language modeling, feature extraction |

| Training Complexity | High labeling cost and effort | Efficient, scalable with large data |

| Pretraining Impact | Improves accuracy with quality labels | Enhances generalization and transfer learning |

Introduction to Pretraining in Artificial Intelligence

Pretraining in artificial intelligence involves initializing models using large datasets to enhance performance on specific tasks. Supervised pretraining leverages labeled data to teach models explicit input-output relationships, while unsupervised pretraining relies on unlabeled data to learn underlying patterns and representations. Both approaches improve model accuracy and generalization by providing foundational knowledge before fine-tuning on target applications.

Defining Supervised Pretraining

Supervised pretraining in artificial intelligence involves training models on labeled datasets where the input data is paired with corresponding output labels. This approach enables the model to learn specific patterns and relationships between features and targets by minimizing prediction errors. It contrasts with unsupervised pretraining, which uses unlabeled data to capture underlying data structures without explicit guidance.

Understanding Unsupervised Pretraining

Unsupervised pretraining in artificial intelligence involves training models on large amounts of unlabeled data to learn general patterns and representations without explicit guidance. This approach leverages techniques like autoencoders and self-supervised learning to extract meaningful features that can improve downstream task performance. By capturing underlying data distributions, unsupervised pretraining enhances model robustness and reduces dependency on costly labeled datasets.

Key Differences Between Supervised and Unsupervised Pretraining

Supervised pretraining involves training AI models on labeled datasets, enabling them to learn specific patterns tied to annotated outcomes, which enhances performance on tasks requiring precise predictions. Unsupervised pretraining, by contrast, trains models on unlabeled data through methods like autoencoders or contrastive learning, helping the model capture intrinsic data structures without explicit guidance. The key differences lie in data requirements--supervised learning depends on costly labeled data while unsupervised leverages abundant unlabeled data--and in the scope of learned representations, where supervised models focus on task-specific features and unsupervised models extract general-purpose features.

Advantages of Supervised Pretraining

Supervised pretraining leverages labeled datasets to guide models in learning specific patterns and relationships, resulting in higher accuracy and faster convergence during fine-tuning phases. This approach enhances model generalization on related tasks by providing explicit feedback signals that improve feature extraction and representation quality. Consequently, supervised pretraining often outperforms unsupervised methods in applications requiring precise classification and prediction, such as image recognition and natural language processing.

Benefits of Unsupervised Pretraining

Unsupervised pretraining leverages vast amounts of unlabeled data, enabling models to learn richer, more generalizable feature representations without the costly and time-consuming need for manual annotations. This approach enhances adaptability across diverse downstream tasks and improves performance in scenarios with limited labeled samples. Consequently, unsupervised pretraining fosters robust transfer learning and promotes efficient utilization of available data in artificial intelligence systems.

Challenges and Limitations in Both Approaches

Supervised pretraining relies heavily on large labeled datasets, presenting challenges related to data annotation costs, label quality, and class imbalance that can limit model generalization. Unsupervised pretraining circumvents the need for labels but struggles with learning meaningful representations due to noise, ambiguous patterns, and the risk of capturing irrelevant or spurious correlations. Both approaches face limitations in scalability and domain adaptation, often requiring extensive fine-tuning to perform well across diverse tasks and real-world scenarios.

Real-World Applications: Supervised vs Unsupervised Pretraining

Supervised pretraining utilizes labeled datasets to enhance model accuracy in applications such as image recognition and medical diagnostics, where precise categorization is critical. Unsupervised pretraining leverages vast amounts of unlabeled data to uncover patterns and representations, proving essential in natural language processing and recommendation systems. The choice between supervised and unsupervised pretraining impacts model performance, scalability, and adaptability to real-world scenarios.

Impact on Model Performance and Generalization

Supervised pretraining leverages labeled datasets to directly optimize models for specific tasks, often resulting in higher accuracy and faster convergence on those tasks. Unsupervised pretraining, by learning from vast amounts of unlabeled data, enhances model generalization and robustness across diverse applications by capturing broader data distributions. The choice between these methods impacts model performance trade-offs, with supervised pretraining excelling in precision while unsupervised approaches promote adaptability in real-world environments.

Future Trends in AI Pretraining Strategies

Future trends in AI pretraining strategies emphasize hybrid models combining supervised and unsupervised techniques to enhance learning efficiency and accuracy. Advances in self-supervised learning leverage vast unlabeled datasets while incorporating limited labeled data to improve generalization across diverse AI applications. Emerging methods prioritize scalability and domain adaptation, enabling AI systems to continuously refine their knowledge with minimal human intervention.

Supervised Pretraining vs Unsupervised Pretraining Infographic

techiny.com

techiny.com