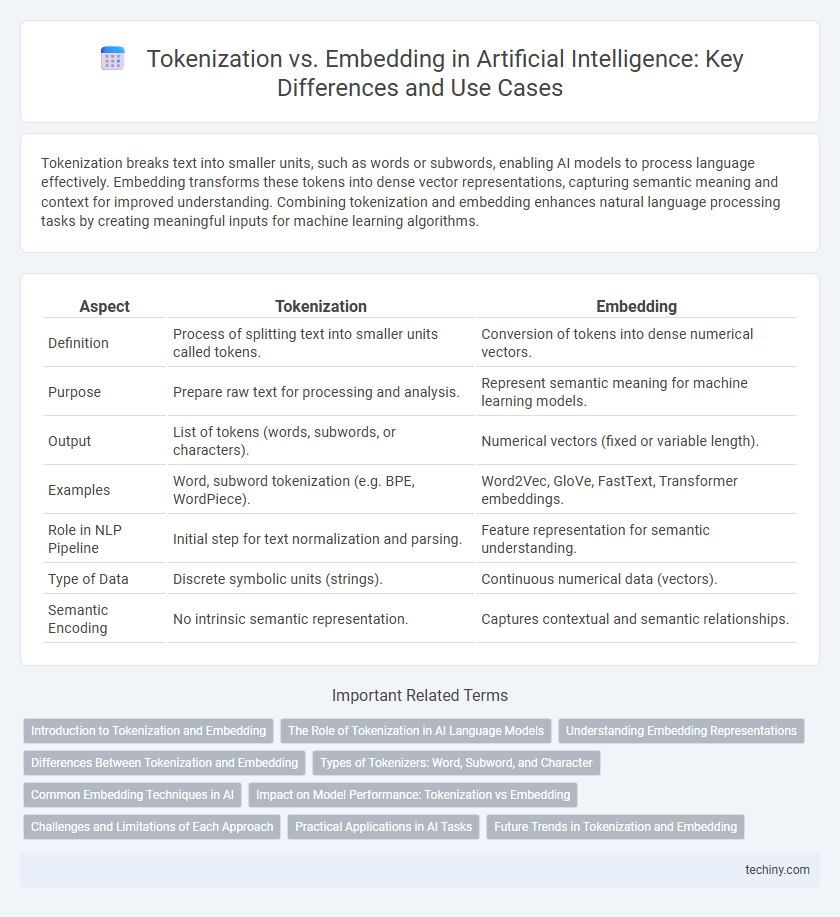

Tokenization breaks text into smaller units, such as words or subwords, enabling AI models to process language effectively. Embedding transforms these tokens into dense vector representations, capturing semantic meaning and context for improved understanding. Combining tokenization and embedding enhances natural language processing tasks by creating meaningful inputs for machine learning algorithms.

Table of Comparison

| Aspect | Tokenization | Embedding |

|---|---|---|

| Definition | Process of splitting text into smaller units called tokens. | Conversion of tokens into dense numerical vectors. |

| Purpose | Prepare raw text for processing and analysis. | Represent semantic meaning for machine learning models. |

| Output | List of tokens (words, subwords, or characters). | Numerical vectors (fixed or variable length). |

| Examples | Word, subword tokenization (e.g. BPE, WordPiece). | Word2Vec, GloVe, FastText, Transformer embeddings. |

| Role in NLP Pipeline | Initial step for text normalization and parsing. | Feature representation for semantic understanding. |

| Type of Data | Discrete symbolic units (strings). | Continuous numerical data (vectors). |

| Semantic Encoding | No intrinsic semantic representation. | Captures contextual and semantic relationships. |

Introduction to Tokenization and Embedding

Tokenization is the process of breaking down text into smaller units such as words, subwords, or characters, enabling machines to analyze and process natural language effectively. Embedding converts these tokens into dense vector representations that capture semantic relationships, allowing AI models to understand context and meaning beyond simple word matching. Together, tokenization and embedding form the foundation for natural language processing tasks, enhancing machine comprehension and enabling sophisticated language understanding.

The Role of Tokenization in AI Language Models

Tokenization in AI language models divides text into manageable units, such as words or subwords, enabling efficient processing and understanding of language patterns. This foundational step converts raw text into tokens that embedding algorithms transform into numerical vectors, capturing semantic relationships between words. Effective tokenization enhances model accuracy by preserving contextual nuances and improving the granularity of input data for subsequent embedding layers.

Understanding Embedding Representations

Embedding representations transform words or tokens into dense vectors that capture semantic relationships within high-dimensional space, enabling machines to understand context and meaning beyond mere tokenization. Unlike tokenization, which breaks text into discrete units, embeddings encode subtle linguistic nuances and similarities by mapping tokens to continuous numerical values trained on large corpora. This semantic richness within embeddings is fundamental for advanced natural language processing tasks such as sentiment analysis, machine translation, and contextual search.

Differences Between Tokenization and Embedding

Tokenization involves breaking down text into smaller units like words or subwords, serving as the initial step in natural language processing pipelines. Embedding transforms these tokens into dense vector representations that capture semantic meanings and contextual relationships in a multi-dimensional space. While tokenization prepares raw text data for computational models, embedding provides the numerical abstraction necessary for machines to interpret linguistic nuances.

Types of Tokenizers: Word, Subword, and Character

Tokenization in artificial intelligence involves breaking text into smaller units, with three primary types: word, subword, and character tokenizers. Word tokenizers split text by spaces or punctuation, providing whole-word representations but struggling with out-of-vocabulary terms. Subword tokenizers, such as Byte Pair Encoding (BPE) and WordPiece, break words into smaller units to balance vocabulary size and generalization, while character tokenizers process text at the individual character level, enabling fine-grained analysis but often increasing sequence length.

Common Embedding Techniques in AI

Common embedding techniques in AI include Word2Vec, GloVe, and FastText, which transform textual data into dense vector representations capturing semantic relationships. These embeddings facilitate more effective natural language processing by enabling algorithms to understand word context and similarity. Compared to tokenization, which simply segments text into basic units, embeddings provide richer, multidimensional features essential for deep learning models.

Impact on Model Performance: Tokenization vs Embedding

Tokenization breaks text into manageable units, enabling models to process language efficiently, but the choice of tokenization method directly influences the granularity and context captured. Embedding transforms these tokens into dense vector representations that encapsulate semantic meaning, significantly enhancing model understanding and prediction accuracy. Optimizing tokenization and embedding together leads to improved model performance, especially in handling complex language tasks and capturing contextual nuances.

Challenges and Limitations of Each Approach

Tokenization in artificial intelligence often struggles with handling out-of-vocabulary words and complex linguistic structures, which can hinder model performance on nuanced text. Embedding techniques face challenges related to capturing contextual meaning accurately, especially in polysemous words and rare terms, leading to potential semantic ambiguity. Both approaches require substantial computational resources and careful tuning to optimize effectiveness across diverse NLP tasks.

Practical Applications in AI Tasks

Tokenization breaks text into smaller units such as words or subwords, serving as the initial step in natural language processing tasks like text classification, machine translation, and sentiment analysis. Embedding transforms these tokens into dense vector representations that capture semantic meanings, enabling algorithms to understand context and relationships in applications such as language modeling, information retrieval, and question answering systems. Efficient tokenization combined with high-quality embeddings enhances model performance in tasks involving language understanding and generation.

Future Trends in Tokenization and Embedding

Future trends in tokenization and embedding emphasize more efficient, context-aware models leveraging subword tokenization and dynamic embeddings to improve language understanding and generation. Advances in transformer architectures and continual learning facilitate embedding representations that better capture semantic nuances and adapt to evolving language use. Integration of multimodal data promises tokenization techniques capable of encoding diverse inputs, enhancing AI applications across text, image, and audio processing.

Tokenization vs Embedding Infographic

techiny.com

techiny.com