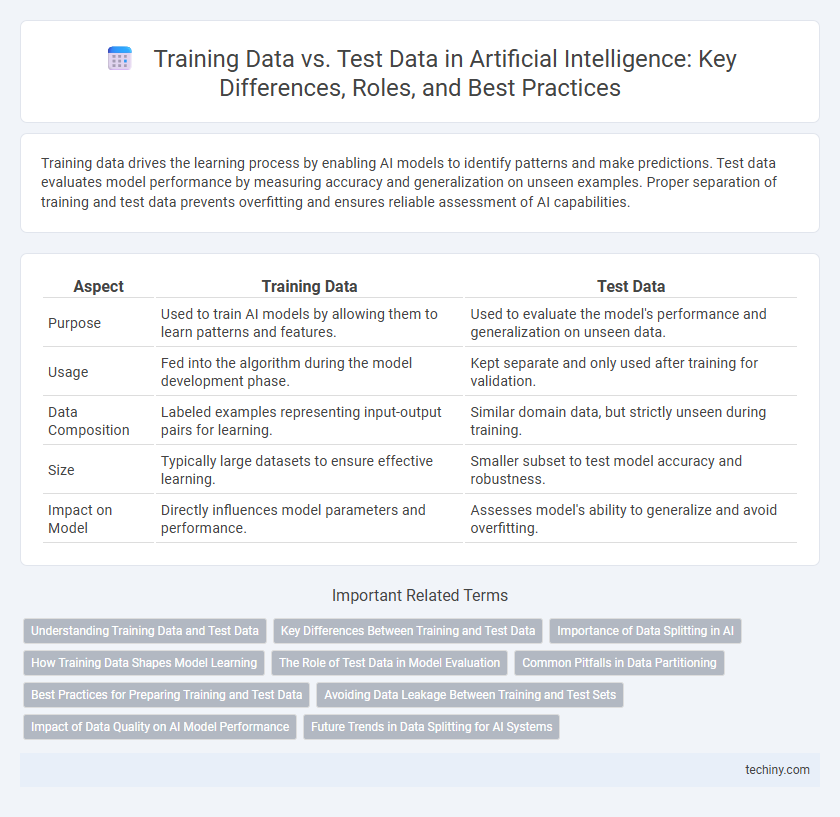

Training data drives the learning process by enabling AI models to identify patterns and make predictions. Test data evaluates model performance by measuring accuracy and generalization on unseen examples. Proper separation of training and test data prevents overfitting and ensures reliable assessment of AI capabilities.

Table of Comparison

| Aspect | Training Data | Test Data |

|---|---|---|

| Purpose | Used to train AI models by allowing them to learn patterns and features. | Used to evaluate the model's performance and generalization on unseen data. |

| Usage | Fed into the algorithm during the model development phase. | Kept separate and only used after training for validation. |

| Data Composition | Labeled examples representing input-output pairs for learning. | Similar domain data, but strictly unseen during training. |

| Size | Typically large datasets to ensure effective learning. | Smaller subset to test model accuracy and robustness. |

| Impact on Model | Directly influences model parameters and performance. | Assesses model's ability to generalize and avoid overfitting. |

Understanding Training Data and Test Data

Training data consists of labeled examples used to teach AI models to recognize patterns and make predictions, forming the foundation for machine learning algorithms. Test data evaluates the model's performance on unseen inputs, ensuring the model generalizes well beyond the training set. Understanding the distinction between training data and test data is critical for optimizing model accuracy and preventing overfitting in artificial intelligence applications.

Key Differences Between Training and Test Data

Training data is used to teach the AI model by allowing it to learn patterns and relationships within the dataset, enabling it to make predictions or decisions. Test data, in contrast, is employed to evaluate the model's performance on unseen information, measuring its generalization ability and accuracy. The key differences include their roles in the machine learning pipeline, with training data optimizing model parameters and test data serving as an unbiased benchmark for validating model effectiveness.

Importance of Data Splitting in AI

Data splitting into training data and test data is crucial for building robust artificial intelligence models, as it ensures unbiased evaluation of model performance on unseen data. Training data enables the model to learn underlying patterns, while test data assesses its generalization capability, preventing overfitting. Proper data splitting techniques, such as stratified sampling, maintain the distribution of classes and enhance the reliability of AI model validation.

How Training Data Shapes Model Learning

Training data provides the foundational examples that enable machine learning algorithms to identify patterns and relationships within the dataset. The quality, diversity, and representativeness of training data directly impact the model's ability to generalize well to new, unseen data. Effective training data selection minimizes bias and overfitting, ensuring robust model performance during real-world deployment.

The Role of Test Data in Model Evaluation

Test data plays a critical role in model evaluation by providing an unbiased assessment of the artificial intelligence model's performance on unseen inputs. It measures the model's ability to generalize beyond the training data, ensuring robustness and preventing overfitting. Accurate evaluation with test data helps identify potential weaknesses and guides improvements in AI model development.

Common Pitfalls in Data Partitioning

Common pitfalls in data partitioning for artificial intelligence include data leakage, where test data inadvertently influences the training process, leading to overly optimistic performance metrics. Another frequent issue is imbalanced splitting, which results in training or test sets not accurately representing the overall data distribution, compromising model generalization. Inconsistent preprocessing between training and test datasets further degrades model evaluation by introducing biases that do not reflect real-world scenarios.

Best Practices for Preparing Training and Test Data

Effective preparation of training and test data involves carefully curating diverse and representative datasets to ensure robust model performance and generalization. Data preprocessing techniques such as normalization, handling missing values, and removing duplicates enhance data quality and consistency across both sets. Maintaining strict separation between training and test datasets prevents data leakage and ensures unbiased evaluation of model accuracy.

Avoiding Data Leakage Between Training and Test Sets

Ensuring strict separation between training data and test data is crucial to avoid data leakage, which can lead to overly optimistic model performance metrics. Techniques such as careful data partitioning, using stratified sampling, and preventing feature overlap between sets help maintain the integrity of model evaluation. Proper handling of data leakage preserves the model's ability to generalize to unseen data and improves overall reliability in real-world applications.

Impact of Data Quality on AI Model Performance

High-quality training data ensures that AI models learn accurate patterns, directly enhancing their predictive performance and generalization capabilities. Test data with representative, clean, and unbiased samples provides a reliable assessment of model accuracy, preventing misleading performance metrics. Poor data quality, including noise or imbalance, can cause overfitting or underfitting, significantly degrading model robustness and real-world applicability.

Future Trends in Data Splitting for AI Systems

Future trends in data splitting for AI systems emphasize dynamic and adaptive methods that enhance model generalization and robustness. Techniques such as stratified sampling, time-series aware splits, and automated data partitioning driven by meta-learning algorithms are increasingly adopted to reflect real-world variability and minimize data leakage. Advances in synthetic data generation and federated learning also contribute to more diversified and representative training and test datasets, improving AI performance across diverse applications.

Training Data vs Test Data Infographic

techiny.com

techiny.com