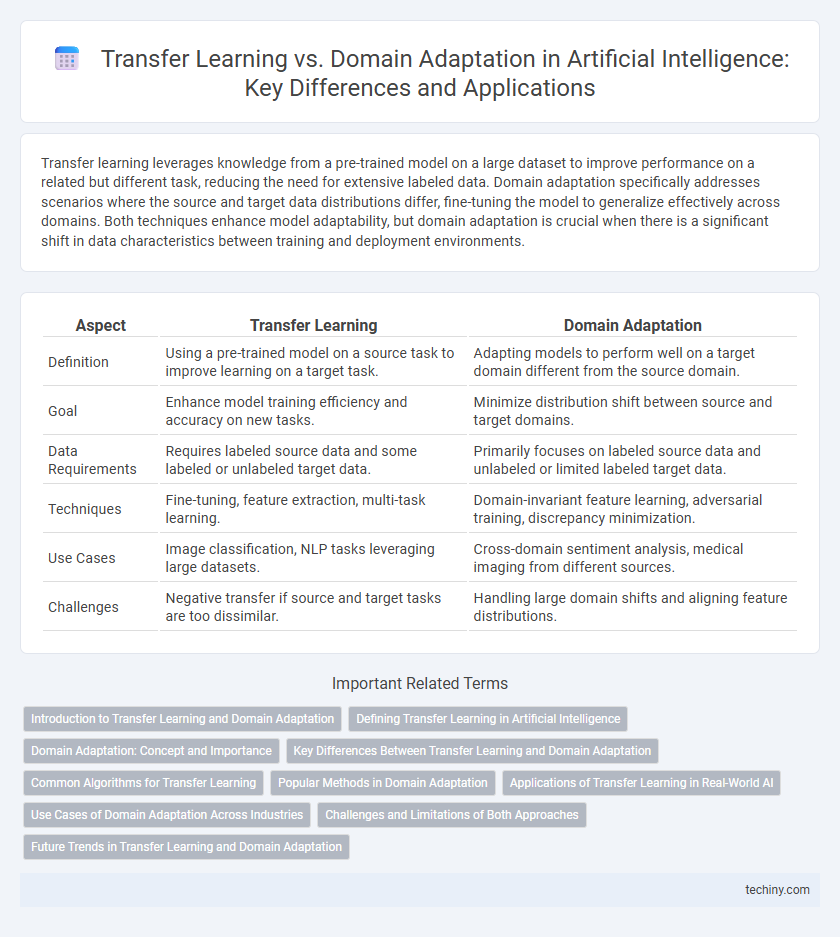

Transfer learning leverages knowledge from a pre-trained model on a large dataset to improve performance on a related but different task, reducing the need for extensive labeled data. Domain adaptation specifically addresses scenarios where the source and target data distributions differ, fine-tuning the model to generalize effectively across domains. Both techniques enhance model adaptability, but domain adaptation is crucial when there is a significant shift in data characteristics between training and deployment environments.

Table of Comparison

| Aspect | Transfer Learning | Domain Adaptation |
|---|---|---|
| Definition | Using a pre-trained model on a source task to improve learning on a target task. | Adapting models to perform well on a target domain different from the source domain. |
| Goal | Enhance model training efficiency and accuracy on new tasks. | Minimize distribution shift between source and target domains. |
| Data Requirements | Requires labeled source data and some labeled or unlabeled target data. | Primarily focuses on labeled source data and unlabeled or limited labeled target data. |
| Techniques | Fine-tuning, feature extraction, multi-task learning. | Domain-invariant feature learning, adversarial training, discrepancy minimization. |
| Use Cases | Image classification, NLP tasks leveraging large datasets. | Cross-domain sentiment analysis, medical imaging from different sources. |
| Challenges | Negative transfer if source and target tasks are too dissimilar. | Handling large domain shifts and aligning feature distributions. |

Introduction to Transfer Learning and Domain Adaptation

Transfer learning enables artificial intelligence models to leverage knowledge from one task or dataset to improve performance on a different but related task, reducing the need for extensive labeled data. Domain adaptation, a subset of transfer learning, specifically addresses the challenge of shifting data distributions between the source and target domains to enhance model generalization. Both techniques optimize model adaptability, with transfer learning focusing on task transfer and domain adaptation on mitigating domain discrepancies.

Defining Transfer Learning in Artificial Intelligence

Transfer learning in artificial intelligence involves leveraging pre-trained models on large datasets to improve performance on related but distinct tasks with limited data. It enables knowledge transfer by reusing learned features, reducing the need for extensive training from scratch. This approach enhances efficiency and accuracy in applications such as image recognition, natural language processing, and speech recognition.

Domain Adaptation: Concept and Importance

Domain adaptation is a crucial subfield of transfer learning that focuses on applying a model trained in one domain (source) to improve performance in a different, yet related domain (target) where labeled data is scarce or unavailable. It addresses domain shift issues by aligning feature distributions between the source and target domains, enhancing model generalization and robustness in real-world scenarios. The importance of domain adaptation lies in its ability to reduce the need for extensive labeled data in the target domain, thereby accelerating the deployment of AI models across varying environments and applications.
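To make "aligning feature distributions" more concrete, it helps to first measure how far apart two distributions are. The sketch below estimates the squared Maximum Mean Discrepancy (MMD), a discrepancy measure often minimized in domain adaptation, between two samples of feature vectors. The toy Gaussian data, the RBF kernel, the `gamma` value, and all function names are assumptions chosen for illustration, not part of any particular library.

```python
import math
import random


def rbf_kernel(x, y, gamma=1.0):
    """Gaussian (RBF) kernel between two equal-length feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def mmd_squared(source, target, gamma=1.0):
    """Biased estimate of the squared MMD between two samples."""
    def mean_kernel(xs, ys):
        total = sum(rbf_kernel(x, y, gamma) for x in xs for y in ys)
        return total / (len(xs) * len(ys))

    return (mean_kernel(source, source)
            + mean_kernel(target, target)
            - 2.0 * mean_kernel(source, target))


def sample_gaussian(rng, n, mean, dim=2):
    """Toy data (an assumption): n points from a unit-variance Gaussian."""
    return [[rng.gauss(mean, 1.0) for _ in range(dim)] for _ in range(n)]


rng = random.Random(0)
source = sample_gaussian(rng, 100, mean=0.0)
same_domain = sample_gaussian(rng, 100, mean=0.0)      # no domain shift
shifted_domain = sample_gaussian(rng, 100, mean=2.0)   # shifted target domain

mmd_same = mmd_squared(source, same_domain, gamma=0.5)
mmd_shifted = mmd_squared(source, shifted_domain, gamma=0.5)
print(f"MMD^2 (same distribution):    {mmd_same:.4f}")
print(f"MMD^2 (shifted distribution): {mmd_shifted:.4f}")
```

The shifted sample should yield a clearly larger value than the unshifted one. Discrepancy-based adaptation methods typically add a term like this to the training loss so that the learned features of both domains are pulled closer together.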

Key Differences Between Transfer Learning and Domain Adaptation

Transfer learning involves leveraging knowledge from a pre-trained model on a source task to improve performance on a related but different target task, often across different domains. Domain adaptation, a subset of transfer learning, specifically addresses the challenge of adapting a model trained in one domain to perform well in a different but related domain by reducing domain shift. Key differences lie in their focus: transfer learning emphasizes cross-task knowledge transfer, while domain adaptation centers on mitigating discrepancies between source and target domain data distributions.

Common Algorithms for Transfer Learning

Common algorithms for transfer learning include fine-tuning pre-trained neural networks, which adjusts existing models on new tasks with limited data. Feature-based transfer methods such as Transfer Component Analysis (TCA) align feature distributions between source and target domains to improve performance. Domain adaptation techniques like Domain-Adversarial Neural Networks (DANN) reduce domain discrepancies by learning domain-invariant representations, enhancing cross-domain generalization.
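The simplest variant of this idea is feature extraction: the pre-trained layers stay frozen and only a new output layer is trained on the small target dataset. The following is a minimal sketch under stated assumptions. `PRETRAINED_WEIGHTS` is a hypothetical stand-in for weights learned on a large source dataset, and the toy task and all function names are invented for illustration. Full fine-tuning would additionally update the frozen weights, usually with a small learning rate.

```python
import math
import random

# Hypothetical "pre-trained" weights. In practice these would come from a model
# trained on a large source dataset; here they are fixed numbers for illustration.
PRETRAINED_WEIGHTS = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]]


def frozen_features(x):
    """Frozen feature extractor: one linear layer followed by tanh. Never updated."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)))
            for row in PRETRAINED_WEIGHTS]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_head(examples, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    head_w = [0.0] * len(PRETRAINED_WEIGHTS)
    head_b = 0.0
    for _ in range(epochs):
        for x, y in examples:
            feats = frozen_features(x)
            pred = sigmoid(sum(w * f for w, f in zip(head_w, feats)) + head_b)
            error = pred - y  # gradient of the log loss with respect to the logit
            head_w = [w - lr * error * f for w, f in zip(head_w, feats)]
            head_b -= lr * error
    return head_w, head_b


def predict(head, x):
    """Return the predicted class (0 or 1) for input x."""
    head_w, head_b = head
    feats = frozen_features(x)
    return int(sigmoid(sum(w * f for w, f in zip(head_w, feats)) + head_b) >= 0.5)


# Small labeled target dataset (toy data): the label is 1 when x[0] > x[1].
rng = random.Random(0)
points = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(40)]
target_data = [(p, int(p[0] > p[1])) for p in points]

head = train_head(target_data)
accuracy = sum(predict(head, x) == y for x, y in target_data) / len(target_data)
print(f"Training accuracy with frozen features: {accuracy:.2f}")
```

Only `head_w` and `head_b` change during training, which is why this approach can work with very little target data: the number of trainable parameters is small, and the reused features already do most of the work.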

Popular Methods in Domain Adaptation

Popular methods in domain adaptation include adversarial training, which aligns feature distributions between source and target domains using domain discriminators. Another key approach involves discrepancy-based techniques like Maximum Mean Discrepancy (MMD) to minimize distributional divergence. Self-training methods leverage pseudo-labeling on target domain data to iteratively improve model performance.
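The self-training idea can be sketched with a deliberately simple model. The example below assumes a one-dimensional nearest-centroid classifier, toy Gaussian data in which the target domain is shifted by a constant, and a hypothetical confidence rule (`min_margin`). All of these are illustrative choices rather than a reference implementation. Target labels are used only to evaluate the result, never for training.

```python
import random


def fit_centroids(points, labels):
    """Nearest-centroid classifier: store one mean per class."""
    centroids = {}
    for cls in (0, 1):
        members = [p for p, label in zip(points, labels) if label == cls]
        centroids[cls] = sum(members) / len(members)
    return centroids


def predict(centroids, x):
    """Assign x to the class with the closest centroid."""
    return min(centroids, key=lambda cls: abs(x - centroids[cls]))


def confidence(centroids, x):
    """Gap between the distances to the two centroids; larger is more confident."""
    return abs(abs(x - centroids[0]) - abs(x - centroids[1]))


def self_train(source_x, source_y, target_x, rounds=5, min_margin=1.0):
    """Start from a source model, then refit on confident target pseudo-labels."""
    centroids = fit_centroids(source_x, source_y)
    for _ in range(rounds):
        pseudo = [(x, predict(centroids, x)) for x in target_x
                  if confidence(centroids, x) >= min_margin]
        if len({label for _, label in pseudo}) < 2:
            break  # not enough confident pseudo-labels to refit both classes
        centroids = fit_centroids([x for x, _ in pseudo],
                                  [label for _, label in pseudo])
    return centroids


def accuracy(centroids, xs, ys):
    return sum(predict(centroids, x) == y for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(0)
# Source domain: classes centered at -2 and +2.
source_x = [rng.gauss(-2, 1) for _ in range(100)] + [rng.gauss(2, 1) for _ in range(100)]
source_y = [0] * 100 + [1] * 100
# Target domain: the same classes, shifted by +2 (labels kept for evaluation only).
target_x = [rng.gauss(0, 1) for _ in range(100)] + [rng.gauss(4, 1) for _ in range(100)]
target_y = [0] * 100 + [1] * 100

source_only = fit_centroids(source_x, source_y)
adapted = self_train(source_x, source_y, target_x)
source_only_accuracy = accuracy(source_only, target_x, target_y)
adapted_accuracy = accuracy(adapted, target_x, target_y)
print(f"Target accuracy, source-only model: {source_only_accuracy:.2f}")
print(f"Target accuracy, after self-training: {adapted_accuracy:.2f}")
```

This sketch refits on pseudo-labeled target points alone. Many practical variants instead train on the source data together with the pseudo-labeled target data, and the confidence threshold matters: accepting too many uncertain pseudo-labels can reinforce the model's own mistakes.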

Applications of Transfer Learning in Real-World AI

Transfer learning accelerates AI model development by leveraging pre-trained models for tasks with limited data, significantly enhancing applications in medical imaging, natural language processing, and autonomous driving. By utilizing knowledge from related domains, transfer learning enables improved accuracy and efficiency in real-world AI systems such as facial recognition, speech synthesis, and fraud detection. These applications demonstrate the scalability and adaptability of transfer learning across diverse industries, optimizing performance where domain-specific data is scarce.

Use Cases of Domain Adaptation Across Industries

Domain adaptation enables AI models to apply learned knowledge from one domain to another, optimizing performance in industries such as healthcare, finance, and retail where data distributions vary significantly. In healthcare, domain adaptation improves diagnostic accuracy by adapting models trained on one population to different demographic groups. Financial institutions leverage domain adaptation to enhance fraud detection across diverse transaction types, while retailers utilize it to personalize recommendations in new market segments with limited labeled data.

Challenges and Limitations of Both Approaches

Transfer learning faces challenges such as negative transfer, where knowledge from a source task that differs too much from the target task degrades target performance instead of improving it. Domain adaptation is sensitive to large domain shifts, and semi-supervised variants still require some labeled target data, which can be costly and time-consuming to obtain. Both approaches are constrained by the lack of robust metrics for measuring transferability and domain similarity, which makes it hard to predict in advance whether transfer will help.

Future Trends in Transfer Learning and Domain Adaptation

Emerging trends in transfer learning and domain adaptation emphasize leveraging large-scale pre-trained models and fine-tuning them for specific target domains, enhancing efficiency and performance across diverse applications. Techniques integrating self-supervised learning with domain adaptation aim to minimize labeled data requirements while maximizing model generalization. Future research focuses on developing adaptive algorithms that dynamically adjust to evolving domain shifts in real-time environments, enabling robust AI deployment in complex, changing scenarios.


techiny.com
