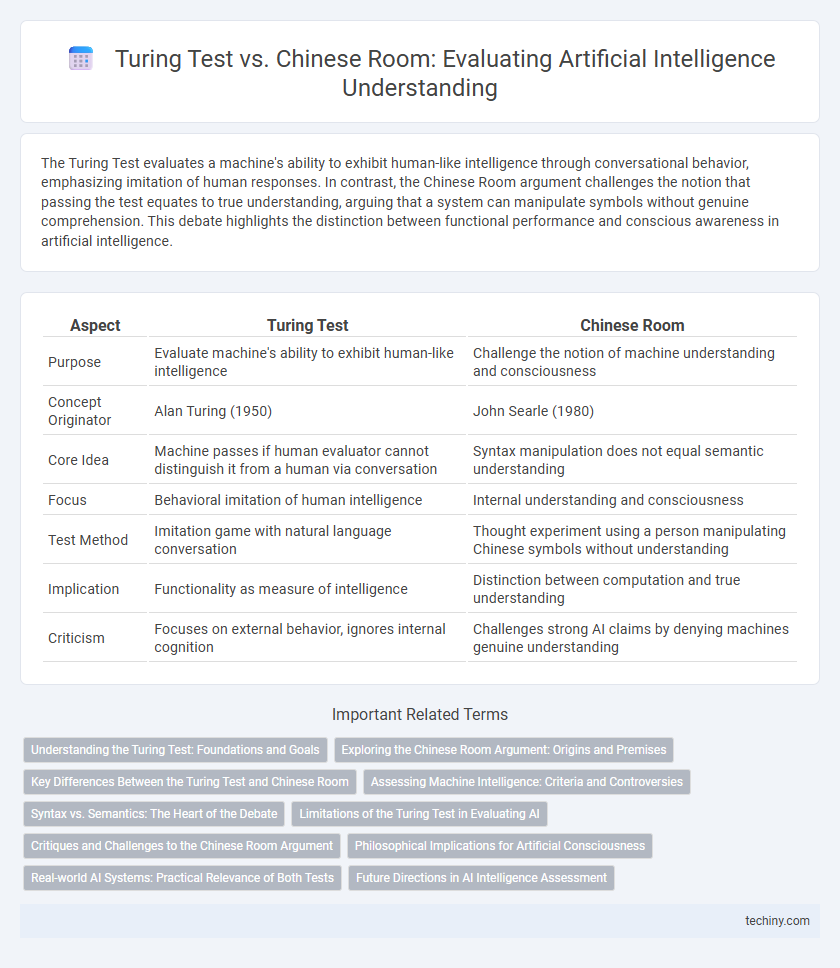

The Turing Test evaluates a machine's ability to exhibit human-like intelligence through conversational behavior, emphasizing imitation of human responses. In contrast, the Chinese Room argument challenges the notion that passing the test equates to true understanding, arguing that a system can manipulate symbols without genuine comprehension. This debate highlights the distinction between functional performance and conscious awareness in artificial intelligence.

Table of Comparison

| Aspect | Turing Test | Chinese Room |
|---|---|---|
| Purpose | Evaluate a machine's ability to exhibit human-like intelligence | Challenge the notion of machine understanding and consciousness |
| Concept Originator | Alan Turing (1950) | John Searle (1980) |
| Core Idea | Machine passes if a human evaluator cannot distinguish it from a human via conversation | Syntax manipulation does not equal semantic understanding |
| Focus | Behavioral imitation of human intelligence | Internal understanding and consciousness |
| Test Method | Imitation game with natural language conversation | Thought experiment using a person manipulating Chinese symbols without understanding them |
| Implication | Functionality as a measure of intelligence | Distinction between computation and true understanding |
| Criticism | Focuses on external behavior, ignores internal cognition | The system as a whole, rather than the person in the room, might understand |

Understanding the Turing Test: Foundations and Goals

The Turing Test, proposed by Alan Turing in 1950, assesses a machine's ability to exhibit intelligent behavior indistinguishable from that of a human, focusing on linguistic interaction rather than internal understanding. It evaluates outward performance and functional mimicry of human intelligence without requiring consciousness or comprehension. The Chinese Room argument, by contrast, questions whether passing the Turing Test demonstrates genuine understanding or merely simulates it through symbol manipulation.
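The structure of the test can be made concrete with a small simulation. The following is a minimal sketch, not Turing's own formulation: the names `run_trial` and `detection_rate`, the A/B labelling, and the idea of scoring a judge by how often it identifies the machine are all assumptions made for illustration.

```python
import random
from typing import Callable, Dict, List, Tuple

# Hypothetical types for this sketch: a responder maps a question to an answer,
# and a judge maps a text-only transcript to the label it believes is the machine.
Responder = Callable[[str], str]
Transcript = Dict[str, List[Tuple[str, str]]]
Judge = Callable[[Transcript], str]


def run_trial(judge: Judge, human: Responder, machine: Responder,
              questions: List[str], rng: random.Random) -> bool:
    """Run one round; return True if the judge picks out the machine."""
    # Hide the machine behind a randomly chosen label.
    machine_label = rng.choice(["A", "B"])
    human_label = "B" if machine_label == "A" else "A"
    respondents = {machine_label: machine, human_label: human}

    # The judge sees only text: (question, answer) pairs for each label.
    transcript = {
        label: [(q, respondents[label](q)) for q in questions]
        for label in ("A", "B")
    }
    return judge(transcript) == machine_label


def detection_rate(judge: Judge, human: Responder, machine: Responder,
                   questions: List[str], trials: int = 1000,
                   seed: int = 0) -> float:
    """Fraction of trials in which the judge correctly identifies the machine."""
    rng = random.Random(seed)
    hits = sum(run_trial(judge, human, machine, questions, rng)
               for _ in range(trials))
    return hits / trials
```

Under these assumptions, a detection rate close to 0.5 means the judge does no better than guessing, which is the sense in which the machine's behavior is indistinguishable. Note that nothing in the harness inspects how the machine produces its answers; only the text it emits is scored.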

Exploring the Chinese Room Argument: Origins and Premises

The Chinese Room argument, proposed by philosopher John Searle in 1980, challenges the notion that passing the Turing Test equates to genuine understanding in artificial intelligence. It posits that a system manipulating symbols based on syntax alone, without grasping semantic meaning, cannot be said to truly "understand" language. This thought experiment highlights the distinction between functional behavior and conscious comprehension, questioning the limits of computational models in replicating human cognitive processes.
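The setup of the thought experiment can be illustrated with a toy program. This is a minimal sketch under loud assumptions: the `RULEBOOK` entries, the `FALLBACK` reply, and the function name `chinese_room` are invented for illustration and do not come from Searle's paper, and a real rulebook would have to be vastly larger.

```python
# Hypothetical rulebook mapping input symbol strings to output symbol strings.
# The entries are illustrative only.
RULEBOOK = {
    "你好吗？": "我很好，谢谢。",      # "How are you?" -> "I am fine, thanks."
    "你叫什么名字？": "我没有名字。",  # "What is your name?" -> "I have no name."
}

FALLBACK = "请再说一遍。"  # "Please say that again."


def chinese_room(symbols: str) -> str:
    """Return the output the rulebook prescribes for the input symbols.

    The function only compares and copies strings. Nothing in it
    represents what any symbol means.
    """
    return RULEBOOK.get(symbols, FALLBACK)


if __name__ == "__main__":
    print(chinese_room("你好吗？"))
```

The English glosses appear only in comments for the reader; the program never uses them. That is the point of the analogy: the replies can look appropriate to someone outside the room even though the procedure operates on the shapes of the symbols alone.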

Key Differences Between the Turing Test and Chinese Room

The Turing Test evaluates a machine's ability to exhibit human-like intelligence through conversation, emphasizing behavior indistinguishable from a human. The Chinese Room argument, proposed by John Searle, challenges this by asserting that syntactic manipulation of symbols does not equate to genuine understanding or consciousness. Key differences lie in the Turing Test's focus on external imitation of intelligence versus the Chinese Room's critique of internal semantic comprehension.

Assessing Machine Intelligence: Criteria and Controversies

The Turing Test evaluates machine intelligence by whether a machine's conversational behavior is indistinguishable from a human's, so its criteria are purely external and behavioral. In contrast, the Chinese Room argument points to the absence of genuine understanding or consciousness, asserting that syntactic processing alone is insufficient for true intelligence. These differing criteria fuel an ongoing controversy over whether behavioral imitation or internal cognitive processes should define artificial intelligence.

Syntax vs. Semantics: The Heart of the Debate

The Turing Test judges only a machine's conversational output and does not ask whether the machine understands what its words mean, while the Chinese Room argument holds that symbol manipulation by itself carries no semantic content. John Searle's critique is that following syntactic rules does not equate to possessing intrinsic meaning or intentionality. The debate therefore turns on the difference between surface-level language processing and deep semantic understanding.

Limitations of the Turing Test in Evaluating AI

The Turing Test evaluates a machine's ability to hold human-like conversation but does not assess genuine understanding or consciousness, a gap the Chinese Room argument is designed to expose. Because the test measures only linguistic indistinguishability, it overlooks the semantic comprehension and intentionality often considered intrinsic to true intelligence. This limitation motivates evaluation methods that probe the depth of AI cognition beyond surface-level interaction.

Critiques and Challenges to the Chinese Room Argument

Critics of the Chinese Room argument dispute its central premise that symbol manipulation can never amount to understanding. The best-known objection, often called the systems reply, holds that the system as a whole, rather than the individual within the room, might exhibit true comprehension, which would undermine the argument's claim that machines lack intentionality. Furthermore, advances in AI, particularly in natural language processing and neural networks, are sometimes cited against the Chinese Room because these systems display increasingly sophisticated context-sensitive behavior, although whether such behavior constitutes understanding is exactly what the argument disputes.

Philosophical Implications for Artificial Consciousness

The Turing Test evaluates a machine's ability to exhibit human-like intelligence through behavior, emphasizing external performance as a benchmark for artificial consciousness. In contrast, the Chinese Room argument challenges this notion by highlighting the absence of genuine understanding or subjective experience despite syntactic processing. These philosophical implications question whether true artificial consciousness requires more than functional equivalence, probing the intrinsic nature of awareness in AI systems.

Real-world AI Systems: Practical Relevance of Both Tests

The Turing Test evaluates AI's ability to exhibit human-like conversational behavior, influencing chatbot and virtual assistant development in real-world applications. The Chinese Room argument challenges this by questioning whether syntactic processing equates to genuine understanding, emphasizing the need for AI systems to demonstrate semantic comprehension for tasks like language translation and autonomous decision-making. Together, these perspectives drive advancements in natural language processing and explainability in AI deployment across industries.

Future Directions in AI Intelligence Assessment

Emerging methodologies in AI intelligence assessment move beyond the traditional Turing Test, emphasizing context-aware evaluations that, in response to concerns like those raised by the Chinese Room argument, probe for comprehension rather than linguistic mimicry. Future directions prioritize interpretability, ethical frameworks, and multimodal understanding. Work on neuro-symbolic AI and explainable models aims to redefine benchmarks so that they reward human-like reasoning and contextual awareness.

Turing Test vs Chinese Room Infographic

techiny.com
