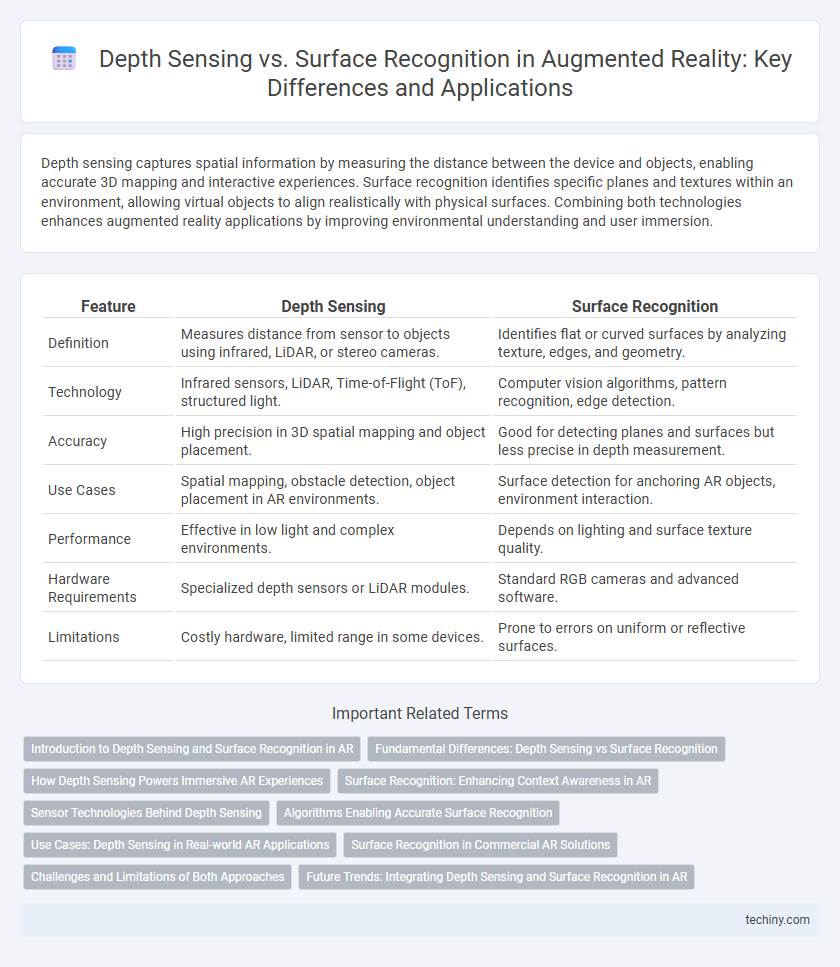

Depth sensing captures spatial information by measuring the distance between the device and objects, enabling accurate 3D mapping and interactive experiences. Surface recognition identifies specific planes and textures within an environment, allowing virtual objects to align realistically with physical surfaces. Combining both technologies enhances augmented reality applications by improving environmental understanding and user immersion.

Table of Comparison

| Feature | Depth Sensing | Surface Recognition |
|---|---|---|
| Definition | Measures distance from sensor to objects using infrared, LiDAR, or stereo cameras. | Identifies flat or curved surfaces by analyzing texture, edges, and geometry. |
| Technology | Infrared sensors, LiDAR, Time-of-Flight (ToF), structured light. | Computer vision algorithms, pattern recognition, edge detection. |
| Accuracy | High precision in 3D spatial mapping and object placement. | Good for detecting planes and surfaces but less precise in depth measurement. |
| Use Cases | Spatial mapping, obstacle detection, object placement in AR environments. | Surface detection for anchoring AR objects, environment interaction. |
| Performance | Effective in low light and complex environments. | Depends on lighting and surface texture quality. |
| Hardware Requirements | Specialized depth sensors or LiDAR modules. | Standard RGB cameras and advanced software. |
| Limitations | Costly hardware, limited range in some devices. | Prone to errors on uniform or reflective surfaces. |

Introduction to Depth Sensing and Surface Recognition in AR

Depth sensing in augmented reality (AR) utilizes technologies such as LiDAR, structured light, and time-of-flight cameras to accurately measure the distance between the device and objects in the environment, enabling precise spatial mapping and object occlusion. Surface recognition involves identifying and interpreting flat or textured surfaces like floors, walls, and tables using computer vision algorithms to anchor virtual content reliably within a physical space. Combining depth sensing and surface recognition enhances AR experiences by providing real-time environmental understanding crucial for immersive and interactive applications.

Fundamental Differences: Depth Sensing vs Surface Recognition

Depth sensing utilizes specialized hardware such as LiDAR or time-of-flight cameras to measure the distance between the device and surrounding objects, enabling precise spatial mapping and object placement in augmented reality environments. Surface recognition relies on analyzing visual features and textures from camera feeds to identify and track flat surfaces like walls or tables, facilitating virtual content anchoring without requiring depth data. The fundamental difference lies in depth sensing capturing real-world geometry through direct distance measurements, whereas surface recognition infers geometry through pattern detection and image processing techniques.
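To make "direct distance measurement" concrete, the sketch below turns one depth pixel into a 3D point using the standard pinhole camera model. The `Intrinsics` type, the `backproject` helper, and the focal length and principal point values are assumptions chosen for illustration; real devices report their own calibration.

```python
from typing import NamedTuple, Tuple


class Intrinsics(NamedTuple):
    """Pinhole camera parameters in pixels (illustrative values only)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def backproject(u: float, v: float, depth_m: float,
                k: Intrinsics) -> Tuple[float, float, float]:
    """Convert pixel (u, v) and its measured depth (metres along the
    optical axis) into a 3D point in the camera frame."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


# Hypothetical calibration for a 640x480 depth image.
k = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)

# A pixel 100 px right of the image centre, measured at 2 m.
print(backproject(420.0, 240.0, 2.0, k))  # (0.4, 0.0, 2.0)
```

Applying this to every pixel of a depth image yields a point cloud, which is the geometry a depth sensor captures directly and a camera-only pipeline has to infer.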

How Depth Sensing Powers Immersive AR Experiences

Depth sensing enables augmented reality systems to accurately map the spatial environment by capturing precise distance data using technologies like LiDAR or time-of-flight cameras. This spatial awareness allows AR devices to anchor virtual objects realistically within a three-dimensional space, enhancing user interaction and immersion. Improved depth perception reduces visual discrepancies and occlusion errors, delivering seamless integration between the real and virtual worlds.
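Occlusion handling can be sketched as a per-pixel comparison: a virtual pixel is drawn only when it is closer to the camera than the real surface measured at that pixel. The `visible_mask` function and the tiny depth grids below are hypothetical and only illustrate the idea; production renderers typically do this comparison on the GPU.

```python
from typing import List, Optional


def visible_mask(real_depth: List[List[float]],
                 virtual_depth: List[List[Optional[float]]]) -> List[List[bool]]:
    """Return True where virtual content should be drawn.

    real_depth holds sensor distances in metres; virtual_depth holds the
    rendered object's distance, or None where the object does not cover
    the pixel. A virtual pixel is visible only if it is nearer than the
    real surface behind it.
    """
    mask = []
    for real_row, virt_row in zip(real_depth, virtual_depth):
        mask.append([v is not None and v < r
                     for r, v in zip(real_row, virt_row)])
    return mask


# A real object at 1 m partly covers a virtual object placed at 2 m,
# with a wall at 3 m behind both.
real = [[1.0, 1.0, 3.0],
        [1.0, 3.0, 3.0]]
virtual = [[None, 2.0, 2.0],
           [2.0, 2.0, None]]

print(visible_mask(real, virtual))
# [[False, False, True], [False, True, False]]
```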

Surface Recognition: Enhancing Context Awareness in AR

Surface recognition in augmented reality enhances context awareness by identifying and mapping the physical surfaces in an environment, such as floors, walls, and tabletops, so that virtual content has somewhere plausible to sit. This technology uses computer vision algorithms to detect textures, shapes, and spatial relationships, facilitating realistic object placement and interaction within the AR environment. Better surface recognition improves AR applications in fields such as gaming, education, and industrial design by providing more reliable spatial context.
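One simple piece of context a recognized surface can carry is its orientation. The sketch below labels a plane as horizontal (floor or tabletop) or vertical (wall) from its normal vector. The `classify_plane` name, the y-up convention, and the 10 degree tolerance are assumptions made for this example, not values taken from any particular AR framework.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def classify_plane(normal: Vec3,
                   up: Vec3 = (0.0, 1.0, 0.0),
                   tolerance_deg: float = 10.0) -> str:
    """Label a plane by the angle between its normal and the up direction.

    `up` is assumed to be a unit vector (for example from the device's
    gravity estimate). A normal parallel to `up` means a horizontal
    surface; a normal perpendicular to `up` means a vertical one.
    """
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        raise ValueError("normal must be non-zero")
    # abs() makes the result independent of which way the normal points.
    cos_up = abs(nx * up[0] + ny * up[1] + nz * up[2]) / length
    angle = math.degrees(math.acos(min(1.0, cos_up)))
    if angle <= tolerance_deg:
        return "horizontal"
    if angle >= 90.0 - tolerance_deg:
        return "vertical"
    return "other"


print(classify_plane((0.0, 1.0, 0.0)))  # horizontal
print(classify_plane((1.0, 0.0, 0.0)))  # vertical
print(classify_plane((0.0, 1.0, 1.0)))  # other
```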

Sensor Technologies Behind Depth Sensing

Depth sensing in augmented reality relies on sensor technologies such as LiDAR, time-of-flight (ToF) cameras, and structured light sensors to measure the distance between the device and real-world objects. LiDAR uses laser pulses to create detailed 3D maps by calculating the time it takes for light to return to the sensor, enabling precise depth measurements even in low-light conditions. Time-of-flight cameras emit infrared light and measure the round-trip time (or phase shift) of its reflection to generate depth information rapidly, while structured light sensors project a known pattern onto surfaces and detect its distortions to map depth with high resolution.
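The arithmetic behind these sensors is short enough to sketch. Time-of-flight and pulsed LiDAR both reduce to distance = speed of light × round-trip time / 2. For structured light, the sketch assumes a rectified projector-camera pair, where the pattern's observed shift behaves like stereo disparity and depth = focal length × baseline / disparity. The function names and sample numbers are illustrative, not taken from a specific device.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance from a time-of-flight measurement.

    The light travels to the object and back, so the one-way distance
    is half of the total path length.
    """
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def triangulated_depth_m(focal_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth from the shift of a projected pattern (or a stereo match),
    assuming a rectified setup with the given focal length and baseline."""
    if disparity_px <= 0.0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# A 20 ns round trip corresponds to roughly 3 m.
print(round(tof_distance_m(20e-9), 3))

# A 15 px pattern shift with a 600 px focal length and 7.5 cm baseline
# also corresponds to roughly 3 m.
print(round(triangulated_depth_m(600.0, 0.075, 15.0), 3))
```

The numbers show why ToF hardware needs very fine timing: one centimetre of depth corresponds to only about 67 picoseconds of round-trip time.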

Algorithms Enabling Accurate Surface Recognition

Algorithms based on machine learning and computer vision enable accurate surface recognition by analyzing camera images and, where a depth sensor is available, depth data. In the depth-assisted case, these algorithms process point clouds and RGB-D images to distinguish between surfaces, improving the precision of object placement and interaction. Depth sensing provides raw distance information, while surface recognition algorithms interpret that data to build a structured map of the environment.
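As a classical stand-in for the learned methods mentioned above, the sketch below finds the dominant plane in a point cloud with RANSAC: repeatedly fit a plane to three random points and keep the one that the most points agree with. This is one common approach, not necessarily what any given AR framework uses. The function names, the 2 cm threshold, the iteration count, and the synthetic floor data are all assumptions for illustration.

```python
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[Point, float]  # (unit normal n, offset d) with n.p + d = 0


def plane_from_points(a: Point, b: Point, c: Point) -> Optional[Plane]:
    """Plane through three points, or None if they are (nearly) collinear."""
    ux, uy, uz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    vx, vy, vz = c[0] - a[0], c[1] - a[1], c[2] - a[2]
    # The cross product of two edge vectors gives the plane normal.
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    norm = (nx * nx + ny * ny + nz * nz) ** 0.5
    if norm < 1e-9:
        return None
    nx, ny, nz = nx / norm, ny / norm, nz / norm
    return (nx, ny, nz), -(nx * a[0] + ny * a[1] + nz * a[2])


def ransac_plane(points: List[Point], threshold_m: float = 0.02,
                 iterations: int = 200,
                 seed: int = 0) -> Tuple[Optional[Plane], List[Point]]:
    """Return the plane supported by the most points, and those inliers."""
    rng = random.Random(seed)  # seeded so the example is repeatable
    best_plane: Optional[Plane] = None
    best_inliers: List[Point] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        (nx, ny, nz), d = plane
        inliers = [p for p in points
                   if abs(nx * p[0] + ny * p[1] + nz * p[2] + d) <= threshold_m]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


# Synthetic scene: a 5x5 grid of floor points at y = 0 plus three
# points belonging to objects above the floor.
cloud: List[Point] = [(float(x), 0.0, float(z))
                      for x in range(5) for z in range(5)]
cloud += [(0.5, 0.7, 0.5), (1.5, 1.2, 2.5), (3.0, 0.4, 1.0)]

plane, inliers = ransac_plane(cloud)
print(len(inliers))  # 25: every floor point, none of the clutter
print(plane)         # normal is (0, 1, 0) or (0, -1, 0), offset 0
```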

Use Cases: Depth Sensing in Real-world AR Applications

Depth sensing enhances real-world AR applications by providing accurate spatial mapping essential for object occlusion, gesture recognition, and environmental interaction in gaming and industrial training. Technologies like LiDAR and time-of-flight sensors deliver precise distance measurements, enabling AR systems to overlay virtual objects seamlessly within complex environments. This capability is critical for healthcare simulations, automotive HUDs, and remote assistance tools where depth accuracy improves user experience and operational safety.

Surface Recognition in Commercial AR Solutions

Surface recognition in commercial AR solutions enables devices to identify and map real-world surfaces, enhancing user interaction and immersion. This technology relies on computer vision algorithms and cameras to detect textures, shapes, and contours, facilitating realistic overlay of virtual content on varied surfaces. Where depth sensing returns raw distances, surface recognition adds structure by indicating which regions are usable planes, which matters for applications in retail, manufacturing, and healthcare, where accurate spatial alignment is essential.

Challenges and Limitations of Both Approaches

Depth sensing in augmented reality faces challenges such as reduced accuracy under strong ambient infrared light (for example, direct sunlight), limited range on some devices, and higher power consumption, which constrains continuous use on battery-powered hardware. Surface recognition struggles with uniform, transparent, or reflective surfaces and often needs the user to scan the scene before surfaces are detected, limiting its adaptability in dynamic environments. Both approaches encounter limitations in scalability and integration, affecting the user experience across diverse AR applications.

Future Trends: Integrating Depth Sensing and Surface Recognition in AR

Future trends in augmented reality emphasize the integration of depth sensing and surface recognition technologies to create more immersive and accurate spatial experiences. Combining LiDAR-based depth sensing with machine learning algorithms for surface recognition improves real-time environmental mapping and object interaction. This fusion lets AR devices overlay digital content more precisely, which is crucial for applications in navigation, gaming, and industrial design.

Depth Sensing vs Surface Recognition Infographic

techiny.com
