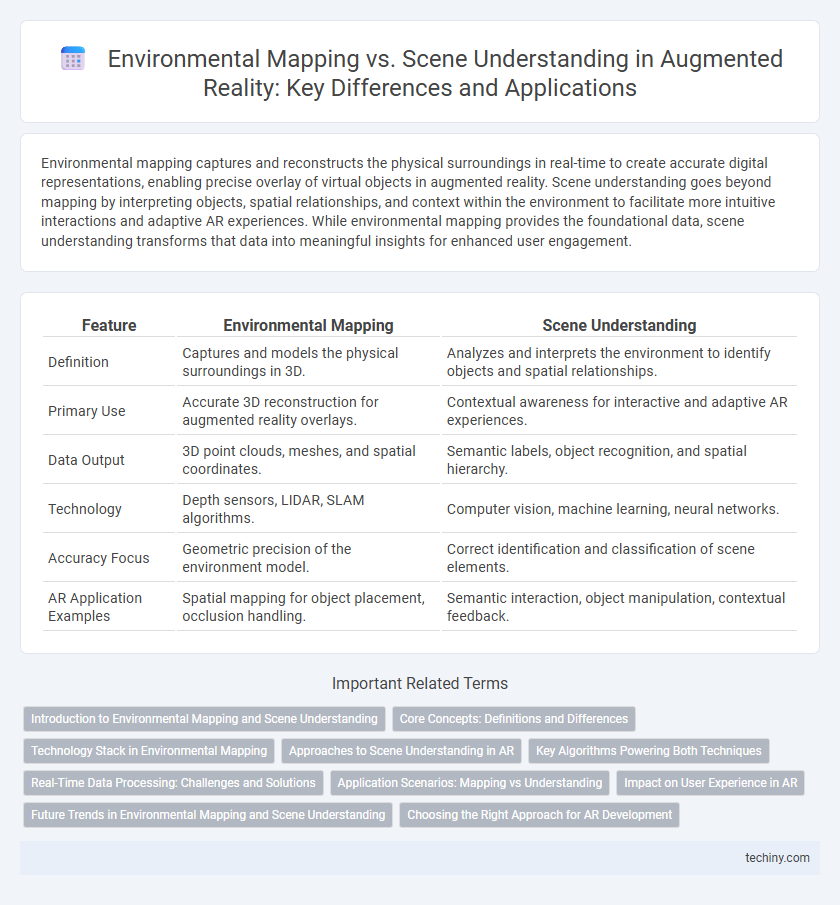

Environmental mapping captures and reconstructs the physical surroundings in real time to create accurate digital representations, enabling precise overlay of virtual objects in augmented reality. Scene understanding goes beyond mapping by interpreting objects, spatial relationships, and context within the environment, which supports more intuitive interactions and adaptive AR experiences. Environmental mapping provides the foundational geometric data; scene understanding turns that data into semantic information an application can act on.

Table of Comparison

| Feature | Environmental Mapping | Scene Understanding |
|---|---|---|
| Definition | Captures and models the physical surroundings in 3D. | Analyzes and interprets the environment to identify objects and spatial relationships. |
| Primary Use | Accurate 3D reconstruction for augmented reality overlays. | Contextual awareness for interactive and adaptive AR experiences. |
| Data Output | 3D point clouds, meshes, and spatial coordinates. | Semantic labels, object recognition, and spatial hierarchy. |
| Technology | Depth sensors, LiDAR, SLAM algorithms. | Computer vision, machine learning, neural networks. |
| Accuracy Focus | Geometric precision of the environment model. | Correct identification and classification of scene elements. |
| AR Application Examples | Spatial mapping for object placement, occlusion handling. | Semantic interaction, object manipulation, contextual feedback. |

Introduction to Environmental Mapping and Scene Understanding

Environmental mapping in augmented reality involves capturing and modeling the physical surroundings to create accurate digital overlays. Scene understanding extends this process by interpreting the mapped data to recognize objects, surfaces, and spatial relationships, enabling more interactive and context-aware AR experiences. Both technologies leverage computer vision and sensor data to enhance the realism and functionality of AR applications.

Core Concepts: Definitions and Differences

Environmental mapping captures the physical surroundings by creating spatial representations of real-world objects and surfaces, enabling accurate placement of virtual elements in augmented reality. Scene understanding interprets these mapped environments to identify and classify objects, semantic context, and interactions within the space, enhancing user experience through contextual awareness. The key difference lies in environmental mapping focusing on geometric data acquisition, while scene understanding emphasizes semantic interpretation and meaningful interaction within the AR environment.

Technology Stack in Environmental Mapping

Environmental mapping in augmented reality relies heavily on sensor fusion technology, combining data from LiDAR, RGB cameras, and inertial measurement units (IMUs) to generate precise 3D spatial representations. Advanced algorithms such as simultaneous localization and mapping (SLAM) play a pivotal role in real-time point cloud construction and feature extraction. This technology stack enables accurate environment reconstruction, forming the foundation for subsequent scene understanding and contextual interactions.
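
As a rough illustration of how depth data becomes a 3D spatial representation, the sketch below back-projects a small depth image into camera-frame points using a pinhole camera model. The function name `backproject` and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions made for this example and do not come from any particular AR SDK. A real pipeline would also transform the points into a world frame using the pose estimated by SLAM.

```python
def backproject(depth, fx, fy, cx, cy):
    """Convert a depth image (rows of depths in metres) into camera-frame 3D points.

    Assumes a pinhole camera: fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. All names here are illustrative.
    """
    points = []
    for v, row in enumerate(depth):          # v: pixel row
        for u, z in enumerate(row):          # u: pixel column, z: depth
            if z <= 0:                       # treat non-positive depth as invalid
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# A tiny 2x2 depth image with one invalid pixel.
depth = [[1.0, 1.0],
         [0.0, 2.0]]
cloud = backproject(depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
```

The resulting list of `(x, y, z)` tuples is a minimal point cloud, which is the kind of geometric output the mapping stage hands on to later processing.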

Approaches to Scene Understanding in AR

Scene understanding in augmented reality builds on the depth and geometry data produced by environmental mapping and applies techniques such as semantic segmentation and object recognition to interpret physical spaces. Environmental mapping collects spatial data to create 3D representations, while these algorithms analyze that data to identify objects, surfaces, and contextual information needed for realistic AR overlays. Effective scene understanding improves immersion by letting AR systems adapt to changing environments and complex real-world interactions.
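
One simple way to see the step from geometry to semantics is to label a detected planar surface from its normal and height. The sketch below is a hand-written heuristic, not a learned model and not the method of any specific AR framework. The function `label_plane`, the 15-degree tolerance, the 0.2 m floor threshold, and the Y-up convention are all assumptions chosen for illustration.

```python
import math


def label_plane(normal, height, up=(0.0, 1.0, 0.0), tol_deg=15.0, floor_max_height=0.2):
    """Return a coarse semantic label for a planar surface.

    normal: plane normal (any non-zero length); height: plane height in metres
    above the lowest detected surface; up: unit vector opposing gravity.
    Thresholds are illustrative assumptions, not values from a real SDK.
    """
    length = math.sqrt(sum(c * c for c in normal))
    if length == 0:
        raise ValueError("normal must be non-zero")
    # Cosine of the angle between the plane normal and the up direction.
    cos_up = sum(n * u for n, u in zip(normal, up)) / length
    cos_tol = math.cos(math.radians(tol_deg))
    if cos_up >= cos_tol:                    # normal points up: horizontal surface
        return "floor" if height <= floor_max_height else "table"
    if cos_up <= -cos_tol:                   # normal points down
        return "ceiling"
    if abs(cos_up) <= math.sin(math.radians(tol_deg)):  # normal roughly horizontal
        return "wall"
    return "unknown"


labels = [
    label_plane((0.0, 1.0, 0.0), height=0.0),
    label_plane((0.0, 2.0, 0.0), height=0.75),
    label_plane((1.0, 0.0, 0.0), height=1.0),
]
```

Production systems typically replace a rule like this with trained segmentation or detection models, but the input and output have the same shape: geometry goes in and a semantic label comes out.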

Key Algorithms Powering Both Techniques

Environmental mapping relies on simultaneous localization and mapping (SLAM) algorithms, such as those built on extended Kalman filters (EKF-SLAM) and graph-based SLAM, to create accurate, real-time spatial maps. Scene understanding uses computer vision models, including convolutional neural networks (CNNs) trained for tasks such as semantic segmentation, to interpret and classify objects within the mapped environment. Algorithms that combine depth sensing with feature extraction connect mapping and scene comprehension in augmented reality applications.
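
To give a feel for the filtering idea behind Kalman-based SLAM, here is a minimal one-dimensional Kalman filter step that fuses an odometry prediction with a position measurement. It is a deliberately simplified sketch: the model is linear and scalar, so no Jacobians are needed, whereas an extended Kalman filter linearizes nonlinear motion and measurement models and tracks a full state with landmarks. The function name `kalman_step` and all parameter names are assumptions for this example.

```python
def kalman_step(x, p, u, z, q, r):
    """One predict/update cycle of a scalar Kalman filter.

    x, p: prior position estimate and its variance
    u:    odometry displacement since the last step
    z:    direct (noisy) measurement of position
    q, r: process and measurement noise variances
    """
    # Predict: apply the motion and grow the uncertainty.
    x_pred = x + u
    p_pred = p + q
    # Update: blend prediction and measurement using the Kalman gain.
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (z - x_pred)
    p_new = (1.0 - k) * p_pred
    return x_new, p_new


x_est, p_est = kalman_step(x=0.0, p=1.0, u=1.0, z=1.2, q=0.0, r=1.0)
```

With equal prediction and measurement variances, as in this call, the gain is 0.5, so the estimate lands halfway between the predicted position and the measurement and the variance is halved.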

Real-Time Data Processing: Challenges and Solutions

Environmental mapping in augmented reality requires fast, accurate reconstruction of physical spaces, which demands high-performance sensors and optimized algorithms that can handle dynamic changes in real time. Scene understanding adds semantic interpretation on top of mapping, such as object recognition and contextual analysis, which increases computational complexity and latency. Solutions include edge computing, machine learning models optimized for speed, and sensor fusion techniques that balance accuracy and responsiveness in real-time data processing.
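
One common way to trade a little accuracy for responsiveness, offered here as an illustration rather than something the text above prescribes, is to thin the point cloud before heavier processing. The sketch below uses voxel-grid downsampling: points are bucketed into cubes of side `voxel_size` and each cube is replaced by the centroid of its points. The function name `voxel_downsample` and the sample values are assumptions for this example.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace all points falling in the same voxel with their centroid.

    points: iterable of (x, y, z) tuples in metres
    voxel_size: edge length of each cubic voxel, must be positive
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    buckets = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis.
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    # One centroid per occupied voxel, in order of first occurrence.
    return [
        tuple(sum(axis) / len(group) for axis in zip(*group))
        for group in buckets.values()
    ]


dense = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.2, 0.0, 0.0)]
sparse = voxel_downsample(dense, voxel_size=0.5)
```

A larger `voxel_size` gives fewer points and faster downstream processing at the cost of geometric detail, which is the accuracy versus responsiveness balance described above.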

Application Scenarios: Mapping vs Understanding

Environmental mapping in augmented reality primarily supports navigation and spatial positioning by creating accurate 3D models of physical surroundings, ideal for applications like indoor navigation and robotic path planning. Scene understanding advances beyond mapping by interpreting objects, surfaces, and contextual elements, enabling applications such as interactive gaming, object recognition, and context-aware AR experiences. Mapping provides structural data crucial for localization, while scene understanding enriches AR applications with semantic insights necessary for meaningful interaction.

Impact on User Experience in AR

Environmental mapping captures spatial geometry to create accurate 3D models, enabling precise object placement and interaction in AR, which enhances immersion and realism. Scene understanding adds semantic information through object recognition and contextual awareness, improving adaptive content delivery and user engagement by making AR experiences more intuitive and responsive. Combining both technologies yields context-aware AR applications with richer, more interactive environments.

Future Trends in Environmental Mapping and Scene Understanding

Future trends in environmental mapping focus on improving real-time data acquisition through advanced sensor fusion, enabling more precise 3D spatial reconstructions for augmented reality applications. Scene understanding is evolving through AI-driven semantic segmentation and contextual awareness, which improve object recognition and interaction within dynamic environments. Together, these advances aim to create more immersive, adaptive, and intelligent AR experiences by linking digital content more closely to physical surroundings.

Choosing the Right Approach for AR Development

Environmental mapping captures spatial geometry and surface properties to create accurate 3D representations, essential for placing virtual objects realistically in augmented reality. Scene understanding recognizes objects and interprets context and semantic meaning, enabling AR applications to interact intelligently with the environment and users. Selecting the right approach depends on application goals: environmental mapping suits precise spatial alignment, while scene understanding enhances contextual interaction and dynamic content generation.

Environmental Mapping vs Scene Understanding Infographic

techiny.com
