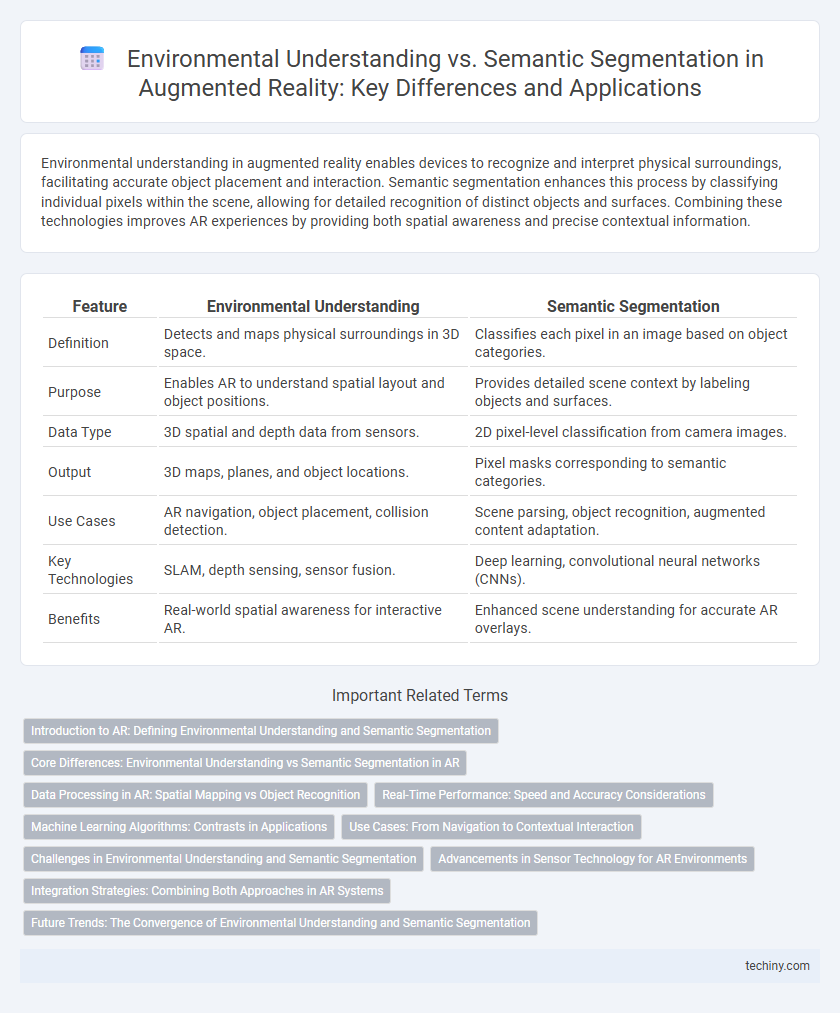

Environmental understanding in augmented reality enables devices to recognize and interpret physical surroundings, facilitating accurate object placement and interaction. Semantic segmentation enhances this process by classifying individual pixels within the scene, allowing for detailed recognition of distinct objects and surfaces. Combining these technologies improves AR experiences by providing both spatial awareness and precise contextual information.

Table of Comparison

| Feature | Environmental Understanding | Semantic Segmentation |
|---|---|---|
| Definition | Detects and maps physical surroundings in 3D space. | Classifies each pixel in an image into an object category. |
| Purpose | Enables AR to understand spatial layout and object positions. | Provides detailed scene context by labeling objects and surfaces. |
| Data Type | 3D spatial and depth data from cameras, motion sensors, and depth sensors. | 2D camera images (optionally paired with depth). |
| Output | 3D maps, planes, and object locations. | Pixel masks corresponding to semantic categories. |
| Use Cases | AR navigation, object placement, collision detection. | Scene parsing, object recognition, augmented content adaptation. |
| Key Technologies | SLAM, depth sensing, sensor fusion. | Deep learning, convolutional neural networks (CNNs). |
| Benefits | Real-world spatial awareness for interactive AR. | Enhanced scene understanding for accurate AR overlays. |

Introduction to AR: Defining Environmental Understanding and Semantic Segmentation

Environmental understanding in augmented reality (AR) is the system's ability to detect, analyze, and interpret physical surroundings so that virtual and real elements can interact realistically. Semantic segmentation, a key technique within environmental understanding, assigns each pixel of the camera image to a meaningful class such as wall, floor, object, or person, which improves spatial awareness and interaction accuracy. Integrating semantic segmentation lets AR applications deliver context-aware experiences that adapt virtual content to the environment.

Core Differences: Environmental Understanding vs Semantic Segmentation in AR

Environmental understanding in augmented reality maps and interprets physical space. It detects surfaces, estimates object positions, and tracks spatial relationships so that virtual objects can be anchored realistically. Semantic segmentation instead assigns a class label to each pixel of the captured image, identifying elements such as walls, floors, and furniture, and supplies the scene comprehension that context-aware experiences need. In short, environmental understanding answers where surfaces and objects are in 3D space, while semantic segmentation answers what each visible region is.
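Plane detection is one concrete piece of environmental understanding. The sketch below assumes the system already has a 3D point cloud, for example from SLAM feature points or a depth sensor, and fits the dominant plane with RANSAC. The function names, the 2 cm inlier threshold, and the iteration count are illustrative choices. They are not the API of any particular AR framework.

```python
import random
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float]  # (unit normal n, offset d) such that n . p + d = 0


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def plane_from_points(p1: Vec3, p2: Vec3, p3: Vec3) -> Optional[Plane]:
    """Plane through three points, or None if they are (nearly) collinear."""
    n = _cross(_sub(p2, p1), _sub(p3, p1))
    length = _dot(n, n) ** 0.5
    if length < 1e-9:
        return None
    n = (n[0] / length, n[1] / length, n[2] / length)
    return n, -_dot(n, p1)


def ransac_plane(points: Sequence[Vec3], iterations: int = 200,
                 threshold: float = 0.02,
                 seed: int = 0) -> Tuple[Optional[Plane], List[Vec3]]:
    """Return the sampled plane with the most points within `threshold` of it."""
    pts = list(points)  # needs at least 3 points
    rng = random.Random(seed)  # fixed seed keeps the sketch deterministic
    best_plane: Optional[Plane] = None
    best_inliers: List[Vec3] = []
    for _ in range(iterations):
        candidate = plane_from_points(*rng.sample(pts, 3))
        if candidate is None:
            continue
        n, d = candidate
        inliers = [p for p in pts if abs(_dot(n, p) + d) < threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = candidate, inliers
    return best_plane, best_inliers
```

Here is a usage example. Take a 10 x 10 grid of floor points at height 0 plus a few points on a vertical object. The sketch should recover a normal of roughly (0, ±1, 0) and report the 100 floor points as inliers. An AR system could then anchor virtual content on that plane.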

Data Processing in AR: Spatial Mapping vs Object Recognition

Environmental understanding in augmented reality relies on spatial mapping to capture and reconstruct the physical surroundings, which enables precise placement of digital content. Semantic segmentation complements this by classifying each pixel. That classification separates and identifies objects and supports more accurate object recognition and interaction. Combining the two gives AR applications a detailed geometric model of the environment together with real-time identification of the objects in it.
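One common way to join spatial mapping and segmentation is to lift labeled pixels into 3D. The sketch below uses the standard pinhole camera model and makes three assumptions. First, the depth map is already aligned with the camera image that was segmented. Second, the intrinsics `fx`, `fy`, `cx`, `cy` are known. Third, a depth of 0 means "no reading". The function `backproject_labeled` is a hypothetical helper for illustration.

```python
from typing import List, Sequence, Tuple

LabeledPoint = Tuple[float, float, float, str]


def backproject_labeled(
    depth: Sequence[Sequence[float]],
    labels: Sequence[Sequence[str]],
    fx: float, fy: float, cx: float, cy: float,
) -> List[LabeledPoint]:
    """Lift each pixel with valid depth to a camera-space 3D point that
    carries the semantic label predicted for that pixel (pinhole model)."""
    points: List[LabeledPoint] = []
    for v, row in enumerate(depth):        # v = image row
        for u, z in enumerate(row):        # u = image column, z = depth in metres
            if z <= 0.0:                   # assumption: 0 marks a missing reading
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy          # y points down, as in image coordinates
            points.append((x, y, z, labels[v][u]))
    return points
```

The resulting points carry both geometry and meaning. For example, "chair" points could be grouped into an object with a 3D extent, and "floor" points could serve as placement candidates. In a full system, these camera-space points would usually be transformed into world coordinates using the tracked camera pose.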

Real-Time Performance: Speed and Accuracy Considerations

Environmental understanding in augmented reality processes real-time sensor data to interpret spatial layouts quickly, prioritizing low latency so that user interactions feel seamless. Semantic segmentation adds accuracy by classifying each pixel into meaningful categories. However, dense per-pixel inference is computationally expensive and can strain a real-time frame budget, especially on mobile hardware. Lightweight neural networks and efficient processing pipelines help balance this trade-off, for example by running segmentation at reduced resolution or on only a subset of frames. Together, these measures keep both fast environment mapping and precise object recognition available for immersive AR experiences.
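The speed/accuracy trade-off comes down to frame-budget arithmetic. At 60 fps each frame has about 16.7 ms. A segmentation model slower than its share of that budget cannot run on every frame. The sketch below computes how often segmentation could run so that its amortized cost fits within a chosen share of the budget. Between runs, it reuses the most recent mask. The 20 ms inference time and the 50% share are hypothetical numbers. Real systems also often run the model asynchronously on a separate thread or accelerator.

```python
import math
from typing import List, Optional


def frame_budget_ms(target_fps: float) -> float:
    """Time available per rendered frame, in milliseconds."""
    return 1000.0 / target_fps


def segmentation_interval(target_fps: float, segmentation_ms: float,
                          share: float = 0.5) -> int:
    """Run segmentation once every N frames so that its amortized cost
    stays within `share` of each frame's budget."""
    allowed_ms = frame_budget_ms(target_fps) * share
    return max(1, math.ceil(segmentation_ms / allowed_ms))


def mask_schedule(num_frames: int, interval: int) -> List[Optional[int]]:
    """For each frame, the index of the frame whose mask is displayed."""
    used: List[Optional[int]] = []
    last: Optional[int] = None
    for i in range(num_frames):
        if i % interval == 0:
            last = i  # the expensive segmentation model runs on this frame
        used.append(last)
    return used
```

With a hypothetical 20 ms model at 60 fps and half the frame budget allotted, `segmentation_interval(60, 20.0)` gives 3. The masks then refresh on frames 0, 3, 6, and so on, while tracking and rendering continue every frame.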

Machine Learning Algorithms: Contrasts in Applications

Environmental understanding combines geometric methods, such as SLAM and depth-based scene reconstruction, with machine learning models that interpret spatial layouts and object relationships for real-time interaction in augmented reality. Semantic segmentation typically relies on deep networks, most commonly convolutional neural networks, that classify each pixel into predefined categories, providing detailed object recognition and context awareness. The two differ in application focus. Environmental understanding targets structural comprehension for navigation and placement, while semantic segmentation prioritizes object-level detail for interaction and content overlay.
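Many segmentation CNNs end with a 1x1 convolution. This layer applies the same small linear classifier to every pixel's feature vector and produces one score (logit) per class. An argmax over those scores then yields the label mask. The sketch below shows only this final step. The feature vectors, weights, and biases are hypothetical inputs standing in for what the earlier convolutional layers and training would produce.

```python
from typing import List

Grid = List[List[List[float]]]  # H x W x channels


def conv1x1_logits(features: Grid, weights: List[List[float]],
                   biases: List[float]) -> Grid:
    """1x1 convolution: map each pixel's C feature channels to K class logits
    using the same weights (K x C) and biases (K) at every pixel."""
    return [
        [
            [sum(w * f for w, f in zip(w_k, pixel)) + b_k
             for w_k, b_k in zip(weights, biases)]
            for pixel in row
        ]
        for row in features
    ]


def argmax_mask(logits: Grid) -> List[List[int]]:
    """Pick the highest-scoring class index at each pixel (ties -> lowest index)."""
    return [[max(range(len(scores)), key=scores.__getitem__) for scores in row]
            for row in logits]
```

For instance, suppose class 0 is "floor" and class 1 is "wall". A pixel whose features score higher for class 1 is labeled wall. Changing a class bias shifts every pixel's decision toward or away from that class at once.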

Use Cases: From Navigation to Contextual Interaction

Environmental understanding in augmented reality (AR) enables devices to map physical spaces, supporting navigation and obstacle avoidance by detecting surfaces and spatial layouts in real time. Semantic segmentation enhances this by classifying elements within the environment, such as distinguishing between walls, furniture, and pedestrians, which facilitates contextual interaction like object recognition and adaptive content placement. Combining these technologies drives practical AR applications in indoor navigation, automotive heads-up displays, and interactive retail experiences, where precise spatial and semantic awareness improves user engagement and functionality.
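Adaptive content placement often needs both answers at once: where a surface is and what it is. A minimal sketch, under two assumptions: each detected plane is represented by the image pixels `(u, v)` it covers, and a segmentation mask for the same frame is available. The sketch labels each plane by majority vote and keeps only planes whose label suits the content, for example placing a virtual sofa only on floor planes. The plane names and the representation are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Optional, Sequence, Set, Tuple

Pixel = Tuple[int, int]  # (u, v) = (column, row)


def label_plane(plane_pixels: Iterable[Pixel],
                mask: Sequence[Sequence[str]]) -> Optional[str]:
    """Majority semantic label among the pixels a detected plane covers."""
    votes = Counter(mask[v][u] for (u, v) in plane_pixels)
    return votes.most_common(1)[0][0] if votes else None


def placeable_planes(planes: Dict[str, List[Pixel]],
                     mask: Sequence[Sequence[str]],
                     allowed: Set[str]) -> List[str]:
    """Names of planes whose majority label is in `allowed`."""
    return [name for name, pixels in planes.items()
            if label_plane(pixels, mask) in allowed]
```

Majority voting tolerates a few mislabeled pixels at plane edges, which matters because segmentation is usually least reliable near object boundaries.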

Challenges in Environmental Understanding and Semantic Segmentation

Environmental understanding in augmented reality faces challenges such as accurately interpreting diverse and dynamic real-world contexts, dealing with occlusions, and ensuring real-time processing on limited hardware. Semantic segmentation struggles to delineate fine-grained object boundaries and to stay accurate under varying lighting conditions and viewpoints. Both increasingly rely on machine learning models, and segmentation in particular depends on large pixel-annotated datasets to achieve reliable scene comprehension.
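Segmentation accuracy is commonly measured with intersection over union (IoU) per class, averaged into mean IoU (mIoU). Boundary errors and lighting-induced mistakes show up directly as lower IoU. The sketch below computes it from a predicted and a ground-truth label mask of equal size, assuming class labels are integers in `range(num_classes)`. Classes absent from both masks are skipped.

```python
from typing import List, Optional, Sequence


def per_class_iou(pred: Sequence[Sequence[int]], truth: Sequence[Sequence[int]],
                  num_classes: int) -> List[Optional[float]]:
    """IoU = |pred ∩ truth| / |pred ∪ truth| for each class (None if absent)."""
    inter = [0] * num_classes
    union = [0] * num_classes
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p == t:
                inter[p] += 1
                union[p] += 1
            else:              # pixel is in pred of class p and truth of class t
                union[p] += 1
                union[t] += 1
    return [inter[c] / union[c] if union[c] else None for c in range(num_classes)]


def mean_iou(pred: Sequence[Sequence[int]], truth: Sequence[Sequence[int]],
             num_classes: int) -> float:
    """Average IoU over classes that appear in either mask."""
    ious = [x for x in per_class_iou(pred, truth, num_classes) if x is not None]
    return sum(ious) / len(ious)
```

For a 2 x 2 example, suppose one "floor" pixel is mislabeled as "wall". Floor IoU drops to 1/2 and wall IoU to 2/3, giving an mIoU of 7/12.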

Advancements in Sensor Technology for AR Environments

Advances in sensor technology have significantly improved environmental understanding in augmented reality (AR) by enabling more precise spatial mapping and real-time object detection. LiDAR scanners and depth-sensing cameras provide direct 3D measurements, which improves AR systems' ability to interpret complex environments. Semantic segmentation algorithms can use this depth data alongside camera images to classify and label scene elements, making AR experiences more immersive and context-aware.
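A direct payoff of depth sensing is occlusion. Virtual content should be hidden wherever a real surface is closer to the camera. The sketch below compares, per pixel, the rendered depth of a virtual object with the sensed real depth. It makes two assumptions: both depth maps are aligned and in metres, `None` marks pixels the virtual object does not cover, and 0 marks a missing sensor reading. The function name is hypothetical.

```python
from typing import List, Optional, Sequence


def virtual_visibility(
    real_depth: Sequence[Sequence[float]],
    virtual_depth: Sequence[Sequence[Optional[float]]],
) -> List[List[bool]]:
    """Per-pixel visibility of rendered virtual content against sensed real depth."""
    visible: List[List[bool]] = []
    for real_row, virt_row in zip(real_depth, virtual_depth):
        row: List[bool] = []
        for r, v in zip(real_row, virt_row):
            if v is None:        # virtual object does not cover this pixel
                row.append(False)
            elif r <= 0.0:       # no depth reading: assume nothing real is in front
                row.append(True)
            else:
                row.append(v < r)  # visible only if closer than the real surface
        visible.append(row)
    return visible
```

Treating missing readings as "visible" is one design choice among several. A more conservative system might instead fill the holes in the depth map first.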

Integration Strategies: Combining Both Approaches in AR Systems

Integrating environmental understanding and semantic segmentation in AR systems improves spatial awareness and contextual interaction by merging depth sensing, object recognition, and scene labeling. Fusion techniques combine sensor data and machine learning outputs into a single environmental model that supports real-time object detection and adaptive content placement. This hybrid approach benefits AR experiences in navigation, maintenance, and immersive gaming by improving both geometric accuracy and semantic relevance.
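One simple fusion strategy accumulates semantic labels in a 3D voxel grid over many frames. A single frame's mistakes are then outvoted by later observations of the same spot. The sketch below makes two assumptions: the labeled points are already in a shared world frame (camera poses from tracking applied beforehand), and the 5 cm voxel size is arbitrary. `SemanticVoxelMap` is a hypothetical class, not part of any AR SDK.

```python
import math
from collections import Counter, defaultdict
from typing import DefaultDict, Iterable, Optional, Tuple

VoxelKey = Tuple[int, int, int]


class SemanticVoxelMap:
    """Accumulates per-voxel label votes from labeled 3D points."""

    def __init__(self, voxel_size: float = 0.05) -> None:
        self.voxel_size = voxel_size
        self.votes: DefaultDict[VoxelKey, Counter] = defaultdict(Counter)

    def _key(self, x: float, y: float, z: float) -> VoxelKey:
        s = self.voxel_size
        return (math.floor(x / s), math.floor(y / s), math.floor(z / s))

    def integrate(self, labeled_points: Iterable[Tuple[float, float, float, str]]) -> None:
        """Add one vote per point to the voxel containing it."""
        for x, y, z, label in labeled_points:
            self.votes[self._key(x, y, z)][label] += 1

    def label_at(self, x: float, y: float, z: float) -> Optional[str]:
        """Majority label of the voxel containing (x, y, z), or None if unobserved."""
        counts = self.votes.get(self._key(x, y, z))  # .get avoids creating empty voxels
        return counts.most_common(1)[0][0] if counts else None
```

Each frame's labeled points, for example from depth back-projection, can be passed to `integrate`. Content-placement logic can then query `label_at` for a stable, multi-frame answer instead of relying on a single noisy mask.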

Future Trends: The Convergence of Environmental Understanding and Semantic Segmentation

Future trends in augmented reality emphasize the convergence of environmental understanding and semantic segmentation to create more immersive and context-aware experiences. Combining 3D spatial mapping with real-time object recognition enables AR systems to interpret and interact with complex environments more accurately. Advances in machine learning models and sensor fusion are driving this integration, enhancing applications in navigation, gaming, and industrial design.

Environmental Understanding vs Semantic Segmentation Infographic

techiny.com
