Gesture control in augmented reality offers a more intuitive and immersive experience by letting users interact with digital elements through natural hand movements, reducing the need for physical contact with devices. Touch control remains familiar and precise, providing reliable input, especially in environments where lighting or space constraints can hinder gesture detection. Combining both control schemes can improve usability by drawing on the strengths of each method in different contexts.

Table of Comparison

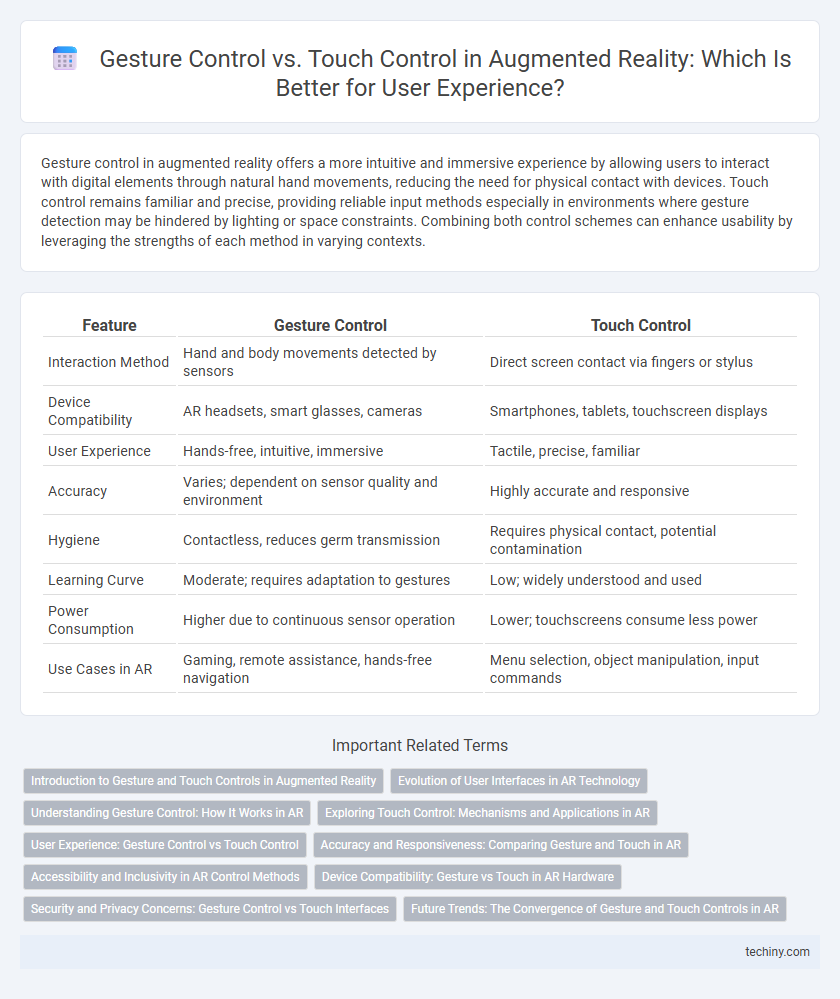

| Feature | Gesture Control | Touch Control |
|---|---|---|
| Interaction Method | Hand and body movements detected by sensors | Direct screen contact via fingers or stylus |
| Device Compatibility | AR headsets, smart glasses, cameras | Smartphones, tablets, touchscreen displays |
| User Experience | Hands-free, intuitive, immersive | Tactile, precise, familiar |
| Accuracy | Varies; dependent on sensor quality and environment | Highly accurate and responsive |
| Hygiene | Contactless, reduces germ transmission | Requires physical contact, potential contamination |
| Learning Curve | Moderate; requires adaptation to gestures | Low; widely understood and used |
| Power Consumption | Higher due to continuous sensor operation | Lower; touchscreens consume less power |
| Use Cases in AR | Gaming, remote assistance, hands-free navigation | Menu selection, object manipulation, input commands |

Introduction to Gesture and Touch Controls in Augmented Reality

Gesture control in augmented reality allows users to interact with digital content through natural hand movements, enhancing immersion without physical contact. Touch control relies on direct contact with touch-sensitive surfaces or devices, providing precise input but limiting freedom of movement. Both methods enable intuitive navigation in AR environments, with gesture control supporting hands-free interaction and touch control offering tactile feedback.

Evolution of User Interfaces in AR Technology

Gesture control has reshaped user interfaces in augmented reality by enabling more natural interactions than traditional touch controls, which depend on direct physical contact with a device. Advances in computer vision and sensor technology now let gesture recognition systems interpret complex hand and body movements, so interaction is no longer bounded by the size of a touchscreen or the availability of a surface to touch. This evolution reflects a broader shift toward seamless, spatially aware interaction that can improve accessibility and engagement in AR applications.

Understanding Gesture Control: How It Works in AR

Gesture control in Augmented Reality (AR) uses cameras and other sensors to track hand movements and interpret them as commands, letting users interact with virtual objects without physical contact. Machine learning models typically estimate hand pose from each camera frame in real time, and the tracked pose or motion is then matched to gestures such as a pinch or a swipe; how accurate this recognition is depends on lighting, occlusion, and sensor quality. This hands-free interaction can feel more natural and immersive than traditional touch interfaces.
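To make the recognition step concrete, here is a minimal sketch of how a pinch might be detected once a hand tracker has produced keypoints. It assumes the tracker outputs 21 landmarks per hand in normalized image coordinates, with indices following the layout used by MediaPipe Hands; the `Landmark` type, `is_pinch` function, and the 0.25 threshold are hypothetical and for illustration only.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Landmark:
    """One tracked hand keypoint in normalized image coordinates (assumed format)."""
    x: float
    y: float
    z: float = 0.0


# Assumed keypoint indices, following the 21-point layout used by MediaPipe Hands.
WRIST = 0
THUMB_TIP = 4
INDEX_TIP = 8
MIDDLE_MCP = 9  # knuckle at the base of the middle finger


def _dist(a: Landmark, b: Landmark) -> float:
    return math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))


def is_pinch(landmarks: List[Landmark], threshold: float = 0.25) -> bool:
    """Return True if the thumb and index fingertips are (nearly) touching.

    The fingertip gap is divided by the wrist-to-knuckle distance, a proxy for
    hand size, so the same threshold works whether the hand is near or far
    from the camera. The 0.25 default is illustrative, not a tuned value.
    """
    hand_size = _dist(landmarks[WRIST], landmarks[MIDDLE_MCP])
    if hand_size == 0.0:
        return False  # degenerate or missing tracking data
    gap = _dist(landmarks[THUMB_TIP], landmarks[INDEX_TIP])
    return gap / hand_size < threshold
```

Normalizing by hand size is the key design choice: a raw fingertip distance shrinks as the hand moves away from the camera, which would make a fixed threshold trigger false pinches. A production recognizer would also debounce the result over several frames.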

Exploring Touch Control: Mechanisms and Applications in AR

Touch control in augmented reality (AR) relies on the capacitive or resistive touchscreen of the device to register precise taps and swipes; the app then maps each 2D touch point onto virtual objects or detected surfaces in the 3D scene. Applications of touch control in AR include virtual object manipulation, immersive gaming, and user interface navigation, providing direct engagement within mixed reality environments. Haptic feedback adds a tactile response to each interaction, and multi-touch gestures such as pinch-to-zoom and two-finger rotation support more complex commands.
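In handheld AR, a touch lands on the flat screen, so the app has to turn that 2D point into a 3D position before it can place or select anything. A minimal sketch of that ray cast follows, assuming a pinhole camera with known intrinsics (`fx`, `fy`, `cx`, `cy`) and a floor plane already detected and expressed in camera coordinates. The function names are hypothetical; AR frameworks normally expose this as a built-in hit test.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def touch_to_ray(u: float, v: float, fx: float, fy: float,
                 cx: float, cy: float) -> Vec3:
    """Back-project a touch at pixel (u, v) into a camera-space ray direction.

    Pinhole model in the OpenCV convention: x right, y down, z forward.
    fx, fy are focal lengths and (cx, cy) is the principal point, in pixels.
    """
    return ((u - cx) / fx, (v - cy) / fy, 1.0)


def hit_test_plane(direction: Vec3, plane_point: Vec3,
                   plane_normal: Vec3) -> Optional[Vec3]:
    """Intersect a ray starting at the camera origin with a plane.

    Solves dot(n, t * d - p0) = 0 for t. Returns the hit point in camera
    space, or None if the ray is parallel to the plane or the plane is behind.
    """
    denom = dot(plane_normal, direction)
    if abs(denom) < 1e-9:
        return None  # ray runs parallel to the plane
    t = dot(plane_normal, plane_point) / denom
    if t <= 0.0:
        return None  # intersection would be behind the camera
    return (t * direction[0], t * direction[1], t * direction[2])
```

For example, with `fx = fy = 500` and a principal point at (320, 240), a tap at pixel (320, 490) on a floor 1.5 m below the camera (plane point (0, 1.5, 0), normal (0, 1, 0)) hits the floor at (0, 1.5, 3), i.e. 3 m in front of the camera.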

User Experience: Gesture Control vs Touch Control

Gesture control in augmented reality offers a more immersive and intuitive user experience by letting natural hand movements manipulate virtual objects, which reduces physical contact and improves hygiene. Touch control provides precise, familiar input, benefiting users who prioritize accuracy and tactile feedback on AR devices with touch-sensitive surfaces. Each method suits different tasks: gesture control supports fluid interaction in dynamic environments, while touch control excels at detailed work.

Accuracy and Responsiveness: Comparing Gesture and Touch in AR

Gesture control in augmented reality lets users interact naturally without physical contact, but its accuracy can suffer from environmental factors such as lighting and occlusion and from sensor limitations. Touch control offers precise input with direct tactile feedback, making it highly reliable for fine manipulation in AR interfaces. Touch input is also generally more responsive: a touchscreen reports contact directly, whereas gesture input must first capture camera frames and run hand-tracking inference, and any smoothing applied to reduce jitter adds further latency.
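The accuracy and responsiveness trade-off for gesture input can be illustrated with a smoothing filter. Hand-tracking positions tend to jitter from frame to frame, and filtering them steadies the cursor at the cost of lag. The sketch below uses a plain exponential moving average as a stand-in; it is one common choice, not necessarily what any given AR platform uses (adaptive filters such as the One Euro filter are also popular). The helper names are hypothetical.

```python
from typing import Iterable, List, Optional


def ema_filter(samples: Iterable[float], alpha: float) -> List[float]:
    """Exponential moving average: y[n] = alpha * x[n] + (1 - alpha) * y[n-1].

    alpha = 1 passes input through unchanged; smaller values suppress more
    jitter but make the output trail behind real motion.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    out: List[float] = []
    prev: Optional[float] = None
    for x in samples:
        prev = x if prev is None else alpha * x + (1.0 - alpha) * prev
        out.append(prev)
    return out


def frames_to_settle(values: List[float], target: float, tol: float) -> int:
    """Index of the first value within tol of target, or -1 if none is."""
    for i, v in enumerate(values):
        if abs(v - target) <= tol:
            return i
    return -1
```

For a hand that jumps from position 0 to position 1, the unfiltered signal settles within 0.1 of the target at frame 1, while `ema_filter(..., alpha=0.5)` needs until frame 4. At 60 frames per second, those three extra frames add roughly 50 ms of lag, which is the kind of delay a touchscreen avoids.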

Accessibility and Inclusivity in AR Control Methods

Gesture control in augmented reality can improve accessibility for users who find it difficult to reach or press a device's surface, because it allows interaction without physical contact; however, mid-air gestures can be tiring and may be harder for users with limited hand or arm mobility. Touch control, while precise, can be restrictive for individuals with motor impairments or those unable to reach device surfaces comfortably. Combining both methods, and letting users choose between them, produces a more versatile AR interface that accommodates diverse needs and physical abilities.
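One way to combine both methods is to route gestures and touches through a shared action layer, so every command is reachable by either modality and users can remap inputs to suit their abilities. The sketch below assumes a simple string-keyed design; `InputRouter` and its methods are hypothetical names, not part of any AR SDK.

```python
from typing import Callable, Dict, Optional, Tuple


class InputRouter:
    """Routes gesture and touch inputs to the same app-level actions.

    Bindings are keyed by (modality, input name), so a user who cannot
    perform a gesture can trigger the same action by touch, and vice versa.
    """

    def __init__(self) -> None:
        self._bindings: Dict[Tuple[str, str], str] = {}
        self._handlers: Dict[str, Callable[[], str]] = {}

    def on_action(self, action: str, handler: Callable[[], str]) -> None:
        """Register the code that runs when an action fires."""
        self._handlers[action] = handler

    def bind(self, modality: str, input_name: str, action: str) -> None:
        # Rebinding the same input overwrites the previous mapping,
        # which lets users customize controls to their abilities.
        self._bindings[(modality, input_name)] = action

    def dispatch(self, modality: str, input_name: str) -> Optional[str]:
        """Run the handler bound to this input; return None if unbound."""
        action = self._bindings.get((modality, input_name))
        handler = self._handlers.get(action) if action is not None else None
        return handler() if handler is not None else None
```

Binding both `("gesture", "pinch")` and `("touch", "tap")` to a `"select"` action means the app logic never needs to know which input the user chose.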

Device Compatibility: Gesture vs Touch in AR Hardware

Gesture control in augmented reality can work on a wide range of devices because it relies on the cameras and sensors already built into many AR headsets and smartphones, enabling hands-free interaction across platforms. Touch control requires a touch-sensitive surface, such as a capacitive touchscreen, which limits it mainly to handheld devices and to AR glasses with touch-sensitive surfaces. As AR hardware evolves, gesture recognition is being integrated into more devices, extending interaction beyond the constraints of touch interfaces.
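Because support for each input method depends on the hardware, an app running on several kinds of AR devices might check capabilities at startup and offer only the modes that are available. A minimal sketch, assuming the platform reports simple capability flags; `DeviceCaps`, `input_modes`, and the preference order are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DeviceCaps:
    """Hypothetical capability flags an AR app might read at startup."""
    has_hand_tracking: bool      # cameras/sensors usable for gesture input
    has_touchscreen: bool        # e.g. a phone or tablet display
    has_touchpad: bool = False   # e.g. a touch-sensitive strip on smart glasses


def input_modes(caps: DeviceCaps) -> List[str]:
    """Return the supported input modes, preferred mode first.

    Assumed policy: prefer a touchscreen for precision when one exists,
    then camera-based gestures, then a touchpad.
    """
    modes: List[str] = []
    if caps.has_touchscreen:
        modes.append("touch")
    if caps.has_hand_tracking:
        modes.append("gesture")
    if caps.has_touchpad:
        modes.append("touchpad")
    return modes
```

A phone with camera-based hand tracking would get `["touch", "gesture"]`, while a headset without a screen would get `["gesture"]`. Other preference orders are equally reasonable; the point is to derive the available modes from the hardware rather than hard-coding one.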

Security and Privacy Concerns: Gesture Control vs Touch Interfaces

Gesture control in augmented reality avoids some risks that are specific to touch interfaces. Touchscreens, especially shared ones, require physical contact and leave smudge patterns that can reveal PINs or unlock patterns. Gesture input leaves no such residue, but it relies on cameras and sensors that continuously capture the user's hands and surroundings, and hand-tracking data can itself be sensitive. Gesture control therefore strengthens privacy only when that motion data is processed on the device and encrypted whenever it is stored or transmitted; touch control, for its part, depends on hardware whose input may be exposed to interception on unsecured devices.

Future Trends: The Convergence of Gesture and Touch Controls in AR

Gesture control and touch control in augmented reality are rapidly converging to offer seamless, intuitive user experiences by integrating hand movements with haptic feedback. Emerging AR devices increasingly combine sophisticated gesture recognition algorithms with tactile interfaces, enabling precise manipulation of virtual objects without sacrificing physical interaction. Future trends emphasize hybrid control systems that leverage machine learning to adaptively blend gesture and touch inputs, enhancing accessibility and immersion in AR environments.
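A hybrid system has to decide, frame by frame, which input to act on. The sketch below is a simple rule-based stand-in for the learned, adaptive blending described above, not a machine learning model: touch always wins because contact is unambiguous, and gestures pass only above a confidence threshold that rises when the user undoes a gesture-triggered action and falls when such actions are kept. All names and numbers (`HybridArbiter`, the 0.8 starting threshold, the 0.05 step) are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InputEvent:
    modality: str       # "touch" or "gesture"
    action: str         # app-level command, e.g. "select"
    confidence: float   # 1.0 for touch; recognizer score for gestures


@dataclass
class HybridArbiter:
    """Chooses one input per frame and adapts how much it trusts gestures."""
    threshold: float = 0.8
    step: float = 0.05
    min_threshold: float = 0.5
    max_threshold: float = 0.95

    def choose(self, touch: Optional[InputEvent],
               gesture: Optional[InputEvent]) -> Optional[InputEvent]:
        if touch is not None:
            return touch  # direct contact is treated as unambiguous
        if gesture is not None and gesture.confidence >= self.threshold:
            return gesture
        return None  # low-confidence gestures are ignored

    def report_undo(self) -> None:
        """User reverted a gesture action: demand more confidence next time."""
        self.threshold = min(self.max_threshold, self.threshold + self.step)

    def report_kept(self) -> None:
        """User kept a gesture action: relax the threshold slightly."""
        self.threshold = max(self.min_threshold, self.threshold - self.step)
```

A learned approach would replace the fixed step with a model of each user's error patterns, but the interface, two candidate inputs in and at most one action out, would stay the same.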

Gesture Control vs Touch Control Infographic

techiny.com