Multimodal interaction in augmented reality combines multiple input modalities such as voice, gesture, and touch, creating a more immersive and natural user experience than unimodal interaction, which relies on a single input method. Having several inputs to choose from lets users engage with AR environments more intuitively, which can improve accuracy and efficiency in task completion. Richer feedback and greater adaptability help multimodal systems address the limitations of unimodal interfaces, making AR applications more accessible and responsive to user needs.

Table of Comparison

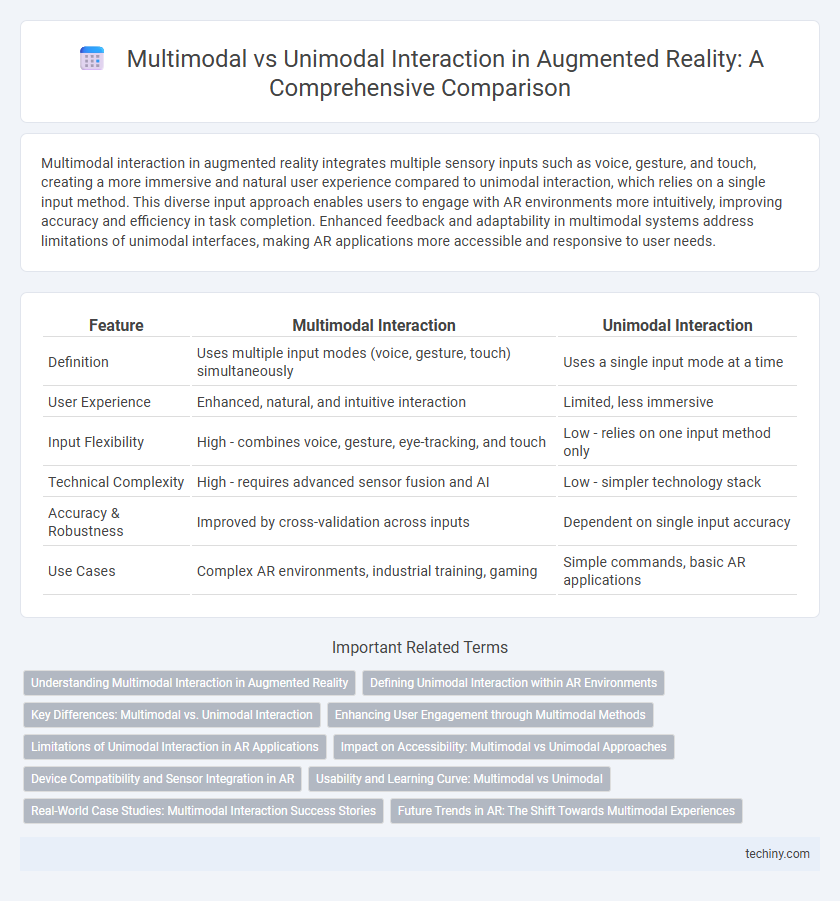

| Feature | Multimodal Interaction | Unimodal Interaction |
|---|---|---|
| Definition | Uses multiple input modes (voice, gesture, touch) simultaneously | Uses a single input mode at a time |
| User Experience | Enhanced, natural, and intuitive interaction | Limited, less immersive |
| Input Flexibility | High - combines voice, gesture, eye-tracking, and touch | Low - relies on one input method only |
| Technical Complexity | High - requires advanced sensor fusion and AI | Low - simpler technology stack |
| Accuracy & Robustness | Improved by cross-validation across inputs | Dependent on single input accuracy |
| Use Cases | Complex AR environments, industrial training, gaming | Simple commands, basic AR applications |

Understanding Multimodal Interaction in Augmented Reality

Multimodal interaction in augmented reality integrates multiple input methods such as voice commands, gestures, and eye tracking to create a more immersive and intuitive user experience. This approach contrasts with unimodal interaction, which relies on a single input modality, often limiting user flexibility and engagement. Advanced sensor fusion and real-time data processing enable seamless interpretation of combined inputs, enhancing task efficiency and accuracy within AR environments.
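As a rough illustration of how combined inputs might be interpreted, the sketch below pairs a spoken command with the gaze target closest to it in time. The `InputEvent` type, the `fuse_command` function, and the one-second window are all assumptions made for this example, not part of any particular AR SDK.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class InputEvent:
    modality: str     # hypothetical labels: "voice", "gaze", "gesture"
    value: str        # recognized command or target id
    timestamp: float  # seconds since session start


def fuse_command(events: List[InputEvent], window: float = 1.0) -> Optional[Dict[str, str]]:
    """Pair the latest voice command with the gaze target closest in time.

    Returns None when no gaze event lies within `window` seconds of the command.
    """
    voice = [e for e in events if e.modality == "voice"]
    gaze = [e for e in events if e.modality == "gaze"]
    if not voice or not gaze:
        return None
    command = max(voice, key=lambda e: e.timestamp)
    target = min(gaze, key=lambda e: abs(e.timestamp - command.timestamp))
    if abs(target.timestamp - command.timestamp) > window:
        return None
    return {"action": command.value, "target": target.value}


events = [
    InputEvent("gaze", "lamp", 10.0),
    InputEvent("gaze", "valve_3", 12.1),
    InputEvent("voice", "highlight", 12.4),
]
result = fuse_command(events)
print(result)  # {'action': 'highlight', 'target': 'valve_3'}
```

Matching on timestamps is only one possible design; a production system would likely also weigh recognition confidence and scene context.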

Defining Unimodal Interaction within AR Environments

Unimodal interaction in augmented reality environments refers to user engagement that relies solely on a single sensory or input modality, such as gesture, voice, or visual tracking. This interaction type limits the user's ability to interact naturally by depending on one channel for commands and feedback, often leading to reduced efficiency and immersion. Understanding unimodal interaction is crucial for designing AR systems that balance simplicity and functionality before integrating more complex multimodal interfaces.

Key Differences: Multimodal vs. Unimodal Interaction

Multimodal interaction in augmented reality integrates multiple input methods such as voice, gesture, and gaze, enabling a more natural and intuitive user experience compared to unimodal interaction, which relies on a single input mode like touch or voice alone. Multimodal systems enhance accuracy and flexibility by interpreting combined signals, reducing errors common in unimodal interfaces that may struggle with ambiguous commands. The key difference lies in multimodal interaction's ability to fuse diverse sensory inputs for richer contextual understanding, while unimodal approaches often face limitations in adaptability and user engagement.
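One simple way to picture how combined signals can resolve an ambiguous command is confidence-weighted late fusion. In the sketch below, the `WEIGHTS` table, the `fuse_scores` function, and the 0.5 threshold are hypothetical values chosen for illustration; real systems would tune them per device and recognizer.

```python
from collections import defaultdict
from typing import Dict, Optional

# Assumed per-modality trust weights (illustrative, not measured).
WEIGHTS = {"voice": 0.5, "gesture": 0.3, "gaze": 0.2}


def fuse_scores(hypotheses: Dict[str, Dict[str, float]], threshold: float = 0.5) -> Optional[str]:
    """Combine per-modality confidences and return the best-supported intent.

    `hypotheses` maps modality -> {intent: confidence in [0, 1]}.
    Returns None when the normalized best score is below `threshold`.
    """
    combined = defaultdict(float)
    total_weight = 0.0
    for modality, scores in hypotheses.items():
        weight = WEIGHTS.get(modality, 0.0)
        total_weight += weight
        for intent, confidence in scores.items():
            combined[intent] += weight * confidence
    if not combined or total_weight == 0.0:
        return None
    best, score = max(combined.items(), key=lambda kv: kv[1])
    return best if score / total_weight >= threshold else None


# Voice alone slightly prefers "delete"; a clear pointing gesture tips it to "select".
voice_only = fuse_scores({"voice": {"select": 0.45, "delete": 0.55}})
voice_and_gesture = fuse_scores({
    "voice": {"select": 0.45, "delete": 0.55},
    "gesture": {"select": 0.9},
})
print(voice_only, voice_and_gesture)  # delete select
```

The example shows the unimodal reading ("delete") being overturned once a second modality contributes evidence, which is the error-reduction effect described above.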

Enhancing User Engagement through Multimodal Methods

Multimodal AR systems pair several input methods with visual, auditory, and haptic feedback, giving users a richer sensory experience than unimodal interaction, which relies on a single input method. Combining modalities supports more intuitive navigation, can improve task performance, and increases immersion, which in turn tends to raise user satisfaction. Studies of multimodal interfaces suggest they can reduce cognitive load and improve accuracy, making them well suited to advanced AR applications in gaming, education, and industrial training.

Limitations of Unimodal Interaction in AR Applications

Unimodal interaction in augmented reality (AR) applications often faces limitations such as reduced user engagement and decreased accuracy due to reliance on a single input mode like gesture or voice recognition. This interaction type can struggle with environmental variability, leading to recognition errors and hindered usability in dynamic or noisy settings. Multimodal interaction addresses these challenges by integrating multiple input methods, enhancing robustness and user experience through complementary data processing.
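The sketch below illustrates the idea of falling back to another modality when the environment makes one unreliable. The function name `usable_modalities`, its parameters, and the numeric thresholds are assumptions for this example only, and gaze is treated as an always-available fallback purely for simplicity.

```python
from typing import List


def usable_modalities(noise_db: float, lux: float, hands_available: bool) -> List[str]:
    """Return input modalities considered reliable, in priority order.

    Thresholds are illustrative assumptions, not measured values.
    """
    modalities = []
    if noise_db < 70.0:
        # Speech recognition is assumed to degrade in loud spaces.
        modalities.append("voice")
    if lux > 50.0 and hands_available:
        # Camera-based hand tracking is assumed to need light and free hands.
        modalities.append("gesture")
    # Gaze is used here as the fallback of last resort.
    modalities.append("gaze")
    return modalities


factory_floor = usable_modalities(noise_db=85.0, lux=300.0, hands_available=True)
dim_quiet_room = usable_modalities(noise_db=40.0, lux=10.0, hands_available=True)
print(factory_floor)   # ['gesture', 'gaze']
print(dim_quiet_room)  # ['voice', 'gaze']
```

A voice-only interface would have no usable input on the noisy factory floor in this scenario, whereas the multimodal variant still offers gesture and gaze.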

Impact on Accessibility: Multimodal vs Unimodal Approaches

Multimodal interaction in augmented reality significantly enhances accessibility by integrating voice, gesture, and tactile inputs, accommodating diverse user needs and abilities. Unimodal approaches, relying on a single input method, often limit user engagement and fail to address varying physical or cognitive challenges effectively. The multimodal framework fosters inclusive AR experiences, improving usability for individuals with disabilities and broadening overall device adaptability.

Device Compatibility and Sensor Integration in AR

Multimodal interaction in augmented reality leverages multiple input methods such as voice, gestures, and eye tracking, enhancing device compatibility across smartphones, headsets, and AR glasses by integrating diverse sensors like cameras, accelerometers, and microphones. Unimodal interaction relies on a single input type, limiting sensor integration and often reducing seamless device interoperability in AR environments. Advanced sensor fusion in multimodal systems optimizes real-time data processing, resulting in more intuitive and responsive AR experiences across various hardware platforms.
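To make the link between sensors and input methods concrete, the following sketch maps a device's sensor set to the modalities it could support. The `REQUIRED_SENSORS` table and the sensor names are hypothetical; actual requirements vary by platform and tracking technique.

```python
from typing import Dict, FrozenSet, List, Set

# Assumed sensor requirements per modality (illustrative only).
REQUIRED_SENSORS: Dict[str, FrozenSet[str]] = {
    "voice": frozenset({"microphone"}),
    "gesture": frozenset({"camera"}),
    "touch": frozenset({"touchscreen"}),
    "gaze": frozenset({"eye_tracker"}),
    "head_pose": frozenset({"accelerometer", "gyroscope"}),
}


def supported_modalities(device_sensors: Set[str]) -> List[str]:
    """List modalities whose required sensors are all present on the device."""
    return sorted(
        modality
        for modality, needed in REQUIRED_SENSORS.items()
        if needed <= device_sensors  # subset test
    )


smartphone = {"camera", "microphone", "touchscreen", "accelerometer", "gyroscope"}
headset = {"camera", "microphone", "eye_tracker", "accelerometer", "gyroscope"}
print(supported_modalities(smartphone))  # ['gesture', 'head_pose', 'touch', 'voice']
print(supported_modalities(headset))     # ['gaze', 'gesture', 'head_pose', 'voice']
```

An application built around a single modality such as touch would work on the smartphone but not on the headset in this example, while one that accepts any supported modality runs on both.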

Usability and Learning Curve: Multimodal vs Unimodal

Multimodal interaction in augmented reality leverages multiple input methods such as voice, gesture, and touch, enhancing usability by accommodating diverse user preferences and environmental contexts. Because users can start with whichever input feels most familiar, this approach can shorten the learning curve compared to unimodal interaction, which relies on a single input mode and often limits flexibility and increases user frustration. Research indicates that multimodal systems improve task efficiency and user satisfaction by providing more intuitive and natural control mechanisms in AR environments.

Real-World Case Studies: Multimodal Interaction Success Stories

Real-world deployments illustrate how combining several input methods with visual, auditory, and haptic feedback can enhance the user experience in augmented reality. Microsoft HoloLens, for example, has been used in medical training and industrial design. Companies like Boeing report a 25% reduction in assembly time with multimodal AR systems that combine gesture recognition and voice commands. Unimodal interaction, limited to a single input method, often results in slower task completion and less immersion than the multimodal frameworks used in these professional environments.

Future Trends in AR: The Shift Towards Multimodal Experiences

Future trends in augmented reality emphasize a significant shift from unimodal to multimodal interaction, integrating visual, auditory, and haptic inputs to create more immersive and intuitive experiences. Advanced AR systems leverage multimodal data fusion and artificial intelligence to enhance user engagement, improving accuracy and responsiveness in real-world applications such as healthcare, education, and retail. This transition enables seamless interaction with digital content, setting a new standard for user experience and accessibility in AR environments.

Multimodal Interaction vs Unimodal Interaction Infographic

techiny.com