Remote rendering processes graphical data on powerful cloud servers, delivering high-quality visuals to devices with limited hardware capabilities. Local rendering executes graphics directly on the device, offering lower latency and greater interactivity but requiring robust hardware. Choosing between remote and local rendering depends on factors like network stability, device performance, and application complexity in augmented reality experiences.

Table of Comparison

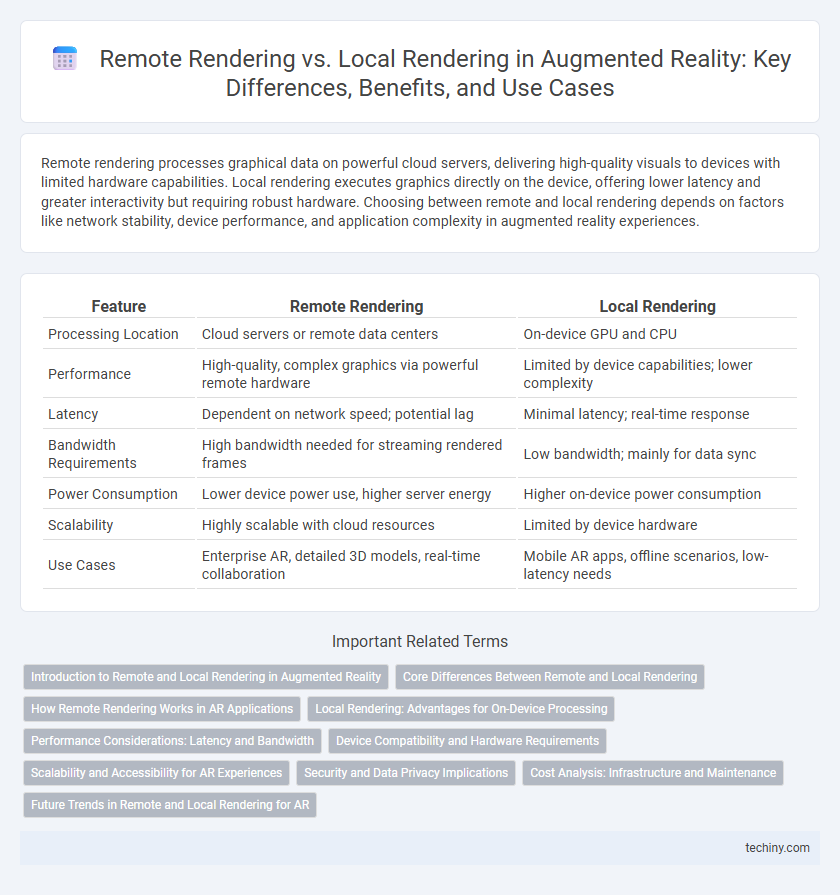

| Feature | Remote Rendering | Local Rendering |
|---|---|---|
| Processing Location | Cloud servers or remote data centers | On-device GPU and CPU |
| Performance | High-quality, complex graphics via powerful remote hardware | Limited by device capabilities; lower complexity |
| Latency | Dependent on network speed; potential lag | Minimal latency; real-time response |
| Bandwidth Requirements | High bandwidth needed for streaming rendered frames | Low bandwidth; mainly for data sync |
| Power Consumption | Lower device power use, higher server energy | Higher on-device power consumption |
| Scalability | Highly scalable with cloud resources | Limited by device hardware |
| Use Cases | Enterprise AR, detailed 3D models, real-time collaboration | Mobile AR apps, offline scenarios, low-latency needs |

Introduction to Remote and Local Rendering in Augmented Reality

Remote rendering in augmented reality involves processing complex 3D graphics on powerful cloud servers, delivering high-quality visuals to lightweight devices with limited graphics capabilities. Local rendering executes graphics computations directly on AR devices like smartphones or headsets, providing lower latency but often constrained by hardware performance. Understanding the balance between remote and local rendering is essential for optimizing user experience, device battery life, and visual fidelity in diverse AR applications.

Core Differences Between Remote and Local Rendering

Remote rendering processes AR visuals on powerful cloud servers, enabling high-quality graphics without taxing local device resources, which benefits mobile devices and lightweight AR headsets. Local rendering relies on the device's own hardware to generate graphics, offering lower latency and offline functionality, but it is limited by device performance and battery life. The core differences are processing location, latency impact, hardware dependency, and scalability of graphical fidelity.

How Remote Rendering Works in AR Applications

Remote rendering in AR applications offloads graphics processing to powerful cloud servers, which stream rendered frames back to the user's device in real time. The approach depends on low-latency networks to keep interaction responsive, and it reduces the computational load on local hardware, enabling more complex and visually rich augmented reality experiences. Because the device receives encoded video frames rather than raw scene data, it only needs to decode and display them, which lowers on-device compute and energy use on AR headsets and mobile devices.
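The round trip described above can be broken into stages to see where time goes in a streamed frame. The following is a minimal sketch, not a measurement: the stage names, the `RemoteFrameTimings` class, and every millisecond value are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class RemoteFrameTimings:
    """Per-frame stage durations in milliseconds (all values are illustrative)."""
    pose_uplink_ms: float      # device sends head/camera pose to the server
    server_render_ms: float    # server GPU renders the frame
    encode_ms: float           # server compresses the frame for streaming
    frame_downlink_ms: float   # encoded frame travels back over the network
    decode_ms: float           # device decodes the frame
    display_ms: float          # device composites and displays the frame

    def motion_to_photon_ms(self) -> float:
        """Sum of all stages: delay between a movement and the updated image."""
        return (self.pose_uplink_ms + self.server_render_ms + self.encode_ms
                + self.frame_downlink_ms + self.decode_ms + self.display_ms)


# Hypothetical numbers chosen only to make the arithmetic easy to follow.
timings = RemoteFrameTimings(
    pose_uplink_ms=10.0, server_render_ms=8.0, encode_ms=4.0,
    frame_downlink_ms=15.0, decode_ms=5.0, display_ms=8.0,
)
print(f"{timings.motion_to_photon_ms():.1f} ms")  # 50.0 ms
```

In this breakdown the two network stages account for half of the total, which is why the network matters more to a streamed frame than the server's render time does.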

Local Rendering: Advantages for On-Device Processing

Local rendering in augmented reality keeps processing on the device, which significantly reduces latency and improves real-time interaction and immersion. Executing graphics computations directly on the device's GPU decreases dependency on network bandwidth and mitigates connectivity issues. This approach also supports privacy by keeping sensitive data on the AR device, although the added GPU work draws more battery power than displaying a streamed frame.

Performance Considerations: Latency and Bandwidth

Remote rendering in augmented reality relies on cloud servers to process graphics, significantly reducing local device workload but adding latency from data transmission and requiring high bandwidth. Local rendering performs all computations on the device, minimizing latency and bandwidth use, but it is limited by the device's GPU power and thermal constraints. Balancing these approaches involves assessing network stability, the complexity of AR content, and user experience expectations to optimize performance.
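To make the bandwidth requirement concrete, a rough estimate multiplies resolution, frame rate, and the compressed bits spent per pixel. The sketch below assumes a hypothetical figure of 0.1 bits per pixel and a 50% headroom rule; both are placeholders rather than recommendations, and the function names are invented for illustration.

```python
def stream_bitrate_mbps(width: int, height: int, fps: int,
                        bits_per_pixel: float) -> float:
    """Approximate compressed video bitrate in megabits per second."""
    return width * height * fps * bits_per_pixel / 1_000_000


def fits_link(required_mbps: float, link_mbps: float,
              headroom: float = 0.5) -> bool:
    """True if the stream uses no more than `headroom` of the link capacity."""
    return required_mbps <= link_mbps * headroom


# Assumed stream: 1080p at 60 frames per second, 0.1 compressed bits per pixel.
required = stream_bitrate_mbps(1920, 1080, 60, bits_per_pixel=0.1)
print(round(required, 2))          # 12.44
print(fits_link(required, 50.0))   # True
print(fits_link(required, 20.0))   # False
```

Leaving headroom rather than filling the link reflects the point about network stability: a connection that only just carries the stream on average will drop frames whenever throughput dips.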

Device Compatibility and Hardware Requirements

Remote rendering leverages cloud-based servers to process complex graphics, enabling augmented reality experiences on devices with limited hardware capabilities, such as smartphones and lightweight AR glasses. Local rendering of complex scenes demands a powerful GPU and substantial processing power on the device itself, often restricting demanding applications to high-end AR headsets like Microsoft HoloLens or Magic Leap. Choosing remote rendering expands device compatibility by offloading intensive computations, reducing hardware requirements while largely preserving visual fidelity, provided the network can carry the stream.

Scalability and Accessibility for AR Experiences

Remote rendering in augmented reality leverages powerful cloud servers to deliver high-quality visuals without taxing local device resources, enabling scalable AR experiences across various hardware capabilities. Local rendering depends on the AR device's onboard GPU, which may limit performance and accessibility for users with less advanced hardware. Prioritizing remote rendering increases accessibility by accommodating a broader range of devices and supporting scalable, resource-intensive AR applications, and edge computing can help keep latency low by placing servers closer to users.

Security and Data Privacy Implications

Remote rendering in augmented reality can improve security by keeping sensitive 3D assets and visual data on secured cloud servers instead of storing them on local devices, which reduces exposure if a device is lost or compromised. It does, however, require sending pose and frame data over the network, so the connection itself must be protected. Local rendering keeps data processing on the user's device, minimizing exposure to external networks but potentially increasing vulnerability if the device lacks robust security measures. Balancing remote and local rendering strategies is crucial to safeguard AR experiences while managing data privacy risks effectively.

Cost Analysis: Infrastructure and Maintenance

Remote rendering reduces upfront hardware costs by leveraging cloud-based GPU resources, which reduce the need for expensive local devices in augmented reality applications. Maintenance expenses are lower because software updates and hardware upgrades are centralized and managed by the cloud provider, lowering IT overhead, although cloud usage typically brings recurring fees. Local rendering demands higher initial investment in powerful AR devices and continuous hardware upkeep, increasing overall operational costs for institutions deploying augmented reality solutions.
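One way to weigh the upfront device cost against ongoing cloud usage is a simple break-even calculation. This is a sketch under stated assumptions: the function name and both cost figures are hypothetical, and real pricing varies by provider, region, and workload.

```python
def break_even_hours(device_premium: float, cloud_rate_per_hour: float) -> float:
    """Hours of streamed use at which cloud fees equal the extra device cost."""
    if cloud_rate_per_hour <= 0:
        raise ValueError("cloud_rate_per_hour must be positive")
    return device_premium / cloud_rate_per_hour


# Hypothetical figures: a high-end headset costs 2000 more than a lightweight
# one, and a cloud GPU session costs 1.25 per hour per device.
hours = break_even_hours(device_premium=2000.0, cloud_rate_per_hour=1.25)
print(hours)  # 1600.0
```

Below the break-even point the streamed setup is cheaper per device under these assumptions; above it, the more capable local hardware has paid for itself. The comparison ignores maintenance and replacement costs, which an institution would need to add on both sides.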

Future Trends in Remote and Local Rendering for AR

Future trends in augmented reality emphasize the integration of cloud-based remote rendering with advanced edge computing to balance latency and visual fidelity. Emerging technologies such as 5G networks and AI-driven optimization support smoother streaming of high-resolution AR content, reducing the computational burden on local devices while enhancing user experience. Continued innovation in hardware acceleration and network infrastructure promises to narrow the performance gap between remote and local rendering, positioning hybrid models as a leading approach in AR applications.
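A hybrid model needs some rule for deciding which path renders a given scene. The sketch below shows one possible rule, assuming a hypothetical per-device triangle budget and a hypothetical round-trip-time threshold of 40 ms; neither value comes from a specific product, and a real system would likely weigh more signals.

```python
from enum import Enum


class RenderMode(Enum):
    LOCAL = "local"
    REMOTE = "remote"


def choose_render_mode(scene_triangles: int, device_triangle_budget: int,
                       rtt_ms: float, max_rtt_ms: float = 40.0) -> RenderMode:
    """Pick a rendering path for the current scene and network conditions."""
    if scene_triangles <= device_triangle_budget:
        return RenderMode.LOCAL    # device can handle it; avoid network latency
    if rtt_ms <= max_rtt_ms:
        return RenderMode.REMOTE   # too heavy locally, network is fast enough
    return RenderMode.LOCAL        # poor network: fall back; caller reduces detail


print(choose_render_mode(50_000, 100_000, rtt_ms=80.0))    # RenderMode.LOCAL
print(choose_render_mode(500_000, 100_000, rtt_ms=20.0))   # RenderMode.REMOTE
print(choose_render_mode(500_000, 100_000, rtt_ms=80.0))   # RenderMode.LOCAL
```

The rule prefers local rendering whenever the device can cope, since that path has the lowest latency, and treats remote rendering as the option for content that exceeds the device's budget.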

Remote Rendering vs Local Rendering Infographic

techiny.com