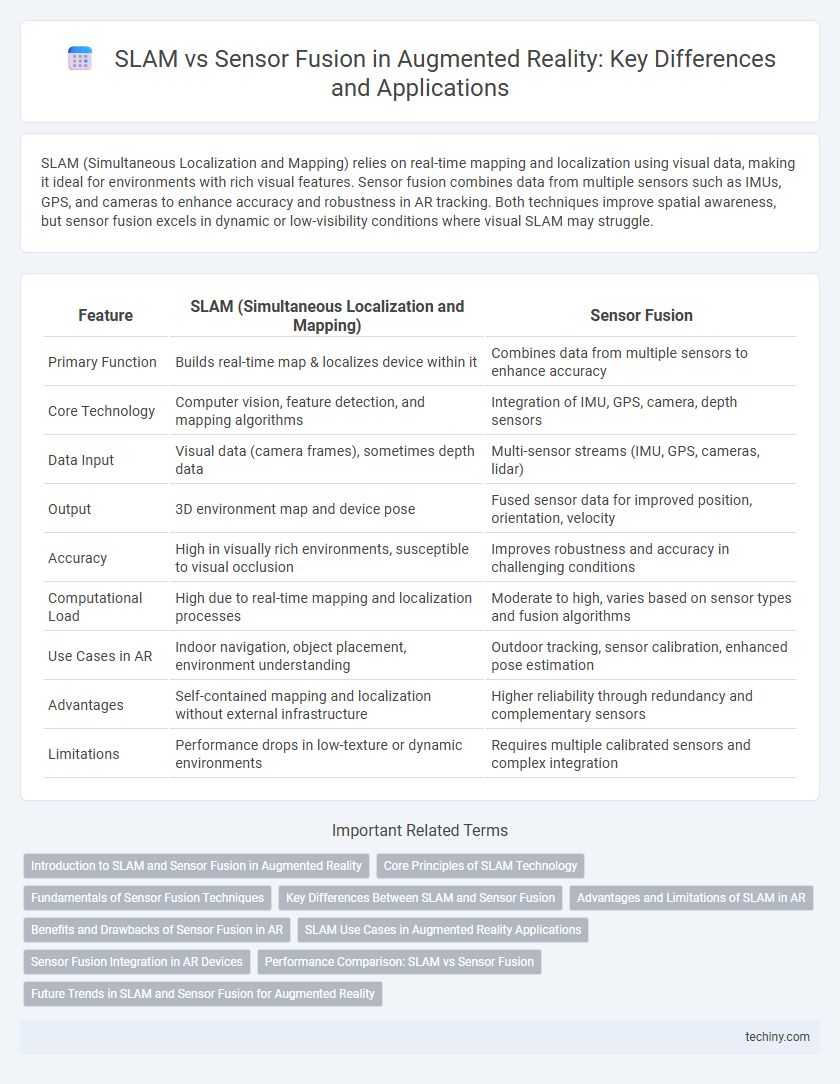

SLAM (Simultaneous Localization and Mapping) relies on real-time mapping and localization using visual data, making it ideal for environments with rich visual features. Sensor fusion combines data from multiple sensors such as IMUs, GPS, and cameras to enhance accuracy and robustness in AR tracking. Both techniques improve spatial awareness, but sensor fusion excels in dynamic or low-visibility conditions where visual SLAM may struggle.

Table of Comparison

| Feature | SLAM (Simultaneous Localization and Mapping) | Sensor Fusion |
|---|---|---|
| Primary Function | Builds real-time map & localizes device within it | Combines data from multiple sensors to enhance accuracy |
| Core Technology | Computer vision, feature detection, and mapping algorithms | Integration of IMU, GPS, camera, depth sensors |
| Data Input | Visual data (camera frames), sometimes depth data | Multi-sensor streams (IMU, GPS, cameras, lidar) |
| Output | 3D environment map and device pose | Fused sensor data for improved position, orientation, velocity |
| Accuracy | High in visually rich environments, susceptible to visual occlusion | Improves robustness and accuracy in challenging conditions |
| Computational Load | High due to real-time mapping and localization processes | Moderate to high, varies based on sensor types and fusion algorithms |
| Use Cases in AR | Indoor navigation, object placement, environment understanding | Outdoor tracking, sensor calibration, enhanced pose estimation |
| Advantages | Self-contained mapping and localization without external infrastructure | Higher reliability through redundancy and complementary sensors |
| Limitations | Performance drops in low-texture or dynamic environments | Requires multiple calibrated sensors and complex integration |

Introduction to SLAM and Sensor Fusion in Augmented Reality

Simultaneous Localization and Mapping (SLAM) enables Augmented Reality (AR) devices to create real-time 3D maps while tracking their position within the environment, critical for immersive and accurate AR experiences. Sensor fusion combines data from multiple sensors such as cameras, inertial measurement units (IMUs), and depth sensors to enhance environmental understanding and improve tracking robustness. Integrating SLAM with sensor fusion optimizes spatial awareness, enables precise motion tracking, and reduces drift in AR applications.

Core Principles of SLAM Technology

SLAM builds a real-time 3D map of the environment from sensor data while simultaneously tracking the device's position within that map. It processes camera frames, often alongside inertial measurements, to estimate movement and spatial relationships without external references. Sensor fusion integrates multiple data sources, but SLAM's core principle is an iterative loop in which each new observation refines both the map and the device's pose estimate.
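The loop of mapping and localizing together can be illustrated with a deliberately tiny sketch: a one-dimensional device and a single landmark, estimated jointly with a linear Kalman filter. This is not how any particular AR framework implements SLAM. The class `Slam1D`, its field names, and the noise values `q` and `r` are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Slam1D:
    """Toy 1D SLAM: jointly estimates device position and one landmark position."""
    x: float     # device position estimate (localization)
    lm: float    # landmark position estimate (the "map")
    pxx: float   # variance of the device position
    pxl: float   # covariance between device and landmark
    pll: float   # variance of the landmark position

    def predict(self, u: float, q: float) -> None:
        # Apply odometry u; motion noise q inflates the pose uncertainty.
        self.x += u
        self.pxx += q

    def update(self, z: float, r: float) -> None:
        # z is the measured offset (landmark - device) with noise variance r.
        innovation = z - (self.lm - self.x)
        s = self.pxx - 2.0 * self.pxl + self.pll + r   # innovation variance
        kx = (self.pxl - self.pxx) / s                 # gain for the device
        kl = (self.pll - self.pxl) / s                 # gain for the landmark
        self.x += kx * innovation
        self.lm += kl * innovation
        # Covariance update P <- P - K S K^T, written out for the 2x2 case.
        self.pxx -= kx * kx * s
        self.pxl -= kx * kl * s
        self.pll -= kl * kl * s


if __name__ == "__main__":
    # Device starts at a known position; the landmark position is unknown.
    slam = Slam1D(x=0.0, lm=0.0, pxx=0.0, pxl=0.0, pll=100.0)
    true_x, true_lm = 0.0, 5.0
    for _ in range(5):
        true_x += 1.0
        slam.predict(u=1.0, q=0.01)
        slam.update(z=true_lm - true_x, r=0.1)
    print(f"device={slam.x:.3f}, landmark={slam.lm:.3f}")
```

Each observation corrects both the device estimate and the landmark estimate, and the shared covariance term `pxl` is what ties the map and the pose together. Real systems track thousands of 3D features and a full 6-DoF pose, but the predict/update structure is similar in filter-based approaches.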

Fundamentals of Sensor Fusion Techniques

Sensor fusion techniques integrate data from multiple sensors such as cameras, inertial measurement units (IMUs), and LiDAR to improve the accuracy and robustness of augmented reality (AR) tracking. Unlike SLAM (Simultaneous Localization and Mapping), which primarily focuses on building and updating a map while localizing the device within it, sensor fusion combines diverse sensory inputs to provide a comprehensive understanding of the environment. Fundamental sensor fusion methods include Kalman filtering and particle filtering, which estimate device position and orientation by weighting each input according to its expected noise, reducing the uncertainty of the combined estimate.
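As a rough illustration of the Kalman filtering idea mentioned above, here is a minimal scalar filter tracking a single quantity, such as one position axis. The class name `Kalman1D` and the noise values are assumptions chosen for the sketch, and a production AR tracker would use a multi-dimensional (often extended or error-state) variant.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Scalar Kalman filter for one slowly varying quantity."""
    x: float   # state estimate
    p: float   # variance of the estimate
    q: float   # process noise variance (hypothetical tuning value)
    r: float   # measurement noise variance (hypothetical tuning value)

    def predict(self, u: float = 0.0) -> None:
        # Motion step: shift the estimate by input u and grow the uncertainty.
        self.x += u
        self.p += self.q

    def update(self, z: float) -> None:
        # Measurement step: the gain k weights the measurement by relative confidence.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= (1.0 - k)


if __name__ == "__main__":
    kf = Kalman1D(x=0.0, p=1.0, q=0.01, r=0.25)
    for z in [1.1, 0.9, 1.05, 0.98, 1.02]:   # noisy readings of a value near 1.0
        kf.predict()
        kf.update(z)
    print(f"estimate={kf.x:.3f}, variance={kf.p:.4f}")
```

The gain `k` moves toward 1 when the prediction is uncertain and toward 0 when the sensor is noisy, which is the sense in which the filter reduces uncertainty from sensor noise.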

Key Differences Between SLAM and Sensor Fusion

SLAM (Simultaneous Localization and Mapping) primarily builds real-time maps of unknown environments while tracking the device's location using visual or lidar data. Sensor fusion integrates multiple sensor inputs, such as IMU, GPS, and cameras, to improve accuracy and robustness in position estimation for AR applications. The key difference lies in SLAM's emphasis on dynamic mapping and localization versus sensor fusion's focus on combining diverse sensors to enhance spatial understanding.

Advantages and Limitations of SLAM in AR

Simultaneous Localization and Mapping (SLAM) in augmented reality enables real-time environment mapping and precise tracking using camera input, offering high spatial accuracy without relying on external infrastructure. Its limitations include sensitivity to dynamic environments and high computational demands, which can affect performance and battery life on mobile devices. Unlike sensor fusion, which combines multiple data sources like IMU and GPS for robust positioning, visual SLAM depends heavily on image features that degrade in low-light or featureless settings.

Benefits and Drawbacks of Sensor Fusion in AR

Sensor fusion in augmented reality (AR) combines data from multiple sensors, such as cameras, gyroscopes, and accelerometers, to enhance tracking accuracy and environmental understanding beyond what visual SLAM alone can achieve. This approach reduces drift and improves robustness in dynamic or feature-poor environments, but the fusion pipeline adds computational cost and can introduce latency. While sensor fusion offers greater resilience to occlusions, its complexity and reliance on calibrated sensors present challenges for seamless AR experiences.
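One simple way to see how fusing a gyroscope with an accelerometer reduces drift is a complementary filter. The sketch below is illustrative only: the function name, the single-axis setup, and the blend factor `alpha=0.98` are assumptions, not values taken from any AR SDK.

```python
import math


def complementary_filter(angle, gyro_rate, accel_y, accel_z, dt, alpha=0.98):
    """Return an updated tilt angle (radians) about one axis."""
    # Short term: integrate the gyroscope rate (smooth, but drifts with bias).
    gyro_angle = angle + gyro_rate * dt
    # Long term: tilt implied by gravity on the accelerometer (noisy, but drift-free).
    accel_angle = math.atan2(accel_y, accel_z)
    # Blend: trust the gyroscope for fast changes, the accelerometer for the baseline.
    return alpha * gyro_angle + (1.0 - alpha) * accel_angle


if __name__ == "__main__":
    dt = 0.01      # 100 Hz sample period
    bias = 0.01    # constant gyroscope bias in rad/s; the device is actually level and still
    fused = integrated = 0.0
    for _ in range(1000):
        integrated += bias * dt   # gyroscope-only estimate keeps drifting
        fused = complementary_filter(fused, bias, 0.0, 9.81, dt)
    print(f"gyro only: {integrated:.4f} rad, fused: {fused:.4f} rad")
```

With a biased gyroscope and a stationary device, the gyroscope-only angle grows steadily while the fused angle settles near zero, because the accelerometer keeps pulling the estimate back toward the gravity reference.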

SLAM Use Cases in Augmented Reality Applications

Simultaneous Localization and Mapping (SLAM) enables augmented reality (AR) devices to create real-time, accurate 3D maps of unknown environments, enhancing spatial awareness for applications like indoor navigation, gaming, and industrial maintenance. SLAM's ability to track a user's position and orientation from the device's own observations of its surroundings distinguishes it from sensor fusion techniques, which integrate data from multiple sensors such as GPS, IMUs, and cameras. Key AR use cases leveraging SLAM include immersive training simulations, augmented retail experiences, and interactive architectural visualization, where precise environmental mapping is critical.

Sensor Fusion Integration in AR Devices

Sensor fusion integration in AR devices enhances spatial awareness by combining data from multiple sensors such as cameras, gyroscopes, accelerometers, and LiDAR. This multi-sensor approach improves tracking accuracy and robustness in diverse environments, outperforming standalone SLAM systems that rely primarily on visual inputs. By merging sensor information in real-time, AR devices achieve more stable and precise localization, essential for immersive and responsive augmented experiences.
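To make the idea of merging sensor information concrete, the following sketch fuses independent position estimates by inverse-variance weighting, so that more precise sensors count for more. The function name `fuse_estimates` and the example numbers are hypothetical, and the method assumes the sensor errors are independent and roughly Gaussian.

```python
def fuse_estimates(estimates):
    """Fuse (value, variance) pairs from independent sensors.

    Returns the inverse-variance weighted mean and the variance of that mean.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


if __name__ == "__main__":
    # Hypothetical readings along one axis, in metres: (estimate, variance).
    gps = (10.0, 4.0)      # coarse but unbiased
    visual = (12.0, 1.0)   # more precise when features are visible
    position, variance = fuse_estimates([gps, visual])
    print(f"fused position={position:.2f} m, variance={variance:.2f}")
```

The fused variance is always smaller than the smallest input variance, which is one way to read the claim that combining sensors yields more stable and precise localization than any single input.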

Performance Comparison: SLAM vs Sensor Fusion

Simultaneous Localization and Mapping (SLAM) excels at creating real-time, accurate 3D maps by leveraging visual and inertial data, providing precise localization in visually rich, mostly static environments. Sensor fusion combines data from multiple sensors such as IMUs, GPS, and cameras to enhance tracking accuracy and reliability, especially in challenging conditions where one sensor alone may fail. In comparison, SLAM performs better at detailed mapping and visual accuracy, while sensor fusion offers superior robustness against sensor noise and occlusion in augmented reality applications.

Future Trends in SLAM and Sensor Fusion for Augmented Reality

Future trends in SLAM for augmented reality emphasize enhanced real-time 3D mapping accuracy using deep learning algorithms and edge computing to reduce latency. Sensor fusion advancements integrate data from LiDAR, IMUs, and cameras, improving environmental understanding and robustness in diverse conditions. Emerging AR devices will leverage hybrid SLAM and sensor fusion techniques to enable seamless interaction and precise localization in complex, dynamic environments.

techiny.com
