In Big Data applications, achieving a balance between consistency and availability is critical due to the CAP theorem, which states that a distributed system can only guarantee two of the three properties: Consistency, Availability, and Partition Tolerance. Prioritizing consistency ensures that all nodes reflect the same data simultaneously, essential for accuracy in transactional systems, while prioritizing availability guarantees system responsiveness even during network partitions, crucial for real-time analytics and petabyte-scale data processing. Understanding these trade-offs drives the design of scalable Big Data architectures that either emphasize strict data accuracy or uninterrupted data access based on specific application requirements.

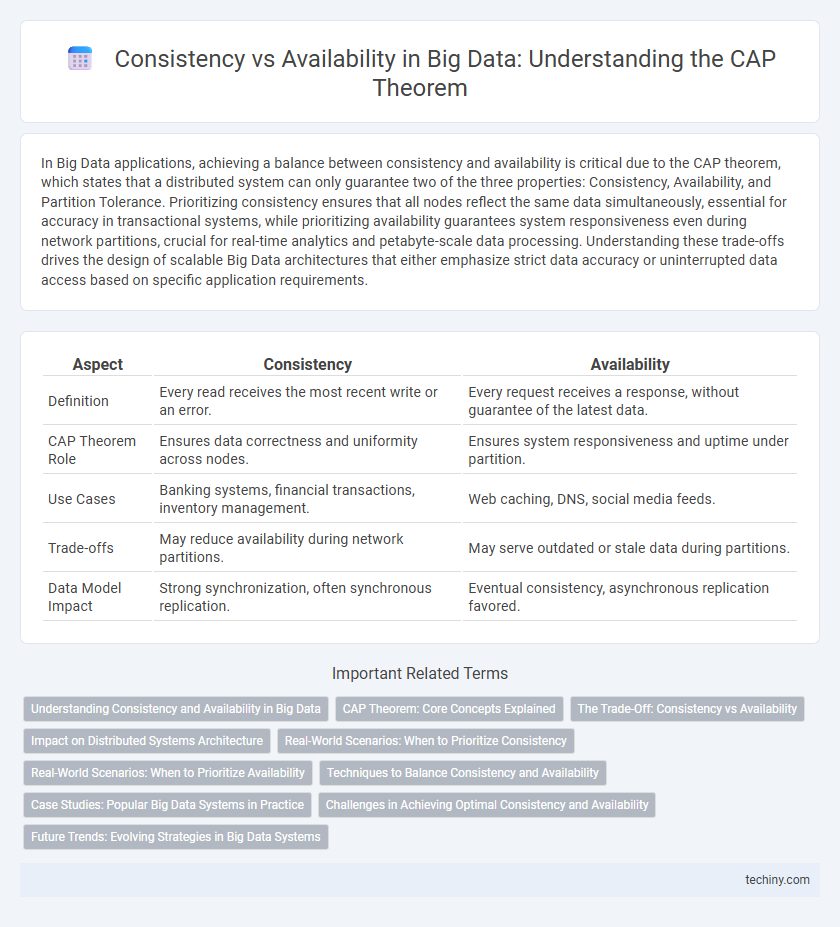

Table of Comparison

| Aspect | Consistency | Availability |

|---|---|---|

| Definition | Every read receives the most recent write or an error. | Every request receives a response, without guarantee of the latest data. |

| CAP Theorem Role | Ensures data correctness and uniformity across nodes. | Ensures system responsiveness and uptime under partition. |

| Use Cases | Banking systems, financial transactions, inventory management. | Web caching, DNS, social media feeds. |

| Trade-offs | May reduce availability during network partitions. | May serve outdated or stale data during partitions. |

| Data Model Impact | Strong synchronization, often synchronous replication. | Eventual consistency, asynchronous replication favored. |

Understanding Consistency and Availability in Big Data

Consistency in Big Data ensures that every read receives the most recent write or an error, maintaining data accuracy across distributed systems. Availability guarantees that every request receives a response, regardless of data synchronization status, prioritizing system uptime and accessibility. Balancing consistency and availability in Big Data architectures involves trade-offs critical to application requirements and system design.

CAP Theorem: Core Concepts Explained

CAP Theorem defines three critical system properties: Consistency, Availability, and Partition Tolerance, highlighting the trade-offs in distributed databases. Consistency ensures every read receives the most recent write, while Availability guarantees every request receives a response, regardless of partition. In real-world big data systems, designers must prioritize either Consistency or Availability during network partitions, as achieving both simultaneously is impossible under CAP constraints.

The Trade-Off: Consistency vs Availability

The CAP theorem delineates a fundamental trade-off between consistency and availability in distributed Big Data systems, where ensuring strong consistency often reduces system availability during network partitions. High consistency guarantees that all nodes reflect the same data state, critical for transaction accuracy, while maximizing availability allows continuous access despite potential data staleness. Designing Big Data architectures requires balancing these aspects based on application requirements, such as banking systems prioritizing consistency and social media services favoring availability.

Impact on Distributed Systems Architecture

Consistency ensures all nodes in a distributed system reflect the same data simultaneously, which can introduce latency due to synchronization overhead. Availability prioritizes system responsiveness, allowing nodes to process requests independently even if some data replicas are temporarily inconsistent. Balancing consistency and availability directly influences system design choices, impacting fault tolerance, data integrity, and user experience in large-scale distributed databases.

Real-World Scenarios: When to Prioritize Consistency

In financial services and healthcare, prioritizing consistency ensures data accuracy and integrity, critical for transactions, patient records, and compliance requirements. E-commerce platforms processing orders benefit from consistent inventory and payment systems to avoid overselling and maintain customer trust. Real-time analytics in fraud detection demand strong consistency to prevent errors and enable timely decision-making based on accurate data.

Real-World Scenarios: When to Prioritize Availability

In Big Data systems, prioritizing availability over consistency is crucial in real-world scenarios like online retail platforms and social media networks, where uninterrupted user access outweighs immediate data synchronization. Systems designed for high availability tolerate temporary inconsistencies to ensure continuous operation during network partitions or failures. This approach enables real-time user engagement and transactional resilience, essential for maintaining user experience and service reliability under heavy load conditions.

Techniques to Balance Consistency and Availability

Techniques to balance consistency and availability in Big Data systems include quorum-based replication, where read and write operations require a majority of nodes to agree, ensuring stronger consistency while maintaining availability. Eventual consistency models leverage asynchronous updates to allow high availability but provide mechanisms like version vectors and conflict resolution protocols to reconcile data discrepancies. Hybrid approaches such as tunable consistency settings enable dynamic adjustment between consistency and availability based on application requirements and network conditions.

Case Studies: Popular Big Data Systems in Practice

Big data systems like Apache Cassandra prioritize availability over strict consistency by using eventual consistency models to ensure uninterrupted access during network partitions. HBase opts for strong consistency, sacrificing availability to maintain accurate data reads in distributed environments. Amazon DynamoDB employs tunable consistency settings, allowing developers to balance consistency and availability based on application requirements and latency tolerance.

Challenges in Achieving Optimal Consistency and Availability

Achieving optimal consistency and availability in Big Data systems involves navigating the inherent trade-offs outlined by the CAP theorem, where network partitions force a choice between maintaining data accuracy and uptime. Challenges include managing latency in distributed databases and handling conflicting updates without sacrificing performance or user experience. Ensuring strong consistency can lead to reduced availability during network failures, while prioritizing availability may increase the risk of data anomalies, complicating system design and operational strategies.

Future Trends: Evolving Strategies in Big Data Systems

Future trends in Big Data systems emphasize dynamic balancing of consistency and availability through adaptive algorithms and machine learning techniques. Emerging architectures leverage edge computing and hybrid cloud environments to optimize data consistency without compromising availability. Advanced consensus protocols and real-time analytics enable scalable solutions that meet the evolving demands of distributed Big Data applications.

Consistency vs Availability (in CAP theorem) Infographic

techiny.com

techiny.com