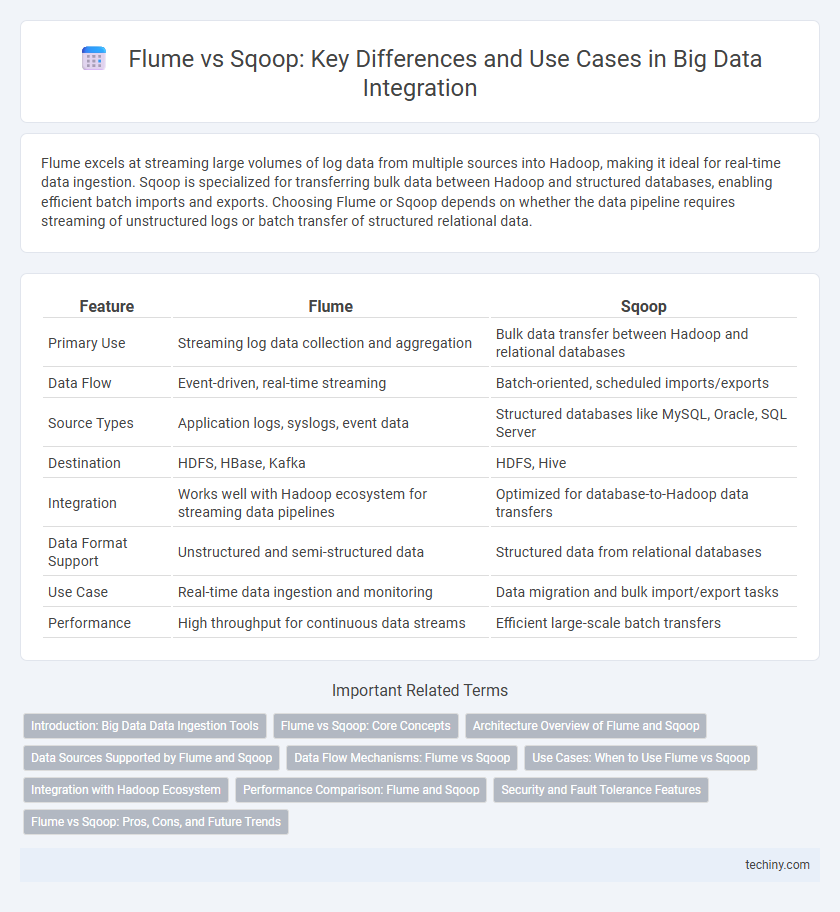

Flume excels at streaming large volumes of log data from multiple sources into Hadoop, making it ideal for real-time data ingestion. Sqoop is specialized for transferring bulk data between Hadoop and structured databases, enabling efficient batch imports and exports. Choosing Flume or Sqoop depends on whether the data pipeline requires streaming of unstructured logs or batch transfer of structured relational data.

Table of Comparison

| Feature | Flume | Sqoop |

|---|---|---|

| Primary Use | Streaming log data collection and aggregation | Bulk data transfer between Hadoop and relational databases |

| Data Flow | Event-driven, real-time streaming | Batch-oriented, scheduled imports/exports |

| Source Types | Application logs, syslogs, event data | Structured databases like MySQL, Oracle, SQL Server |

| Destination | HDFS, HBase, Kafka | HDFS, Hive |

| Integration | Works well with Hadoop ecosystem for streaming data pipelines | Optimized for database-to-Hadoop data transfers |

| Data Format Support | Unstructured and semi-structured data | Structured data from relational databases |

| Use Case | Real-time data ingestion and monitoring | Data migration and bulk import/export tasks |

| Performance | High throughput for continuous data streams | Efficient large-scale batch transfers |

Introduction: Big Data Data Ingestion Tools

Flume and Sqoop are key Big Data ingestion tools designed for handling large-scale data transfers. Flume specializes in streaming data collection from various sources such as log files to Hadoop Distributed File System (HDFS), enabling real-time analytics. Sqoop excels in efficiently importing bulk structured data from relational databases like MySQL and Oracle into Hadoop ecosystems for batch processing.

Flume vs Sqoop: Core Concepts

Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of streaming log data into Hadoop's HDFS, designed for high-throughput ingestion from numerous sources. Sqoop specializes in transferring bulk data between structured databases like MySQL or Oracle and Hadoop's HDFS, focusing on batch processing and data import/export. Flume operates on event-driven data pipelines ideal for real-time log data, while Sqoop handles relational database integration through map-reduce jobs for data movement.

Architecture Overview of Flume and Sqoop

Flume architecture is based on a simple, flexible, and robust design consisting of sources, channels, and sinks that enable efficient streaming of large volumes of log data from multiple sources to centralized storage such as HDFS. Sqoop architecture revolves around a command-line interface that automates the transfer of bulk data between Hadoop and structured data stores like relational databases using connectors and mappers to optimize data import and export. While Flume excels in real-time data ingestion with a distributed event-driven model, Sqoop is specifically designed for batch-oriented data transfer, leveraging MapReduce jobs to ensure reliable and scalable movement of structured data.

Data Sources Supported by Flume and Sqoop

Flume excels in collecting and aggregating streaming data from diverse sources such as application logs, network traffic, and social media feeds, emphasizing real-time ingestion and event-driven data flows. Sqoop specializes in transferring structured data between relational databases like MySQL, Oracle, and SQL Server and Hadoop ecosystems, enabling efficient batch import and export of tabular data. Both tools address distinct data source types, with Flume optimized for unstructured, high-velocity data streams and Sqoop designed for large-scale, batch-oriented relational database integration.

Data Flow Mechanisms: Flume vs Sqoop

Flume excels in ingesting large volumes of streaming log data from various sources and transporting it efficiently to HDFS through a flexible, agent-based architecture. Sqoop specializes in bulk transfer of structured data between relational databases and Hadoop ecosystems using optimized parallel import and export processes. While Flume supports real-time, continuous data flow for unstructured or semi-structured data, Sqoop is designed for batch-oriented, high-throughput import/export of structured data.

Use Cases: When to Use Flume vs Sqoop

Flume is ideal for collecting and aggregating large volumes of streaming log data from multiple sources into Hadoop for real-time analytics. Sqoop excels at efficiently transferring bulk data between Hadoop and structured data stores like relational databases for batch processing. Use Flume for continuous event ingestion scenarios and Sqoop for periodic, high-throughput ETL tasks involving structured data.

Integration with Hadoop Ecosystem

Flume seamlessly integrates with the Hadoop ecosystem by efficiently ingesting large volumes of streaming data into HDFS and HBase, supporting real-time log and event data collection. Sqoop specializes in transferring bulk data between relational databases and Hadoop, enabling fast data import/export with Hive and HDFS for batch processing workflows. Both tools enhance data pipeline workflows but serve distinct roles: Flume for continuous data streaming and Sqoop for structured data migration.

Performance Comparison: Flume and Sqoop

Flume excels in streaming large volumes of log data with low latency, optimizing real-time data ingestion into Hadoop ecosystems. Sqoop outperforms in bulk data transfer, efficiently importing and exporting massive structured datasets between Hadoop and relational databases. Performance of Flume is best for event-driven data flows, while Sqoop provides superior throughput for batch-oriented ETL operations.

Security and Fault Tolerance Features

Flume offers robust fault tolerance through reliable data delivery with automatic failover and data recovery mechanisms, ensuring continuous event ingestion even under node failures. Sqoop emphasizes security by integrating with Kerberos authentication and supports secure data transfer between Hadoop and relational databases, preserving data integrity. Both tools incorporate encryption and audit logging to safeguard data pipelines, but Flume is optimized for real-time streaming resilience while Sqoop focuses on secure batch data imports.

Flume vs Sqoop: Pros, Cons, and Future Trends

Flume excels in ingesting streaming data with high throughput and reliability, making it ideal for real-time log collection, while Sqoop specializes in efficient batch transfer of bulk data between Hadoop and relational databases. Flume's scalability and flexibility come at the cost of complex configuration, whereas Sqoop offers simplicity but limited support for streaming and diverse data sources. Future trends indicate enhanced integration of Flume with real-time analytics platforms and improved Sqoop capabilities for handling big data migrations with support for cloud-native databases.

Flume vs Sqoop Infographic

techiny.com

techiny.com