Load balancers distribute incoming network traffic across multiple servers to keep applications available and prevent any single server from being overloaded. API gateways provide a single entry point for managing, authenticating, and routing API requests, with features such as rate limiting, logging, and protocol translation. Load balancers work at the transport layer (Layer 4) and, in many products, also at the application layer (Layer 7), whereas API gateways operate at the application layer, where they can apply API-specific management and security policies.

Table of Comparison

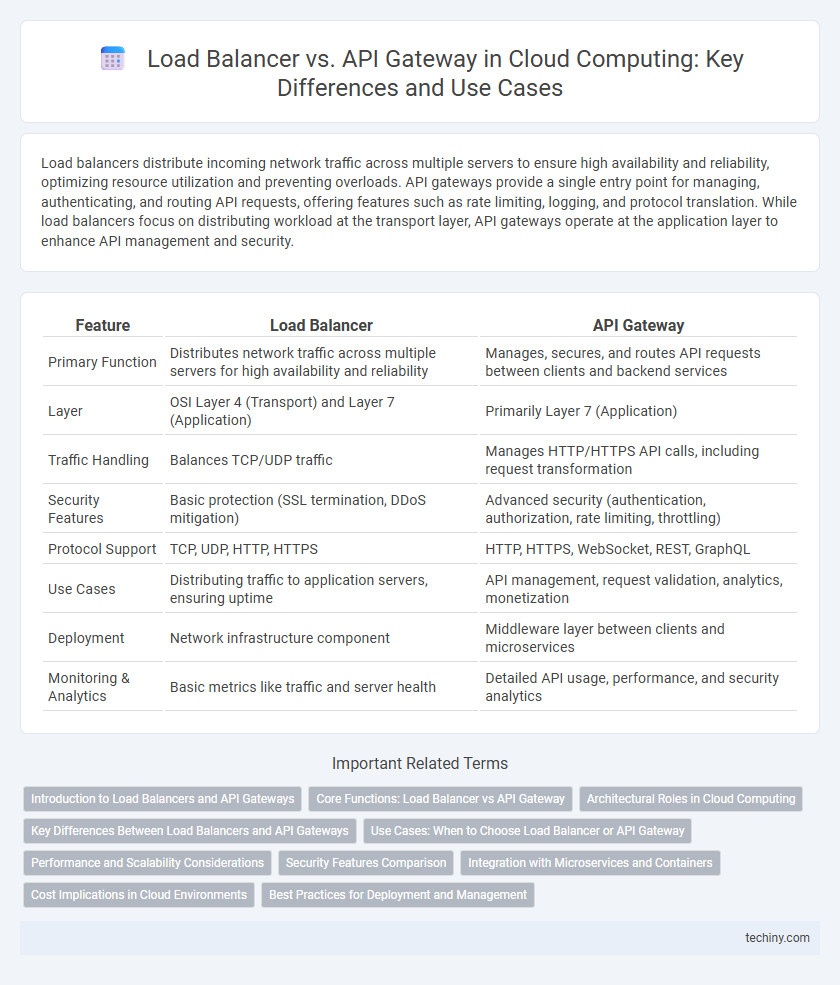

| Feature | Load Balancer | API Gateway |
|---|---|---|
| Primary Function | Distributes network traffic across multiple servers for high availability and reliability | Manages, secures, and routes API requests between clients and backend services |
| Layer | OSI Layer 4 (Transport) and Layer 7 (Application) | Primarily Layer 7 (Application) |
| Traffic Handling | Balances TCP/UDP traffic | Manages HTTP/HTTPS API calls, including request transformation |
| Security Features | Basic protection (SSL termination, DDoS mitigation) | Advanced security (authentication, authorization, rate limiting, throttling) |
| Protocol Support | TCP, UDP, HTTP, HTTPS | HTTP, HTTPS, WebSocket, REST, GraphQL |
| Use Cases | Distributing traffic to application servers, ensuring uptime | API management, request validation, analytics, monetization |
| Deployment | Network infrastructure component | Middleware layer between clients and microservices |
| Monitoring & Analytics | Basic metrics like traffic and server health | Detailed API usage, performance, and security analytics |

Introduction to Load Balancers and API Gateways

Load balancers distribute incoming network traffic across multiple servers, enhancing application availability and reliability by preventing any single server from becoming a bottleneck. API gateways act as intermediaries that manage, secure, and route API requests, providing features like authentication, rate limiting, and analytics. Both play crucial roles in cloud computing architectures by optimizing resource utilization and ensuring seamless communication between clients and backend services.

Core Functions: Load Balancer vs API Gateway

Load balancers distribute incoming network traffic across multiple servers so that no single server is overloaded, which improves availability and resource use in cloud computing environments. API gateways act as the entry point for API calls and handle request routing, authentication, rate limiting, and protocol translation. Load balancers distribute traffic at the transport layer (Layer 4) or, for HTTP-aware balancing, at the application layer (Layer 7); API gateways operate at the application layer and take on API management tasks that a load balancer does not.
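To make the traffic-distribution idea concrete, here is a minimal sketch of round-robin selection, one common way a load balancer can spread requests across servers. The class name, method name, and backend addresses are illustrative assumptions, not taken from any particular product.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backend addresses in a fixed rotating order (hypothetical helper)."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._rotation = cycle(self._backends)

    def next_backend(self):
        # Each call returns the next server in the rotation, wrapping around.
        return next(self._rotation)


# Placeholder addresses, assumed for illustration only.
balancer = RoundRobinBalancer(["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"])
assignments = [balancer.next_backend() for _ in range(6)]
# Six requests land on the three servers twice each, in order.
```

Round robin assumes the servers are roughly interchangeable; real load balancers usually offer other strategies as well, such as weighting or least-connections.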

Architectural Roles in Cloud Computing

Load balancers distribute incoming network traffic evenly across multiple servers to enhance application availability and fault tolerance in cloud environments. API gateways act as a single entry point that manages and secures API calls, handling tasks like request routing, authentication, and rate limiting. While load balancers primarily optimize traffic flow at the network level, API gateways provide a higher-level interface for microservices communication and API management.
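The "single entry point" role can be sketched as a lookup from request path to backend service. The example below is a simplified illustration that assumes longest-prefix matching; the route table and service names are hypothetical.

```python
class ApiGateway:
    """Maps request paths to backend service names (illustrative sketch only)."""

    def __init__(self, routes):
        # Sort prefixes longest-first so the most specific route wins.
        self._routes = sorted(routes.items(), key=lambda item: len(item[0]), reverse=True)

    def resolve(self, path):
        for prefix, service in self._routes:
            # Match the prefix exactly or as a complete leading path segment.
            if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
                return service
        return None  # No route configured for this path.


# Hypothetical route table for three microservices.
gateway = ApiGateway({
    "/orders": "order-service",
    "/users": "user-service",
    "/users/admin": "admin-service",
})

resolved = [
    gateway.resolve("/orders/42"),
    gateway.resolve("/users/7"),
    gateway.resolve("/users/admin/roles"),
    gateway.resolve("/payments"),
]
```

Clients only ever see the gateway's address; which service answers is decided by the route table, so services can be moved or split without changing client code.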

Key Differences Between Load Balancers and API Gateways

Load balancers distribute incoming network traffic across multiple servers to optimize resource use, improve response times, and ensure high availability. API gateways act as a unified entry point for API calls, providing request routing, composition, protocol translation, and security features such as authentication and rate limiting. The main difference is scope: a load balancer decides which server receives a connection or request, typically at the transport layer and sometimes at the application layer, while an API gateway works at the application layer and applies per-API policies to each request.

Use Cases: When to Choose Load Balancer or API Gateway

Load balancers distribute network traffic across multiple servers, which makes them a good fit for improving application availability and scaling traditional web services or databases. API gateways manage, secure, and orchestrate API calls, with features like request routing, rate limiting, and authentication, which suits microservices and API-driven architectures. Choose a load balancer when the priority is high availability and throughput across a pool of servers; choose an API gateway when you need fine-grained API management and security at the application layer.

Performance and Scalability Considerations

Load balancers efficiently distribute network traffic across multiple servers, enhancing application performance by preventing overload and ensuring high availability under heavy loads. API gateways manage and route API calls with added features like authentication, rate limiting, and caching, which can introduce processing overhead but provide granular control over scalability. For high-performance and scalable cloud architectures, combining load balancers for traffic distribution with API gateways for API management optimizes both response time and system resilience.
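As an illustration of how gateway-side caching can offset processing overhead, the sketch below caches backend responses for a fixed time-to-live. It is a simplified assumption of how such a cache might work; `TtlCache`, `fetch_product`, and the injected fake clock are hypothetical names used only to keep the example deterministic.

```python
import time


class TtlCache:
    """Caches values per key for a fixed number of seconds (illustrative sketch)."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (stored_at, value)

    def get_or_fetch(self, key, fetch):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # Fresh entry: skip the backend call.
        value = fetch()  # Missing or expired: call the backend and store the result.
        self._entries[key] = (now, value)
        return value


# A fake clock stands in for real time so the behaviour is reproducible.
fake_now = [0.0]
calls = []


def fetch_product():
    calls.append("backend hit")
    return {"id": 42, "name": "widget"}


cache = TtlCache(ttl_seconds=30, clock=lambda: fake_now[0])
cache.get_or_fetch("/products/42", fetch_product)  # miss: calls the backend
cache.get_or_fetch("/products/42", fetch_product)  # hit: served from cache
fake_now[0] = 31.0
cache.get_or_fetch("/products/42", fetch_product)  # expired: calls the backend again
backend_calls = len(calls)
```

Three client requests result in two backend calls here; with a longer TTL or more repeated requests, the share of traffic absorbed by the gateway grows, at the cost of serving slightly stale data.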

Security Features Comparison

Load balancers contribute to security mainly by spreading incoming traffic so that spikes, including some DDoS attacks, are less likely to overwhelm a single server, and by handling SSL termination for encrypted connections. API gateways offer more advanced security features, including authentication, authorization, rate limiting, and payload inspection, to protect APIs from threats like injection attacks and unauthorized access. Both play a role, but API gateways provide more granular control over API security policies and user management.
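Authentication and rate limiting at a gateway can be sketched together. The example below assumes API-key authentication and a token-bucket limiter, which is one common rate-limiting technique but not necessarily what any given gateway uses; all names, keys, and limits are hypothetical.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity`, refilled at a steady rate (illustrative sketch)."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self):
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Add tokens earned since the last call, never exceeding capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.refill_per_second)
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False


def check_request(api_key, valid_keys, buckets):
    """Return an HTTP-style status: 401 unknown key, 429 over the limit, 200 allowed."""
    if api_key not in valid_keys:
        return 401
    if not buckets[api_key].allow():
        return 429
    return 200


# A fake clock keeps the demonstration deterministic.
fake_now = [0.0]
valid_keys = {"key-a"}
buckets = {"key-a": TokenBucket(capacity=2, refill_per_second=1, clock=lambda: fake_now[0])}

statuses = [
    check_request("unknown", valid_keys, buckets),  # rejected: key not recognised
    check_request("key-a", valid_keys, buckets),    # allowed
    check_request("key-a", valid_keys, buckets),    # allowed, bucket now empty
    check_request("key-a", valid_keys, buckets),    # throttled
]
fake_now[0] = 1.0  # One second later, one token has been refilled.
statuses.append(check_request("key-a", valid_keys, buckets))
```

Checking the key before touching the bucket means unauthenticated callers cannot consume another client's quota.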

Integration with Microservices and Containers

Load balancers distribute network traffic across microservices and container instances to ensure high availability and scalability, managing TCP/UDP connections at the transport layer or HTTP/HTTPS requests at the application layer. API gateways provide a unified entry point with features such as request routing, protocol translation, authentication, and rate limiting, and are well suited to managing RESTful microservices in containerized environments. Load balancers integrate with orchestrators such as Kubernetes or Docker Swarm to spread traffic across container instances, while API gateways like Kong or AWS API Gateway handle communication between clients and microservices and enforce API security policies.

Cost Implications in Cloud Environments

Load balancers distribute incoming network traffic across multiple servers and are often billed by the number of processed connections and the volume of data transferred, which makes them cost-efficient for high-volume applications with predictable traffic patterns. API gateways add value by managing authentication, rate limiting, and request routing, but typically cost more because of the added functionality and per-call charges. Choosing between the two means balancing operational expenses against the complexity of the application's requirements and traffic management needs.
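A rough cost comparison can be sketched as simple arithmetic over the billing dimensions mentioned above. Every rate in this example is a made-up placeholder, not a real price from any cloud provider, and the function names are hypothetical; actual pricing models vary and should be taken from the provider's price list.

```python
import math


def load_balancer_cost(connections, gb_transferred, rate_per_million_connections, rate_per_gb):
    """Estimate cost from processed connections plus data transfer."""
    connection_cost = connections / 1_000_000 * rate_per_million_connections
    transfer_cost = gb_transferred * rate_per_gb
    return connection_cost + transfer_cost


def api_gateway_cost(api_calls, rate_per_million_calls):
    """Estimate cost from per-call charges only."""
    return api_calls / 1_000_000 * rate_per_million_calls


# Placeholder monthly traffic and rates, assumed purely for illustration.
lb_monthly = load_balancer_cost(
    connections=10_000_000,
    gb_transferred=500,
    rate_per_million_connections=0.5,
    rate_per_gb=0.01,
)
gw_monthly = api_gateway_cost(api_calls=10_000_000, rate_per_million_calls=3.5)
gateway_is_pricier = gw_monthly > lb_monthly
```

With these placeholder numbers the gateway comes out more expensive for the same request volume, which matches the general pattern described above; substituting real rates and traffic figures may change the outcome.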

Best Practices for Deployment and Management

Load balancers optimize cloud infrastructure by distributing incoming traffic across multiple servers to enhance availability and reliability, making them essential for handling high-volume applications. API gateways manage, secure, and monitor API traffic, offering features like authentication, rate limiting, and request routing to ensure efficient API lifecycle management. Best practices include deploying load balancers close to application servers for latency reduction, using API gateways to enforce security policies, and regularly monitoring performance metrics to dynamically adjust configurations for scalability and fault tolerance.
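One way to act on monitoring data is to take unhealthy servers out of rotation automatically. The sketch below assumes some external health check reports each server's status; the `HealthAwarePool` class and its methods are hypothetical names for illustration.

```python
class HealthAwarePool:
    """Round-robin pool that skips backends currently marked unhealthy (sketch)."""

    def __init__(self, backends):
        self._backends = list(backends)
        self._healthy = set(self._backends)
        self._index = 0

    def mark(self, backend, healthy):
        # Called by a (hypothetical) health checker after each probe.
        if healthy:
            self._healthy.add(backend)
        else:
            self._healthy.discard(backend)

    def next_backend(self):
        # Try each backend at most once per call, starting from the current position.
        for _ in range(len(self._backends)):
            candidate = self._backends[self._index]
            self._index = (self._index + 1) % len(self._backends)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


pool = HealthAwarePool(["app-1", "app-2", "app-3"])
pool.mark("app-2", healthy=False)  # A failed health check removes app-2 from rotation.
during_outage = [pool.next_backend() for _ in range(4)]

pool.mark("app-2", healthy=True)   # Recovery puts it back.
after_recovery = [pool.next_backend() for _ in range(3)]
```

Raising an error when every backend is unhealthy is a deliberate choice in this sketch; a production setup might instead fall back to a static error page or a standby pool.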

techiny.com
