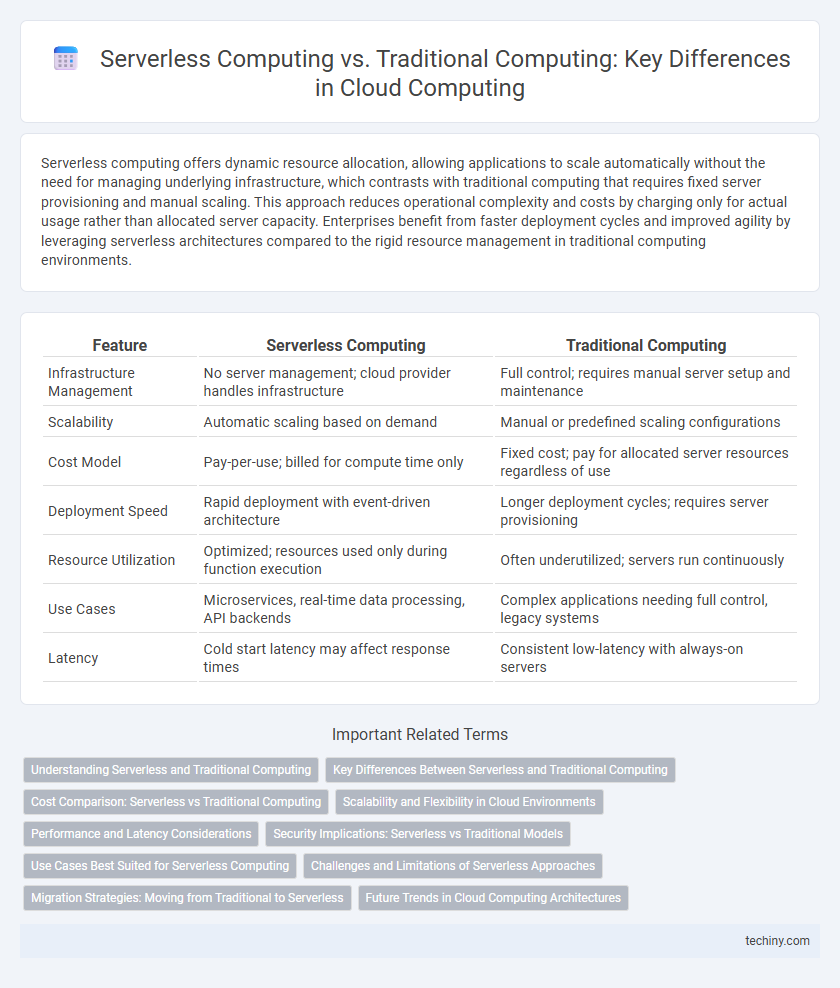

Serverless computing allocates resources dynamically, so applications scale automatically without anyone managing the underlying infrastructure. Traditional computing, by contrast, relies on fixed server provisioning and manual scaling. Because serverless platforms bill for actual usage rather than allocated server capacity, they can reduce both operational complexity and cost. Enterprises that adopt serverless architectures often gain faster deployment cycles and greater agility than the more rigid resource management of traditional environments allows.

Table of Comparison

| Feature | Serverless Computing | Traditional Computing |
|---|---|---|
| Infrastructure Management | No server management; the cloud provider handles infrastructure | Full control; requires manual server setup and maintenance |
| Scalability | Automatic scaling based on demand | Manual or predefined scaling configurations |
| Cost Model | Pay-per-use; billed only for invocations and execution time | Fixed cost; pay for allocated server resources regardless of use |
| Deployment Speed | Rapid deployment with event-driven architecture | Longer deployment cycles; requires server provisioning |
| Resource Utilization | Optimized; resources consumed only during function execution | Often underutilized; servers run continuously |
| Use Cases | Microservices, real-time data processing, API backends | Complex applications needing full control, legacy systems |
| Latency | Cold starts may add latency to some requests | Consistently low latency with always-on servers |

Understanding Serverless and Traditional Computing

Serverless computing takes server management off the developer's plate. Code runs on demand without any need to provision or maintain infrastructure, which improves scalability and cost efficiency. Traditional computing requires dedicated server resources and hands-on infrastructure management. This often means fixed capacity and higher operational overhead. Understanding these differences helps organizations choose the right model for their workload variability, cost constraints, and need for development agility.
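
To make the contrast concrete, here is a minimal sketch that exposes the same business logic in both styles. The serverless version is a single function the platform calls once per event. The `(event, context)` signature follows the AWS Lambda Python handler convention, and the `"name"` event key is a hypothetical example. The traditional version is a long-running HTTP process built on Python's standard `http.server`, which you would have to host, keep alive, and scale yourself.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def greet(name: str) -> dict:
    """Business logic shared by both deployment styles."""
    return {"message": f"Hello, {name}!"}


# Serverless style: the platform invokes this once per event; no process to manage.
# The "name" key is a hypothetical event field used only for illustration.
def handler(event, context=None):
    name = event.get("name", "world")
    return {"statusCode": 200, "body": json.dumps(greet(name))}


# Traditional style: a process we own listens on a port around the clock,
# and we are responsible for provisioning, patching, and scaling it.
class GreetHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = json.dumps(greet("world")).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run_server(port: int = 8080) -> None:
    # Blocks forever; the server consumes resources whether or not traffic arrives.
    HTTPServer(("0.0.0.0", port), GreetHandler).serve_forever()


if __name__ == "__main__":
    # Simulate a single serverless invocation locally instead of starting a server.
    print(handler({"name": "serverless"}))
```

In practice, keeping the logic in a plain function such as `greet` lets you switch between the two hosting models without rewriting it.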

Key Differences Between Serverless and Traditional Computing

Serverless computing removes the need for server management because the provider handles the infrastructure automatically. Developers can then focus on writing and deploying code. Traditional computing requires manual provisioning, scaling, and maintenance of servers, which adds operational overhead. Serverless offers dynamic scaling and pay-as-you-go pricing, whereas traditional computing involves fixed resource allocation and upfront costs.

Cost Comparison: Serverless vs Traditional Computing

Serverless computing removes the need to provision and maintain dedicated servers. It charges only for actual usage rather than fixed capacity, which can substantially reduce operational costs. Traditional computing tends to cost more because of ongoing server maintenance, hardware investment, and over-provisioning to handle peak demand. Serverless costs scale with the workload, so the model suits unpredictable or variable traffic. Traditional infrastructure fits steady, predictable workloads. Its baseline cost is higher, but at sustained high utilization a busy server can cost less per request than pay-per-invocation billing.
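
The trade-off can be expressed as a simple cost model. Pay-per-use cost grows linearly with request count, execution time, and allocated memory, while a provisioned server costs the same every month. The sketch below assumes billing per request plus per GB-second of compute, which is a common serverless pricing shape. The rates are illustrative assumptions, not a quote of any provider's current price list.

```python
from dataclasses import dataclass


@dataclass
class ServerlessPricing:
    # Illustrative rates (assumptions); substitute your provider's published prices.
    per_million_requests: float = 0.20
    per_gb_second: float = 0.0000166667


@dataclass
class Workload:
    requests_per_month: int
    avg_duration_s: float
    memory_gb: float


def serverless_monthly_cost(w: Workload, p: ServerlessPricing) -> float:
    """Pay-per-use: cost grows linearly with invocations and execution time."""
    request_cost = w.requests_per_month / 1_000_000 * p.per_million_requests
    compute_gb_s = w.requests_per_month * w.avg_duration_s * w.memory_gb
    return request_cost + compute_gb_s * p.per_gb_second


def traditional_monthly_cost(servers: int, monthly_price_per_server: float) -> float:
    """Fixed cost: paid whether the servers are busy or idle."""
    return servers * monthly_price_per_server


def break_even_requests(avg_duration_s: float, memory_gb: float,
                        fixed_monthly_cost: float, p: ServerlessPricing) -> float:
    """Monthly request volume at which both models cost the same."""
    cost_per_request = (p.per_million_requests / 1_000_000
                        + avg_duration_s * memory_gb * p.per_gb_second)
    return fixed_monthly_cost / cost_per_request


if __name__ == "__main__":
    pricing = ServerlessPricing()
    light = Workload(requests_per_month=1_000_000, avg_duration_s=0.2, memory_gb=0.5)
    print(round(serverless_monthly_cost(light, pricing), 2))  # 1.87
    print(traditional_monthly_cost(servers=2, monthly_price_per_server=50.0))  # 100.0
    print(round(break_even_requests(0.2, 0.5, 100.0, pricing)))
```

Take two hypothetical servers at 50 per month against these assumed rates. The break-even point falls at roughly 54 million requests per month. Below that volume, pay-per-use is cheaper. Above it, the fixed servers win, provided they can actually handle the load.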

Scalability and Flexibility in Cloud Environments

Serverless computing scales dynamically by allocating resources based on real-time demand, with no manual server management. Traditional computing relies on pre-provisioned infrastructure, which can lead to over-provisioning during quiet periods or resource shortages during traffic spikes. In cloud environments, serverless models add flexibility: developers focus on code while the platform handles scaling and availability.
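
A toy simulation shows the two failure modes of fixed provisioning next to demand-following scaling. Demand is given as requests per time step, and each instance is assumed to serve a fixed `capacity_per_instance`. The autoscaler is idealized, meaning scaling is instantaneous and there are no cold starts. The optional `max_instances` cap stands in for a platform concurrency limit. All of these are simplifying assumptions.

```python
import math


def fixed_pool(demand, instances, capacity_per_instance):
    """Pre-provisioned servers: capacity stays constant regardless of demand."""
    capacity = instances * capacity_per_instance
    dropped = sum(max(0, d - capacity) for d in demand)   # shortage during spikes
    idle = sum(max(0, capacity - d) for d in demand)      # paid-for but unused
    return {"dropped": dropped, "idle_capacity": idle,
            "instance_steps": instances * len(demand)}


def autoscaled(demand, capacity_per_instance, max_instances=None):
    """Idealized serverless scaling: instance count follows demand each step.

    Assumes instantaneous scaling; max_instances models a concurrency limit.
    """
    dropped = idle = instance_steps = 0
    for d in demand:
        needed = math.ceil(d / capacity_per_instance)
        n = needed if max_instances is None else min(needed, max_instances)
        capacity = n * capacity_per_instance
        dropped += max(0, d - capacity)
        idle += max(0, capacity - d)
        instance_steps += n
    return {"dropped": dropped, "idle_capacity": idle, "instance_steps": instance_steps}


if __name__ == "__main__":
    demand = [10, 10, 200, 15, 0]  # a quiet period with one spike
    print(fixed_pool(demand, instances=2, capacity_per_instance=50))
    print(autoscaled(demand, capacity_per_instance=50))
```

For this trace, the two-server pool drops 100 requests during the spike and still leaves 365 units of capacity idle. The autoscaled version drops none and uses fewer instance-steps overall (7 versus 10).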

Performance and Latency Considerations

Serverless computing allocates resources dynamically, which can reduce queuing latency for bursty workloads compared with traditional computing's pre-provisioned servers. However, cold starts can cause latency spikes. A cold start happens when the platform must initialize a new execution environment because no warm instance of the function is available. Traditional servers, in contrast, deliver consistent performance from dedicated, always-running resources. Performance optimization in serverless environments depends on efficient function deployment and event-driven invocation, while traditional computing offers predictable throughput and direct control over resources.
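
Cold-start behavior can be approximated with a small model. After each invocation, an instance stays warm for a keep-alive window. A request that arrives after the window has expired pays an extra initialization delay. The window length and delay values below are hypothetical parameters, since real platforms do not guarantee a fixed keep-alive. The model also assumes requests do not overlap, so a single instance serves them all.

```python
def simulate_latencies(arrival_times_s, exec_ms, cold_start_ms, keep_alive_s):
    """Per-request latency (ms) for a single function instance.

    Illustrative assumptions, not any provider's documented behavior:
    - requests don't overlap, so one instance serves them all;
    - the instance is reclaimed after keep_alive_s seconds without traffic;
    - the next request after reclamation (or the very first) pays cold_start_ms.
    """
    latencies = []
    last_finish = None
    for t in arrival_times_s:
        cold = last_finish is None or (t - last_finish) > keep_alive_s
        latency = exec_ms + (cold_start_ms if cold else 0)
        latencies.append(latency)
        last_finish = t + latency / 1000
    return latencies


if __name__ == "__main__":
    # Three requests in quick succession, a long idle gap, then two more.
    arrivals = [0, 1, 2, 700, 701]
    print(simulate_latencies(arrivals, exec_ms=20, cold_start_ms=400, keep_alive_s=300))
    # [420, 20, 20, 420, 20]
```

An always-on server would answer every request in this trace in the base 20 ms. That is the consistency trade-off described above: serverless latency is low on average but spikes after idle periods.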

Security Implications: Serverless vs Traditional Models

Serverless computing reduces the attack surface by abstracting infrastructure management and automating security patching. It does, however, introduce its own challenges. One is event injection, where untrusted data in a triggering event reaches queries or commands. Another is limited control over the runtime environment. Traditional computing offers greater control over security configurations and compliance but requires continuous manual management of patches, firewalls, and access controls. Both models demand robust identity and access management. Serverless architectures, though, rely heavily on the cloud provider's security frameworks, which shapes how teams approach threat detection and incident response.
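
A common defense against event injection is to treat every event field as untrusted, whatever service emitted it. In practice, that means validating fields against an allowlist before use and never splicing them into query or command strings. The sketch below uses Python's standard `sqlite3` module and a parameterized query. The `user_id` field, the `users` table, and the `db` parameter are all hypothetical. The `db` argument is injected only so the sketch runs locally and is not part of any platform's handler signature.

```python
import re
import sqlite3

# Allowlist: letters, digits, underscore, hyphen; 1 to 64 characters.
USER_ID_PATTERN = re.compile(r"[A-Za-z0-9_-]{1,64}")


class InvalidEvent(ValueError):
    pass


def validate_event(event):
    """Reject anything that doesn't match the expected shape exactly."""
    if not isinstance(event, dict):
        raise InvalidEvent("event must be a JSON object")
    user_id = event.get("user_id")
    if not isinstance(user_id, str) or not USER_ID_PATTERN.fullmatch(user_id):
        raise InvalidEvent("user_id missing or malformed")
    return user_id


def handler(event, context=None, db=None):
    # db is a hypothetical injected connection so the sketch runs locally.
    try:
        user_id = validate_event(event)
    except InvalidEvent as exc:
        return {"statusCode": 400, "body": str(exc)}
    # Parameterized query: the value is bound, never concatenated into the SQL text.
    row = db.execute("SELECT name FROM users WHERE id = ?", (user_id,)).fetchone()
    if row is None:
        return {"statusCode": 404, "body": "not found"}
    return {"statusCode": 200, "body": row[0]}


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id TEXT PRIMARY KEY, name TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 'Alice')")
    print(handler({"user_id": "alice"}, db=conn))
    print(handler({"user_id": "x' OR '1'='1"}, db=conn))  # rejected with 400
```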

Use Cases Best Suited for Serverless Computing

Serverless computing excels in event-driven applications such as real-time data processing, IoT backends, and microservices architectures, where automatic scaling and pay-per-use billing reduce operational overhead. It suits unpredictable workloads that see sudden traffic spikes, such as mobile app backends or chatbots, and allows rapid deployment without server management. Asynchronous tasks, file processing, and API backends fronted by an API gateway also benefit from serverless platforms, thanks to their fine-grained resource allocation and tight integration with other cloud services.
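
File processing is a typical example. An upload to object storage emits an event, and the platform invokes a function for it. The sketch below parses the record layout used by Amazon S3 event notifications (`Records[].s3.bucket.name` and `Records[].s3.object.key`). S3 URL-encodes object keys in these notifications, so the sketch decodes them with `urllib.parse.unquote_plus`. The `process_object` function is a hypothetical placeholder. Downloading the object would need an SDK call, which is left out here so the sketch runs locally.

```python
from urllib.parse import unquote_plus


def process_object(bucket, key, size):
    # Hypothetical placeholder for real work (thumbnailing, parsing, indexing, ...).
    return f"processed s3://{bucket}/{key} ({size} bytes)"


def handler(event, context=None):
    """Invoked once per batch of object-created notifications."""
    results = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        bucket = s3["bucket"]["name"]
        # Keys arrive URL-encoded in notifications (e.g. spaces become '+').
        key = unquote_plus(s3["object"]["key"])
        size = s3["object"].get("size", 0)
        results.append(process_object(bucket, key, size))
    return results


if __name__ == "__main__":
    sample_event = {
        "Records": [
            {"s3": {"bucket": {"name": "uploads"},
                    "object": {"key": "reports/q1+summary.csv", "size": 1024}}}
        ]
    }
    print(handler(sample_event))
    # ['processed s3://uploads/reports/q1 summary.csv (1024 bytes)']
```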

Challenges and Limitations of Serverless Approaches

Serverless computing faces challenges such as cold start latency. This is the delay while the platform initializes a new execution environment (for example, a container) and loads the function code, and it hurts performance-sensitive applications. Limited control over the underlying infrastructure restricts customization and makes debugging and monitoring harder than in traditional computing environments. Finally, resource constraints such as maximum execution time, together with the stateless nature of functions, make complex long-running workflows and stateful applications difficult to build on serverless architectures.
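
One way to work within execution time limits and statelessness is to process a batch in chunks and return a cursor instead of keeping progress in memory. The sketch below checks the remaining time through `context.get_remaining_time_in_millis()`, a method on the AWS Lambda Python context object. For local testing it substitutes a hypothetical `FakeContext`. The `items`, `cursor`, and `safety_margin_ms` event fields and `handle_item` are illustrative assumptions. In production, the caller or an orchestration service would re-invoke the function with the returned cursor.

```python
import time


def handle_item(item):
    # Hypothetical per-item work.
    return item * 2


def handler(event, context):
    """Process items from event['cursor'] until the time budget runs low.

    Progress is returned as a cursor because the next invocation may run
    on a fresh instance with no memory of this one.
    """
    items = event["items"]
    cursor = event.get("cursor", 0)
    safety_margin_ms = event.get("safety_margin_ms", 1000)
    results = []
    while cursor < len(items):
        if context.get_remaining_time_in_millis() < safety_margin_ms:
            break  # stop before the platform's hard timeout kills the invocation
        results.append(handle_item(items[cursor]))
        cursor += 1
    return {"results": results, "cursor": cursor, "done": cursor >= len(items)}


class FakeContext:
    """Local stand-in for the platform context with a fixed time budget."""

    def __init__(self, budget_ms):
        self._deadline = time.monotonic() + budget_ms / 1000

    def get_remaining_time_in_millis(self):
        return max(0, int((self._deadline - time.monotonic()) * 1000))


if __name__ == "__main__":
    print(handler({"items": [1, 2, 3]}, FakeContext(60_000)))
    # {'results': [2, 4, 6], 'cursor': 3, 'done': True}
```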

Migration Strategies: Moving from Traditional to Serverless

Migrating from traditional to serverless computing involves redesigning applications around event-driven architectures and microservices to minimize server management. Key strategies include identifying stateless components, refactoring legacy code to run on Function-as-a-Service (FaaS) platforms, and adopting Infrastructure as Code (IaC) tools to automate deployment. A gradual transition through a hybrid model, in which migrated components run serverless while the rest stays on existing servers, makes migration smoother while improving cost, scalability, and operational efficiency in cloud environments.
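
One common way to run such a hybrid transition is the strangler-fig pattern. A routing layer sends requests for already-migrated paths to new functions and lets everything else fall through to the legacy application, and a route can be rolled back if needed. The sketch below models that router in plain Python. `legacy_app`, `orders_function`, and the path prefixes are hypothetical stand-ins. In a real deployment, this routing would usually live in an API gateway or load balancer configuration rather than in application code.

```python
from typing import Callable, Dict

Handler = Callable[[dict], dict]


def legacy_app(request: dict) -> dict:
    # Hypothetical stand-in for the existing monolith on provisioned servers.
    return {"served_by": "legacy", "path": request["path"]}


def orders_function(request: dict) -> dict:
    # Hypothetical stateless component already refactored into a FaaS function.
    return {"served_by": "serverless:orders", "path": request["path"]}


class MigrationRouter:
    """Migrated path prefixes go to functions; everything else goes to legacy."""

    def __init__(self, fallback: Handler):
        self.fallback = fallback
        self.routes: Dict[str, Handler] = {}

    def migrate(self, prefix: str, fn: Handler) -> None:
        self.routes[prefix] = fn

    def rollback(self, prefix: str) -> None:
        self.routes.pop(prefix, None)

    def dispatch(self, request: dict) -> dict:
        path = request["path"]
        # Simple string-prefix match; the longest matching prefix wins.
        matches = [p for p in self.routes if path.startswith(p)]
        if not matches:
            return self.fallback(request)
        return self.routes[max(matches, key=len)](request)


if __name__ == "__main__":
    router = MigrationRouter(fallback=legacy_app)
    router.migrate("/api/orders", orders_function)
    print(router.dispatch({"path": "/api/orders/42"})["served_by"])  # serverless:orders
    print(router.dispatch({"path": "/api/users/7"})["served_by"])    # legacy
```

The rollback method reflects the "gradual" part of the strategy: a misbehaving migrated route can be pointed back at the legacy system without redeploying either side.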

Future Trends in Cloud Computing Architectures

Serverless computing is rapidly reshaping cloud architectures by enabling scalable, event-driven applications without the need for infrastructure management, contrasting sharply with traditional computing's fixed resource allocation. Future trends indicate a shift towards hybrid models combining the agility of serverless with the control of traditional architectures to optimize cost, performance, and security. Advances in edge computing and AI integration further accelerate this evolution, making cloud services more dynamic and responsive to real-time demands.

Serverless Computing vs Traditional Computing Infographic

techiny.com
