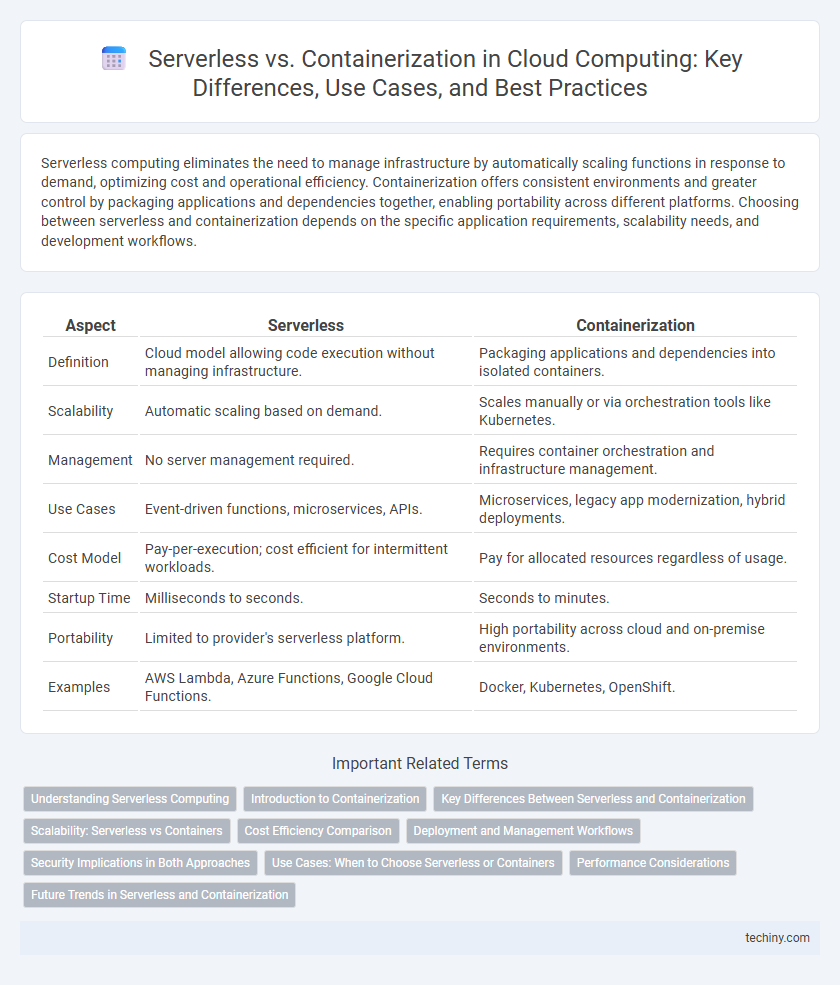

Serverless computing removes server management from the developer's workload: the provider runs the infrastructure and automatically scales functions in response to demand, which can lower cost and operational effort. Containerization offers consistent environments and greater control by packaging applications and their dependencies together, enabling portability across different platforms. Choosing between serverless and containerization depends on the specific application requirements, scalability needs, and development workflows.

Comparison Table

| Aspect | Serverless | Containerization |
|---|---|---|
| Definition | Cloud model in which code runs without the developer managing servers. | Packaging applications and their dependencies into isolated containers. |
| Scalability | Automatic scaling based on demand. | Scales manually or via orchestration tools such as Kubernetes. |
| Management | No server management required. | Requires container orchestration and infrastructure management. |
| Use Cases | Event-driven functions, microservices, APIs. | Microservices, legacy application modernization, hybrid deployments. |
| Cost Model | Pay per execution; cost-efficient for intermittent workloads. | Pay for allocated resources regardless of usage. |
| Startup Time | Milliseconds for warm instances; cold starts can take up to several seconds. | Typically seconds; up to minutes when images must be pulled or new nodes provisioned. |
| Portability | Largely tied to the provider's serverless platform. | High portability across cloud and on-premises environments. |
| Examples | AWS Lambda, Azure Functions, Google Cloud Functions. | Docker, Kubernetes, OpenShift. |

Understanding Serverless Computing

Serverless computing enables developers to run applications without managing the underlying infrastructure, while the platform scales resources automatically based on demand. By abstracting server management, it reduces operational complexity and speeds up deployment of cloud-native applications. Key benefits include cost efficiency, event-driven execution, and tight integration with other services from the same cloud provider, as offered by platforms such as AWS Lambda, Azure Functions, and Google Cloud Functions.
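As a rough illustration of the event-driven model, here is a minimal Python function written in the style of an AWS Lambda handler, which the platform invokes with an `event` payload and a `context` object. The handler name `lambda_handler`, the `name` field in the event, and the local test call are assumptions for this sketch; real event shapes depend on the trigger, and the platform, not the code, decides when and how many copies run.

```python
import json


def lambda_handler(event, context):
    """Return an HTTP-style response greeting the caller.

    Assumption: the event is a dict that may carry a "name" key. Real
    events differ by trigger (HTTP gateway, storage upload, queue, ...).
    """
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test: on the platform, event and context are supplied for you.
    print(lambda_handler({"name": "Ada"}, None))
```

Note what is absent: no web server, port binding, or process management. Those concerns belong to the platform in the serverless model.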

Introduction to Containerization

Containerization encapsulates applications and their dependencies into lightweight, portable units that run consistently across various computing environments. Unlike traditional virtualization, containers share the host operating system kernel, enabling faster startup times and efficient resource utilization. This technology underpins modern cloud-native architectures, facilitating scalable deployment and simplified management of microservices.

Key Differences Between Serverless and Containerization

Serverless platforms manage infrastructure, scaling, and resource allocation automatically, so developers can focus on application code rather than servers. Containerization, in contrast, packages applications and their dependencies into isolated environments that deploy consistently across platforms, but it requires explicit management of the underlying infrastructure. Serverless excels at event-driven, unpredictable workloads, while containerization provides greater control and flexibility for complex, stateful applications.

Scalability: Serverless vs Containers

Serverless computing offers automatic scaling by dynamically allocating resources in response to incoming requests, making it ideal for unpredictable workloads with rapid fluctuations. Containers provide scalable environments through orchestration platforms like Kubernetes, enabling fine-grained control over scaling policies and resource allocation for consistent, long-running applications. Both approaches enhance scalability, but serverless excels in event-driven, transient functions while containers support complex, persistent services with customizable scaling strategies.
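The two scaling models can be contrasted with the back-of-the-envelope formulas each platform documents: AWS describes Lambda concurrency as roughly the request rate multiplied by the average request duration, and the Kubernetes Horizontal Pod Autoscaler computes desired replicas as `ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below implements both in Python; it deliberately ignores the HPA's tolerance band, min/max replica bounds, and stabilization windows, and the example numbers are made up.

```python
import math


def _ceil(x: float) -> int:
    # Round away floating-point noise before taking the ceiling;
    # e.g. 100 * 1.1 evaluates to 110.00000000000001, not 110.
    return math.ceil(round(x, 9))


def function_concurrency(requests_per_second: float, avg_duration_s: float) -> int:
    """Concurrent function executions needed: request rate x average duration."""
    return _ceil(requests_per_second * avg_duration_s)


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float) -> int:
    """Core Kubernetes HPA rule (no tolerance, bounds, or stabilization)."""
    return _ceil(current_replicas * (current_metric / target_metric))


if __name__ == "__main__":
    # 100 requests/s lasting 0.5 s each keep about 50 instances busy at once.
    print(function_concurrency(100, 0.5))  # 50
    # 4 pods averaging 90% CPU against a 60% target scale to 6 pods.
    print(hpa_desired_replicas(4, 90, 60))  # 6
```

The serverless number is something the platform works out for you on every burst; the HPA number is something you tune by choosing the metric and target, which is the "fine-grained control" the paragraph refers to.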

Cost Efficiency Comparison

Serverless computing eliminates the need to provision and manage servers and bills only for actual usage, which can substantially reduce costs for intermittent or unpredictable workloads. Containerization typically involves continuously running capacity plus the ongoing effort of managing an orchestration platform such as Kubernetes, resulting in higher fixed costs despite its scalability benefits. Because serverless resources scale with demand, including down to zero when idle, they avoid the idle-capacity expenses common in containerized environments; for steady, high-volume traffic, however, per-execution pricing can exceed the cost of containers that are kept busy.
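To make the trade-off concrete, here is a small cost model. All prices are hypothetical placeholders rather than any provider's current rates, and the 730-hour month is an assumption; plug in real pricing before drawing conclusions. What the sketch illustrates is structural: serverless cost grows linearly with traffic, while provisioned containers cost the same whether busy or idle, so there is a break-even volume above which containers become cheaper.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # assumption: average month length in hours


@dataclass
class ServerlessPricing:
    # Hypothetical rates for illustration only, not a real price list.
    per_million_requests: float
    per_gb_second: float


def serverless_cost_per_request(p: ServerlessPricing, avg_duration_s: float,
                                memory_gb: float) -> float:
    """Request fee plus compute billed in GB-seconds."""
    return p.per_million_requests / 1_000_000 + avg_duration_s * memory_gb * p.per_gb_second


def serverless_monthly_cost(p: ServerlessPricing, requests: int,
                            avg_duration_s: float, memory_gb: float) -> float:
    """Pay per execution: in this model, no requests means no charge."""
    return requests * serverless_cost_per_request(p, avg_duration_s, memory_gb)


def container_monthly_cost(instances: int, hourly_rate: float) -> float:
    """Provisioned capacity: billed for every hour, busy or idle."""
    return instances * hourly_rate * HOURS_PER_MONTH


def break_even_requests(p: ServerlessPricing, avg_duration_s: float, memory_gb: float,
                        instances: int, hourly_rate: float) -> float:
    """Monthly request volume at which both models cost the same."""
    fixed = container_monthly_cost(instances, hourly_rate)
    return fixed / serverless_cost_per_request(p, avg_duration_s, memory_gb)


if __name__ == "__main__":
    pricing = ServerlessPricing(per_million_requests=0.20, per_gb_second=0.00002)
    for monthly_requests in (1_000_000, 100_000_000):
        fn = serverless_monthly_cost(pricing, monthly_requests, 0.1, 0.5)
        print(f"{monthly_requests:>11,} requests: serverless ${fn:.2f}")
    print(f"2 containers: ${container_monthly_cost(2, 0.05):.2f}")
    print(f"break-even: {break_even_requests(pricing, 0.1, 0.5, 2, 0.05):,.0f} requests/month")
```

With these made-up numbers, a million monthly requests cost far less on the serverless side, while a hundred million cost more than two always-on containers; the break-even lands at roughly 61 million requests per month.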

Deployment and Management Workflows

Serverless computing simplifies deployment by abstracting infrastructure management, letting developers ship code without provisioning servers. Containerization packages applications and their dependencies into portable images that are usually deployed, updated, and monitored with orchestration tools such as Kubernetes, which brings its own management workflow. Serverless reduces operational overhead, while containerization provides greater control over the application environment and scaling strategies.
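For contrast with the serverless workflow, a container deployment is usually described declaratively. The sketch below builds a minimal Kubernetes `apps/v1` Deployment manifest in Python and prints it as JSON, which `kubectl apply -f` accepts alongside YAML; the app name, image reference, port, resource values, and the script name in the comment are placeholder assumptions.

```python
import json


def make_deployment(name: str, image: str, replicas: int = 2, port: int = 8080) -> dict:
    """Build a minimal Kubernetes apps/v1 Deployment manifest as a dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Explicit requests/limits: control you gain, and work you own.
                        "resources": {
                            "requests": {"cpu": "250m", "memory": "256Mi"},
                            "limits": {"cpu": "500m", "memory": "512Mi"},
                        },
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = make_deployment("my-app", "registry.example.com/my-app:1.0")
    # e.g.: python make_manifest.py > deploy.json && kubectl apply -f deploy.json
    print(json.dumps(manifest, indent=2))
```

Replica counts, resource sizing, rollout behavior, and the image build itself are all decisions the team makes here, whereas a function platform handles most of them implicitly.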

Security Implications in Both Approaches

Serverless architectures minimize attack surfaces by abstracting infrastructure management, reducing direct exposure to OS-level vulnerabilities, yet they may introduce risks tied to function-level permissions and third-party dependencies. Containerization offers granular control over security configurations and isolation through namespaces and cgroups but requires diligent patch management and vulnerability scanning to mitigate risks from container breakouts and misconfigurations. Both approaches necessitate robust identity and access management, encryption practices, and continuous monitoring to secure workloads effectively in cloud environments.
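Function-level permissions are where much of the serverless risk concentrates. A least-privilege AWS IAM policy grants each function only the actions and resources it needs; the sketch below defines one such policy document (the table ARN and account ID are placeholders) and adds a hypothetical `find_wildcards` helper that flags `*` in allowed actions or resources. It is a crude lint, not a substitute for dedicated policy-validation tools such as IAM Access Analyzer, and some wildcards in resource ARNs are legitimate.

```python
import json

# Placeholder ARN: region, account ID, and table name are illustrative only.
ORDERS_TABLE_ARN = "arn:aws:dynamodb:us-east-1:123456789012:table/Orders"

# Least privilege: this function may only read single items from one table.
READ_ORDERS_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": ["dynamodb:GetItem"],
        "Resource": ORDERS_TABLE_ARN,
    }],
}


def find_wildcards(policy: dict) -> list[str]:
    """Hypothetical lint: warn about '*' in Allow statements' Action/Resource.

    Ignores NotAction/NotResource and Condition blocks; a heuristic only.
    """
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    warnings = []
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        for key in ("Action", "Resource"):
            values = stmt.get(key, [])
            if isinstance(values, str):  # a single value may be a bare string
                values = [values]
            warnings.extend(
                f"statement {i}: {key} {v!r} uses a wildcard" for v in values if "*" in v
            )
    return warnings


if __name__ == "__main__":
    overly_broad = {
        "Version": "2012-10-17",
        "Statement": {"Effect": "Allow", "Action": "dynamodb:*", "Resource": "*"},
    }
    print(json.dumps(READ_ORDERS_POLICY, indent=2))
    print(find_wildcards(READ_ORDERS_POLICY))  # no warnings
    print(find_wildcards(overly_broad))        # two warnings
```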

Use Cases: When to Choose Serverless or Containers

Serverless architectures are ideal for event-driven applications, microservices with unpredictable workloads, and rapid development cycles that benefit from minimal infrastructure management. Containerization suits complex, stateful applications that need fine-grained control over the environment, consistent deployment across hybrid or multi-cloud setups, and long-running processes, which can exceed the per-invocation time limits of function platforms (AWS Lambda, for example, caps a single invocation at 15 minutes). Choosing between serverless and containers depends on factors such as scaling needs, operational complexity, and cost optimization strategies.
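The criteria above can be condensed into a rough rule of thumb. The sketch below is a hypothetical helper, not an established decision procedure: the `Workload` fields and the 0.6 utilization threshold are illustrative assumptions, and only the 15-minute cap reflects a documented limit (AWS Lambda's maximum timeout; other platforms differ).

```python
from dataclasses import dataclass

# AWS Lambda caps a single invocation at 15 minutes; other platforms differ.
MAX_FUNCTION_RUNTIME_S = 15 * 60


@dataclass
class Workload:
    # Hypothetical fields chosen to mirror the criteria in the text.
    longest_task_s: float     # longest single unit of work, in seconds
    event_driven: bool        # triggered by events/requests rather than always on
    needs_local_state: bool   # keeps in-process or on-disk state between requests
    must_run_on_prem: bool    # hybrid, on-premises, or multi-cloud portability needed
    avg_utilization: float    # 0.0 to 1.0, fraction of time there is work to do


def suggest_platform(w: Workload, busy_threshold: float = 0.6) -> str:
    """Rule of thumb only; the threshold is an illustrative assumption."""
    if w.longest_task_s > MAX_FUNCTION_RUNTIME_S:
        return "containers"  # exceeds the function time limit
    if w.needs_local_state or w.must_run_on_prem:
        return "containers"  # needs control or portability
    if w.event_driven and w.avg_utilization < busy_threshold:
        return "serverless"  # bursty or intermittent traffic
    return "containers"      # steady, busy services favor always-on capacity


if __name__ == "__main__":
    webhook = Workload(2, True, False, False, 0.05)
    batch_job = Workload(3600, False, False, False, 0.9)
    print(suggest_platform(webhook), suggest_platform(batch_job))
```

Real decisions also weigh team skills, existing tooling, and vendor commitments, which a function like this cannot capture.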

Performance Considerations

Serverless computing offers automatic scaling and low management overhead for event-driven workloads, but it can introduce cold-start latency: when no warm instance is available, the platform must initialize a new one before handling the request, which lengthens response times. Long-running containers avoid this delay because instances stay up between requests, and dedicated resource allocation gives them consistent, predictable latency for steady-state applications. Choosing between serverless and containerization depends on workload patterns, latency sensitivity, and scalability requirements.
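A common way to soften cold starts on function platforms is to perform expensive setup once per execution environment and reuse it while the instance stays warm; AWS, for example, recommends initializing SDK clients and connections outside the handler. The sketch below simulates that pattern with a lazily created, module-level stand-in for a client; `create_client`, its 50 ms sleep, and the `STATS` counter are hypothetical placeholders for real initialization work.

```python
import time

# Module-level state survives between invocations while the instance stays warm.
STATS = {"inits": 0}
client = None


def create_client() -> dict:
    """Stand-in for expensive setup (SDK clients, DB pools, model loading)."""
    STATS["inits"] += 1
    time.sleep(0.05)  # simulated initialization cost
    return {"connected_at": time.time()}


def handler(event, context):
    global client
    cold = client is None
    if cold:
        client = create_client()  # paid only on a cold start
    # ... real work would use `client` here ...
    return {"cold_start": cold}
```

Calling `handler` twice in the same process reports a cold start only on the first call, and `create_client` runs once. On a real platform, every newly started instance begins the cycle again, which is why options such as pre-provisioned, always-warm capacity exist.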

Future Trends in Serverless and Containerization

Future trends in serverless computing emphasize enhanced scalability, improved event-driven architecture, and deeper integration with AI and machine learning services, enabling more efficient resource utilization and cost savings. Containerization is evolving with advancements in Kubernetes orchestration, improved security measures such as runtime threat detection, and edge computing support, facilitating seamless deployment across hybrid and multi-cloud environments. The convergence of serverless and container technologies is expected to drive innovation in microservices architecture, promoting faster development cycles and greater operational flexibility.

Serverless vs Containerization Infographic

techiny.com