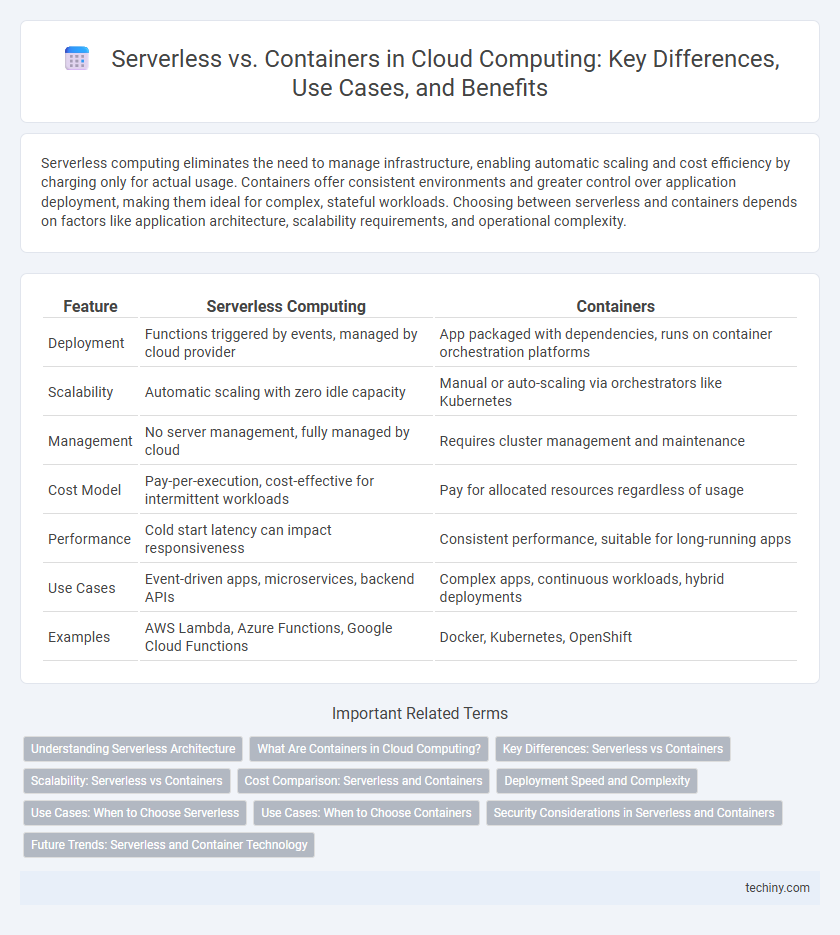

Serverless computing eliminates the need to manage infrastructure, enabling automatic scaling and cost efficiency by charging only for actual usage. Containers offer consistent environments and greater control over application deployment, making them ideal for complex, stateful workloads. Choosing between serverless and containers depends on factors like application architecture, scalability requirements, and operational complexity.

Table of Comparison

| Feature | Serverless Computing | Containers |
|---|---|---|
| Deployment | Functions triggered by events, managed by cloud provider | App packaged with dependencies, runs on container orchestration platforms |
| Scalability | Automatic scaling with zero idle capacity | Manual or auto-scaling via orchestrators like Kubernetes |
| Management | No server management, fully managed by cloud | Requires cluster management and maintenance |
| Cost Model | Pay-per-execution, cost-effective for intermittent workloads | Pay for allocated resources regardless of usage |
| Performance | Cold start latency can impact responsiveness | Consistent performance, suitable for long-running apps |
| Use Cases | Event-driven apps, microservices, backend APIs | Complex apps, continuous workloads, hybrid deployments |
| Examples | AWS Lambda, Azure Functions, Google Cloud Functions | Docker, Kubernetes, OpenShift |

Understanding Serverless Architecture

Serverless architecture removes the need to manage underlying infrastructure: the platform allocates and scales resources automatically based on demand, so developers can concentrate on application code. Unlike containers, which require orchestration and maintenance of runtime environments, serverless functions run in stateless, event-driven environments, which reduces operational overhead and, for intermittent workloads, cost. Cloud provider-managed services such as AWS Lambda or Azure Functions handle scaling, availability, and fault tolerance transparently.
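To make the stateless, event-driven model concrete, here is a minimal sketch in the style of an AWS Lambda Python handler (`lambda_handler(event, context)`). It assumes an API Gateway proxy-style event whose `body` is a JSON string; the `name` field is a hypothetical example payload.

```python
import json


def lambda_handler(event, context):
    """Stateless handler: everything it needs arrives in `event`.

    Assumes an API Gateway proxy-style event; the "name" field is hypothetical.
    """
    # Parse the request body; treat a missing body as an empty payload.
    payload = json.loads(event.get("body") or "{}")
    name = payload.get("name", "world")

    # Nothing is kept between invocations: the response depends only on the input.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation for experimentation; the platform normally supplies `context`.
    response = lambda_handler({"body": json.dumps({"name": "Ada"})}, None)
    print(response["body"])
```

Because the function holds no state, the provider is free to run as many copies in parallel as incoming events require, or none at all when traffic stops.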

What Are Containers in Cloud Computing?

Containers in cloud computing are lightweight, portable execution environments that package an application and its dependencies into a single unit, ensuring consistent behavior across computing environments. Unlike traditional virtual machines, which each include a full guest operating system, containers share the host OS kernel, so they start faster and use resources more efficiently, which enables rapid deployment and scaling. Container orchestration tools like Kubernetes automate scheduling, resource management, and scaling, making containers a popular choice for microservices and agile development workflows.
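A minimal sketch of the kind of long-running service a container typically packages, using only Python's standard library. The `PORT` environment variable, the `/healthz` path, and the `route` and `make_server` helpers are illustrative assumptions; a Dockerfile (not shown) would copy this file into an image built on a Python base image and set it as the entrypoint.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def route(path):
    """Map a request path to (status, payload); free of HTTP plumbing so it is easy to test."""
    if path == "/healthz":
        return 200, {"status": "ok"}
    return 404, {"error": "not found"}


class AppHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, payload = route(self.path)
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port=None):
    # Listen on all interfaces so the port is reachable through the container's port mapping.
    if port is None:
        port = int(os.environ.get("PORT", "8080"))
    return HTTPServer(("0.0.0.0", port), AppHandler)


if __name__ == "__main__":
    # Runs until the container is stopped.
    make_server().serve_forever()
```

Reading configuration such as the port from the environment lets the same image run unchanged in development, testing, and production, with only the orchestrator's settings differing.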

Key Differences: Serverless vs Containers

Serverless computing abstracts infrastructure management: functions scale automatically with demand, and there are no servers to provision or manage. Containers, by contrast, encapsulate an application with its dependencies, offering consistent environments from development through production. Containers also provide greater control over the runtime and resource allocation, enabling customization and, with attached storage volumes, persistent state, while serverless platforms optimize resource use through ephemeral, event-driven execution. The choice between serverless and containers hinges on factors such as scalability requirements, operational complexity, and application architecture.

Scalability: Serverless vs Containers

Serverless architectures scale automatically with demand, up to provider-imposed concurrency limits, without any manual resource management, and you pay only for the capacity actually used. Containers can also scale, but automating it requires an orchestrator such as Kubernetes, which adds setup and maintenance complexity. Serverless excels at unpredictable workloads, while containers provide granular control over scaling for consistent, long-running applications.
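The two scaling models can be compared with a little arithmetic. The sketch below implements the replica formula documented for the Kubernetes Horizontal Pod Autoscaler, `desired = ceil(current_replicas * current_metric / target_metric)`, with its default 0.1 tolerance and min/max clamping, alongside the common serverless concurrency estimate (requests per second times average duration, i.e. Little's law). Real autoscalers add stabilization windows and other behavior not modeled here, and the function names are hypothetical.

```python
import math


def hpa_desired_replicas(current_replicas, current_metric, target_metric,
                         min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count per the HPA formula: ceil(current * current_metric / target_metric).

    Ratios within `tolerance` of 1.0 leave the replica count unchanged.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))


def serverless_concurrency(requests_per_second, avg_duration_seconds):
    """Estimated concurrent function instances: arrival rate x average duration."""
    return math.ceil(requests_per_second * avg_duration_seconds)


if __name__ == "__main__":
    print(hpa_desired_replicas(4, current_metric=90, target_metric=60))
    print(serverless_concurrency(200, 0.25))
```

For example, 4 pods averaging 90% CPU against a 60% target scale to 6 pods, while 200 requests per second at 0.25 s each need about 50 concurrent function instances. With containers you choose and tune these bounds; with serverless the platform does it for you, within its quotas.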

Cost Comparison: Serverless and Containers

Serverless computing eliminates the need to manage server infrastructure and uses a pay-as-you-go model that charges only for actual execution, which can significantly reduce costs for variable or low-traffic workloads. Containers run on provisioned capacity, typically managed by an orchestration platform like Kubernetes, so you pay for reserved resources even while they sit idle. That idle cost makes containers expensive for spiky or low-traffic workloads, but for continuous, predictable workloads that keep the capacity busy, containers are often cheaper than paying per invocation. Evaluating workload patterns and scaling requirements is essential to determine whether serverless's event-driven pricing or containers' resource allocation yields better cost efficiency.
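A rough way to evaluate workload patterns is to model both bills and find the break-even volume. This sketch assumes a pricing shape common to function platforms (a per-request charge plus a charge per GB-second of compute) and a flat hourly rate per always-on container instance. All rates are hypothetical placeholders, not any provider's actual prices, and free tiers, data transfer, control-plane fees, and operations labor are ignored.

```python
from dataclasses import dataclass


@dataclass
class ServerlessPricing:
    # Hypothetical rates for illustration only; check your provider's current price list.
    per_million_requests: float
    per_gb_second: float


def serverless_monthly_cost(requests, avg_duration_s, memory_gb, pricing):
    """Pay-per-execution: billed for invocations plus compute time in GB-seconds."""
    gb_seconds = requests * avg_duration_s * memory_gb
    return (requests / 1_000_000) * pricing.per_million_requests + gb_seconds * pricing.per_gb_second


def container_monthly_cost(instances, hourly_rate, hours=730):
    """Provisioned capacity: billed for every hour the instances run, busy or idle."""
    return instances * hourly_rate * hours


def break_even_requests(avg_duration_s, memory_gb, pricing, fixed_monthly_cost):
    """Monthly request volume at which serverless spend equals a fixed container bill."""
    cost_per_request = (pricing.per_million_requests / 1_000_000
                        + avg_duration_s * memory_gb * pricing.per_gb_second)
    return fixed_monthly_cost / cost_per_request


if __name__ == "__main__":
    pricing = ServerlessPricing(per_million_requests=0.25, per_gb_second=0.00002)
    fixed = container_monthly_cost(instances=2, hourly_rate=0.05)
    print(serverless_monthly_cost(1_000_000, 0.2, 0.5, pricing), fixed)
    print(round(break_even_requests(0.2, 0.5, pricing, fixed)))
```

With these made-up rates, 1 million 200 ms invocations at 512 MB cost about $2.25 versus $73 for two always-on instances, and the two bills meet at roughly 32 million requests per month. Above that volume, the provisioned containers become the cheaper option.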

Deployment Speed and Complexity

Serverless architectures enable rapid deployment by abstracting infrastructure management, allowing developers to focus on code, which significantly reduces setup time. Containers require more configuration and orchestration, such as building Docker images and managing Kubernetes clusters, which adds initial complexity but offers more control over the deployment environment. Serverless functions can typically be deployed faster than containerized services, while containers provide the flexibility needed for complex application scenarios.

Use Cases: When to Choose Serverless

Serverless computing is ideal for event-driven applications, such as real-time data processing, IoT backends, and chatbots, where automatic scaling and minimal infrastructure management are priorities. It excels in microservices architectures requiring rapid deployment, cost-efficiency, and seamless scaling based on demand. Organizations benefit from serverless when workloads experience variable traffic patterns or unpredictable spikes, eliminating the need for constant server provisioning.
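As an example of the IoT-backend case, here is a sketch of a queue-triggered function that processes a batch of sensor readings. It assumes the AWS Lambda SQS event shape (`event["Records"]`, each record carrying `messageId` and `body`) and that partial batch responses (`batchItemFailures`) are enabled on the trigger. The reading fields, the 80 °C threshold, and the `send_alert` helper are hypothetical.

```python
import json

TEMPERATURE_ALERT_C = 80  # hypothetical alert threshold


def send_alert(device_id, temperature_c):
    # Hypothetical stand-in for a notification call; here it only logs.
    print(f"ALERT device={device_id} temperature_c={temperature_c}")


def handle_sensor_batch(event, context):
    """Process queued readings; report only malformed messages as failures."""
    failures = []
    for record in event.get("Records", []):
        try:
            reading = json.loads(record["body"])
            if reading["temperature_c"] > TEMPERATURE_ALERT_C:
                send_alert(reading["device_id"], reading["temperature_c"])
        except (KeyError, TypeError, ValueError):
            # Reporting just this message ID lets the queue retry it
            # without reprocessing the rest of the batch.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

When a fleet of devices suddenly sends more data, the platform simply runs more copies of this function in parallel. There is no server pool to resize in advance.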

Use Cases: When to Choose Containers

Containers are ideal for complex applications requiring consistent environments across diverse development, testing, and production stages, especially when microservices architecture is involved. They provide fine-grained control over dependencies and runtime, making them suitable for stateful applications and workloads needing persistent storage. Use containers when orchestrating scalable applications with Kubernetes or when migrating legacy applications that demand predictable, isolated environments.
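The following is a sketch of a long-running, stateful worker of the kind that suits a container. It keeps state in memory across units of work and handles `SIGTERM`, which Docker and Kubernetes send before forcibly stopping a container, so it can finish and persist its work cleanly. The `Worker` class and its placeholder work are hypothetical.

```python
import signal
import time


class Worker:
    """Long-running process that keeps in-memory state and shuts down on SIGTERM."""

    def __init__(self):
        self.running = True
        self.processed = 0  # state that survives between units of work

    def handle_sigterm(self, signum, frame):
        # The orchestrator sends SIGTERM before killing the container; stop after
        # the current item instead of dying mid-write.
        self.running = False

    def do_one_unit_of_work(self):
        # Hypothetical placeholder for real work, such as draining a queue.
        self.processed += 1

    def run(self, max_iterations=None, poll_interval_s=0.0):
        while self.running:
            self.do_one_unit_of_work()
            if max_iterations is not None and self.processed >= max_iterations:
                break
            time.sleep(poll_interval_s)
        # Flush state here before exiting, e.g. to a mounted persistent volume.
        return self.processed


if __name__ == "__main__":
    worker = Worker()
    signal.signal(signal.SIGTERM, worker.handle_sigterm)
    worker.run(poll_interval_s=1.0)
```

A process like this runs for hours or days and relies on local state between iterations. Both properties fit a container better than a short-lived, stateless function.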

Security Considerations in Serverless and Containers

Serverless architecture offers a reduced attack surface because functions are short-lived and the provider manages and patches the underlying servers and operating system, although application code and its dependencies still need securing. Containers, in contrast, require diligent configuration and continuous monitoring to prevent exploits from misconfigured or vulnerable images, container escape vulnerabilities, and insecure API exposure. Both demand strong identity and access management (IAM) policies, but serverless environments make it natural to grant granular permissions per function, whereas containers typically also need runtime security tools to detect and mitigate threats.
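Function-level least privilege can be sketched as a small builder that produces an AWS IAM policy document granting one function a single action on a single resource. The region, account ID, and table name are placeholders, the `function_policy` helper is hypothetical, and attaching the policy to the function's execution role is not shown.

```python
import json


def function_policy(region, account_id, table_name, actions=("dynamodb:GetItem",)):
    """Build a least-privilege IAM policy scoped to one DynamoDB table.

    Granting each function only the actions and the single resource it needs
    limits what an attacker can do if that one function is compromised.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": list(actions),
                "Resource": f"arn:aws:dynamodb:{region}:{account_id}:table/{table_name}",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(function_policy("us-east-1", "123456789012", "orders"), indent=2))
```

Because every function gets its own narrowly scoped policy, a bug in one function cannot be used to read or modify resources that only other functions need.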

Future Trends: Serverless and Container Technology

Serverless and container technologies are evolving rapidly. Serverless architecture is gaining momentum because its automated scaling and cost efficiency let developers focus on code. Container technology is advancing through enhanced orchestration tools like Kubernetes and integration with hybrid cloud environments, providing greater flexibility and control in deploying applications. The two models are also converging, as platforms such as Google Cloud Run and Knative run container images with serverless-style, request-driven autoscaling, driven by the need for optimized resource utilization, improved security, and seamless application portability across multi-cloud infrastructures.

Serverless vs Containers Infographic

techiny.com
