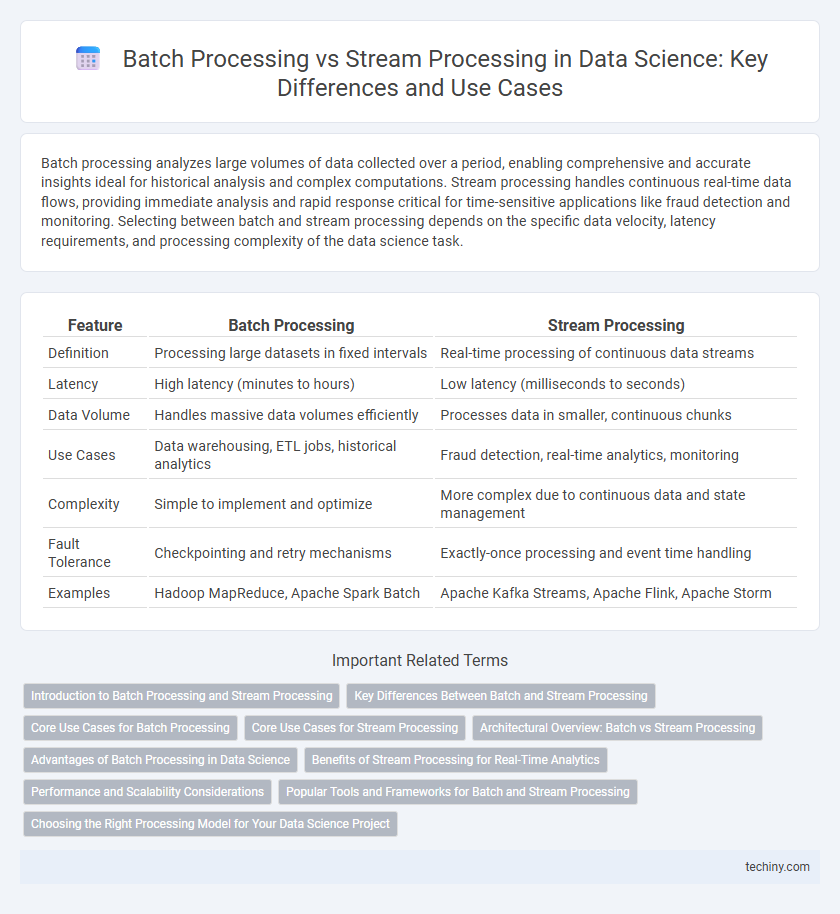

Batch processing analyzes large volumes of data collected over a period, enabling comprehensive and accurate insights ideal for historical analysis and complex computations. Stream processing handles continuous real-time data flows, providing immediate analysis and rapid response critical for time-sensitive applications like fraud detection and monitoring. Selecting between batch and stream processing depends on the specific data velocity, latency requirements, and processing complexity of the data science task.

Table of Comparison

| Feature | Batch Processing | Stream Processing |

|---|---|---|

| Definition | Processing large datasets in fixed intervals | Real-time processing of continuous data streams |

| Latency | High latency (minutes to hours) | Low latency (milliseconds to seconds) |

| Data Volume | Handles massive data volumes efficiently | Processes data in smaller, continuous chunks |

| Use Cases | Data warehousing, ETL jobs, historical analytics | Fraud detection, real-time analytics, monitoring |

| Complexity | Simple to implement and optimize | More complex due to continuous data and state management |

| Fault Tolerance | Checkpointing and retry mechanisms | Exactly-once processing and event time handling |

| Examples | Hadoop MapReduce, Apache Spark Batch | Apache Kafka Streams, Apache Flink, Apache Storm |

Introduction to Batch Processing and Stream Processing

Batch processing handles large volumes of data collected over a period, executing jobs sequentially to analyze and transform datasets efficiently. Stream processing ingests and analyzes data continuously in real-time, enabling immediate insights and rapid response to dynamic data flows. Both techniques are essential in data science for managing different workloads and optimizing data pipeline performance.

Key Differences Between Batch and Stream Processing

Batch processing handles large volumes of accumulated data at scheduled intervals, optimizing throughput and resource utilization for complex analytics. Stream processing analyzes data in real time, enabling immediate insights and rapid response to continuous data streams such as sensor outputs or social media feeds. Key differences include latency, with batch processing exhibiting higher latency due to data aggregation, whereas stream processing prioritizes low latency for time-sensitive applications.

Core Use Cases for Batch Processing

Batch processing excels in scenarios requiring large-scale data analysis, such as financial reporting, data warehousing, and complex machine learning model training. It enables efficient management of massive historical datasets by processing data in scheduled intervals, ensuring high throughput and system resource optimization. Core use cases include end-of-day transaction processing, ETL jobs for data integration, and offline analytics in business intelligence applications.

Core Use Cases for Stream Processing

Stream processing excels in real-time analytics, enabling immediate insights from continuous data flows such as social media feeds, sensor data, and financial transactions. It is crucial for fraud detection, where milliseconds can prevent losses by identifying suspicious patterns instantly. Operational monitoring and dynamic pricing are additional core use cases, leveraging real-time data to optimize business decisions and improve customer experiences.

Architectural Overview: Batch vs Stream Processing

Batch processing architecture involves collecting and storing large volumes of data before processing it in scheduled intervals, utilizing systems like Hadoop MapReduce and Apache Spark for high-throughput analytics. Stream processing architecture processes data in real-time as it arrives, relying on frameworks such as Apache Kafka, Apache Flink, and Apache Storm to enable low-latency decision-making and continuous computation. The architectural distinction centers on data handling patterns, with batch systems optimizing throughput and fault tolerance, while stream systems prioritize immediacy and event-driven responsiveness.

Advantages of Batch Processing in Data Science

Batch processing in data science allows for efficient handling of large volumes of data by processing it in scheduled groups, which reduces system overhead and optimizes resource utilization. It enables complex analytical computations and machine learning model training on entire datasets, leading to more accurate and comprehensive insights. Batch processing also supports data consistency and fault tolerance, making it suitable for environments requiring reliable and repeatable results.

Benefits of Stream Processing for Real-Time Analytics

Stream processing enables real-time analytics by continuously ingesting and analyzing data as it arrives, providing immediate insights that batch processing cannot deliver. This approach supports low-latency decision-making critical for applications like fraud detection, dynamic pricing, and personalized recommendations. Leveraging tools like Apache Kafka and Apache Flink, stream processing handles high-velocity data efficiently, ensuring scalability and robustness in data-driven environments.

Performance and Scalability Considerations

Batch processing excels in handling large volumes of data with high throughput by processing data in chunks, optimizing resource utilization for complex computations. Stream processing offers low-latency responses by processing data continuously, enabling real-time analytics and faster decision-making. Scalability in batch systems relies on parallel processing frameworks like Apache Hadoop, while stream processing scales horizontally using distributed platforms such as Apache Kafka and Apache Flink.

Popular Tools and Frameworks for Batch and Stream Processing

Apache Hadoop and Apache Spark dominate batch processing with their scalable, distributed computing capabilities, enabling large-scale data analytics. Stream processing relies heavily on Apache Kafka, Apache Flink, and Apache Storm, which provide low-latency, real-time data processing ecosystems. Databricks and Google Dataflow integrate batch and stream processing, offering unified platforms that enhance data engineering workflows.

Choosing the Right Processing Model for Your Data Science Project

Batch processing excels in handling large volumes of historical data by processing it in scheduled intervals, making it ideal for complex analytics and report generation. Stream processing enables real-time data ingestion and analysis, providing immediate insights crucial for applications such as fraud detection and live monitoring. Selecting the appropriate processing model depends on data velocity, latency requirements, and the specific analytical goals of the data science project.

batch processing vs stream processing Infographic

techiny.com

techiny.com