Big O notation describes the upper bound of an algorithm's runtime, indicating the worst-case scenario for time complexity, while Big Theta notation provides a tight bound, representing both the upper and lower limits of an algorithm's growth rate. Understanding the distinction between Big O and Big Theta is crucial for accurately analyzing the efficiency and performance of data science algorithms under varying inputs. This knowledge helps data scientists optimize code by selecting algorithms that offer predictable and efficient execution across different datasets.

Table of Comparison

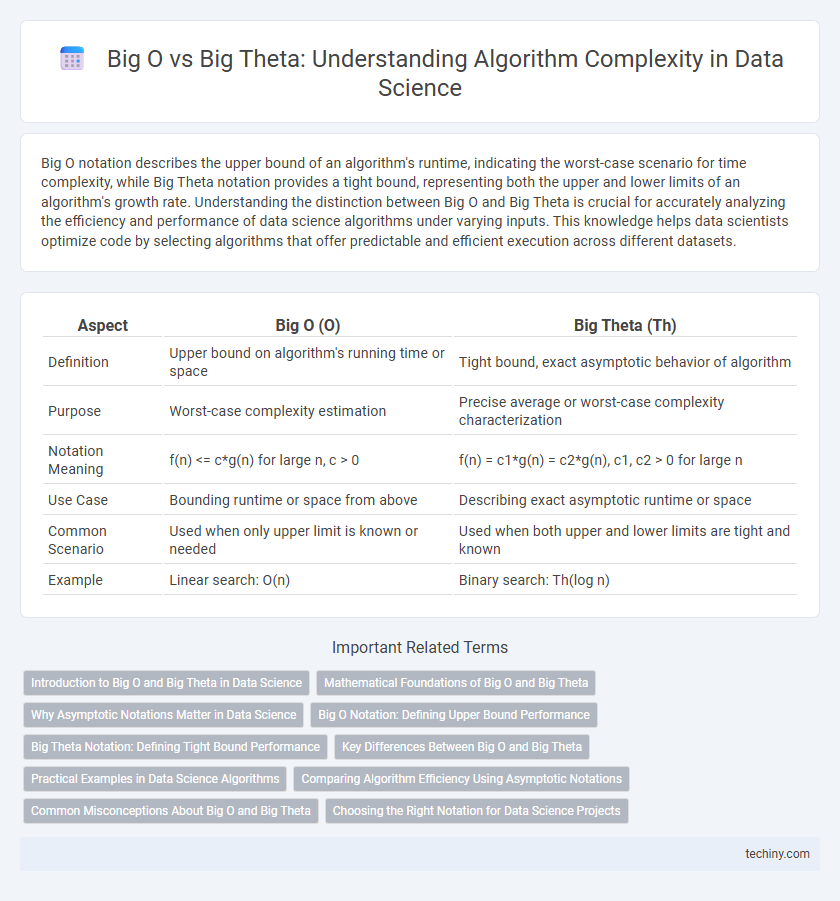

| Aspect | Big O (O) | Big Theta (Th) |

|---|---|---|

| Definition | Upper bound on algorithm's running time or space | Tight bound, exact asymptotic behavior of algorithm |

| Purpose | Worst-case complexity estimation | Precise average or worst-case complexity characterization |

| Notation Meaning | f(n) <= c*g(n) for large n, c > 0 | f(n) = c1*g(n) = c2*g(n), c1, c2 > 0 for large n |

| Use Case | Bounding runtime or space from above | Describing exact asymptotic runtime or space |

| Common Scenario | Used when only upper limit is known or needed | Used when both upper and lower limits are tight and known |

| Example | Linear search: O(n) | Binary search: Th(log n) |

Introduction to Big O and Big Theta in Data Science

Big O notation describes the upper bound of an algorithm's running time, providing a worst-case scenario for data processing efficiency in data science tasks. Big Theta notation represents a tight bound, indicating both the upper and lower limits of an algorithm's performance, enabling accurate complexity analysis. Understanding these notations helps data scientists optimize algorithms for large datasets by evaluating efficiency and resource requirements.

Mathematical Foundations of Big O and Big Theta

Big O notation describes the upper bound of an algorithm's growth rate, ensuring the function does not exceed a certain complexity as input size approaches infinity. Big Theta notation provides a tight bound, capturing both the upper and lower limits, indicating the exact asymptotic behavior of the function. Mathematically, Big O is defined as f(n) = O(g(n)) if there exist constants c > 0 and n0 such that f(n) <= c*g(n) for all n >= n0, while Big Theta is defined as f(n) = Th(g(n)) if there exist constants c1, c2 > 0 and n0 such that c1*g(n) <= f(n) <= c2*g(n) for all n >= n0.

Why Asymptotic Notations Matter in Data Science

Asymptotic notations like Big O and Big Theta are crucial in data science for evaluating algorithm efficiency and scalability, ensuring models handle large datasets effectively. Big O provides an upper bound on runtime complexity, helping identify worst-case scenarios, while Big Theta offers a tight bound, reflecting both upper and lower limits for performance. Understanding these notations helps data scientists optimize algorithms, reduce computational costs, and improve overall system responsiveness in data-intensive applications.

Big O Notation: Defining Upper Bound Performance

Big O notation defines the upper bound of an algorithm's performance by describing the worst-case scenario of time or space complexity, ensuring that the algorithm's growth rate does not exceed a specified limit. It provides a framework for evaluating scalability by abstracting away constants and lower-order terms, focusing solely on the dominant factors impacting runtime as input size grows. Understanding Big O enables data scientists to optimize algorithms for efficiency, particularly in handling large datasets where performance guarantees are critical.

Big Theta Notation: Defining Tight Bound Performance

Big Theta (Th) notation provides a tight bound on an algorithm's running time by describing both its upper and lower limits, ensuring precise performance characterization. It clearly defines the exact asymptotic behavior of an algorithm, making it essential for understanding average-case efficiency in data science computations. Unlike Big O notation, which only indicates an upper bound, Big Theta captures the true growth rate, facilitating more accurate algorithm comparisons.

Key Differences Between Big O and Big Theta

Big O notation describes the upper bound of an algorithm's time complexity, providing the worst-case scenario for performance. Big Theta notation represents a tight bound, indicating both the upper and lower limits, characterizing the exact asymptotic behavior. Key differences include Big O's focus on the maximum growth rate, while Big Theta precisely defines the algorithm's growth rate within constant factors.

Practical Examples in Data Science Algorithms

Big O notation describes the worst-case time complexity of data science algorithms, such as sorting large datasets using QuickSort with O(n log n) complexity to ensure scalability. Big Theta notation provides a tighter bound by defining both upper and lower limits, exemplified by MergeSort's Th(n log n) runtime, indicating consistent performance regardless of input distribution. Practical applications in clustering algorithms like K-means demonstrate average-case Big Theta behavior, guiding efficient resource allocation and performance optimization in real-world data science projects.

Comparing Algorithm Efficiency Using Asymptotic Notations

Big O notation describes an upper bound on an algorithm's growth rate, indicating the worst-case performance, while Big Theta provides a tight bound that characterizes both upper and lower limits, representing the algorithm's exact asymptotic behavior. Comparing algorithm efficiency using Big O and Big Theta allows data scientists to evaluate performance guarantees under different input sizes, ensuring informed decisions for scalability and optimization. Understanding these asymptotic notations is crucial for selecting algorithms with predictable time and space complexity in large-scale data processing tasks.

Common Misconceptions About Big O and Big Theta

Big O notation describes the upper bound of an algorithm's growth rate, while Big Theta provides a tight bound, encompassing both upper and lower bounds; a common misconception is that Big O always represents precise runtime, which can lead to misunderstanding algorithm efficiency. Many mistakenly use Big O to indicate average-case complexity, when it strictly denotes worst-case scenarios, unlike Big Theta that captures exact asymptotic behavior. Misinterpreting these differences often results in flawed performance analysis and suboptimal algorithm selection in data science projects.

Choosing the Right Notation for Data Science Projects

Big O notation describes the upper bound of an algorithm's runtime, essential for worst-case performance analysis in data science projects. Big Theta provides a tight bound, representing both upper and lower limits, offering a more precise understanding when average-case complexity is crucial. Selecting the appropriate notation depends on whether the focus is on worst-case guarantees (Big O) or exact growth rates (Big Theta), impacting algorithm optimization and resource allocation in data science workflows.

Big O vs Big Theta Infographic

techiny.com

techiny.com