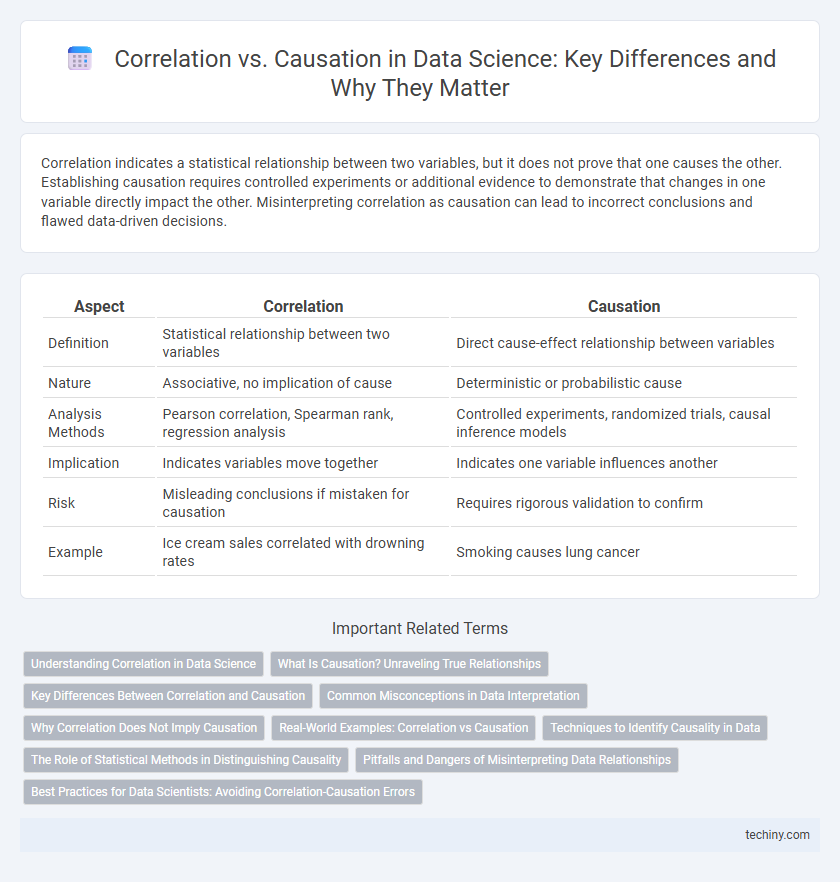

Correlation indicates a statistical relationship between two variables, but it does not prove that one causes the other. Establishing causation requires controlled experiments or additional evidence to demonstrate that changes in one variable directly impact the other. Misinterpreting correlation as causation can lead to incorrect conclusions and flawed data-driven decisions.

Table of Comparison

| Aspect | Correlation | Causation |

|---|---|---|

| Definition | Statistical relationship between two variables | Direct cause-effect relationship between variables |

| Nature | Associative, no implication of cause | Deterministic or probabilistic cause |

| Analysis Methods | Pearson correlation, Spearman rank, regression analysis | Controlled experiments, randomized trials, causal inference models |

| Implication | Indicates variables move together | Indicates one variable influences another |

| Risk | Misleading conclusions if mistaken for causation | Requires rigorous validation to confirm |

| Example | Ice cream sales correlated with drowning rates | Smoking causes lung cancer |

Understanding Correlation in Data Science

Correlation in data science measures the strength and direction of a relationship between two variables, providing insights into patterns and associations within large datasets. It quantifies how changes in one variable correspond to changes in another but does not imply that one variable causes the other to change. Understanding correlation is essential for exploratory data analysis, feature selection, and building predictive models, while avoiding the common pitfall of inferring causation from mere correlation.

What Is Causation? Unraveling True Relationships

Causation in data science refers to a relationship where one variable directly affects another, establishing a cause-and-effect connection that goes beyond mere correlation. Identifying causation requires rigorous methods like controlled experiments, randomized trials, or causal inference techniques to avoid misleading conclusions drawn from coincidental associations. Understanding true causation enables data scientists to make reliable predictions, design effective interventions, and drive decision-making with deeper insights into complex systems.

Key Differences Between Correlation and Causation

Correlation measures the statistical relationship between two variables, indicating how they move together without implying one causes the other. Causation establishes a direct cause-and-effect link where changes in one variable produce changes in another, validated through experimental or longitudinal studies. Understanding this distinction is crucial in data science to avoid misleading conclusions and ensure accurate predictive modeling and decision-making.

Common Misconceptions in Data Interpretation

Misinterpreting correlation as causation leads to flawed conclusions in data science, as correlated variables do not inherently imply one causes the other. Common misconceptions include assuming that a strong correlation confirms a direct cause-effect relationship without considering confounding factors or underlying mechanisms. Proper data interpretation requires rigorous analysis, including controlled experiments or causal inference techniques, to distinguish genuine causality from mere association.

Why Correlation Does Not Imply Causation

Correlation identifies a statistical relationship between two variables but does not establish a direct cause-and-effect link. Confounding variables and hidden biases can create misleading associations that do not represent true causal mechanisms. Understanding the distinction is critical in data science to avoid erroneous conclusions and to design experiments that validate causality.

Real-World Examples: Correlation vs Causation

Analyzing the correlation between ice cream sales and drowning incidents reveals no causal relationship; both increase during summer months due to higher temperatures, demonstrating a classic example of correlation without causation. In healthcare, a study might show a strong correlation between exercise frequency and lower heart disease risk, but controlled experiments are essential to establish causation. Data scientists use these real-world examples to emphasize that identifying correlation is not sufficient for inferring causality, highlighting the need for rigorous experimental design and statistical testing.

Techniques to Identify Causality in Data

Techniques to identify causality in data include randomized controlled trials (RCTs), which isolate variables to establish direct cause-and-effect relationships, and Granger causality tests that analyze time series data for predictive causation. Structural equation modeling (SEM) and instrumental variable analysis help uncover latent causal constructs and address confounding factors, enhancing the robustness of causal inference. Employing these methods is essential in data science to distinguish true causality from mere correlation, ensuring accurate decision-making and model reliability.

The Role of Statistical Methods in Distinguishing Causality

Statistical methods such as randomized controlled trials, instrumental variable analysis, and Granger causality tests play a crucial role in distinguishing causation from mere correlation in data science. These techniques help isolate the true causal relationships by controlling for confounding variables and eliminating spurious associations found in observational data. Proper application of these methods ensures more reliable interpretation of data patterns, guiding accurate decision-making and predictive modeling.

Pitfalls and Dangers of Misinterpreting Data Relationships

Misinterpreting correlation as causation in data science can lead to flawed conclusions and misguided decisions, as correlated variables may have no direct causal link. Ignoring confounding factors and spurious associations increases the risk of biased insights, undermining the reliability of predictive models. Accurate interpretation requires rigorous statistical testing and domain expertise to distinguish genuine causal relationships from mere correlations.

Best Practices for Data Scientists: Avoiding Correlation-Causation Errors

Data scientists must rigorously distinguish correlation from causation by applying robust experimental designs such as randomized controlled trials and employing causal inference methods like instrumental variables and difference-in-differences analysis. Ensuring data quality and leveraging domain expertise are critical to accurately interpret relationships and avoid spurious conclusions. Transparent reporting of analytical assumptions and validation through replication studies further strengthens the reliability of causal claims in data science projects.

Correlation vs Causation Infographic

techiny.com

techiny.com