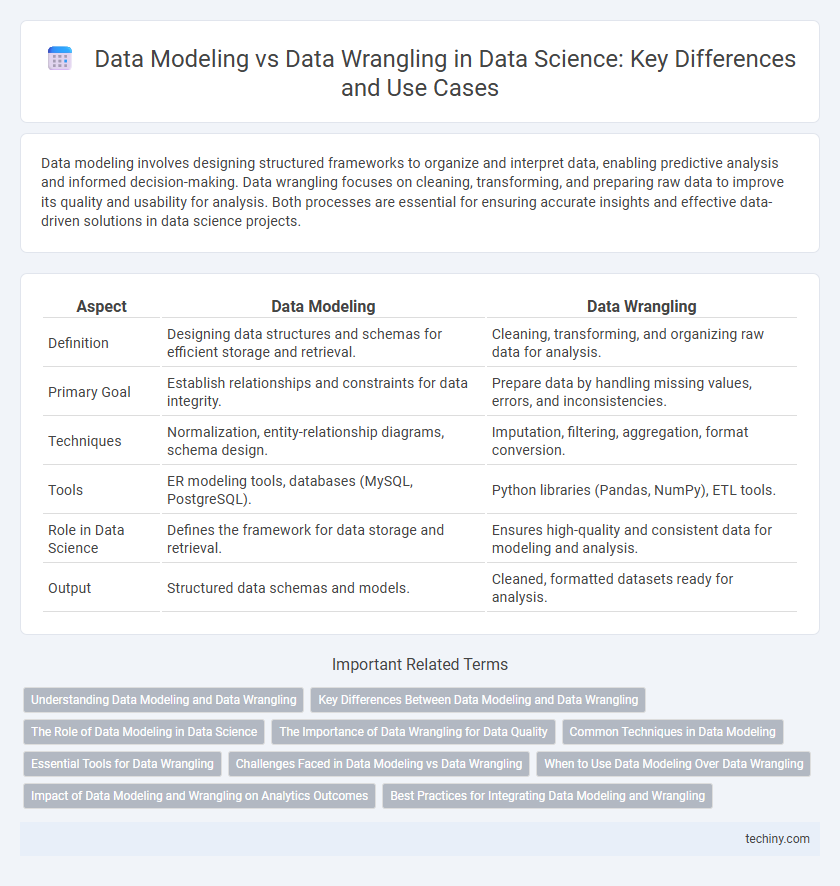

Data modeling involves designing structured frameworks to organize and interpret data, enabling predictive analysis and informed decision-making. Data wrangling focuses on cleaning, transforming, and preparing raw data to improve its quality and usability for analysis. Both processes are essential for ensuring accurate insights and effective data-driven solutions in data science projects.

Table of Comparison

| Aspect | Data Modeling | Data Wrangling |

|---|---|---|

| Definition | Designing data structures and schemas for efficient storage and retrieval. | Cleaning, transforming, and organizing raw data for analysis. |

| Primary Goal | Establish relationships and constraints for data integrity. | Prepare data by handling missing values, errors, and inconsistencies. |

| Techniques | Normalization, entity-relationship diagrams, schema design. | Imputation, filtering, aggregation, format conversion. |

| Tools | ER modeling tools, databases (MySQL, PostgreSQL). | Python libraries (Pandas, NumPy), ETL tools. |

| Role in Data Science | Defines the framework for data storage and retrieval. | Ensures high-quality and consistent data for modeling and analysis. |

| Output | Structured data schemas and models. | Cleaned, formatted datasets ready for analysis. |

Understanding Data Modeling and Data Wrangling

Data modeling defines the structure and relationships of data to create a coherent framework for analysis, using techniques such as entity-relationship diagrams and normalization. Data wrangling involves cleaning, transforming, and organizing raw data into a usable format, addressing issues like missing values, inconsistencies, and formatting errors. Mastery of both processes enhances data quality and analytical accuracy in data science projects.

Key Differences Between Data Modeling and Data Wrangling

Data modeling involves structuring and organizing data into schemas and relationships to enable efficient storage, retrieval, and analysis, while data wrangling is the process of cleaning, transforming, and preparing raw data for analysis. Data wrangling emphasizes handling data inconsistencies, missing values, and format conversions, whereas data modeling focuses on defining data types, constraints, and relationships in databases or data warehouses. The key difference lies in data wrangling being a preparatory step to ensure data quality, whereas data modeling provides the blueprint for how data is logically stored and accessed.

The Role of Data Modeling in Data Science

Data modeling plays a crucial role in data science by structuring raw data into meaningful formats that support analytical processes and predictive algorithms. While data wrangling prepares and cleanses data to ensure quality and consistency, data modeling defines the relationships and rules that enable effective database design and insightful interpretation. Effective data modeling streamlines data integration, enhances query performance, and ultimately drives better decision-making through organized and accessible datasets.

The Importance of Data Wrangling for Data Quality

Data wrangling plays a critical role in ensuring data quality by cleaning, transforming, and organizing raw data into a usable format for analysis and modeling. Effective data wrangling addresses inconsistencies, missing values, and errors, which directly impacts the accuracy and reliability of data models. High-quality data obtained through rigorous wrangling processes enhances predictive performance and supports more informed decision-making in data science projects.

Common Techniques in Data Modeling

Common techniques in data modeling include entity-relationship diagrams (ERDs), normalization, and dimensional modeling, which help structure and organize data for efficient analysis. Utilizing tools like UML and schema design, data modelers define relationships, constraints, and hierarchies to ensure data integrity and optimize query performance. These techniques enable the creation of logical, physical, and conceptual models that serve as blueprints for database construction and advanced analytics.

Essential Tools for Data Wrangling

Essential tools for data wrangling include Python libraries like Pandas and NumPy, which enable efficient manipulation and cleaning of structured data. OpenRefine offers a user-friendly interface for transforming messy data into usable formats, supporting tasks such as data deduplication and normalization. Cloud-based platforms like Trifacta provide scalable solutions for data wrangling workflows, integrating seamlessly with big data ecosystems to enhance data quality before modeling.

Challenges Faced in Data Modeling vs Data Wrangling

Data modeling faces challenges such as accurately representing complex real-world relationships, selecting appropriate schemas, and ensuring data integrity and consistency across diverse datasets. Data wrangling struggles with handling messy, inconsistent, and incomplete data, requiring extensive preprocessing and transformation to prepare datasets for analysis. Both processes demand domain expertise and sophisticated tools to overcome issues like scalability, data quality, and integration of heterogeneous data sources.

When to Use Data Modeling Over Data Wrangling

Data modeling is essential when defining the structure, relationships, and constraints of data to support analytics, reporting, or machine learning applications. Use data modeling over data wrangling when establishing a scalable framework for organizing large datasets, ensuring data integrity, and enabling efficient query performance. Data wrangling is more appropriate for cleaning and transforming raw data to prepare it for analysis, while data modeling focuses on creating a coherent schema for long-term data management.

Impact of Data Modeling and Wrangling on Analytics Outcomes

Data modeling structures raw data into organized formats, enabling accurate and efficient analytical queries, which directly enhances the reliability of insights and decision-making. Data wrangling cleanses and transforms unstructured data, improving the quality and consistency of datasets used in analysis, thereby reducing errors and biases in results. The combined impact of robust data modeling and effective data wrangling is critical for producing precise, actionable analytics outcomes that drive business value.

Best Practices for Integrating Data Modeling and Wrangling

Effective integration of data modeling and data wrangling hinges on establishing clear data governance frameworks that ensure data quality and consistency across all stages. Employing iterative workflows with automated validation checks enhances accuracy and scalability while minimizing errors during transformation and schema design. Leveraging tools like Apache Spark and Python's Pandas streamlines data preprocessing and dynamic modeling adjustments, promoting seamless collaboration between data engineers and scientists.

data modeling vs data wrangling Infographic

techiny.com

techiny.com