Ensemble learning combines multiple individual models to improve prediction accuracy and reduce overfitting by leveraging diverse strengths. Individual models may perform well on specific tasks but often lack the robustness that ensembles provide through collective decision-making. This approach enhances overall model generalization and reliability in data science applications.

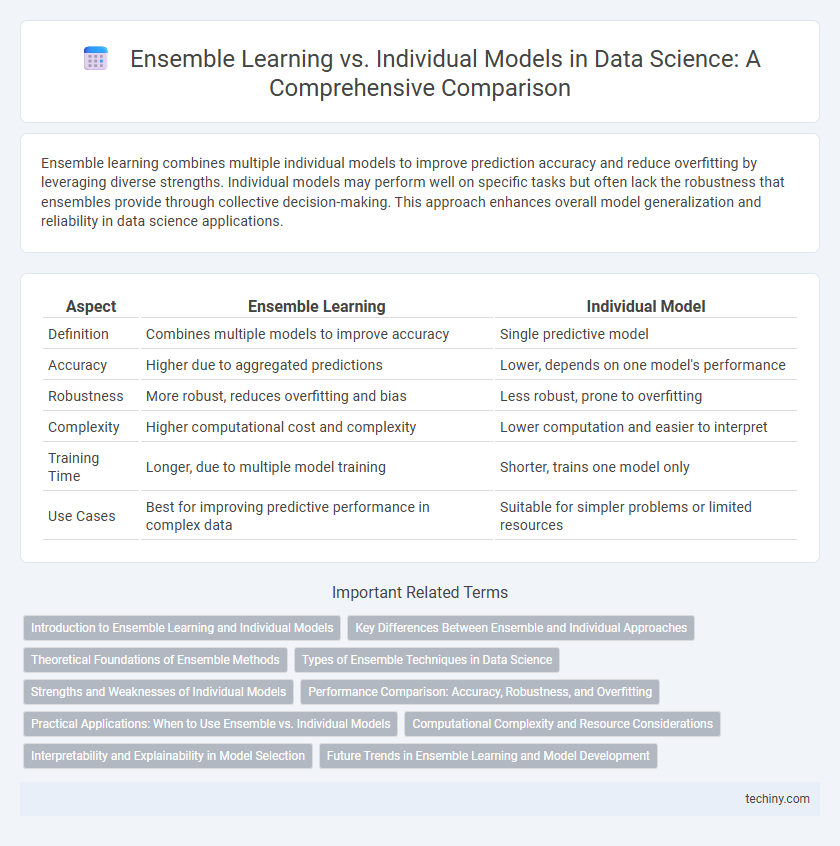

Table of Comparison

| Aspect | Ensemble Learning | Individual Model |

|---|---|---|

| Definition | Combines multiple models to improve accuracy | Single predictive model |

| Accuracy | Higher due to aggregated predictions | Lower, depends on one model's performance |

| Robustness | More robust, reduces overfitting and bias | Less robust, prone to overfitting |

| Complexity | Higher computational cost and complexity | Lower computation and easier to interpret |

| Training Time | Longer, due to multiple model training | Shorter, trains one model only |

| Use Cases | Best for improving predictive performance in complex data | Suitable for simpler problems or limited resources |

Introduction to Ensemble Learning and Individual Models

Ensemble learning combines multiple individual models to improve predictive performance and reduce overfitting compared to single models. Techniques such as bagging, boosting, and stacking leverage the strengths of diverse base learners, enhancing accuracy and robustness in data science tasks. Individual models operate independently, often prone to higher variance or bias, while ensembles aggregate their predictions for superior generalization.

Key Differences Between Ensemble and Individual Approaches

Ensemble learning combines multiple individual models to enhance predictive accuracy and reduce overfitting, leveraging diversity among base learners such as decision trees or neural networks. Individual models rely on a single algorithm, which may be faster but often lacks the robustness and error reduction provided by ensemble methods like bagging, boosting, or stacking. Performance metrics such as accuracy, precision, and recall consistently show ensembles outperform single models in complex datasets by aggregating varied model predictions.

Theoretical Foundations of Ensemble Methods

Ensemble learning combines multiple individual models to improve prediction accuracy by reducing variance, bias, or improving predictions through aggregation techniques such as bagging, boosting, and stacking. Theoretical foundations rely on the bias-variance tradeoff, error decomposition, and diversity among base learners to achieve better generalization compared to single models. Ensemble methods leverage statistical principles and computational learning theory to enhance robustness and stability in complex data science problems.

Types of Ensemble Techniques in Data Science

Ensemble learning in data science combines multiple models to improve predictive performance and reduce overfitting compared to individual models. Key types of ensemble techniques include bagging, which builds multiple versions of a predictor and aggregates their results, boosting that sequentially adjusts weights to focus on difficult instances, and stacking that blends various models using a meta-learner. Each technique leverages diverse model strengths to enhance accuracy and robustness in complex data environments.

Strengths and Weaknesses of Individual Models

Individual models in data science, such as decision trees or logistic regression, offer simplicity, faster training times, and ease of interpretation. However, they often suffer from high variance or bias, leading to lower predictive accuracy and potential overfitting or underfitting on complex datasets. Their limited generalization capability reduces robustness compared to ensemble learning methods that combine multiple models to improve performance.

Performance Comparison: Accuracy, Robustness, and Overfitting

Ensemble learning methods consistently outperform individual models in accuracy by combining multiple predictions to reduce bias and variance. They demonstrate greater robustness against noisy data and outliers, ensuring stable performance across diverse datasets. While individual models are prone to overfitting, ensemble techniques like bagging and boosting mitigate this risk through model aggregation and iterative refinement.

Practical Applications: When to Use Ensemble vs. Individual Models

Ensemble learning improves prediction accuracy and robustness by combining multiple models, making it ideal for complex datasets with high variability or noise, such as fraud detection and medical diagnosis. Individual models are preferred for simpler tasks or when interpretability and faster computation are critical, like in real-time recommendation systems or basic classification problems. Choosing between ensemble and individual models depends on trade-offs between accuracy, interpretability, computational resources, and application-specific requirements.

Computational Complexity and Resource Considerations

Ensemble learning methods often exhibit higher computational complexity compared to individual models due to the need for training multiple base learners, which increases processing time and memory usage. Resource considerations are critical, as ensembles demand greater storage capacity and more intensive hardware requirements, potentially limiting their deployment in resource-constrained environments. Choosing between ensemble learning and individual models involves balancing improved predictive performance against increased computational and resource overhead.

Interpretability and Explainability in Model Selection

Ensemble learning techniques, such as random forests and gradient boosting, often outperform individual models in predictive accuracy but can compromise interpretability due to their complexity and multiple combined classifiers. Individual models like logistic regression or decision trees provide clearer explainability through straightforward feature coefficients or decision paths, facilitating model transparency in data-driven decisions. Balancing accuracy and interpretability is crucial in model selection, especially in regulated domains where understanding model rationale impacts trust and compliance.

Future Trends in Ensemble Learning and Model Development

Future trends in ensemble learning emphasize the integration of adaptive algorithms that dynamically select and weight individual models for improved accuracy and robustness. Advancements in deep learning and automated machine learning (AutoML) are driving the evolution of hybrid ensemble frameworks that optimize predictive performance across diverse datasets. Emerging research highlights the potential of meta-learning to enhance ensemble strategies, enabling models to generalize better and reduce overfitting in complex data environments.

ensemble learning vs individual model Infographic

techiny.com

techiny.com