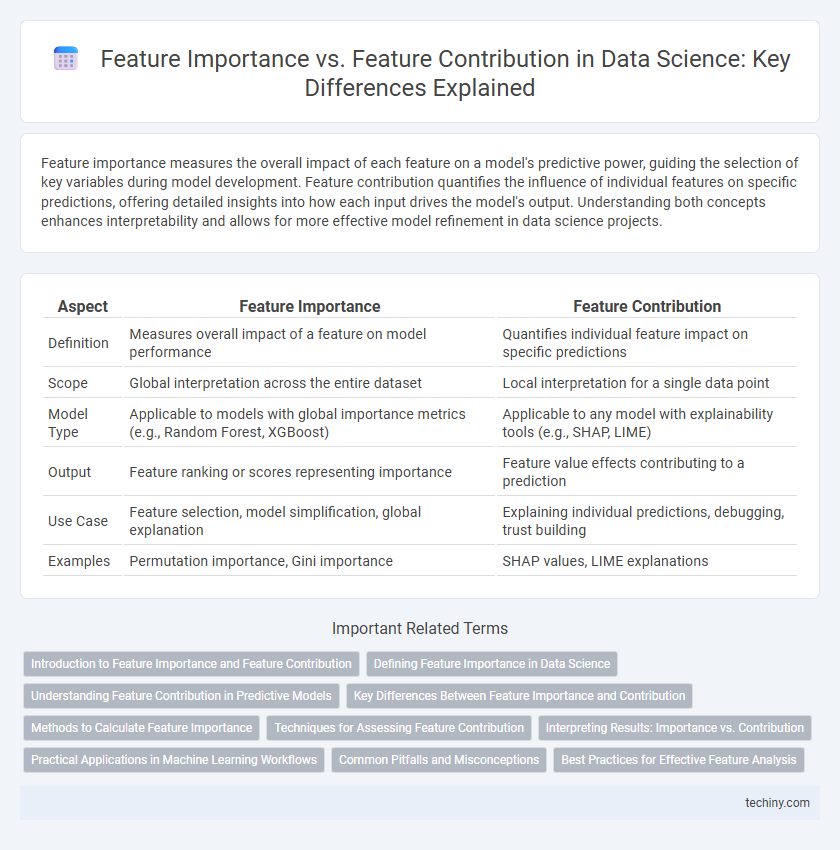

Feature importance measures the overall impact of each feature on a model's predictive power, guiding the selection of key variables during model development. Feature contribution quantifies the influence of individual features on specific predictions, offering detailed insights into how each input drives the model's output. Understanding both concepts enhances interpretability and allows for more effective model refinement in data science projects.

Table of Comparison

| Aspect | Feature Importance | Feature Contribution |

|---|---|---|

| Definition | Measures overall impact of a feature on model performance | Quantifies individual feature impact on specific predictions |

| Scope | Global interpretation across the entire dataset | Local interpretation for a single data point |

| Model Type | Applicable to models with global importance metrics (e.g., Random Forest, XGBoost) | Applicable to any model with explainability tools (e.g., SHAP, LIME) |

| Output | Feature ranking or scores representing importance | Feature value effects contributing to a prediction |

| Use Case | Feature selection, model simplification, global explanation | Explaining individual predictions, debugging, trust building |

| Examples | Permutation importance, Gini importance | SHAP values, LIME explanations |

Introduction to Feature Importance and Feature Contribution

Feature importance quantifies the impact of each input variable on a model's predictive accuracy, often derived from algorithms like random forests or gradient boosting. Feature contribution breaks down individual predictions by showing how much each feature influences the output for a specific data point, commonly used in interpretability methods like SHAP or LIME. Understanding both concepts enhances model transparency and enables targeted feature engineering for improved data science outcomes.

Defining Feature Importance in Data Science

Feature importance in data science quantifies the impact of individual variables on a model's predictive power, guiding feature selection and model interpretation. Techniques such as permutation importance, SHAP values, and Gini importance rank features by their influence on the outcome. Understanding feature importance enhances model transparency and helps identify key drivers behind predictions for both supervised and unsupervised learning tasks.

Understanding Feature Contribution in Predictive Models

Feature contribution quantifies the specific impact of each input variable on an individual prediction, revealing how changes in feature values influence model outputs. Unlike feature importance, which measures the overall relevance of features across the entire dataset, feature contribution provides granular insights critical for interpreting model decisions at a local level. Techniques such as SHAP values and LIME enable detailed assessment of feature contributions, enhancing transparency and trust in predictive analytics.

Key Differences Between Feature Importance and Contribution

Feature importance quantifies the overall impact of each feature on a model's predictive accuracy, providing a global view of which variables drive predictions across the entire dataset. Feature contribution, on the other hand, explains the influence of features on individual predictions, offering local interpretability by attributing specific values to each feature's effect on a particular instance. Understanding these distinctions is crucial for interpreting model behavior, improving transparency in data science workflows, and aligning model explanations with business objectives.

Methods to Calculate Feature Importance

Methods to calculate feature importance in data science include permutation importance, SHAP (SHapley Additive exPlanations), and tree-based model feature importance like Gini importance or gain from Random Forests and XGBoost. Permutation importance measures the increase in model error after shuffling a feature's values, indicating its impact on prediction accuracy. SHAP values provide consistent and locally accurate feature contributions derived from cooperative game theory, offering detailed insight into how each feature influences individual predictions.

Techniques for Assessing Feature Contribution

Techniques for assessing feature contribution in data science include SHAP (SHapley Additive exPlanations) values, which quantify individual feature impacts on model predictions with game-theoretic foundations, and permutation importance, measuring prediction accuracy changes after feature value shuffling. Partial dependence plots (PDP) visualize the average effect of a feature on the predicted outcome by marginalizing over other features, while LIME (Local Interpretable Model-agnostic Explanations) approximates local model behavior to explain feature contributions for individual predictions. These methods enable granular insights beyond global feature importance scores, enhancing transparency and interpretability of complex models.

Interpreting Results: Importance vs. Contribution

Feature importance quantifies the overall impact of each variable on the predictive model's performance, often measured by metrics such as information gain or permutation importance. Feature contribution, in contrast, explains an individual prediction by attributing a specific portion of the output to each feature, typically using methods like SHAP or LIME. Understanding the distinction enhances interpretability by identifying which features drive model accuracy globally versus those influencing single-instance decisions.

Practical Applications in Machine Learning Workflows

Feature importance quantifies the overall impact of a feature on a model's predictive performance, guiding feature selection and model interpretation in supervised learning tasks. Feature contribution breaks down the influence of individual features on specific predictions, enabling granular analysis for explaining model decisions and debugging. These distinctions improve workflow efficiency by enhancing model transparency, optimizing feature engineering, and facilitating compliance with regulatory requirements in machine learning pipelines.

Common Pitfalls and Misconceptions

Feature importance often gets confused with feature contribution, leading to misinterpretations in model explanations; importance scores indicate the overall impact of a feature on model performance, while contribution measures the effect on individual predictions. A common pitfall is assuming that high feature importance always translates to positive influence on every prediction, ignoring context-specific variability captured by contribution values. Misconceptions arise when stakeholders treat importance metrics as causal, overlooking that they only reflect statistical associations within the training data.

Best Practices for Effective Feature Analysis

Feature importance quantifies the relative impact of each variable in predictive modeling, guiding feature selection and model interpretation. Feature contribution provides instance-level insights, revealing how individual features influence specific predictions in techniques like SHAP or LIME. Best practices for effective feature analysis include using multiple methods to validate results, ensuring data quality and preprocessing consistency, and aligning feature interpretation with domain knowledge for actionable insights.

feature importance vs feature contribution Infographic

techiny.com

techiny.com