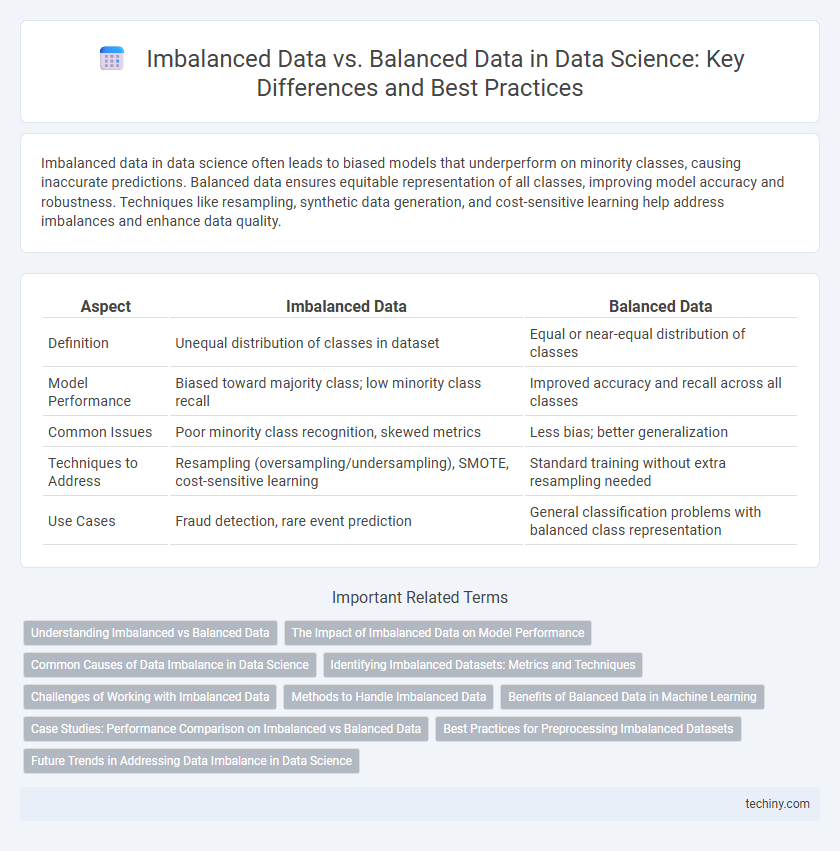

Imbalanced data in data science often leads to biased models that underperform on minority classes, causing inaccurate predictions. Balanced data ensures equitable representation of all classes, improving model accuracy and robustness. Techniques like resampling, synthetic data generation, and cost-sensitive learning help address imbalances and enhance data quality.

Table of Comparison

| Aspect | Imbalanced Data | Balanced Data |

|---|---|---|

| Definition | Unequal distribution of classes in dataset | Equal or near-equal distribution of classes |

| Model Performance | Biased toward majority class; low minority class recall | Improved accuracy and recall across all classes |

| Common Issues | Poor minority class recognition, skewed metrics | Less bias; better generalization |

| Techniques to Address | Resampling (oversampling/undersampling), SMOTE, cost-sensitive learning | Standard training without extra resampling needed |

| Use Cases | Fraud detection, rare event prediction | General classification problems with balanced class representation |

Understanding Imbalanced vs Balanced Data

Imbalanced data in data science refers to datasets where certain classes significantly outnumber others, causing biased model predictions and reduced accuracy. Balanced data contains relatively equal representation across classes, enabling models to learn more generalized patterns and enhance performance metrics such as precision, recall, and F1-score. Understanding the distinction between imbalanced and balanced data is crucial for selecting appropriate techniques like resampling, synthetic data generation (SMOTE), or cost-sensitive learning to mitigate classification bias and improve model robustness.

The Impact of Imbalanced Data on Model Performance

Imbalanced data significantly skews model performance by biasing predictions towards the majority class, leading to poor generalization on minority classes. Metrics like accuracy become misleading, necessitating the use of balanced evaluation criteria such as F1-score, precision-recall curves, and AUC-ROC to accurately assess model effectiveness. Addressing imbalance through resampling techniques, synthetic data generation, or algorithmic adjustments is critical to enhance fairness and predictive accuracy in real-world data science applications.

Common Causes of Data Imbalance in Data Science

Common causes of data imbalance in data science include rare event occurrences, insufficient data collection methods, and class distribution disparities arising from natural or operational biases. Imbalanced data often results from uneven sampling, where minority classes are underrepresented due to difficulty in capturing or labeling essential instances. This imbalance can severely impact machine learning model performance by skewing predictions towards majority classes and neglecting rare but crucial patterns.

Identifying Imbalanced Datasets: Metrics and Techniques

Imbalanced datasets in data science are characterized by a disproportionate distribution of classes, often evaluated using metrics such as the Gini index, entropy, and the area under the ROC curve (AUC-ROC). Techniques like confusion matrices, precision-recall curves, and the F1 score are essential for quantifying imbalance and assessing model performance beyond accuracy. Employing statistical tests and visualization methods, such as histogram plots and box plots, further aids in identifying minority and majority class imbalances for effective data preprocessing.

Challenges of Working with Imbalanced Data

Imbalanced data in data science poses significant challenges such as biased model predictions favoring the majority class, leading to poor performance on minority classes. Evaluation metrics like accuracy become misleading, necessitating the use of precision, recall, F1-score, or AUC for better assessment. Techniques including resampling, synthetic data generation (SMOTE), and cost-sensitive learning are essential to address skewed class distributions effectively.

Methods to Handle Imbalanced Data

Techniques to address imbalanced data include resampling methods such as oversampling the minority class with Synthetic Minority Over-sampling Technique (SMOTE) and undersampling the majority class to achieve class balance. Algorithmic approaches involve cost-sensitive learning where misclassification costs are adjusted to penalize errors on minority classes more heavily. Ensemble methods like Balanced Random Forest and EasyEnsemble combine multiple models and balanced sampling to improve predictive performance on imbalanced datasets.

Benefits of Balanced Data in Machine Learning

Balanced data in machine learning enhances model accuracy by providing equal representation of all classes, reducing bias towards the majority class. This balance improves the model's ability to generalize, leading to better performance on unseen data and more reliable predictions. Consequently, classifiers trained on balanced datasets demonstrate higher precision, recall, and F1 scores across all categories.

Case Studies: Performance Comparison on Imbalanced vs Balanced Data

Case studies reveal that models trained on balanced data consistently outperform those trained on imbalanced datasets, especially in metrics such as recall and F1-score. For example, in fraud detection datasets with a 1:100 ratio, balancing techniques like SMOTE improve detection rates by up to 30%. Conversely, models on imbalanced data often exhibit high accuracy but fail to identify minority class instances, leading to significant real-world performance degradation.

Best Practices for Preprocessing Imbalanced Datasets

Effective preprocessing of imbalanced datasets in data science involves techniques such as oversampling the minority class using methods like SMOTE or ADASYN, and undersampling the majority class to achieve balanced class distributions. Feature scaling, normalization, and careful feature selection are essential to maintain model performance and reduce bias toward the majority class. Employing stratified sampling and cross-validation further ensures that the model generalizes well across classes despite initial data imbalance.

Future Trends in Addressing Data Imbalance in Data Science

Emerging techniques such as adaptive synthetic sampling and generative adversarial networks (GANs) are revolutionizing the handling of imbalanced datasets by creating more representative and diverse synthetic data points. Advances in ensemble learning methods and cost-sensitive algorithms are enhancing model robustness by prioritizing minority class accuracy without compromising overall performance. Integration of automated machine learning (AutoML) pipelines is streamlining imbalance detection and mitigation, enabling scalable and efficient deployment in real-world data science applications.

imbalanced data vs balanced data Infographic

techiny.com

techiny.com